19 Elementary statistical testing with R


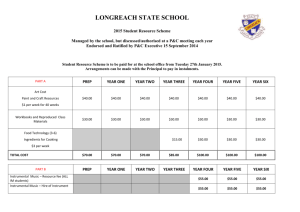

Stefan Th. Gries

1 Introduction

In Brian Joseph's final editorial as the editor of what many see as the flagship journal of the discipline, Language, he commented on recent developments in the field. One of the recent developments he has seen happening is the following:

"Linguistics has always had a numerical and mathematical side ... but the use of quantitative methods, and, relatedly, formalizations and modeling, seems to be ever on the increase; rare is the paper that does not report on some statistical analysis of relevant data or offer some model of the problem at hand." (Joseph 2008: 687)

For several reasons, this appears to be a development for the better. First, it situates the field of linguistics more firmly in the domains of social sciences and cognitive science to which, I think, it belongs. Other fields in the social sciences and in cognitive science (psychology, sociology, computer science, to name but a few) have long recognized the power of quantitative methods for their respective fields of study, and since linguists deal with phenomena just as multifactorial and interrelated as scholars in these disciplines, it was time we also began to use the tools that have been so useful in neighboring disciplines. Second, the quantitative study of phenomena affords us a higher degree of comparability, objectivity, and replicability.
Consider the following slightly edited statement: there is at best a very slight increase in the first four decades, then a small but clear increase in the next three decades, followed by a very large increase in the last two decades (this chapter, cf. below Section 2.4). Such statements are uninformative as long as:

• notions such as 'very slight increase', 'small but clear increase', and 'very large increase' are not quantified: one researcher considers an increase in frequency from 20 observations to 40 'large' (because the frequency increases by 100%), another considers it small (because the frequency increases by only 20), and yet another considers it intermediate, but considers a change from 160 to 320 large (because, while this also involves an addition of 100% to the first number, it involves an addition of 160, not just 20) ...;
• the division of the nine decades into (three) stages is simply assumed but not justified on the basis of operationalizable data;
• the lengths of the three stages (first four decades, then three decades, then two decades) are simply stated but not justified on the basis of data.

Third, there is increasing evidence that much of the cognitive and/or linguistic system is statistical or probabilistic in nature, as opposed to based on clear-cut categorical rules. Many scholars in first language acquisition, cognitive linguistics, sociolinguistics, psycholinguistics etc. embrace, for instance, exemplar-based models of language acquisition, representation, processing, and change. It is certainly no coincidence that this theoretical development coincides with the methodological development pointed out by Joseph, and if one adopts a probabilistic theoretical perspective, then the choice of probabilistic, i.e. statistical, tools is only natural; cf.
Bod, Hay, and Jannedy (2003) for an excellent overview of probabilistic linguistics.

For obvious limitations of space, this chapter cannot provide a full-fledged introduction to quantitative methods (cf. the references below). However, the main body of this chapter explains how to set up different kinds of quantitative data for statistical analysis and exemplifies three tests for the three most common statistical scenarios: the study of frequencies, of averages, and of correlations. Joseph himself is a historical linguist and it is therefore only fitting that this chapter illustrates these guidelines and statistical methods using examples from language variation and change.

2 Elementary statistical tests

In this section, I will first illustrate in what format data need to be stored for virtually all spreadsheet software applications and statistical tools and how to load them into R, the software that I will use throughout this chapter (cf. Section 2.1). Then, Section 2.2 will discuss how to perform simple statistical tests on frequency data, Section 2.3 will outline how the central tendencies of variables (i.e. averages) can be tested, and Section 2.4 will be concerned with tests for correlations. Each section will also exemplify briefly how to create useful graphs within R.

Limitations of space require two comments before we begin. First, I cannot discuss the very foundations of statistical thinking, the notions of (independent and dependent) variables, (null and alternative) hypotheses, Occam's razor, etc., but must assume the reader is already familiar with these (or reads up on them in, say, Gries 2009: chapter 5, 2013: chapters 1, 5). Second, I can only discuss a few very elementary statistical tests here, although many more and more interesting things are of course possible. I hope, however, that this chapter will trigger the reader's interest in additional references and the exploration of the methods presented here.

2.1 Tabular data and how to load them into R

Trivially, before any statistical analysis of data can be undertaken, three steps are necessary. First, the data have to be gathered and organized in a suitable format. Second, they must be saved in a way that allows them to be imported into statistical software. Third, the data have to be loaded into some statistical software. The first subsection of this section deals with these three steps.

As for the first step, it is absolutely essential to store your data in a spreadsheet software application so that they can be easily evaluated both with that software and with statistical software. There are three main rules that need to be considered in the construction of a table of raw data: (1) each data point, i.e. count or measurement of the dependent variable(s), is listed in a row on its own; (2) every variable with respect to which each data point is described is recorded in a column on its own; (3) the first row contains the names of all variables. For example, Hundt and Smith (2009) discuss the frequencies of present perfects and simple pasts in British and American English in the 1960s and the 1990s. One of their overview tables, Table 2 (Appendix 2), is represented here as Table 19.1.

Table 19.1. Table 2 (Appendix 2) from Hundt and Smith (2009), cross-tabulating tenses and corpora

| | LOB (BrE 1961) | FLOB (BrE 1991) | BROWN (AmE 1961) | FROWN (AmE 1992) | Totals |
|---|---|---|---|---|---|
| present perfect | 4196 | 4073 | 3538 | 3499 | 15306 |
| simple past | 35821 | 35276 | 37223 | 36250 | 144570 |
| Totals | 40017 | 39349 | 40761 | 39749 | 159876 |

This is an excellent overview and may look like a good starting point, but this is in fact nearly always already the result of an evaluation rather than the starting point of the raw data table. Notably, Table 19.1 does not:

• have one row for each of the 159,876 data points, but it has only two rows;
• have one column for each of the three variables involved (tense: present perfect vs. simple past; variety: BrE vs. AmE; time: 1960s vs. 1990s), but it has one column for each combination of variety and time.

The way that raw data tables normally have to be arranged for statistical processing requires a reorganization of Table 19.1 into Table 19.2, which then fulfills the three above-mentioned criteria for raw data tables.

Table 19.2. Reorganization of Table 2 (Appendix 2) from Hundt and Smith (2009)

| TENSE | VARIETY | TIME |
|---|---|---|
| present_perfect | BrE | 1960s |
| (4195 more rows like the one immediately above) | | |
| present_perfect | BrE | 1990s |
| (4072 more rows like the one immediately above) | | |
| present_perfect | AmE | 1960s |
| (3537 more rows like the one immediately above) | | |
| present_perfect | AmE | 1990s |
| (3498 more rows like the one immediately above) | | |
| simple_past | BrE | 1960s |
| (35820 more rows like the one immediately above) | | |
| simple_past | BrE | 1990s |
| (35275 more rows like the one immediately above) | | |
| simple_past | AmE | 1960s |
| (37222 more rows like the one immediately above) | | |
| simple_past | AmE | 1990s |
| (36249 more rows like the one immediately above) | | |

Once the data have been organized in this way, the second step before the statistical analysis is to save them so that they can be easily loaded into a statistics application. To that end, you should save the data into a format that makes them maximally readable by a wide variety of programs. The simplest way to do this is to save the data into a tab-separated file, i.e. a raw text file in which different columns are separated from each other with tabs. In LibreOffice Calc, one first chooses File: Save As ..., then chooses 'Text CSV (.csv)'¹ as the file type, and chooses {Tab} as the Field delimiter.

¹ I recommend using only word characters (letters, numbers, and underscores) within such tables. While this is strictly speaking not necessary to guarantee proper data exchange between different programs (since most programs nowadays provide sophisticated import functions or wizards), it is my experience that 'simple works best.'

To perform the third step, i.e. to load the data into statistical software, you must first decide on which software to use. From my point of view, the best statistical package currently available is the open source software environment R (cf. R Development Core Team 2006-2013). The basic software as well as supplementary packages can be downloaded from www.r-project.org. While R does not feature a clickable graphical user interface (GUI) by default, such a GUI can be installed (cf. the appendix) and R is extremely powerful both in terms of the sizes of data sets it can handle and the number of procedures it allows the user to perform; indeed, since R is a programming language, it can do whatever a user is able to program. In addition, R's graphical facilities are unrivaled and since it is an open source project, it is freely available and has extremely fast bugfix-release times. For these and many other reasons, R is used increasingly widely in the scientific community, but also in linguistics in particular, and I will use it here, too.

When R is started, by default it only shows a fairly empty console and expects user input from the keyboard. Nearly all of the time, the input to R consists of what are called functions and arguments. The former are commands that tell R what to do; the latter are specifics for the commands, namely what to apply a function to (e.g. a value, the first row of a table, a complete table, etc.) or how to apply the function to it (e.g. what kind of logarithm to compute, a binary log, a natural log, etc.).² The simplest way to read a table with raw data from a tab-separated file created as above involves the function read.table, which, if the raw data table has been created as outlined above and in note 1, requires only three arguments:

• the argument file, which specifies the path to the file containing the data;
• the argument header, which can be set to T (or TRUE) or F (or FALSE), where T/TRUE means 'the first row contains the variable names', and F/FALSE means the opposite;
• the argument sep, which specifies the character that separates columns from each other and which should therefore be set to a tabstop, "\t".

² The general logic of functions and arguments and different kinds of data structures, while essential to working with spreadsheet software such as LibreOffice Calc as well as programming languages such as R, will not be discussed here in detail; cf. the recommended further readings for more information.

Thus, to import a raw data table from an input file <C:/Temp/example1.txt> and store that table in a so-called data frame (called data.table) in R, you enter the following line of code (where the "<-" tells R to store something in the data structure to the left of the 'arrow' and where "¶" means 'press ENTER'):

    data.table<-read.table(file="C:/Temp/example1.txt", header=TRUE, sep="\t")¶

To check whether the data have been read in correctly, it is always useful to look at the structure of the imported data first, using the function str, which provides all the column names together with some information on what the columns contain, namely their kind of data (integer numbers, character strings as factors, etc.) as well as the first few values. If you had read in a file of the kind shown in Table 19.2, then this is what the output would look like:

    str(data.table)¶
    'data.frame': 159876 obs. of 3 variables:
     $ TENSE  : Factor w/ 2 levels "present_perfect",..: 1 1 1 1 1 1 1 1 1 1 ...
     $ VARIETY: Factor w/ 2 levels "AmE","BrE": 2 2 2 2 2 2 2 2 2 2 ...
     $ TIME   : Factor w/ 2 levels "1960s","1990s": 1 1 1 1 1 1 1 1 1 1 ...
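The three steps described in this section (organizing one row per data point, saving a tab-separated file, and loading it with read.table) can be tried out without a spreadsheet. The following is only a minimal sketch under some assumptions: the object names cells, raw and tmp are illustrative and not from the chapter, a temporary file stands in for <C:/Temp/example1.txt>, and stringsAsFactors=TRUE is added because R versions from 4.0.0 onwards no longer convert character columns to factors by default.

```r
# Cell counts from Table 19.1 (Hundt and Smith 2009), one row per cell
cells <- data.frame(
  TENSE   = rep(c("present_perfect", "simple_past"), each = 4),
  VARIETY = rep(c("BrE", "BrE", "AmE", "AmE"), times = 2),
  TIME    = rep(c("1960s", "1990s"), times = 4),
  FREQ    = c(4196, 4073, 3538, 3499, 35821, 35276, 37223, 36250)
)

# Step 1: expand to the raw-data layout of Table 19.2 (one row per data point)
raw <- cells[rep(seq_len(nrow(cells)), times = cells$FREQ),
             c("TENSE", "VARIETY", "TIME")]

# Step 2: save as a tab-separated text file (a temporary file is assumed here)
tmp <- tempfile(fileext = ".txt")
write.table(raw, file = tmp, sep = "\t", quote = FALSE, row.names = FALSE)

# Step 3: load the file with the three arguments discussed in the text
data.table <- read.table(file = tmp, header = TRUE, sep = "\t",
                         stringsAsFactors = TRUE)
str(data.table)

# Cross-tabulating the raw data recovers the cell counts of Table 19.1
ftable(table(data.table$TENSE, data.table$VARIETY, data.table$TIME))
```

Expanding the cell counts with rep is just one convenient way to get from a summary table back to raw rows; with real corpus data, the raw table would normally be the direct result of the annotation.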
The simplest way to be able to access all values of a column at the same time by just using the column name involves the function attach, which requires the data frame's name as its only argument and typically does not return any output:

    attach(data.table)¶

While the above is the typical way to input data into R, when the data set in question is small, another way is sometimes simpler, namely entering the data oneself. For example, if you have collected the lengths of five indirect objects and of five direct objects in terms of number of phonemes and want to quickly compare their mean lengths, it is maybe not necessary to create a tab-delimited input file: you can just enter the data into R and assign them to a data structure, a so-called vector, using the function c, which concatenates the elements provided as arguments (numbers or character strings) into a vector.

    indir.objects<-c(1, 2, 3, 4, 5)¶
    dir.objects<-c(3, 5, 4, 4, 3)¶

Then, computing the means is easy and returns 3 and 3.8 for indirect and direct objects respectively:

    mean(indir.objects)¶
    [1] 3
    mean(dir.objects)¶
    [1] 3.8

Once the data are available in either the tabular data frame or the unidimensional vector format, it often takes only a very small amount of R code (usually only one line) to run statistical analyses or produce quite revealing graphs, some of which will be exemplified in the three following sections.

2.2 Two-dimensional frequency data

The first application to be discussed here involves two-dimensional frequency tables, i.e. tables such as Table 19.2, and their evaluation. As an example, I will discuss fictitious data that bear on the question to what degree, if any, the syntactic form of a response to a question is determined by the syntactic form of the question. Let us assume researchers asked subjects altogether 200 instances of two types of questions in Dutch, whose English glosses are listed in (4) and (5).

(4) Of whom is this cap? [prepositional question type]
(5) Whose cap is this? [non-prepositional question type]

The researchers then recorded subjects' answers to these questions and counted how many times the answer was a prepositional or non-prepositional one. One main point of such a study could be to find whether prepositional and non-prepositional questions trigger prepositional and non-prepositional responses respectively.³ Let us assume the frequencies listed in Table 19.3 were obtained.

³ This example is modeled after Levelt and Kelter (1982).

Table 19.3. Fictitious frequencies obtained in a question-answer experiment

| | Question: with prep. | Question: without prep. | Totals |
|---|---|---|---|
| Answer: with prep. | 98 | 64 | 162 |
| Answer: without prep. | 2 | 36 | 38 |
| Totals | 100 | 100 | 200 |

First, the data need to be entered into R. With small two-dimensional tables like these, it is easy to enter them directly rather than prepare the above type of raw data table to read in. In the following line, the function matrix creates a two-dimensional matrix of the four values, which are listed column-wise and arranged into ncol=2 columns.

    data.matrix<-matrix(c(98, 2, 64, 36), ncol=2)¶

Typically, it is useful to also provide the matrix with row and column names because this facilitates the subsequent interpretation of statistics and graphs. The following line provides row names (ANSWER=...) and column names (QUESTION=...) to the matrix and outputs it so one can check it.

    attr(data.matrix, "dimnames")<-list(ANSWER=c("+prep", "-prep"), QUESTION=c("+prep", "-prep"))¶
    data.matrix¶
           QUESTION
    ANSWER  +prep -prep
      +prep    98    64
      -prep     2    36

If you want to see the row and column totals, too, this is how they can be obtained:

    addmargins(data.matrix)¶
           QUESTION
    ANSWER  +prep -prep Sum
      +prep    98    64 162
      -prep     2    36  38
      Sum     100   100 200

Obviously, when the question involves a preposition, the answer nearly always does, too, whereas if the question does not involve a preposition, then the answer still contains a preposition more often than not, but the effect is much less extreme. The question arises whether this difference (98:2 vs. 64:36) is large enough to be significant, a question which is addressed by the chi-square test for independence. This test requires that all observations are independent of each other and that 80+% of the expected frequencies are larger than 5. We assume for now that the 200 responses are completely independent of each other (and will check the expected frequencies shortly). You can then use the function chisq.test, which in the standard form to be discussed here requires the matrix to be tested (data.matrix) and an argument correct, which can be set to TRUE or FALSE depending on whether you want to use a correction for continuity, which we do not want here (because the sample size is greater than 60). For reasons that will become clear shortly, it is best to not just compute the test but also assign the result of the test to another data structure:

    data.matrix.test<-chisq.test(data.matrix, correct=FALSE)¶
    data.matrix.test¶
    Pearson's Chi-squared test
    data: data.matrix
    X-squared = 37.5569, df = 1, p-value = 8.879e-10

The test shows that there is a highly significant effect:⁴ there is definitely a correlation between the questions and the answers. The question is what this correlation looks like and whether the expected frequencies are large enough to allow the chi-square test in the first place. As for the latter, the chi-square test in R does not just compute the above output but also some additional information such as the expected frequencies, i.e. the frequencies one would expect to find if questions and answers were not related. These can be obtained by requesting them from the data structure data.matrix.test:

⁴ The choice of words 'highly significant' is based on the following, frequently-used classification: p<0.001: 'highly significant'; 0.001≤p<0.01: 'very significant'; 0.01≤p<0.05: 'significant'.

    data.matrix.test$exp¶
           QUESTION
    ANSWER  +prep -prep
      +prep    81    81
      -prep    19    19

This table not only shows that the expected frequencies are large enough to allow the chi-square test, it also shows what the effect looks like. We observed:

• more prepositional answers after prepositional questions than expected (98>81);
• fewer prepositional answers after preposition-less questions than expected (64<81);
• fewer preposition-less answers after prepositional questions than expected (2<19);
• more preposition-less answers after preposition-less questions than expected (36>19).

Since this piecemeal comparison of observed and expected frequencies is somewhat tedious, it is usually easier to inspect the so-called Pearson residuals. Pearson residuals can be computed for each cell in a table; they are positive and negative when a cell's frequency is larger or smaller than expected respectively, and the more they deviate from 0, the stronger the effect. From a purely exploratory perspective, Pearson residuals smaller than -3.841 or greater than 3.841 are particularly noteworthy.

    data.matrix.test$res¶
           QUESTION
    ANSWER      +prep     -prep
      +prep  1.888889 -1.888889
      -prep -3.900067  3.900067

The findings are the same as above, but they are easier to identify than from the comparisons of observed and expected frequencies, and we also now see that the effects for the prepositionless answers are somewhat more pronounced.

A graphical representation that makes this even more obvious is the so-called association plot, which is shown in Figure 19.1: black boxes on top of the dashed lines and grey boxes below the dashed lines represent cell frequencies that are larger and smaller than expected respectively; the heights of the boxes are proportional to the above residuals and the widths are proportional to the square roots of the expected frequencies.

    assocplot(t(data.matrix))¶

[Figure 19.1. Association plot for the relation between question and answer syntax (axes: QUESTION and ANSWER, each with the levels +prep and -prep)]

The only thing that remains to be done is to quantify the size of the effect. Since chi-square values are correlated with sample sizes, one cannot readily use chi-square to identify effect sizes or compare them across different studies. Instead, one can use a correlation coefficient called Cramer's V, which falls between 0 and 1, and the larger the value, the stronger the correlation. Cramer's V is computed as shown in (6).

(6) $\text{Cramer's } V = \sqrt{\dfrac{\chi^2}{n \cdot (\min(n_{\text{rows}}, n_{\text{columns}}) - 1)}}$

If we compute Cramer's V for the present data set, we obtain the result in (7).

(7) $\text{Cramer's } V = \sqrt{\dfrac{37.5569}{200 \cdot (\min(2, 2) - 1)}} = \sqrt{\dfrac{37.5569}{200}} \approx 0.433$

In R:

    sqrt(data.matrix.test$stat/(sum(data.matrix)*min(dim(data.matrix)-1)))¶
    X-squared
    0.4333408

Thus, the data show an effect such that the type of question determines the type of answer: prepositional and prepositionless questions yield higher frequencies of occurrence of prepositional and prepositionless answers respectively; the effect is highly significant (p<0.0001) and intermediately strong (Cramer's V=0.433).⁵

⁵ The choice of words 'intermediately strong' is based on the following, frequently-used classification: 0.1≤effect size<0.3: 'small effect'; 0.3≤effect size<0.5: 'medium effect'; effect size≥0.5: 'large effect'.

2.3 Differences between central tendencies of variables

Often, the statistic of interest is not just the observed frequency of some phenomenon, but the central tendency of some phenomenon, i.e. what is commonly referred to as the average. At the risk of simplifying somewhat, we can say that there are two main averages for numeric data, the arithmetic mean and the median. Consider the following vector of numbers:

    x<-c(0, 0, 0, 1, 1, 1, 2, 2, 5)¶

The arithmetic mean of the numbers in x is the quotient of the sum of the values in x (12) divided by the number of elements of x (9), i.e. 12/9. The median, by contrast, is the value you get when you sort the numbers according to their size and pick the one in the middle, i.e. 1:⁶

    mean(x)¶
    [1] 1.333333
    median(x)¶
    [1] 1

⁶ If the vector has an even number of elements, the median is the arithmetic mean of the two middle values.

A frequent scenario is, then, that one wants to compare the central tendencies of two vectors to see whether they are significantly different from each other. Consider the case where you have collected the lengths of subordinate clauses in words in 10 samples of spoken and 10 samples of written data and obtained the following results:

(8) spoken: 11, 10, 9, 6, 8, 9, 11, 7, 8, 6
(9) written: 13, 14, 12, 13, 11, 14, 7, 10, 12, 12

These data were stored in a tab-separated text file <C:/Temp/subcl_lengths.txt> as shown in Table 19.4, which you can load as discussed above in Section 2.1.

    data.table<-read.table("C:/Temp/subcl_lengths.txt", header=TRUE, sep="\t")¶
    str(data.table)¶
    'data.frame': 20 obs. of 3 variables:
     $ CASE  : int 1 2 3 4 5 6 7 8 9 10 ...
     $ MODE  : Factor w/ 2 levels "spoken","written": 1 1 1 1 1 1 1 1 1 1 ...
     $ LENGTH: num 11 10 9 6 8 9 11 7 8 6 ...
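For readers who do not have the input file at hand, a data frame with the same three columns can also be assembled directly from the values in (8) and (9). This is only a sketch: the object names spoken, written and subcl are illustrative, and assigning the result to data.table instead would give an object with the same column names as the one loaded from the file.

```r
# Clause lengths from (8) and (9) in the text
spoken  <- c(11, 10, 9, 6, 8, 9, 11, 7, 8, 6)
written <- c(13, 14, 12, 13, 11, 14, 7, 10, 12, 12)

# One row per clause, one column per variable, as in Table 19.4
subcl <- data.frame(
  CASE   = seq_len(length(spoken) + length(written)),
  MODE   = factor(rep(c("spoken", "written"),
                      times = c(length(spoken), length(written)))),
  LENGTH = c(spoken, written)
)

str(subcl)  # check the structure before any analysis
```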
    attach(data.table)¶

Table 19.4. Lengths of subordinate clauses in two samples (of spoken and written data)

| CASE | MODE | LENGTH |
|---|---|---|
| 1 | spoken | 11 |
| 2 | spoken | 10 |
| ... | ... | ... |
| 19 | written | 12 |
| 20 | written | 12 |

Once the data have been loaded, the best approach is often to explore them graphically. One immensely informative plot for summarizing numeric variables is the so-called boxplot. The corresponding R function, boxplot, takes two arguments: a formula in which a dependent variable (here, the length of the subordinate clause) precedes the tilde and the independent variable (here, the mode) follows it, and the argument notch=TRUE, which creates notches whose function will be explained shortly. The result is shown (in slightly modified form) in Figure 19.2.

    boxplot(LENGTH~MODE, notch=TRUE)¶

[Figure 19.2. Boxplot for the relation between subordinate clause length and mode (x-axis: Mode, with the levels spoken and written)]

This plot provides a great deal of information:

• the thick horizontal lines correspond to the medians;
• the upper and lower horizontal lines indicate the central 50% of the data around the median (approximately the 2nd and 3rd quartiles);
• the upper and lower end of the whiskers extend to the most extreme data point which is no more than 1.5 times the height of the box away from the box;
• values outside of the range of the whiskers are marked individually as small circles;
• the notches on the sides of the boxes provide an approximate 95% confidence interval for the difference of the medians: if they overlap, then the medians are most likely not significantly different.

Of course, we also want to know the exact medians. These can be computed with the following line of code, which basically means 'apply the function median to the data you get when you split the values of LENGTH into the groups resulting from MODE':

    tapply(LENGTH, MODE, median)¶
     spoken written
        8.5    12.0

And since one should always provide a measure of dispersion for measures of central tendency, we apply the same type of code to retrieve a simple measure of dispersion for the lengths in the spoken and the written data, the interquartile ranges, which indicate the spread of the central 50% around the medians:

    tapply(LENGTH, MODE, IQR)¶
     spoken written
       2.50    1.75

From Figure 19.2, it already seems as if the differences between the two modes will be significant since the medians are fairly far apart and the notches do not overlap. In spite of this, the data must, of course, be tested, and the test that would normally be used for such data is the t-test for independent samples (esp. since, unlike in most cases, the data do not differ significantly from a normal distribution).⁷ On the other hand, since the sample sizes are very small and linguistic data will often be non-normal, I will instead discuss a test that is slightly less powerful, but that can be used regardless of whether the data are normally distributed or not, the U-test (sometimes also called the two-sample Wilcoxon test). This test only requires that the observations are all independent of each other and its function in R is wilcox.test. It is best used with four arguments:

• a formula of the same kind as used for boxplot: dependent variable ~ independent variable;
• the argument paired, which can be set to TRUE or FALSE, where TRUE means the values of the two groups form meaningful pairs, and FALSE means the opposite. Since in this case the length of any one subordinate clause in speaking is not related to that of any one subordinate clause from a written file, we set paired=FALSE;
• the argument correct, which can be set to TRUE or FALSE depending on whether you want to apply a correction for continuity or not, as is sometimes recommended for small sample sizes. In the interest of comparability of the test with most other statistical software, we set this to FALSE for now;
• the argument exact, which can be set to TRUE or FALSE depending on whether you want R to compute an exact test (for small sample sizes) or not. Again, in the interest of comparability, we set this to FALSE.

⁷ At the risk of considerable simplification, a distribution of values is normal if, on the whole, most of its values do not differ much from the overall mean and if the differences of values from the overall mean are equally much positive and negative. In graphical terms, normal distributions can usually be identified by a bell-shaped curve/histogram.

With these settings, a U-test yields the following results:

    wilcox.test(LENGTH~MODE, paired=FALSE, correct=FALSE, exact=FALSE)¶
    Wilcoxon rank sum test
    data: LENGTH by MODE
    W = 11, p-value = 0.003028
    alternative hypothesis: true location shift is not equal to 0

The last line can be ignored since it only summarizes which statistical hypothesis was tested, namely that the two distributions are not identical (such that their difference would be 0). All the findings show that subordinate clauses are longer but less diverse in writing (cf. the interquartile ranges), and that the difference between the clause lengths in the two modes of 3.5 words is very significant (p≈0.003).
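To see where the test statistic W comes from, it can be recomputed by hand from the ranks of the pooled data. The sketch below assumes R's convention that W is the rank sum of the first group (here spoken, the first factor level) minus n(n+1)/2 for that group's size n; the names W.by.hand and u.test are illustrative and not part of the chapter.

```r
# Clause lengths from (8) and (9), entered directly so the block runs on its own
LENGTH <- c(11, 10, 9, 6, 8, 9, 11, 7, 8, 6,        # spoken
            13, 14, 12, 13, 11, 14, 7, 10, 12, 12)  # written
MODE <- factor(rep(c("spoken", "written"), each = 10))

# Rank all 20 values together; tied values receive the average of their ranks
ranks <- rank(LENGTH)

# Rank sum of the first group minus its smallest possible value n(n+1)/2
n.spoken  <- sum(MODE == "spoken")
W.by.hand <- sum(ranks[MODE == "spoken"]) - n.spoken * (n.spoken + 1) / 2
W.by.hand

# The same test with the four arguments discussed in the text
u.test <- wilcox.test(LENGTH ~ MODE, paired = FALSE,
                      correct = FALSE, exact = FALSE)
u.test$statistic
u.test$p.value
```

A small W means that the spoken lengths occupy mostly the low ranks, which is the pattern the boxplot already suggested.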
2.4 Correlations between numeric variables

The final method to be discussed here involves the correlation between two variables that are numeric in nature, such as lengths (of XPs), reaction times (in milliseconds), time (in years), numbers of nodes in a phrase structure tree, etc. By computing a correlation coefficient, which usually falls between -1 and +1, one tries to answer the following questions:

• is there a relationship between a variable x and a variable y such that, on the whole, one can say 'the more x, the more y' and/or 'the less x, the less y' or, on the other hand, 'the more x, the less y' and/or 'the less x, the more y'? If the relationship is of the former type, then the correlation coefficient will be >0; if the relationship is of the latter type, then the correlation coefficient will be <0; if there is no relationship between x and y, the correlation coefficient will be ≈0;
• how strong is this relationship? The more the correlation coefficient differs from 0, the stronger the correlation;
• is the correlation statistically significant?

For example, consider the case where one wants to determine whether the frequencies of two lexical items undergo a temporal trend such that, on the whole, they increase or decrease over time. The two lexical items to be considered are in and just because, and the corpus to be investigated is Mark Davies's TIME corpus (http://corpus.byu.edu/time), a corpus containing 100 million words of text of American English from 1923 to the present, as found in TIME magazine.

As a first step, the data have to be entered into R, and this is a case where they can be easily entered into R in the vector format with c. We create one vector for the time periods (using the decades as reference points),

    times<-c(1920, 1930, 1940, 1950, 1960, 1970, 1980, 1990, 2000)

and we create one vector for each lexical item that contains their relative frequencies per 10,000 words in the same order as the vector times contains the decades. That is, the relative frequency of in in the 1920s is 188.7/10,000, the relative frequency of in in the 1930s is 174.8/10,000, etc.:

    lex.in<-c(188.7, 174.8, 195.2, 211.1, 221.2, 200.5, 194.3, 185.8, 192.5)
    lex.jb<-c(0.005, 0.004, 0.005, 0.004, 0.010, 0.009, 0.013, 0.029, 0.039)

Again, it is usually best to first explore the data graphically. If one has two numeric vectors like here, one can use the function plot with a formula where again the dependent variable (the frequency of the lexical item) precedes the tilde and the independent variable (time) follows it. In addition, we can provide the argument type, which specifies the type of plot we want: "p" for points only, "l" for lines only, "b" for both, "h" for histograms/bar charts, etc.

    plot(lex.in~times, type="b")

Especially with data sets larger than the present one, it is often also useful to immediately add a smoother, which is a line that tries to summarize the way the points pattern within the coordinate system. Unlike a linear regression line, which is (too) often used on such occasions, such smoothing lines do not have to be straight but can be curved and are thus often better at identifying curvature and nonlinear trends in the data.

    lines(lowess(lex.in~times))

We can then do the same for just because, and Figure 19.3 shows slightly prettified versions of the graphs we obtain.

    plot(lex.jb~times, type="b")
    lines(lowess(lex.jb~times))

Figure 19.3. Line plots and smoothers for the normalized frequencies of in and just because over time

As with the boxplot, this is another instance where a good graph helps us to analyse our data correctly and already very strongly suggests the outcome of the study. The frequencies of in fluctuate across time without a clear pattern such that, for instance, the relative frequency of in in the last two decades is approximately the same as that in the first. On the other hand, the frequencies of just because exhibit a clear trend such that they clearly increase over time. It is important to note, however, that the growth trend is not linear in the sense that there is at best a very slight increase in the first four decades, then a small but clear increase in the next three decades (from a mean of 0.0045 to a mean of 0.011 per 10,000 words), followed by a very large increase in the last two decades (from a mean of 0.011 to a mean of 0.034 per 10,000 words).

To determine whether the observed patterns are significantly correlated with time or not, one can compute a correlation coefficient. The probably most frequently used one is Pearson's product-moment correlation r (which is related to linear regressions). However, just as a linear regression is often not the best way to inspect data for trends, Pearson's r is often not ideal either. This is because Pearson's r requires that the vectors/variables that are correlated are interval-scaled, do not contain influential outliers, and are bivariately normally distributed, and linguistic data often violate one or more of these assumptions. It is therefore often better to use a measure that, while a little less powerful, is also less sensitive to potentially problematic distributions. One such measure is Kendall's tau τ, which can be computed in R very easily. The necessary function is cor.test, which takes three arguments: the two vectors to be correlated and the argument method, which is set to "kendall".

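The steps described in this section, from entering the data to plotting, smoothing and computing Kendall's tau, can be collected in one short script. The following is a sketch rather than the chapter's own code: the data are the values given above, but the two-panel layout, the null graphics device, the object names tau.in and tau.jb, and the helper function tau.for are assumptions added for illustration. The last two lines restrict the computation for in to two sub-periods, which shows how strongly the coefficient can depend on the period chosen.

```r
# Data as given in the text: decades and relative frequencies per 10,000 words
times  <- c(1920, 1930, 1940, 1950, 1960, 1970, 1980, 1990, 2000)
lex.in <- c(188.7, 174.8, 195.2, 211.1, 221.2, 200.5, 194.3, 185.8, 192.5)
lex.jb <- c(0.005, 0.004, 0.005, 0.004, 0.010, 0.009, 0.013, 0.029, 0.039)

# Draw to a null PDF device so the script also runs non-interactively;
# remove pdf(NULL) and dev.off() to see the plots on screen
pdf(NULL)
par(mfrow = c(1, 2))                      # two panels side by side (assumed layout)
plot(lex.in ~ times, type = "b")          # points and lines for 'in'
lines(lowess(lex.in ~ times))             # add a smoother
plot(lex.jb ~ times, type = "b")          # points and lines for 'just because'
lines(lowess(lex.jb ~ times))
invisible(dev.off())

# Kendall's tau for the whole period
tau.in <- cor.test(times, lex.in, method = "kendall")
# lex.jb contains tied values, so R warns that no exact p-value can be computed;
# the warning is suppressed here
tau.jb <- suppressWarnings(cor.test(times, lex.jb, method = "kendall"))

# Hypothetical helper: Kendall's tau for 'in' within a sub-period only
tau.for <- function(keep) unname(cor(times[keep], lex.in[keep], method = "kendall"))
early <- tau.for(times <= 1960)           # 1920 to 1960
late  <- tau.for(times >= 1960)           # 1960 to 2000

print(tau.in)
print(tau.jb)
print(c(early = early, late = late))
```

Keeping the test results in objects makes it possible to extract individual values later, for example tau.jb$estimate for the coefficient and tau.jb$p.value for the p-value.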
For the entire time span from 1920 to 2000, this is the result for in:

    cor.test(times, lex.in, method="kendall")
            Kendall's rank correlation tau
    data:  times and lex.in
    T = 18, p-value = 1
    alternative hypothesis: true tau is not equal to 0
    sample estimates:
    tau
      0

There is no correlation whatsoever (τ=0) and the result is completely insignificant (p=1). (Note that a restriction of the analysis to the period up to 1960 would yield a high positive correlation, and restriction to the period from 1960 a high negative correlation. Thus, as Figure 19.3 indicates, the result of such analyses always depends on the choice of the period investigated, and it is indispensable to first inspect diachronic data visually before subjecting them to statistical analysis. Hilpert and Gries (2009) discuss techniques such as Variability-based Neighbor Clustering or regression with breakpoints, which can help analysts to discover structure in temporal data.)

The results for just because, on the other hand, are very different:8

    cor.test(times, lex.jb, method="kendall")
            Kendall's rank correlation tau
    data:  times and lex.jb
    z = 2.7406, p-value = 0.006132
    alternative hypothesis: true tau is not equal to 0
    sample estimates:
          tau
    0.7431605

There is a high positive correlation (τ=0.743), which is very significant (p≈0.006). The higher the value for time (i.e. the more recent the corpus data), the higher the relative frequency of just because, and this correlation is very unlikely to arise just by chance.

In sum, the overall relative frequency of in does not change over time, but the frequency of just because does so quite markedly. While the above observations do not exhaust the range of methods that can be applied to the present and similar kinds of data, they already provide a good assessment of whether there is a trend or not, whether it is significant or not, and to some degree at least what its internal structure looks like.

8 I am omitting a warning about exact p-values and ties here, which informs the user that, because the lex.jb values are not all different from each other, the estimated p-value might not be perfectly accurate; for the present discussion, this is not relevant; cf. Hilpert and Gries (2009) for the exact p-value.

3 Concluding remarks

As mentioned at the outset of this chapter, rigorous quantitative analyses are not yet as frequent in linguistics as they could be, but they are on the rise. This chapter has only discussed a few simple tests, and, of course, linguistic data are often much more complex. For example, this chapter has only dealt with monofactorial tests, i.e. tests involving one independent and one dependent variable. However, more advanced scenarios may involve more independent and more dependent variables. This opens up a whole host of interesting research possibilities, but also requires more sophisticated methods to check one's models. Also, the chapter has not discussed cases where interactions - combinations of independent variables that have unexpected effects on dependent variables - arise and how to deal with these. Finally, the section on correlations has only mentioned correlation coefficients that are typically used for linear trends, but has not dealt with other kinds of regression models. As the methodological landscape in linguistics is changing, it is important for the progress within our field(s) that we learn how to handle the kinds of complex and multifaceted scenarios linguistic data pose. I hope that this chapter has provided a first overview of what is possible and has whetted the reader's appetite to apply more methods from this exciting domain to linguistic data.

Pros and potentials

• statistics package R is freely available
• methods allow testing for statistical significance and graphic visualization of distributions
• statistical techniques enable users to see contingencies and patterns that remain implicit without them

Cons and caveats

• quantitative methods and studies must be complemented by qualitative interpretation and validation
• statistical comparisons must make linguistic sense
• user needs some expertise to determine which tests and graphical displays are appropriate

Further reading

Baayen, R. Harald 2008. Analyzing linguistic data: a practical introduction to statistics using R. Cambridge University Press.
Crawley, Michael 2012. The R book. 2nd edn. Chichester: John Wiley.
Fox, John 2005. 'The R commander: a basic statistics graphical user interface to R'. Journal of Statistical Software 14(9): 1-42.
Gries, Stefan Th. 2009. Quantitative corpus linguistics with R: a practical introduction. London and New York: Routledge, Taylor & Francis Group.
Gries, Stefan Th. 2013. Statistics for linguistics with R: a practical introduction. 2nd edn. Berlin and New York: De Gruyter Mouton.
Hilpert, Martin and Gries, Stefan Th. 2009. 'Assessing frequency changes in multi-stage diachronic corpora: applications for historical corpus linguistics and the study of language acquisition'. Literary and Linguistic Computing 24(4): 385-401.
Johnson, Keith 2008. Quantitative methods in linguistics. Malden, MA and Oxford: Blackwell.
Sheskin, David 2011. Handbook of parametric and nonparametric statistical procedures. 5th edn. Boca Raton, FL: Chapman and Hall.
Spector, Phil 2008. Data manipulation with R. Berlin and New York: Springer.

Useful online resources

The website of R: www.r-project.org
The CRAN task views: http://cran.r-project.org/web/views
The ling-r-lang-L mailing list: https://mailman.ucsb.edu/mailman/listinfo/ling-r-lang-l
The Statistics for Linguists with R newsgroup: http://groups.google.com/group/statforling-with-r
An electronic textbook for statistics: www.statsoft.com/textbook/stathome.html

Appendix

The R commander

While R does not by default feature a clickable GUI, some applications provide such a GUI. The best-known of these is probably the R commander (cf. Fox 2005). The two figures below illustrate how, once the data have been read into R, the U-test from Section 2.3 is computed with the R commander.

Figure 19.4. Choosing a U-test / two-sample Wilcoxon test in the R commander

Figure 19.5. Performing a U-test / two-sample Wilcoxon test in the R commander

The reader may wonder why not all statistical tests in the present chapter were introduced using the R commander. The main reason is that, while the R commander is without doubt a great tool, it is nevertheless incomplete and limited to what the package's maintainer included in it.
Since old functions are improved and new functions/packages are developed all the time, the R commander provides access to 'only' the (admittedly considerable number of) functions included by the maintainer. Furthermore, while the R commander is a useful tool in that it always outputs the code that resulted from the user's choices, in my opinion it is too easy to become overly dependent on it and never get to see the real power that R provides as a programming language. I therefore strongly encourage the reader to nearly always use the command line; in the long run, this policy will pay off.