Mixed-Integer Optimization and Global Optimization

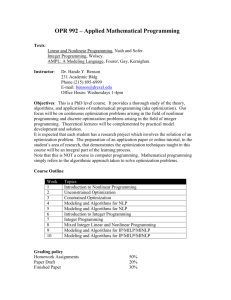

advertisement

Mixed Integer Nonlinear Programming (MINLP)

Sven Leyffer

Jeff Linderoth

MCS Division

Argonne National Lab

leyffer@mcs.anl.gov

ISE Department

Lehigh University

jtl3@lehigh.edu

1 / 160

Overview

1

Introduction, Applications, and Formulations

2

Classical Solution Methods

3

Modern Developments in MINLP

4

Implementation and Software

2 / 160

Part I

Introduction, Applications, and Formulations

3 / 160

The Problem of the Day

Mixed Integer Nonlinear Program (MINLP)

f (x, y )

minimize

x,y

subject to c(x, y ) ≤ 0

x ∈ X , y ∈ Y integer

4 / 160

The Problem of the Day

Mixed Integer Nonlinear Program (MINLP)

f (x, y )

minimize

x,y

subject to c(x, y ) ≤ 0

x ∈ X , y ∈ Y integer

f , c smooth (convex) functions

X , Y polyhedral sets, e.g. Y = {y ∈ [0, 1]p | Ay ≤ b}

5 / 160

The Problem of the Day

Mixed Integer Nonlinear Program (MINLP)

f (x, y )

minimize

x,y

subject to c(x, y ) ≤ 0

x ∈ X , y ∈ Y integer

f , c smooth (convex) functions

X , Y polyhedral sets, e.g. Y = {y ∈ [0, 1]p | Ay ≤ b}

y ∈ Y integer ⇒ hard problem

6 / 160

The Problem of the Day

Mixed Integer Nonlinear Program (MINLP)

f (x, y )

minimize

x,y

subject to c(x, y ) ≤ 0

x ∈ X , y ∈ Y integer

f , c smooth (convex) functions

X , Y polyhedral sets, e.g. Y = {y ∈ [0, 1]p | Ay ≤ b}

y ∈ Y integer ⇒ hard problem

f , c not convex ⇒ very hard problem

7 / 160

Why the MI?

We can use 0-1 (binary) variables for a variety of purposes

Modeling yes/no decisions

Enforcing disjunctions

Enforcing logical conditions

Modeling fixed costs

Modeling piecewise linear functions

8 / 160

Why the MI?

We can use 0-1 (binary) variables for a variety of purposes

Modeling yes/no decisions

Enforcing disjunctions

Enforcing logical conditions

Modeling fixed costs

Modeling piecewise linear functions

If the variable is associated with a physical entity that is indivisible,

then it must be integer

Number of aircraft carriers to to produce. Gomory’s Initial Motivation

9 / 160

A Popular MINLP Method

Dantzig’s Two-Phase Method for MINLP

Adapted by Leyffer and Linderoth

10 / 160

A Popular MINLP Method

Dantzig’s Two-Phase Method for MINLP

1

Adapted by Leyffer and Linderoth

Convince the user that he or she does not wish to solve a mixed

integer nonlinear programming problem at all!

11 / 160

A Popular MINLP Method

Dantzig’s Two-Phase Method for MINLP

Adapted by Leyffer and Linderoth

1

Convince the user that he or she does not wish to solve a mixed

integer nonlinear programming problem at all!

2

Otherwise, solve the continuous relaxation (NLP) and round off the

minimizer to the nearest integer.

12 / 160

A Popular MINLP Method

Dantzig’s Two-Phase Method for MINLP

Adapted by Leyffer and Linderoth

1

Convince the user that he or she does not wish to solve a mixed

integer nonlinear programming problem at all!

2

Otherwise, solve the continuous relaxation (NLP) and round off the

minimizer to the nearest integer.

For 0 − 1 problems, or those in which the |y | is “small”, the

continuous approximation to the discrete decision is not accurate

enough for practical purposes.

Conclusion: MINLP methods must be studied!

13 / 160

Example: Core Reload Operation

(Quist, A.J., 2000)

max. reactor efficiency after reload

subject to diffusion PDE & safety

diffusion PDE ' nonlinear equation

⇒ integer & nonlinear model

avoid reactor becoming sub-critical

14 / 160

Example: Core Reload Operation

(Quist, A.J., 2000)

max. reactor efficiency after reload

subject to diffusion PDE & safety

diffusion PDE ' nonlinear equation

⇒ integer & nonlinear model

avoid reactor becoming overheated

15 / 160

Example: Core Reload Operation

(Quist, A.J., 2000)

look for cycles for moving bundles:

e.g. 4 → 6 → 8 → 10

i.e. bundle moved from 4 to 6 ...

model with binary xilm ∈ {0, 1}

xilm = 1

⇔ node i has bundle l of cycle m

16 / 160

AMPL Model of Core Reload Operation

Exactly one bundle per node:

L X

M

X

xilm = 1

∀i ∈ I

l=1 m=1

AMPL model:

var x {I,L,M} binary ;

Bundle {i in I}: sum{l in L, m in M} x[i,l,m] = 1 ;

Multiple Choice: One of the most common uses of IP

Full AMPL model c-reload.mod at

www.mcs.anl.gov/~leyffer/MacMINLP/

17 / 160

Gas Transmission Problem

(De Wolf and Smeers, 2000)

Belgium has no gas!

18 / 160

Gas Transmission Problem

(De Wolf and Smeers, 2000)

Belgium has no gas!

All natural gas is imported

from Norway, Holland, or

Algeria.

Supply gas to all demand

points in a network in a

minimum cost fashion.

19 / 160

Gas Transmission Problem

(De Wolf and Smeers, 2000)

Belgium has no gas!

All natural gas is imported

from Norway, Holland, or

Algeria.

Supply gas to all demand

points in a network in a

minimum cost fashion.

Gas is pumped through the

network with a series of

compressors

There are constraints on the

pressure of the gas within

the pipe

20 / 160

Pressure Loss is Nonlinear

Assume horizontal pipes and

steady state flows

Pressure loss p across a pipe is

related to the flow rate f as

2

2

=

pin

− pout

1

sign(f )f 2

Ψ

Ψ: “Friction Factor”

21 / 160

Gas Transmission: Problem Input

Network (N, A). A = Ap ∪ Aa

Aa : active arcs have compressor. Flow rate can increase on arc

Ap : passive arcs simply conserve flow rate

Ns ⊆ N: set of supply nodes

ci , i ∈ Ns : Purchase cost of gas

s i , s i : Lower and upper bounds on gas “supply” at node i

p i , p i : Lower and upper bounds on gas pressure at node i

22 / 160

Gas Transmission: Problem Input

Network (N, A). A = Ap ∪ Aa

Aa : active arcs have compressor. Flow rate can increase on arc

Ap : passive arcs simply conserve flow rate

Ns ⊆ N: set of supply nodes

ci , i ∈ Ns : Purchase cost of gas

s i , s i : Lower and upper bounds on gas “supply” at node i

p i , p i : Lower and upper bounds on gas pressure at node i

si , i ∈ N: supply at node i.

si > 0 ⇒ gas added to the network at node i

si < 0 ⇒ gas removed from the network at node i to meet demand

fij , (i, j) ∈ A: flow along arc (i, j)

f (i, j) > 0 ⇒ gas flows i → j

f (i, j) < 0 ⇒ gas flows j → i

23 / 160

Gas Transmission Model

min

X

cj sj

j∈Ns

subject to

X

sign(fij )fij2 −

sign(fij )fij2 −

fij

j|(i,j)∈A

Ψij (pi2 − pj2 )

Ψij (pi2 − pj2 )

si

pi

fij

= si

=

≥

∈

∈

≥

∀i ∈ N

0

∀(i, j) ∈ Ap

0

∀(i, j) ∈ Aa

∀i ∈ N

[s i , s i ]

[p i , p i ]

∀i ∈ N

0

∀(i, j) ∈ Aa

24 / 160

Your First Modeling Trick

Don’t include nonlinearities or nonconvexities unless necessary!

Replace pi2 ← ρi

25 / 160

Your First Modeling Trick

Don’t include nonlinearities or nonconvexities unless necessary!

Replace pi2 ← ρi

sign(fij )fij2 − Ψij (ρi − ρj ) = 0

∀(i, j) ∈ Ap

2

fij − Ψij (ρi − ρj ) ≥ 0

∀(i, j) ∈ Aa

p p

∀i ∈ N

ρi ∈ [ p i , p i ]

26 / 160

Your First Modeling Trick

Don’t include nonlinearities or nonconvexities unless necessary!

Replace pi2 ← ρi

sign(fij )fij2 − Ψij (ρi − ρj ) = 0

∀(i, j) ∈ Ap

2

fij − Ψij (ρi − ρj ) ≥ 0

∀(i, j) ∈ Aa

p p

∀i ∈ N

ρi ∈ [ p i , p i ]

This trick only works because

1

2

pi2 terms appear only in the bound constraints

Also fij ≥ 0 ∀(i, j) ∈ Aa

This model is nonconvex: sign(fij )fij2 is a nonconvex function

Some solvers do not like sign

27 / 160

Dealing with sign(·): The NLP Way

Use auxiliary binary variables to indicate direction of flow

Let |fij | ≤ F ∀(i, j) ∈ Ap

1 fij ≥ 0

zij =

0 fij ≤ 0

fij ≥ −F (1 − zij )

fij ≤ Fzij

Note that

sign(fij ) = 2zij − 1

Write constraint as

(2zij − 1)fij2 − Ψij (ρi − ρj ) = 0.

28 / 160

Special Ordered Sets

Sven thinks this ’NLP trick’ is pretty cool

29 / 160

Special Ordered Sets

Sven thinks this ’NLP trick’ is pretty cool

It is not how it is done in De Wolf and Smeers (2000).

30 / 160

Special Ordered Sets

Sven thinks this ’NLP trick’ is pretty cool

It is not how it is done in De Wolf and Smeers (2000).

Heuristic for finding a good starting solution, then a local

optimization approach based on a piecewise-linear simplex method

Another (similar) approach involves approximating the nonlinear

function by piecewise linear segments, but searching for the globally

optimal solution: Special Ordered Sets of Type 2

If the “multidimensional” nonlinearity cannot be removed, resort to

Special Ordered Sets of Type 3

31 / 160

Portfolio Management

N: Universe of asset to purchase

xi : Amount of asset i to hold

B: Budget

(

min

|N|

x∈R+

u(x) |

)

X

xi = B

i∈N

32 / 160

Portfolio Management

N: Universe of asset to purchase

xi : Amount of asset i to hold

B: Budget

(

min

|N|

x∈R+

u(x) |

)

X

xi = B

i∈N

def

Markowitz: u(x) = −αT x + λx T Qx

α: Expected returns

Q: Variance-covariance matrix of expected returns

λ: Risk aversion parameter

33 / 160

More Realistic Models

b ∈ R|N| of “benchmark” holdings

def

Benchmark Tracking: u(x) = (x − b)T Q(x − b)

Constraint on E[Return]: αT x ≥ r

34 / 160

More Realistic Models

b ∈ R|N| of “benchmark” holdings

def

Benchmark Tracking: u(x) = (x − b)T Q(x − b)

Constraint on E[Return]: αT x ≥ r

Limit Names: |i ∈ N : xi > 0| ≤ K

Use binary indicator variables to model the implication

xi > 0 ⇒ yi = 1

Implication modeled with variable upper bounds:

xi ≤ Byi

P

i∈N

∀i ∈ N

yi ≤ K

35 / 160

Even More Models

Min Holdings: (xi = 0) ∨ (xi ≥ m)

Model implication: xi > 0 ⇒ xi ≥ m

xi > 0 ⇒ yi = 1 ⇒ xi ≥ m

xi ≤ Byi , xi ≥ myi ∀i ∈ N

36 / 160

Even More Models

Min Holdings: (xi = 0) ∨ (xi ≥ m)

Model implication: xi > 0 ⇒ xi ≥ m

xi > 0 ⇒ yi = 1 ⇒ xi ≥ m

xi ≤ Byi , xi ≥ myi ∀i ∈ N

Round Lots: xi ∈ {kLi , k = 1, 2, . . .}

xi − zi Li = 0, zi ∈ Z+ ∀i ∈ N

37 / 160

Even More Models

Min Holdings: (xi = 0) ∨ (xi ≥ m)

Model implication: xi > 0 ⇒ xi ≥ m

xi > 0 ⇒ yi = 1 ⇒ xi ≥ m

xi ≤ Byi , xi ≥ myi ∀i ∈ N

Round Lots: xi ∈ {kLi , k = 1, 2, . . .}

xi − zi Li = 0, zi ∈ Z+ ∀i ∈ N

Vector h of initial holdings

Transactions: ti = |xi − hi |

P

Turnover: i∈N ti ≤ ∆

P

Transaction Costs: i∈N ci ti in objective

P

Market Impact: i∈N γi ti2 in objective

38 / 160

Multiproduct Batch Plants

(Kocis and Gross-

mann, 1988)

M: Batch Processing Stages

N: Different Products

H: Horizon Time

Qi : Required quantity of product i

tij : Processing time product i stage j

Sij : “Size Factor” product i stage j

39 / 160

Multiproduct Batch Plants

(Kocis and Gross-

mann, 1988)

M: Batch Processing Stages

N: Different Products

H: Horizon Time

Qi : Required quantity of product i

tij : Processing time product i stage j

Sij : “Size Factor” product i stage j

Bi :

Vj :

Nj :

Ci :

Batch size of product i ∈ N

Stage j size: Vj ≥ Sij Bi ∀i, j

Number of machines at stage j

Longest stage time for product i: Ci ≥ tij /Nj ∀i, j

40 / 160

Multiproduct Batch Plants

min

X

βj

αj Nj Vj

j∈M

s.t.

Vj − Sij Bi ≥ 0

∀i ∈ N, ∀j ∈ M

Ci Nj ≥ tij

∀i ∈ N, ∀j ∈ M

X Qi

Ci ≤ H

Bi

i∈N

Bound Constraints on Vj , Ci , Bi , Nj

Nj ∈ Z

∀j ∈ M

41 / 160

Modeling Trick #2

Horizon Time and Objective Function Nonconvex. :-(

42 / 160

Modeling Trick #2

Horizon Time and Objective Function Nonconvex. :-(

Sometimes variable transformations work!

vj = ln(Vj ), nj = ln(Nj ), bi = ln(Bi ), ci = ln Ci

43 / 160

Modeling Trick #2

Horizon Time and Objective Function Nonconvex. :-(

Sometimes variable transformations work!

vj = ln(Vj ), nj = ln(Nj ), bi = ln(Bi ), ci = ln Ci

X

min

αj e Nj +βj Vj

j∈M

s.t. vj − ln(Sij )bi

X ci + nj

Qi e Ci −Bi

≥

≥

≤

0

∀i ∈ N, ∀j ∈ M

ln(τij )

∀i ∈ N, ∀j ∈ M

H

i∈N

(Transformed) Bound Constraints on Vj , Ci , Bi

44 / 160

How to Handle the Integrality?

But what to do about the integrality?

1 ≤ Nj ≤ N j

∀j ∈ M, Nj ∈ Z

∀j ∈ M

nj ∈ {0, ln(2), ln(3), . . . ...}

1 nj takes value ln(k)

Ykj =

0 Otherwise

nj −

K

X

ln(k)Ykj

= 0

∀j ∈ M

= 1

∀j ∈ M

k=1

K

X

Ykj

k=1

This model is available at http://www-unix.mcs.anl.gov/

~leyffer/macminlp/problems/batch.mod

45 / 160

A Small Smattering of Other Applications

Chemical Engineering Applications:

process synthesis (Kocis and Grossmann, 1988)

batch plant design (Grossmann and Sargent, 1979)

cyclic scheduling (Jain, V. and Grossmann, I.E., 1998)

design of distillation columns (Viswanathan and Grossmann, 1993)

pump configuration optimization (Westerlund, T., Pettersson, F. and

Grossmann, I.E., 1994)

Forestry/Paper

production (Westerlund, T., Isaksson, J. and Harjunkoski, I., 1995)

trimloss minimization (Harjunkoski, I., Westerlund, T., Pörn, R. and

Skrifvars, H., 1998)

Topology Optimization (Sigmund, 2001)

46 / 160

Part II

Classical Solution Methods

47 / 160

Classical Solution Methods for MINLP

1

Classical Branch-and-Bound

2

Outer Approximation & Benders Decomposition

Hybrid Methods

3

LP/NLP Based Branch-and-Bound

Integrating SQP with Branch-and-Bound

48 / 160

Branch-and-Bound

Solve relaxed NLP (0 ≤ y ≤ 1 continuous relaxation)

. . . solution value provides lower bound

integer feasible

etc.

Branch on yi non-integral

Solve NLPs & branch until

1

2

3

•

Node infeasible ...

Node integer feasible ... ⇒ get upper bound (U)

N

Lower bound ≥ U ...

etc.

yi = 1

dominated

by upper bound

yi = 0

infeasible

Search until no unexplored nodes on tree

49 / 160

Variable Selection for Branch-and-Bound

Assume yi ∈ {0, 1} for simplicity ...

(x̂, ŷ ) fractional solution to parent node; fˆ = f (x̂, ŷ )

1

maximal fractional branching: choose ŷi closest to

1

2

max {min(1 − ŷi , ŷi )}

i

50 / 160

Variable Selection for Branch-and-Bound

Assume yi ∈ {0, 1} for simplicity ...

(x̂, ŷ ) fractional solution to parent node; fˆ = f (x̂, ŷ )

1

maximal fractional branching: choose ŷi closest to

1

2

max {min(1 − ŷi , ŷi )}

i

2

strong branching: (approx) solve all NLP children:

f (x, y )

minimize

x,y

+/−

fi

←

subject to c(x, y ) ≤ 0

x ∈ X , y ∈ Y , yi = 1/0

branching variable yi that changes objective the most:

max min(fi + , fi − )

i

51 / 160

Node Selection for Branch-and-Bound

Which node n on tree T should be solved next?

1 depth-first search: select deepest node in tree

minimizes number of NLP nodes stored

exploit warm-starts (MILP/MIQP only)

52 / 160

Node Selection for Branch-and-Bound

Which node n on tree T should be solved next?

1 depth-first search: select deepest node in tree

minimizes number of NLP nodes stored

exploit warm-starts (MILP/MIQP only)

2

best estimate: choose node with best expected integer soln

X

+

min fp(n) +

min ei (1 − yi ), ei− yi

n∈T

i:yi fractional

+/−

where fp(n) = value of parent node, ei

= pseudo-costs

summing pseudo-cost estimates for all integers in subtree

53 / 160

Outer Approximation (Duran and Grossmann, 1986)

Motivation: avoid solving huge number of NLPs

• Exploit MILP/NLP solvers: decompose integer/nonlinear part

Key idea: reformulate MINLP as MILP (implicit)

• Solve alternating sequence of MILP & NLP

NLP subproblem yj fixed:

f (x, yj )

minimize

x

NLP(yj )

subject to c(x, yj ) ≤ 0

x ∈X

MILP

NLP(y j)

Main Assumption: f , c are convex

54 / 160

Outer Approximation (Duran and Grossmann, 1986)

η

• let (xj , yj ) solve NLP(yj )

• linearize f , c about (xj , yj ) =: zj

• new objective variable η ≥ f (x, y )

• MINLP (P) ≡ MILP (M)

f(x)

minimize η

z=(x,y ),η

subject to η ≥ fj + ∇fjT (z − zj ) ∀yj ∈ Y

(M)

0 ≥ cj + ∇cjT (z − zj ) ∀yj ∈ Y

x ∈ X , y ∈ Y integer

SNAG: need all yj ∈ Y linearizations

55 / 160

Outer Approximation (Duran and Grossmann, 1986)

(Mk ): lower bound (underestimate convex f , c)

NLP(yj ): upper bound U (fixed yj )

NLP gives

linearization

NLP( y ) subproblem

MILP finds

new y

MILP master program

MILP infeasible?

No

Yes

STOP

⇒ stop, if lower bound ≥ upper bound

56 / 160

Outer Approximation & Benders Decomposition

Take OA cuts for zj := (xj , yj ) ... wlog X = Rn

η ≥ fj + ∇fjT (z − zj )

&

0 ≥ cj + ∇cjT (z − zj )

sum with (1, λj ) ... λj multipliers of NLP(yj )

T

η ≥ fj + λT

j cj + (∇fj + ∇cj λj ) (z − zj )

KKT conditions of NLP(yj ) ⇒ ∇x fj + ∇x cj λj = 0

... eliminate x components from valid inequality in y

⇒

η ≥ fj + (∇y fj + ∇y cj λj )T (y − yj )

NB: µj = ∇y fj + ∇y cj λj multiplier of y = yj in NLP(yj )

References: (Geoffrion, 1972)

57 / 160

LP/NLP Based Branch-and-Bound

AIM: avoid re-solving MILP master (M)

Consider MILP branch-and-bound

58 / 160

LP/NLP Based Branch-and-Bound

AIM: avoid re-solving MILP master (M)

Consider MILP branch-and-bound

integer

feasible

interrupt MILP, when yj found

⇒ solve NLP(yj ) get xj

59 / 160

LP/NLP Based Branch-and-Bound

AIM: avoid re-solving MILP master (M)

Consider MILP branch-and-bound

interrupt MILP, when yj found

⇒ solve NLP(yj ) get xj

linearize f , c about (xj , yj )

⇒ add linearization to tree

60 / 160

LP/NLP Based Branch-and-Bound

AIM: avoid re-solving MILP master (M)

Consider MILP branch-and-bound

interrupt MILP, when yj found

⇒ solve NLP(yj ) get xj

linearize f , c about (xj , yj )

⇒ add linearization to tree

continue MILP tree-search

... until lower bound ≥ upper bound

61 / 160

LP/NLP Based Branch-and-Bound

need access to MILP solver ... call back

◦ exploit good MILP (branch-cut-price) solver

◦ (Akrotirianakis et al., 2001) use Gomory cuts in tree-search

preliminary results: order of magnitude faster than OA

◦ same number of NLPs, but only one MILP

similar ideas for Benders & Extended Cutting Plane methods

recent implementation by CMU/IBM group

References: (Quesada and Grossmann, 1992)

62 / 160

Integrating SQP & Branch-and-Bound

AIM: Avoid solving NLP node to convergence.

Sequential Quadratic Programming (SQP)

→ solve sequence (QPk ) at every node

fk + ∇fkT d + 21 d T Hk d

minimize

d

subject to ck + ∇ckT d ≤ 0

(QPk )

xk + dx ∈ X

yk + dy ∈ Ŷ .

Early branching:

After QP step choose non-integral yik+1 , branch & continue SQP

References: (Borchers and Mitchell, 1994; Leyffer, 2001)

63 / 160

Integrating SQP & Branch-and-Bound

SNAG: (QPk ) not lower bound

⇒ no fathoming from upper bound

minimize

d

fk + ∇fkT d + 12 d T Hk d

subject to ck + ∇ckT d ≤ 0

xk + dx ∈ X

yk + dy ∈ Ŷ .

NB: (QPk ) inconsistent and trust-region active ⇒ do not fathom

64 / 160

Integrating SQP & Branch-and-Bound

SNAG: (QPk ) not lower bound

⇒ no fathoming from upper bound

minimize

d

fk + ∇fkT d + 12 d T Hk d

subject to ck + ∇ckT d ≤ 0

xk + dx ∈ X

yk + dy ∈ Ŷ .

Remedy: Exploit OA underestimating property (Leyffer, 2001):

add objective cut fk + ∇fkT d ≤ U − to (QPk )

fathom node, if (QPk ) inconsistent

NB: (QPk ) inconsistent and trust-region active ⇒ do not fathom

65 / 160

Comparison of Classical MINLP Techniques

Summary of numerical experience

1

Quadratic OA master: usually fewer iteration

MIQP harder to solve

2

NLP branch-and-bound faster than OA

... depends on MIP solver

3

LP/NLP-based-BB order of magnitude faster than OA

. . . also faster than B&B

4

Integrated SQP-B&B up to 3× faster than B&B

' number of QPs per node

5

ECP works well, if function/gradient evals expensive

66 / 160

Part III

Modern Developments in MINLP

67 / 160

Modern Methods for MINLP

1

Formulations

Relaxations

Good formulations: big M 0 s and disaggregation

2

Cutting Planes

Cuts from relaxations and special structures

Cuts from integrality

3

Handling Nonconvexity

Envelopes

Methods

68 / 160

Relaxations

def

z(S) = minx∈S f (x)

def

z(T ) = minx∈T f (x)

Independent of f , S, T :

z(T ) ≤ z(S)

S

T

69 / 160

Relaxations

def

z(S) = minx∈S f (x)

def

z(T ) = minx∈T f (x)

Independent of f , S, T :

z(T ) ≤ z(S)

If xT∗ = arg minx∈T f (x)

S

And xT∗ ∈ S, then

T

70 / 160

Relaxations

def

z(S) = minx∈S f (x)

def

z(T ) = minx∈T f (x)

Independent of f , S, T :

z(T ) ≤ z(S)

If xT∗ = arg minx∈T f (x)

S

And xT∗ ∈ S, then

xT∗ = arg minx∈S f (x)

T

71 / 160

UFL: Uncapacitated Facility Location

Facilities: J

Customers: I

min

X

fj x j +

j∈J

X

XX

fij yij

i∈I j∈J

∀i ∈ I

yij

= 1

yij

≤ |I |xj

j∈J

X

∀j ∈ J

(1)

i∈I

OR yij

≤ xj

∀i ∈ I , j ∈ J

(2)

72 / 160

UFL: Uncapacitated Facility Location

Facilities: J

Customers: I

min

X

fj x j +

j∈J

X

XX

fij yij

i∈I j∈J

∀i ∈ I

yij

= 1

yij

≤ |I |xj

j∈J

X

∀j ∈ J

(1)

i∈I

OR yij

≤ xj

∀i ∈ I , j ∈ J

(2)

Which formulation is to be preferred?

73 / 160

UFL: Uncapacitated Facility Location

Facilities: J

Customers: I

min

X

fj x j +

j∈J

X

XX

fij yij

i∈I j∈J

∀i ∈ I

yij

= 1

yij

≤ |I |xj

j∈J

X

∀j ∈ J

(1)

i∈I

OR yij

≤ xj

∀i ∈ I , j ∈ J

(2)

Which formulation is to be preferred?

I = J = 40. Costs random.

74 / 160

UFL: Uncapacitated Facility Location

Facilities: J

Customers: I

min

X

fj x j +

j∈J

X

XX

fij yij

i∈I j∈J

∀i ∈ I

yij

= 1

yij

≤ |I |xj

j∈J

X

∀j ∈ J

(1)

i∈I

OR yij

≤ xj

∀i ∈ I , j ∈ J

(2)

Which formulation is to be preferred?

I = J = 40. Costs random.

Formulation 1. 53,121 seconds, optimal solution.

Formulation 2. 2 seconds, optimal solution.

75 / 160

Valid Inequalities

Sometimes we can get a better formulation by dynamically

improving it.

An inequality π T x ≤ π0 is a valid inequality for S if

π T x ≤ π0 ∀x ∈ S

Alternatively: maxx∈S {π T x} ≤ π0

76 / 160

Valid Inequalities

Sometimes we can get a better formulation by dynamically

improving it.

An inequality π T x ≤ π0 is a valid inequality for S if

π T x ≤ π0 ∀x ∈ S

Alternatively: maxx∈S {π T x} ≤ π0

Thm: (Hahn-Banach). Let S ⊂ Rn be

a closed, convex set, and let x̂ 6∈ S.

π Tx =

π0

Then there exists π ∈ Rn such that

π T x̂ > max{π T x}

x̂

S

x∈S

77 / 160

Nonlinear Branch-and-Cut

Consider MINLP

fxT x + fyT y

minimize

x,y

subject to c(x, y ) ≤ 0

y ∈ {0, 1}p , 0 ≤ x ≤ U

Note the Linear objective

This is WLOG:

min f (x, y )

⇔

min η s.t. η ≥ f (x, y )

78 / 160

It’s Actually Important!

We want to approximate the convex hull of integer solutions, but

without a linear objective function, the solution to the relaxation

might occur in the interior.

No Separating Hyperplane! :-(

min(y1 − 1/2)2 + (y2 − 1/2)2

y2

s.t. y1 ∈ {0, 1}, y2 ∈ {0, 1}

(ŷ1 , ŷ2 )

y1

79 / 160

It’s Actually Important!

We want to approximate the convex hull of integer solutions, but

without a linear objective function, the solution to the relaxation

might occur in the interior.

No Separating Hyperplane! :-(

min(y1 − 1/2)2 + (y2 − 1/2)2

y2

s.t. y1 ∈ {0, 1}, y2 ∈ {0, 1}

(ŷ1 , ŷ2 )

η ≥ (y1 − 1/2)2 + (y2 − 1/2)2

η

y1

80 / 160

Valid Inequalities From Relaxations

Idea: Inequalities valid for a relaxation are valid for original

Generating valid inequalities for a relaxation is often easier.

x̂

πT x = π

0

S

T

Separation Problem over T:

Given x̂, T find (π, π0 ) such

that π T x̂ > π0 ,

π T x ≤ π0 ∀x ∈ T

81 / 160

Simple Relaxations

Idea: Consider one row relaxations

82 / 160

Simple Relaxations

Idea: Consider one row relaxations

If P = {x ∈ {0, 1}n | Ax ≤ b}, then for any row i,

Pi = {x ∈ {0, 1}n | aiT x ≤ bi } is a relaxation of P.

83 / 160

Simple Relaxations

Idea: Consider one row relaxations

If P = {x ∈ {0, 1}n | Ax ≤ b}, then for any row i,

Pi = {x ∈ {0, 1}n | aiT x ≤ bi } is a relaxation of P.

If the intersection of the relaxations is a good approximation to the

true problem, then the inequalities will be quite useful.

84 / 160

Simple Relaxations

Idea: Consider one row relaxations

If P = {x ∈ {0, 1}n | Ax ≤ b}, then for any row i,

Pi = {x ∈ {0, 1}n | aiT x ≤ bi } is a relaxation of P.

If the intersection of the relaxations is a good approximation to the

true problem, then the inequalities will be quite useful.

Crowder et al. (1983) is the seminal paper that shows this to be true

for IP.

85 / 160

Simple Relaxations

Idea: Consider one row relaxations

If P = {x ∈ {0, 1}n | Ax ≤ b}, then for any row i,

Pi = {x ∈ {0, 1}n | aiT x ≤ bi } is a relaxation of P.

If the intersection of the relaxations is a good approximation to the

true problem, then the inequalities will be quite useful.

Crowder et al. (1983) is the seminal paper that shows this to be true

for IP.

MINLP: Single (linear) row relaxations are also valid ⇒ same

inequalities can also be used

86 / 160

Knapsack Covers

K = {x ∈ {0, 1}n | aT x ≤ b}

A set C ⊆ N is a cover if

P

j∈C

aj > b

A cover C is a minimal cover if C \ j is not a cover ∀j ∈ C

87 / 160

Knapsack Covers

K = {x ∈ {0, 1}n | aT x ≤ b}

A set C ⊆ N is a cover if

P

j∈C

aj > b

A cover C is a minimal cover if C \ j is not a cover ∀j ∈ C

If C ⊆ N is a cover, then the cover inequality

X

xj ≤ |C | − 1

j∈C

is a valid inequality for S

Sometimes (minimal) cover inequalities are facets of conv(K )

88 / 160

Other Substructures

Single node flow: (Padberg et al., 1985)

X

|N|

xj ≤ b, xj ≤ uj yj ∀ j ∈ N

S = x ∈ R+ , y ∈ {0, 1}|N| |

j∈N

Knapsack with single continuous variable: (Marchand and Wolsey,

1999)

X

S = x ∈ R+ , y ∈ {0, 1}|N| |

aj y j ≤ b + x

j∈N

Set Packing: (Borndörfer and Weismantel, 2000)

n

o

|N|

S = y ∈ {0, 1} | Ay ≤ e

A ∈ {0, 1}|M|×|N| , e = (1, 1, . . . , 1)T

89 / 160

The Chvátal-Gomory Procedure

A general procedure for generating valid inequalities for integer

programs

90 / 160

The Chvátal-Gomory Procedure

A general procedure for generating valid inequalities for integer

programs

Let the columns of A ∈ Rm×n be denoted by {a1 , a2 , . . . an }

S = {y ∈ Zn+ | Ay ≤ b}.

1

2

3

4

Choose nonnegative multipliers u ∈P

Rm

+

T

T

u Ay ≤ u b is a valid inequality ( j∈N u T aj yj ≤ u T b).

P

bu T a cy ≤ u T b (Since y ≥ 0).

Pj∈N T j j

T

T

j∈N bu aj cyj ≤ bu bc is valid for S since bu aj cyj is an integer

91 / 160

The Chvátal-Gomory Procedure

A general procedure for generating valid inequalities for integer

programs

Let the columns of A ∈ Rm×n be denoted by {a1 , a2 , . . . an }

S = {y ∈ Zn+ | Ay ≤ b}.

1

2

3

4

Choose nonnegative multipliers u ∈P

Rm

+

T

T

u Ay ≤ u b is a valid inequality ( j∈N u T aj yj ≤ u T b).

P

bu T a cy ≤ u T b (Since y ≥ 0).

Pj∈N T j j

T

T

j∈N bu aj cyj ≤ bu bc is valid for S since bu aj cyj is an integer

Simply Amazing: This simple procedure suffices to generate every

valid inequality for an integer program

92 / 160

Extension to MINLP

(Çezik and Iyengar, 2005)

This simple idea also extends to mixed 0-1 conic programming

minimize f T z

def

z = (x,y )

subject to Az K b

y ∈ {0, 1}p , 0 ≤ x ≤ U

K: Homogeneous, self-dual, proper, convex cone

x K y ⇔ (x − y ) ∈ K

93 / 160

Gomory On Cones

(Çezik and Iyengar, 2005)

LP: Kl = Rn+

SOCP: Kq = {(x0 , x̄) | x0 ≥ kx̄k}

SDP: Ks = {x = vec(X ) | X = X T , X p.s.d}

94 / 160

Gomory On Cones

(Çezik and Iyengar, 2005)

LP: Kl = Rn+

SOCP: Kq = {(x0 , x̄) | x0 ≥ kx̄k}

SDP: Ks = {x = vec(X ) | X = X T , X p.s.d}

def

Dual Cone: K∗ = {u | u T z ≥ 0 ∀z ∈ K}

Extension is clear from the following equivalence:

Az K b

⇔ u T Az ≥ u T b ∀u K∗ 0

95 / 160

Gomory On Cones

(Çezik and Iyengar, 2005)

LP: Kl = Rn+

SOCP: Kq = {(x0 , x̄) | x0 ≥ kx̄k}

SDP: Ks = {x = vec(X ) | X = X T , X p.s.d}

def

Dual Cone: K∗ = {u | u T z ≥ 0 ∀z ∈ K}

Extension is clear from the following equivalence:

Az K b

⇔ u T Az ≥ u T b ∀u K∗ 0

Many classes of nonlinear inequalities can be represented as

Ax Kq b or Ax Ks b

96 / 160

Using Gomory Cuts in MINLP

(Akrotirianakis et al., 2001)

LP/NLP Based Branch-and-Bound solves MILP instances:

minimize η

def

z = (x,y ),η

subject to η ≥ fj + ∇fjT (z − zj ) ∀yj ∈ Y k

0 ≥ cj + ∇cjT (z − zj ) ∀yj ∈ Y k

x ∈ X , y ∈ Y integer

97 / 160

Using Gomory Cuts in MINLP

(Akrotirianakis et al., 2001)

LP/NLP Based Branch-and-Bound solves MILP instances:

minimize η

def

z = (x,y ),η

subject to η ≥ fj + ∇fjT (z − zj ) ∀yj ∈ Y k

0 ≥ cj + ∇cjT (z − zj ) ∀yj ∈ Y k

x ∈ X , y ∈ Y integer

Create Gomory mixed integer cuts from

η ≥ fj + ∇fjT (z − zj )

0 ≥ cj + ∇cjT (z − zj )

Akrotirianakis et al. (2001) shows modest improvements

98 / 160

Using Gomory Cuts in MINLP

(Akrotirianakis et al., 2001)

LP/NLP Based Branch-and-Bound solves MILP instances:

minimize η

def

z = (x,y ),η

subject to η ≥ fj + ∇fjT (z − zj ) ∀yj ∈ Y k

0 ≥ cj + ∇cjT (z − zj ) ∀yj ∈ Y k

x ∈ X , y ∈ Y integer

Create Gomory mixed integer cuts from

η ≥ fj + ∇fjT (z − zj )

0 ≥ cj + ∇cjT (z − zj )

Akrotirianakis et al. (2001) shows modest improvements

Research Question: Other cut classes?

Research Question: Exploit “outer approximation” property?

99 / 160

Disjunctive Cuts for MINLP

(Stubbs and Mehrotra, 1999)

Extension of Disjunctive Cuts for MILP: (Balas, 1979; Balas et al., 1993)

def

Continuous relaxation (z = (x, y ))

x

def

C = {z|c(z) ≤ 0, 0 ≤ y ≤ 1, 0 ≤ x ≤ U}

continuous

relaxation

y

100 / 160

Disjunctive Cuts for MINLP

(Stubbs and Mehrotra, 1999)

Extension of Disjunctive Cuts for MILP: (Balas, 1979; Balas et al., 1993)

def

Continuous relaxation (z = (x, y ))

x

def

C = {z|c(z) ≤ 0, 0 ≤ y ≤ 1, 0 ≤ x ≤ U}

def

C = conv({x ∈ C | y ∈ {0, 1}p })

convex

hull

y

101 / 160

Disjunctive Cuts for MINLP

(Stubbs and Mehrotra, 1999)

Extension of Disjunctive Cuts for MILP: (Balas, 1979; Balas et al., 1993)

def

Continuous relaxation (z = (x, y ))

x

def

C = {z|c(z) ≤ 0, 0 ≤ y ≤ 1, 0 ≤ x ≤ U}

def

C = conv({x ∈ C | y ∈ {0, 1}p })

0/1 def

Cj

= {z ∈ C |yj = 0/1}

z = λ0 u0 + λ1 u1

def

λ0 + λ1 = 1, λ0 , λ1 ≥ 0

let Mj (C ) =

u0 ∈ Cj0 , u1 ∈ Cj1

integer

feasible

set

y

⇒ Pj (C ) := projection of Mj (C ) onto z

⇒ Pj (C ) = conv (C ∩ yj ∈ {0, 1}) and P1...p (C ) = C

102 / 160

Disjunctive Cuts: Example

minimize x | (x − 1/2)2 + (y − 3/4)2 ≤ 1, −2 ≤ x ≤ 2, y ∈ {0, 1}

x,y

x

Cj0

Given ẑ with ŷj 6∈ {0, 1} find separating

hyperplane

(

minimize kz − ẑk

z

⇒

subject to z ∈ Pj (C )

Cj1

y

ẑ = (x̂, ŷ )

103 / 160

Disjunctive Cuts Example

Cj1

Cj0

def

z ∗ = arg min kz − ẑk

z∗

s.t. λ0 u0 + λ1 u1

λ0 + λ1

−0.16

≤ u0

0

−0.47

≤ u1

0

λ0 , λ1

= z

= 1

0.66

≤

1

1.47

≤

1

≥ 0

ẑ = (x̂, ŷ )

104 / 160

Disjunctive Cuts Example

Cj1

Cj0

def

z ∗ = arg min kz − ẑk22

z∗

s.t. λ0 u0 + λ1 u1

λ0 + λ1

−0.16

≤ u0

0

−0.47

≤ u1

0

λ0 , λ1

= z

= 1

0.66

≤

1

1.47

≤

1

≥ 0

ẑ = (x̂, ŷ )

105 / 160

Disjunctive Cuts Example

Cj1

Cj0

def

z ∗ = arg min kz − ẑk∞

z∗

s.t. λ0 u0 + λ1 u1

λ0 + λ1

−0.16

≤ u0

0

−0.47

≤ u1

0

λ0 , λ1

= z

= 1

0.66

≤

1

1.47

≤

1

≥ 0

ẑ = (x̂, ŷ )

106 / 160

Disjunctive Cuts Example

Cj1

Cj0

def

z ∗ = arg min kz − ẑk

z∗

s.t. λ0 u0 + λ1 u1

λ0 + λ1

−0.16

≤ u0

0

−0.47

≤ u1

0

λ0 , λ1

= z

= 1

0.66

≤

1

1.47

≤

1

≥ 0

ẑ = (x̂, ŷ )

NONCONVEX

107 / 160

What to do?

(Stubbs and Mehrotra, 1999)

Look at the perspective of c(z)

P(c(z̃), µ) = µc(z̃/µ)

Think of z̃ = µz

108 / 160

What to do?

(Stubbs and Mehrotra, 1999)

Look at the perspective of c(z)

P(c(z̃), µ) = µc(z̃/µ)

Think of z̃ = µz

Perspective gives a convex reformulation of Mj (C ): Mj (C̃ ), where

µci (z/µ) ≤ 0

C̃ := (z, µ) 0 ≤ µ ≤ 1

0 ≤ x ≤ µU, 0 ≤ y ≤ µ

c(0/0) = 0 ⇒ convex representation

109 / 160

Disjunctive Cuts Example

µ (x/µ − 1/2)2 + (y /µ − 3/4)2 − 1 ≤ 0

x

−2µ ≤ x ≤ 2µ

C̃ = y 0≤y ≤µ

µ 0≤µ≤1 µ

x

x

Cj0

µ

Cj0

Cj1

y

Cj0

Cj1

C1

yµ j

y

x

110 / 160

Example, cont.

C̃j0 = {(z, µ) | yj = 0}

C̃j1 = {(z, µ) | yj = µ}

111 / 160

Example, cont.

C̃j0 = {(z, µ) | yj = 0}

C̃j1 = {(z, µ) | yj = µ}

Take v0 ← µ0 u0 v1 ← µ1 u1

min kz − ẑk

s.t. v0 + v1

µ0 + µ1

(v0 , µ0 )

(v1 , µ1 )

µ0 , µ1

=

=

∈

∈

≥

z

1

C̃j0

C̃j1

0

Solution to example:

∗ x

−0.401

=

y∗

0.780

separating hyperplane: ψ T (z − ẑ), where ψ ∈ ∂kz − ẑk

112 / 160

0

CExample,

j

.198

x

Cont.

Cj1

ψ=

+ 0.

061y

=−

0.03

2

z∗

2x ∗ + 0.5

2y ∗ − 0.75

0.198x + 0.061y ≥ −0.032

ẑ = (x̂, ŷ )

113 / 160

Nonlinear Branch-and-Cut

(Stubbs and Mehrotra, 1999)

Can do this at all nodes of the branch-and-bound tree

Generalize disjunctive approach from MILP

solve one convex NLP per cut

Generalizes Sherali and Adams (1990) and Lovász and Schrijver

(1991)

tighten cuts by adding semi-definite constraint

Stubbs and Mehrohtra (2002) also show how to generate convex

quadratic inequalities, but computational results are not that

promising

114 / 160

Generalized Disjunctive Programming

(Raman and Grossmann,

1994; Lee and Grossmann, 2000)

Consider disjunctive

minimize

x,Y

subject to

NLP

P

fi + f (x)

Yi

¬Y

i

W

ci (x) ≤ 0 Bi x = 0 ∀i ∈ I

fi = γ i

fi = 0

0 ≤ x ≤ U, Ω(Y ) = true, Y ∈ {true, false}p

Application: process synthesis

• Yi represents presence/absence of units

• Bi x = 0 eliminates variables if unit absent

Exploit disjunctive structure

• special branching ... OA/GBD algorithms

115 / 160

Generalized Disjunctive Programming

(Raman and Grossmann,

1994; Lee and Grossmann, 2000)

Consider disjunctive

minimize

x,Y

subject to

NLP

P

fi + f (x)

Yi

¬Y

i

W

ci (x) ≤ 0 Bi x = 0 ∀i ∈ I

fi = γ i

fi = 0

0 ≤ x ≤ U, Ω(Y ) = true, Y ∈ {true, false}p

Big-M formulation (notoriously bad), M > 0:

ci (x) ≤ M(1 − yi )

−Myi ≤ Bi x ≤ Myi

fi = yi γi

Ω(Y ) converted to linear inequalities

116 / 160

Generalized Disjunctive Programming

(Raman and Grossmann,

1994; Lee and Grossmann, 2000)

Consider disjunctive

minimize

x,Y

subject to

NLP

P

fi + f (x)

Yi

¬Y

i

W

ci (x) ≤ 0 Bi x = 0 ∀i ∈ I

fi = γ i

fi = 0

0 ≤ x ≤ U, Ω(Y ) = true, Y ∈ {true, false}p

convex hull representation ...

x = vi1 + vi0 ,

λi1 + λi0 = 1

λi1 ci (vi1 /λi1 ) ≤ 0,

Bi vi0 = 0

0 ≤ vij ≤ λij U,

0 ≤ λij ≤ 1,

fi = λi1 γi

117 / 160

Dealing with Nonconvexities

Functional nonconvexity causes

serious problems.

Branch and bound must have

true lower bound (global

solution)

118 / 160

Dealing with Nonconvexities

Functional nonconvexity causes

serious problems.

Branch and bound must have

true lower bound (global

solution)

Underestimate nonconvex

functions. Solve relaxation.

Provides lower bound.

119 / 160

Dealing with Nonconvexities

Functional nonconvexity causes

serious problems.

Branch and bound must have

true lower bound (global

solution)

Underestimate nonconvex

functions. Solve relaxation.

Provides lower bound.

If relaxation is not exact, then

branch

120 / 160

Dealing with Nonconvex Constraints

If nonconvexity in constraints,

may need to overestimate and

underestimate the function to

get a convex region

121 / 160

Envelopes

f :Ω→R

Convex Envelope (vexΩ (f )):

Pointwise supremum of convex

underestimators of f over Ω.

122 / 160

Envelopes

f :Ω→R

Convex Envelope (vexΩ (f )):

Pointwise supremum of convex

underestimators of f over Ω.

Concave Envelope (cavΩ (f )):

Pointwise infimum of concave

overestimators of f over Ω.

123 / 160

Branch-and-Bound Global Optimization Methods

Under/Overestimate “simple” parts of (Factorable) Functions

individually

Bilinear Terms

Trilinear Terms

Fractional Terms

Univariate convex/concave terms

124 / 160

Branch-and-Bound Global Optimization Methods

Under/Overestimate “simple” parts of (Factorable) Functions

individually

Bilinear Terms

Trilinear Terms

Fractional Terms

Univariate convex/concave terms

General nonconvex functions f (x) can be underestimated over a

region [l, u] “overpowering” the function with a quadratic function

that is ≤ 0 on the region of interest

L(x) = f (x) +

n

X

αi (li − xi )(ui − xi )

i=1

Refs: (McCormick, 1976; Adjiman et al., 1998; Tawarmalani and

Sahinidis, 2002)

125 / 160

Bilinear Terms

The convex and concave envelopes of the bilinear function xy over a

rectangular region

def

R = {(x, y ) ∈ R2 | lx ≤ x ≤ ux , ly ≤ y ≤ uy }

are given by the expressions

vexxyR (x, y ) = max{ly x + lx y − lx ly , uy x + ux y − ux uy }

cavxyR (x, y ) = min{uy x + lx y − lx uy , ly x + ux y − ux ly }

126 / 160

Worth 1000 Words?

xy

127 / 160

Worth 1000 Words?

vexR (xy )

128 / 160

Worth 1000 Words?

cavR (xy )

129 / 160

Summary

MINLP: Good relaxations are important

Relaxations can be improved

Statically: Better formulation/preprocessing

Dynamically: Cutting planes

Nonconvex MINLP:

Methods exist, again based on relaxations

Tight relaxations is an active area of research

Lots of empirical questions remain

130 / 160

Part IV

Implementation and Software

131 / 160

Implementation and Software for MINLP

1

Special Ordered Sets

2

Implementation & Software Issues

132 / 160

Special Ordered Sets of Type 1

SOS1:

P

λi = 1 & at most one λi is nonzero

Example 1: d ∈ {d1 , . . . , dp } discrete diameters

P

⇔ d = λi di and {λ1 , . . . , λp } is SOS1

P

P

⇔ d = λi di and

λi = 1 and λi ∈ {0, 1}

. . . d is convex combination with coefficients λi

Example 2: nonlinear function c(y ) of single integer

P

P

⇔y=

iλi and c =

c(i)λi and {λ1 , . . . , λp } is SOS1

References: (Beale, 1979; Nemhauser, G.L. and Wolsey, L.A., 1988;

Williams, 1993) . . .

133 / 160

Special Ordered Sets of Type 1

SOS1:

P

λi = 1 & at most one λi is nonzero

Branching on SOS1

1

2

reference row a1 < . . . < ap

e.g. diameters

P

fractionality: a := ai λi

a < at

a > a t+1

134 / 160

Special Ordered Sets of Type 1

SOS1:

P

λi = 1 & at most one λi is nonzero

Branching on SOS1

1

2

reference row a1 < . . . < ap

e.g. diameters

P

fractionality: a := ai λi

3

find t : at < a ≤ at+1

4

branch: {λt+1 , . . . , λp } = 0

or {λ1 , . . . , λt } = 0

a < at

a > a t+1

135 / 160

Special Ordered Sets of Type 2

SOS2:

P

λi = 1 & at most two adjacent λi nonzero

Example: Approximation of nonlinear function z = z(x)

z(x)

breakpoints x1 < . . . < xp

function values zi = z(xi )

piece-wise linear

x

136 / 160

Special Ordered Sets of Type 2

SOS2:

P

λi = 1 & at most two adjacent λi nonzero

Example: Approximation of nonlinear function z = z(x)

z(x)

breakpoints x1 < . . . < xp

function values zi = z(xi )

piece-wise linear

P

x=

λi xi

P

z=

λi zi

x

{λ1 , . . . , λp } is SOS2

. . . convex combination of two breakpoints . . .

137 / 160

Special Ordered Sets of Type 2

SOS2:

P

λi = 1 & at most two adjacent λi nonzero

Branching on SOS2

1

2

reference row a1 < . . . < ap

e.g. ai = xi

P

fractionality: a := ai λi

x < at

x > at

138 / 160

Special Ordered Sets of Type 2

SOS2:

P

λi = 1 & at most two adjacent λi nonzero

Branching on SOS2

1

2

reference row a1 < . . . < ap

e.g. ai = xi

P

fractionality: a := ai λi

3

find t : at < a ≤ at+1

4

branch: {λt+1 , . . . , λp } = 0

or {λ1 , . . . , λt−1 }

x < at

x > at

139 / 160

Special Ordered Sets of Type 3

Example: Approximation of 2D function u = g (v , w )

Triangularization of [vL , vU ] × [wL , wU ] domain

1

vL = v1 < . . . < vk = vU

2

wL = w1 < . . . < wl = wU

3

function uij := g (vi , wj )

4

λij weight of vertex (i, j)

140 / 160

Special Ordered Sets of Type 3

Example: Approximation of 2D function u = g (v , w )

Triangularization of [vL , vU ] × [wL , wU ] domain

1

vL = v1 < . . . < vk = vU

2

wL = w1 < . . . < wl = wU

3

function uij := g (vi , wj )

4

λij weight of vertex (i, j)

v=

w

P

λij vi

P

w=

λij wj

P

u = λij uij

v

141 / 160

Special Ordered Sets of Type 3

Example: Approximation of 2D function u = g (v , w )

Triangularization of [vL , vU ] × [wL , wU ] domain

1

vL = v1 < . . . < vk = vU

2

wL = w1 < . . . < wl = wU

3

function uij := g (vi , wj )

4

λij weight of vertex (i, j)

v=

w

P

λij vi

P

w=

λij wj

P

u = λij uij

P

1 = λij is SOS3 . . .

v

142 / 160

Special Ordered Sets of Type 3

SOS3:

P

λij = 1 & set condition holds

w

1

2

3

v=

P

λij vi ... convex combinations

P

w=

λij wj

P

u = λij uij

{λ11 , . . . , λkl } satisfies set condition

v

⇔ ∃ trangle ∆ : {(i, j) : λij > 0} ⊂ ∆

violates set condn

i.e. nonzeros in single triangle ∆

143 / 160

Branching on SOS3

λ violates set condition

w

compute

P centers:

v̂ = Pλij vi &

ŵ =

λij wi

find s, t such that

vs ≤ v̂ < vs+1 &

ws ≤ ŵ < ws+1

branch on v or w

v

violates set condn

144 / 160

Branching on SOS3

λ violates set condition

compute

P centers:

v̂ = Pλij vi &

ŵ =

λij wi

find s, t such that

vs ≤ v̂ < vs+1 &

ws ≤ ŵ < ws+1

branch on v or w

vertical branching:

X

L

λij = 1

X

λij = 1

R

145 / 160

Branching on SOS3

λ violates set condition

compute

P centers:

v̂ = Pλij vi &

ŵ =

λij wi

find s, t such that

vs ≤ v̂ < vs+1 &

ws ≤ ŵ < ws+1

branch on v or w

horizontal branching:

X

T

λij = 1

X

λij = 1

B

146 / 160

Extension to SOS-k

Example: electricity transmission network:

c(x) = 4x1 − x22 − 0.2 · x2 x4 sin(x3 )

(Martin et al., 2005) extend SOS3 to SOSk models for any k

⇒ function with p variables on N grid needs N p λ’s

147 / 160

Extension to SOS-k

Example: electricity transmission network:

c(x) = 4x1 − x22 − 0.2 · x2 x4 sin(x3 )

(Martin et al., 2005) extend SOS3 to SOSk models for any k

⇒ function with p variables on N grid needs N p λ’s

Alternative (Gatzke, 2005):

exploit computational graph

' automatic differentiation

148 / 160

Extension to SOS-k

Example: electricity transmission network:

c(x) = 4x1 − x22 − 0.2 · x2 x4 sin(x3 )

(Martin et al., 2005) extend SOS3 to SOSk models for any k

⇒ function with p variables on N grid needs N p λ’s

Alternative (Gatzke, 2005):

exploit computational graph

' automatic differentiation

only need SOS2 & SOS3 ...

replace nonconvex parts

149 / 160

Extension to SOS-k

Example: electricity transmission network:

c(x) = 4x1 − x22 − 0.2 · x2 x4 sin(x3 )

(Martin et al., 2005) extend SOS3 to SOSk models for any k

⇒ function with p variables on N grid needs N p λ’s

Alternative (Gatzke, 2005):

exploit computational graph

' automatic differentiation

only need SOS2 & SOS3 ...

replace nonconvex parts

piece-wise polyhedral approx.

150 / 160

Software for MINLP

Outer Approximation: DICOPT++ (& AIMMS)

NLP solvers: CONOPT, MINOS, SNOPT

MILP solvers: CPLEX, OSL2

Branch-and-Bound Solvers: SBB & MINLP

NLP solvers: CONOPT, MINOS, SNOPT & FilterSQP

variable & node selection; SOS1 & SOS2 support

Global MINLP: BARON & MINOPT underestimators & branching

CPLEX, MINOS, SNOPT, OSL

Online Tools: MINLP World, MacMINLP & NEOS MINLP World

www.gamsworld.org/minlp/

NEOS server www-neos.mcs.anl.gov/

151 / 160

COIN-OR

http://www.coin-or.org

COmputational INfrastructure for Operations Research

A library of (interoperable) software tools for optimization

A development platform for open source projects in the OR

community

Possibly Relevant Modules:

OSI: Open Solver Interface

CGL: Cut Generation Library

CLP: Coin Linear Programming Toolkit

CBC: Coin Branch and Cut

IPOPT: Interior Point OPTimizer for NLP

NLPAPI: NonLinear Programming API

152 / 160

MINLP with COIN-OR

New implementation of LP/NLP based BB

MIP branch-and-cut: CBC & CGL

NLPs: IPOPT interior point ... OK for NLP(yi )

New hybrid method:

solve more NLPs at non-integer yi

⇒ better outer approximation

allow complete MIP at some nodes

⇒ generate new integer assignment

... faster than DICOPT++, SBB

simplifies to OA and BB at extremes ... less efficient

... see Bonami et al. (2005) ... coming in 2006.

153 / 160

Conclusions

MINLP rich modeling paradigm

◦ most popular solver on NEOS

Algorithms for MINLP:

◦ Branch-and-bound (branch-and-cut)

◦ Outer approximation et al.

154 / 160

Conclusions

MINLP rich modeling paradigm

◦ most popular solver on NEOS

Algorithms for MINLP:

◦ Branch-and-bound (branch-and-cut)

◦ Outer approximation et al.

“MINLP solvers lag 15 years behind MIP solvers”

⇒ many research opportunities!!!

155 / 160

Part V

References

156 / 160

C. Adjiman, S. Dallwig, C. A. Floudas, and A. Neumaier. A global optimization method, aBB,

for general twice-differentiable constrained NLPs - I. Theoretical advances. Computers and

Chemical Engineering, 22:1137–1158, 1998.

I. Akrotirianakis, I. Maros, and B. Rustem. An outer approximation based branch-and-cut

algorithm for convex 0-1 MINLP problems. Optimization Methods and Software, 16:

21–47, 2001.

E. Balas. Disjunctive programming. In Annals of Discrete Mathematics 5: Discrete

Optimization, pages 3–51. North Holland, 1979.

E. Balas, S. Ceria, and G. Corneujols. A lift-and-project cutting plane algorithm for mixed 0-1

programs. Mathematical Programming, 58:295–324, 1993.

E. M. L. Beale. Branch-and-bound methods for mathematical programming systems. Annals

of Discrete Mathematics, 5:201–219, 1979.

P. Bonami, L. Biegler, A. Conn, G. Cornuéjols, I. Grossmann, C. Laird, J. Lee, A. Lodi,

F. Margot, N. Saaya, and A. Wächter. An algorithmic framework for convex mixed integer

nonlinear programs. Technical report, IBM Research Division, Thomas J. Watson Research

Center, 2005.

B. Borchers and J. E. Mitchell. An improved branch and bound algorithm for Mixed Integer

Nonlinear Programming. Computers and Operations Research, 21(4):359–367, 1994.

R. Borndörfer and R. Weismantel. Set packing relaxations of some integer programs.

Mathematical Programming, 88:425 – 450, 2000.

M. T. Çezik and G. Iyengar. Cuts for mixed 0-1 conic programming. Mathematical

Programming, 2005. to appear.

H. Crowder, E. L. Johnson, and M. W. Padberg. Solving large scale zero-one linear

programming problems. Operations Research, 31:803–834, 1983.

157 / 160

D. De Wolf and Y. Smeers. The gas transmission problem solved by an extension of the

simplex algorithm. Management Science, 46:1454–1465, 2000.

M. Duran and I. E. Grossmann. An outer-approximation algorithm for a class of mixed–integer

nonlinear programs. Mathematical Programming, 36:307–339, 1986.

A. M. Geoffrion. Generalized Benders decomposition. Journal of Optimization Theory and

Applications, 10:237–260, 1972.

I. E. Grossmann and R. W. H. Sargent. Optimal design of multipurpose batch plants.

Ind. Engng. Chem. Process Des. Dev., 18:343–348, 1979.

Harjunkoski, I., Westerlund, T., Pörn, R. and Skrifvars, H. Different transformations for

solving non-convex trim-loss problems by MINLP. European Journal of Opertational

Research, 105:594–603, 1998.

Jain, V. and Grossmann, I.E. Cyclic scheduling of continuous parallel-process units with

decaying performance. AIChE Journal, 44:1623–1636, 1998.

G. R. Kocis and I. E. Grossmann. Global optimization of nonconvex mixed–integer nonlinear

programming (MINLP) problems in process synthesis. Industrial Engineering Chemistry

Research, 27:1407–1421, 1988.

S. Lee and I. Grossmann. New algorithms for nonlinear disjunctive programming. Computers

and Chemical Engineering, 24:2125–2141, 2000.

S. Leyffer. Integrating SQP and branch-and-bound for mixed integer nonlinear programming.

Computational Optimization & Applications, 18:295–309, 2001.

L. Lovász and A. Schrijver. Cones of matrices and setfunctions, and 0-1 optimization. SIAM

Journal on Optimization, 1, 1991.

H. Marchand and L. Wolsey. The 0-1 knapsack problem with a single continuous variable.

Mathematical Programming, 85:15–33, 1999.

158 / 160

A. Martin, M. Möller, and S. Moritz. Mixed integer models for the stationary case of gas

network optimization. Technical report, Darmstadt University of Technology, 2005.

G. P. McCormick. Computability of global solutions to factorable nonconvex programs: Part

I—Convex underestimating problems. Mathematical Programming, 10:147–175, 1976.

Nemhauser, G.L. and Wolsey, L.A. Integer and Combinatorial Optimization. John Wiley,

New York, 1988.

M. Padberg, T. J. Van Roy, and L. Wolsey. Valid linear inequalities for fixed charge problems.

Operations Research, 33:842–861, 1985.

I. Quesada and I. E. Grossmann. An LP/NLP based branch–and–bound algorithm for convex

MINLP optimization problems. Computers and Chemical Engineering, 16:937–947, 1992.

Quist, A.J. Application of Mathematical Optimization Techniques to Nuclear Reactor

Reload Pattern Design. PhD thesis, Technische Universiteit Delft, Thomas Stieltjes

Institute for Mathematics, The Netherlands, 2000.

R. Raman and I. E. Grossmann. Modeling and computational techniques for logic based

integer programming. Computers and Chemical Engineering, 18:563–578, 1994.

H. D. Sherali and W. P. Adams. A hierarchy of relaxations between the continuous and

convex hull representations for zero-one programming problems. SIAM Journal on Discrete

Mathematics, 3:411–430, 1990.

O. Sigmund. A 99 line topology optimization code written in matlab. Structural

Multidisciplinary Optimization, 21:120–127, 2001.

R. Stubbs and S. Mehrohtra. Generating convex polynomial inequalities for mixed 0-1

programs. Journal of Global Optimization, 24:311–332, 2002.

R. A. Stubbs and S. Mehrotra. A branch–and–cut method for 0–1 mixed convex

programming. Mathematical Programming, 86:515–532, 1999.

159 / 160

M. Tawarmalani and N. V. Sahinidis. Convexification and Global Optimization in Continuous

and Mixed-Integer Nonlinear Programming: Theory, Algorithms, Software, and

Applications. Kluwer Academic Publishers, Boston MA, 2002.

J. Viswanathan and I. E. Grossmann. Optimal feed location and number of trays for

distillation columns with multiple feeds. I&EC Research, 32:2942–2949, 1993.

Westerlund, T., Isaksson, J. and Harjunkoski, I. Solving a production optimization problem in

the paper industry. Report 95–146–A, Department of Chemical Engineering, Abo Akademi,

Abo, Finland, 1995.

Westerlund, T., Pettersson, F. and Grossmann, I.E. Optimization of pump configurations as

MINLP problem. Computers & Chemical Engineering, 18(9):845–858, 1994.

H. P. Williams. Model Solving in Mathematical Programming. John Wiley & Sons Ltd.,

Chichester, 1993.

160 / 160