Elementary Subtraction


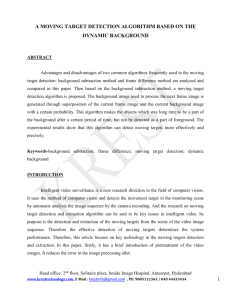

Journal of Experimental Psychology: Learning, Memory, and Cognition, 2003, Vol. 29, No. 6, 1339–1352. Copyright 2003 by the American Psychological Association, Inc. 0278-7393/03/$12.00 DOI: 10.1037/0278-7393.29.6.1339

Donald J. Seyler (Cleveland State University), Elizabeth P. Kirk (Florida State University), and Mark H. Ashcraft (Cleveland State University)

Four experiments examined performance on the 100 “basic facts” of subtraction and found a discontinuous “stair step” function for reaction times and errors beginning with 11 − n facts. Participants’ immediate retrospective reports of nonretrieval showed the same pattern in Experiment 3. The degree to which elementary subtraction depends on working memory (WM) was examined in a dual-task paradigm in Experiment 4. The reconstructive processing used with larger basic facts was strongly associated with greater WM disruption, as evidenced by errors in the secondary task; this was especially the case for participants with lower WM spans. The results support the R. S. Siegler and E. Jenkins (1989) distribution of associations model, although discriminating among the alternative solution processes appears to be a serious challenge.

Donald J. Seyler and Mark H. Ashcraft, Department of Psychology, Cleveland State University; Elizabeth P. Kirk, Department of Psychology, Florida State University. Preliminary reports on Experiments 1, 2, and 4 were presented, respectively, at the annual meeting of the Midwestern Psychological Association, May 1999; at the annual meeting of the Psychonomic Society, November 2001; and at the annual meeting of the Midwestern Psychological Association, May 2002. Experiment 4 was conducted as part of Donald J. Seyler’s master’s thesis, submitted to Cleveland State University, and was partially funded by a Council of Graduate Departments of Psychology/American Psychological Foundation Research Scholarship awarded to Donald J. Seyler. We are grateful to Candice Coker Morey for assistance in data collection for Experiment 3. Correspondence concerning this article should be addressed to Mark H. Ashcraft, Department of Psychology, Cleveland State University, Cleveland, Ohio 44115. E-mail: m.ashcraft@csuohio.edu

Mathematical cognition has become a popular research topic in the past 30 years. The appeal of the area is obvious: A rich body of knowledge about arithmetic and the number system is acquired, with some difficulty, during early education and forms the basis for higher mathematics, problem solving, and math reasoning. This acquired knowledge amplifies the simpler understanding of numerosity and comparison evidenced by lower mammals (e.g., Meck & Church, 1983), infants (Wynn, 1992), and adults (Whalen, Gallistel, & Gelman, 1999) in nonverbal counting or comparison tasks. Despite the apparent ease with which we decide that 2 is smaller than 3 or that 6 × 8 = 48, the literature attests to the complexity of processing involved in such seemingly elementary tasks (e.g., Ashcraft, 1995; Banks, 1977; Dehaene & Cohen, 1995; Geary, 1994).

Within the area of mental arithmetic, considerable attention has been devoted to the study of simple addition and multiplication, especially processing of the 100 “basic facts” of addition and multiplication (0 + 0/0 × 0 up through 9 + 9/9 × 9). The signature finding in these studies (see Ashcraft, 1995) is the problem size or problem difficulty effect: As the numbers in a problem grow larger, so too do reaction times (RTs) and error rates. For instance, RTs and errors will be larger for 9 + 7 or 8 × 6 than for 5 + 3 or 4 × 2.

Furthermore, the slope and shape of the problem size effect have been used as evidence for a variety of important (and sometimes contradictory) explanations of mental arithmetic performance, including counting-based (Groen & Parkman, 1972) versus retrieval-based (Ashcraft & Battaglia, 1978) performance in addition, a developmental shift from counting to retrieval in addition (Ashcraft & Fierman, 1982) and multiplication (Cooney, Swanson, & Ladd, 1988), an interference-based explanation of performance in multiplication (Campbell, 1987), and a mixture of retrieval and reconstructive processing (LeFevre, Sadesky, & Bisanz, 1996; Siegler & Shrager, 1984). Thus, all serious theoretical work in this area has addressed the problem size effect as the first and most important empirical phenomenon to explain.

Strangely, little of the accumulated research has focused on elementary subtraction, the basic facts of subtraction taught almost universally in second grade. There have been several developmental studies of simple subtraction (e.g., Robinson, 2001; Siegler, 1987; Woods, Resnick, & Groen, 1975; see Fuson, 1992, for a review), and there is the well-known work on the “bugs” in children’s learning of the complex subtraction algorithm for borrowing (VanLehn, 1990). There is also increasing use of subtraction in studies of neural representation and functioning, with provocative evidence that subtraction and addition may rely on different neural substrates than multiplication (e.g., Cohen & Dehaene, 2000; Lee, 2000). However, few studies have examined the basic processing characteristics of simple subtraction among normal adults, and none have discussed the results at more than a moderate level of detail. Given the importance of the shape of the problem size effect and the absence of detailed information in the literature about this effect in subtraction, our main purpose here was simply to conduct and present foundation work on the processing characteristics of simple subtraction.

Two published studies that examined subtraction do deserve mention here. The most thorough testing of adults’ subtraction (Campbell & Xue, 2001) was part of a large project on all four arithmetic operations that focused on cross-cultural comparisons of Canadian and Chinese participants. Campbell and Xue found a significant problem size effect among all samples and across all four arithmetic operations, as well as a significant increase in reports of nonretrieval across problem sizes. Likewise, Geary, Frensch, and Wiley (1993) examined simple and complex subtraction but were especially interested in younger versus older adult samples and the performance characteristics of the various processing strategies these participants reported. Geary et al. found that two strategies, along with retrieval, were common in subtraction: addition reference (e.g., referring to 3 + 6 = 9 to solve 9 − 3) and counting down (e.g., for 8 − 2, implicitly counting down “eight, seven, six”). They also noted that trials reported as involving the use of reconstructive strategies were considerably slower and more error prone than those reported as retrieval trials (see also Campbell & Xue’s, 2001, Canadian sample). Our intent in the present studies was to provide additional information on such findings in the context of a full presentation of results on adults’ subtraction.

Given the high level of familiarity and fluent retrieval processing characteristic of simple addition (e.g., Ashcraft, 1995; but see LeFevre et al., 1996) and the inverse mathematical relationship between addition and subtraction, the most straightforward prediction for adults’ subtraction is that it will resemble addition performance in all essential respects.
We expected a monotonically increasing RT function across increasing problem sizes, with mean RTs for small problems in the 800–1,000-ms range and mean RTs for large problems in the 1,300–1,400-ms range (these values were derived from Campbell & Xue, 2001, Table 2, Canadian sample).

Experiments 1 and 2

Experiment 1 presented the 100 basic facts of subtraction to a sample of college adults. Participants were shown problems such as 15 − 8, in column format, and were timed as they produced their answers verbally. Because the results were surprising, we conducted the study a second time, 2 years later, using a different experimenter. The results were very similar, so the two experiments are reported together.

Method

Participants

Twenty-six participants, recruited from undergraduate psychology classes at Cleveland State University, received course credit for taking part in Experiment 1; 20 participants were tested in Experiment 2. Participants completed paper-and-pencil tests of math and test anxiety, followed by a computer-based test of simple subtraction. Against the remote possibility that administering the anxiety tests first had in some way aroused participants’ suspicions and influenced their performance, the subtraction task preceded the math anxiety instrument in Experiment 2. Sessions lasted approximately 40 min.

Apparatus

The experimental task was performed on ordinary laboratory personal computers through the use of the Micro Experimental Laboratory software package (Version 2.01; Psychology Software Tools, Pittsburgh, PA; Schneider, 1988). The software presented all task instructions on the screen and recorded RTs automatically through a microphone and voice key apparatus. At the end of each trial, the experimenter keyed in the participant’s verbal response to the subtraction problem, and the software scored responses for accuracy.

Stimuli

In a subtraction problem (m − s = r), the first value is the minuend (m), the top number in a column-format problem.
It is the larger number, the one being subtracted from. The value being subtracted is the subtrahend (s), and the answer in the problem is termed the remainder (r) or difference. The basic facts of subtraction are the inverses of the 100 basic addition facts. Using the same labels, the inverse of m − s = r would be s + r = m: augend (s, the first operand) plus addend (r, the second operand) equals sum (m). The basic facts of subtraction can be divided into nearly equal-sized groups of “small” and “large” problems by separating the problems into those with one- versus two-digit minuends (0–9 vs. 10–18); under this scheme, there are 55 small problems and 45 large problems. For our initial analyses, this was the partition that defined small versus large problems. Because we tested the inverses of the 100 basic addition facts, all subtraction problems had one-digit subtrahends and remainders, and the smallest remainder (correct answer) was 0.

Procedure

Participants were tested individually, with the experimenter present throughout the experimental session. Participants were provided a brief explanation of the experiment, completed the math and test anxiety scales and the subtraction task, and were then debriefed and excused; in Experiment 2, subtraction preceded the math anxiety test, and the test anxiety instrument was dropped. We administered 10 practice trials, with the problems drawn randomly from the 100 basic facts, to familiarize participants with the articulation-based RT procedure and the need to avoid extraneous vocalizations (e.g., “um” and “uh”). The instructions placed equal emphasis on speed and accuracy of responding. Each participant responded to all 100 basic subtraction facts, in an order randomly determined by the software. A short rest break was provided to participants halfway through the experimental trials. On each trial, participants saw a problem in column form, centered on the screen, and stated the answer out loud.
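
The stimulus set described under Stimuli can be enumerated directly. The following is a minimal illustrative sketch, not part of the original method: it builds the 100 basic subtraction facts as inverses of the addition facts, applies the one- versus two-digit minuend partition, and counts how many facts share each minuend. The variable and function names (`facts`, `small`, `large`, `facts_per_minuend`, `pearson`) are assumptions introduced here for illustration. As a side check, it also computes the correlation between minuend and subtrahend over the 100 facts, which a footnote later in the article gives as r = .707.

```python
from collections import Counter
from math import sqrt

# Each basic subtraction fact m - s = r is the inverse of a basic addition
# fact s + r = m, so enumerating s and r over 0..9 yields all 100 facts.
facts = [(s + r, s, r) for s in range(10) for r in range(10)]  # (m, s, r)

# Small vs. large partition: one-digit (0-9) vs. two-digit (10-18) minuends.
small = [f for f in facts if f[0] <= 9]
large = [f for f in facts if f[0] >= 10]

# Number of facts sharing each minuend (0 through 18).
facts_per_minuend = Counter(m for m, _, _ in facts)


def pearson(xs, ys):
    """Plain Pearson correlation, written out to avoid extra dependencies."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


minuends = [m for m, _, _ in facts]
subtrahends = [s for _, s, _ in facts]

print(len(facts), len(small), len(large))        # 100 55 45
print(sorted(facts_per_minuend.items()))         # (minuend, count) pairs
print(round(pearson(minuends, subtrahends), 3))  # 0.707
```

The per-minuend counts rise to a peak in the middle of the range and fall to a single fact at minuend 18 (18 − 9 = 9), which is why means at the extreme minuends rest on fewer observations than those near the middle.
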

Results

Spoiled trials, on which the voice key did not register the participant’s original vocalization or an extraneous noise triggered the key, accounted for 129 of the observations (4.9% of the total possible observations). An additional 41 scores (1.6% of the total possible) were eliminated when they exceeded the p < .01 criterion for extreme scores (Dixon, 1953). Extreme scores were screened by examining all of a participant’s correct trial RTs within a problem size category. The comparable values in Experiment 2 were 5.4% for spoiled trials and 1.5% for outliers.

Chronometric Measures

The grand mean for correct RTs was 1,136 ms. The participants’ average RT was 908 ms for small problems and 1,363 ms for large problems; these values were remarkably similar to those reported by Campbell and Xue (2001): 947 ms and 1,386 ms for small and large problems, respectively. The 455-ms problem size effect was significant in a simple within-subjects analysis of variance (ANOVA), F(1, 25) = 74.24, MSE = 36,246 (unless otherwise reported, all significant effects reported here achieved at least the p < .05 level of significance). The overall error rate was 8.4%. Mean error rates were 2.7% for small problems and 14.2% for large problems, a significant difference, F(1, 25) = 60.99, MSE = 28.02. These rates were somewhat higher than those reported by Campbell and Xue for subtraction (3.1% and 7%, respectively, for small and large problems). In Experiment 2, small problems averaged 873 ms, with 1.6% errors, and large problems averaged 1,351 ms, with 11.1% errors. Both problem size effects were significant: RTs, F(1, 19) = 48.62, MSE = 47,048.1, and errors, F(1, 19) = 19.49, MSE = 4,607.

We examined the problem size effect further, as a function of the different operands in the problem, in an attempt to go beyond the relatively crude two-level ANOVA factor.
When RT was examined by remainder, a relatively ordinary problem size effect was apparent, with RT increasing in linear fashion from the 800-ms to the 1,400-ms level; remainder correlated .63 with RT for the 100 problems. A similar increase was found as a function of subtrahend, which correlated .42 with problem RT. Regression analysis revealed a stronger relationship between RT and minuend (r = .74); when the minuend was included in the regression model, adding subtrahend or remainder contributed only 2% to the variance accounted for.

Quite surprisingly, the RT pattern for minuend was not a continuously increasing, monotonic function. Instead, a pronounced jump in RT was apparent, beginning with minuend 11. Fully 25 of the 26 participants showed the stair-step or discontinuity pattern in their RTs, 20 of them at minuend 11 and the remaining 5 at either 12 or 13. Computing the difference in RT for these adjacent minuends (e.g., 10 vs. 11), the smallest increase for the 25 participants was 172 ms, and the largest was 2,071 ms. The same pattern was obtained in Experiment 2, in which all 20 participants showed the discontinuity. Figure 1 displays mean RT profiles across minuends for both experiments.

Figure 2. Mean error percentages, by minuend, for the elementary subtraction facts from Experiments 1 and 2.

Error rates in both experiments (Figure 2) also revealed the stair-step function, with a major discontinuity at minuend 11 (not surprisingly, the same stair-step function was also found with standard deviations: from 200–300 ms for small problems but from 600–900 ms for large problems). The substantial jump in these measures, occurring at the same point in the minuends, signals an important change in underlying mental processing. One likely interpretation is that performance on the larger subtraction facts involves a mixture of processing operations.
In other words, relative uniformity of mental processes typical with smaller problems may have given way at the larger problems to a mixture of solution processes, similar to LeFevre et al.’s (1996) interpretation of patterns in simple addition.¹ The tent-shaped frequency distribution of problems across minuends means that problems with minuends 9 and 10 are the most frequent in the basic subtraction facts, with minuends 8 and 11 the next most frequent, and so forth. This rules out the possibility that the discontinuity at minuend 11 might be due to reduced sample size or some other statistical artifact. On the other hand, there was a pronounced decrease at minuend 18 for both performance measures, in both experiments, surely because only one basic subtraction fact has this minuend (18 − 9 = 9). This would reduce the stability of the mean from a statistical standpoint, but it would also reduce the uncertainty of responding from the participants’ standpoint (i.e., minuend 18 does not participate in several subtraction facts the way that minuends 11 and 12 do).

Figure 1. Mean reaction times (RTs), by minuend, for the elementary subtraction facts from Experiments 1 and 2.

¹ Although not as pronounced, the problem size effect in LeFevre et al.’s addition data (see especially their Figures 1 and 2) demonstrates a discontinuity, as do their unpublished subtraction data (J. LeFevre, personal communication, October 24, 2002).

Error Analysis

As shown in Table 1, errors were most frequently within 2 numbers of the correct answer for both small and large problems. Incorrect answers wrong by more than 2 became very infrequent, although there was a slight increase in answers wrong by 4 or more within small problems. Inspection showed that these errors were almost invariably of two types: repetition of either the minuend or subtrahend (e.g., 9 − 6 = 9 or 12 − 9 = 9) or what seemed to be a counting up or down association to the subtrahend (e.g., 11 − 3 = 4 or 12 − 9 = 8). We judged the three instances of “zero errors” (e.g., 4 − 0 = 0) to be repetition errors instead of confusion with the zero rule from multiplication, because no other errors seemed to stem from confusion with other arithmetic operations.

Table 1. Frequencies and Percentages of Errors in Experiments 1 and 2, Tallied Separately for Small (n = 55) and Large (n = 45) Problems

| Answers wrong by ± | Small: No. | Small: % | Large: No. | Large: % |
|---|---|---|---|---|
| 1 | 27 | 51.9 | 126 | 57.8 |
| 2 | 7 | 13.5 | 66 | 30.2 |
| 3 | 3 | 5.8 | 14 | 6.4 |
| 4 | 9 | 17.3 | 8 | 3.7 |
| 5 | 3 | 5.8 | 3 | 1.4 |
| 6 | 3 | 5.8 | 1 | 0.5 |
| Total | 52 | 100 | 218 | 100 |

Correlation Findings

For both experiments, we correlated RTs, error rates, standard deviations, and the two anxiety measures; we also included each participant’s RT difference between small and large problems (i.e., the size of each participant’s stair step). Because only the customary patterns were observed (e.g., r = .84 between RTs and standard deviations and r = .33 between math and test anxiety), we do not consider these results further.

Summary

There is a strong problem size effect in subtraction, just as in the other arithmetic operations, although the size of the effect is larger than in addition or multiplication. The shape of the problem size effect, however, is dramatically different from the customary finding of a continuous, monotonic function. All dependent variables examined here showed a pronounced discontinuity at minuend 11, signaling a substantial shift in processing between smaller and larger subtraction facts. Smaller facts, with short latencies and low error rates and variability, probably rely quite heavily on simple retrieval from long-term memory. Larger facts, in contrast, seem not to be processed in such a homogeneous fashion.
Instead, they seem to call forth a mixture of processes, probably with relatively greater reliance on reconstructive strategies. To our knowledge, every study that has examined strategy-based trials separately has shown them to be slower and far more prone to error than retrieval (e.g., Campbell & Xue, 2001; Geary et al., 1993; LeFevre et al., 1996; Siegler, 1987). Interestingly, however, none of the published studies has noted both the unusually shaped problem size effect and the degree of discontinuity that we report here. As an archival resource, we present problem-by-problem results (RTs, error rates, and standard deviations) in the Appendix.²

Experiment 3

Our interpretation of the stair-step function in Experiments 1 and 2 is that participants were relying on strategies for problem solution on some appreciable portion of the trials, rather than simpler retrieval from long-term memory. To test this directly, we had participants in Experiment 3 respond in the standard timed subtraction task and then provide immediate verbal reports on their solution processes. We expected to find reconstructive strategies such as counting and transformation being used far more heavily on the larger facts, corresponding to the elevated portion of the problem size function beginning at minuend 11.

An earlier study (Kirk & Ashcraft, 2001) examined many of the methodological challenges involved in collecting valid, reliable math strategy reports. We concluded there that math strategy research could provide valuable insight into the cognitive processing of basic arithmetic but that research involving verbal strategy reports must be done in accordance with recommended methodology (see Ericsson & Simon, 1993, for a detailed review of these issues) and, in particular, under neutral experimental instructions.
There is an extensive literature on the use of verbal reports in cognitive tasks (e.g., see Crutcher, 1994; Ericsson & Simon, 1993; Payne, 1994; Russo, Johnson, & Stephens, 1989; Wilson, 1994) that reflects a general consensus on two main sources of concern when such reports are used: veridicality and reactivity (for a thorough discussion of these issues in the context of a math task, see Kirk & Ashcraft, 2001). Veridicality concerns whether verbal reports accurately reflect underlying cognitive processes. Ericsson and Simon (1993) cited two types of cognitive processes that cannot be tapped by verbal reports: thoughts that either are not already in or cannot be easily transferred into a verbal code and processes that are unmediated and highly automated. Only information that enters short-term memory and is attended can be reported; thus, verbal reports are informative only for sequential, time-ordered processes (Payne, 1994). Note that if two processes occur in parallel, such as relatively rapid retrieval from long-term memory along with a slower, strategy-based solution (e.g., Ashcraft, 1992; Compton & Logan, 1991), the verbal report will likely describe the slower process, even though the faster process governs the overt, timed response. The converging time and accuracy measures included here were intended to address these issues. Reactivity, an issue of potentially greater concern, refers to the possibility that the requirement to give verbal reports may alter participants’ thought processes. 
For instance, having to generate a verbal report might induce participants to rely on a slower but more easily described process for problem solution or result in participants feeling obligated to report a strategy they had not used (i.e., a situation in which participants respond to the demand characteristics of the experiment). Kirk and Ashcraft (2001) demonstrated that biased instructions could have a similar, reactive effect on verbal reports and performance. Awareness of the need to report may also consume processing resources and may bias participants to trade speed for accuracy (Russo et al., 1989). Any of these effects should cause discrepancies between verbal reports and other performance measures.

² We are grateful to an anonymous reviewer for reminding us that many other processes, such as encoding and retrieval, vary in their time to completion as a function of numerical magnitude and will therefore be part of the increasing RT profile across minuends (see Banks, 1977, for example, on magnitude, distance, and congruity effects). Indeed, as noted by several authors, even the frequency with which we encounter numbers, both as digits and as words, varies inversely with magnitude (Kučera & Francis, 1967). Thus, problems with larger minuends here also tend to have larger subtrahends and remainders (r = .707 for both correlations with minuend), and processes not directly related to subtraction will contribute to latencies and errors. On the other hand, magnitude and distance effects tend to be small relative to the problem size effects found here. The answers that participants generated in the present tasks varied from 0–9, a range showing tremendous variation in occurrence frequency (Kučera & Francis, 1967) but only minimal variation in RT and errors here; word frequency differences for values 11 through 18 are minimal, although these minuends showed large effects here.

Consistent with the methodology used in Kirk and Ashcraft (2001), we included a silent control condition here as a direct check on such reactivity effects. We also gave participants appropriate training in providing verbal reports, along with experimental instructions designed to avoid bias and demand characteristics.

Method

Participants

Forty-five participants from undergraduate psychology courses at Florida State University were tested in exchange for credit in their classes.

Materials

Participants completed the same demographic information sheet and math anxiety scale used in Experiments 1 and 2. They also completed a standard math fluency measure (French, Ekstrom, & Price, 1963) to assess their ability to work on basic arithmetic operations with both speed and accuracy. Participants performed the same simple subtraction task used in the first two experiments and gave verbal reports after each trial.

Procedure

The experiment consisted of two sessions, spaced 1 week apart. In Session 1, 30 participants were randomly assigned to the verbal report condition, and the remaining 15 were assigned to a silent control condition. They were given the instructions and verbal report training described subsequently, after which they completed the subtraction task and ancillary instruments. They reported to the laboratory the following week for Session 2, designed to explore verbal reporting characteristics under instructions emphasizing either speed or accuracy, with half of the participants assigned to each emphasis. The 15 silent control participants performed silently again, under the same instructions as their first session (i.e., equal emphasis on speed and accuracy). The ancillary measures were also readministered in the second session, and the order of computer and ancillary measures was counterbalanced across sessions.

Instructions and Verbal Report Training

Immediately before the subtraction task, all participants were given instructions and practice in how to provide concurrent and retrospective verbal reports. The report training instructions (Ericsson & Kirk, 2000, 2001) were designed to facilitate valid, reliable verbal reports on short RT stimuli. When originally developed and tested on the basic addition facts, they yielded persuasive evidence of veridicality and reliability. For example, intercoder reliability was high, there were no significant differences between reporting and control groups on either latencies or errors, and there were discrepancies among untrained participants between the strategies they reported and their RT and accuracy profiles, as compared with consistent, converging patterns of reports, RTs, and accuracy among trained participants (Ericsson & Kirk, 2001).

The experimenter conducted report training by reading explanatory instructions and asking participants to answer practice questions with concurrent, think-aloud reports. On some problems, participants were also immediately asked to give a retrospective report of the exact thoughts they had from stimulus onset until answering the practice question. Some practice questions could be answered by direct retrieval, and some required multistep solutions. Report training averaged 15–25 min in Session 1 and 5–10 min on an abbreviated set of instructions in Session 2. To avoid biasing participants’ reports, no examples of strategies or reports were provided during training.

The practice problems emphasized two points. First, questions solved by direct access demonstrated that it was acceptable to have nothing to report beyond the problem answer. Second, questions solved by multistep solutions helped participants learn to distinguish between reports of actual thoughts, on the one hand, and summaries, explanations, or other nonveridical accounts of their thinking, on the other.
That is, participants learned that a valid retrospective report needed to resemble their concurrent report of the problem. Emphasizing these points addressed the most serious threats to validity: reporting processes that did not occur, summarizing or interpreting processes that did occur, and altering routine problem-solving processes to have something to report.

After this training, report participants were told that they would be giving only retrospective reports on the main experiment. Control participants were told that they would not be giving verbal reports at all but would instead repeat the problem and answer on each trial. All participants were told that they should solve the subtraction problems as quickly and accurately as possible and then report the answer out loud. Verbal reports were tape recorded for later transcription.

Verbal Report Coding

When participants reported only the problem answer, the trial was scored as “retrieval.” So-called “addition reference” solutions indicated that the problem was solved with reference to the corresponding basic addition fact; for example, in the case of 15 − 8, a participant might respond “7 + 8 = 15, so 7.” Because the participant had to first retrieve the subtraction problem answer, 7, to formulate the addition fact 7 + 8, these trials were also scored as “retrieval.” A report was scored as “counting” if a participant reported an ascending or descending counting sequence (e.g., for 11 − 3, counting down “10, 9, 8” and then stating “8” as the answer; see Woods et al., 1975). A report was scored as “transformation” if a participant reported changing the problem to a simpler, known problem (e.g., for 15 − 8, decrementing both values by 5 and then solving 10 − 3 = 7). Other solution methods, including “I don’t know,” were scored as “other.”

Results

Session 1

The overall error rate was 3.2%, and this rate did not vary as a function of report group.
Another 4.4% of the trials were spoiled as a result of inaccurate firing of the voice key. We altered our procedures for detecting and eliminating extreme scores to ensure that infrequent minuend values, especially when examined separately by reported solution method, would have sufficient observations. In brief, if a participant’s mean RT at a particular minuend fell outside of a critical range (the group’s mean ± 2.5 SDs at that minuend), then the participant’s individual RTs were screened. If a trial RT was extreme, it was excluded, a new critical range was computed, and the eliminated score was replaced by the upper bound of the new critical range. Only 17 scores (0.4% of valid scores) were replaced through the use of this procedure. Finally, 4 participants were missing data for minuend 0, 1, or 18, so we substituted the group’s upper bound at the minuend in question for the missing score. Despite the errors, spoiled trials, and extreme scores, fully 92.5% of the possible observations were available for analysis.

Consistent with prediction, requiring verbal reports did not alter subtraction performance to any detectable degree. There were no significant report group effects on RTs or standard deviations. The similarities between the RT profiles of the silent control and verbal report groups suggested that reactivity was not an issue in our results. The stair step between minuends 10 and 11 in the problem size effect was even greater than in the first two experiments, a mean RT increase of 850 ms; in addition, the effect was significant across all minuends, F(18, 756) = 48.81, MSE = 240,460. Standard deviations were in the 200–300-ms range for small problems but ranged from 650–800 ms for large problems. Mean RTs for the large facts were also somewhat longer than those in Experiments 1 and 2, ranging between 1,789 and 2,147 ms. This difference was most likely attributable to the sample.
Because there were no differences between report groups, the longer RTs cannot be attributed to having participants give verbal reports, a tendency that is sometimes observed in report data (Ericsson & Simon, 1993).

As found in the first two experiments, the error rate increased sharply at minuend 11; the main effect of minuend on errors was significant, F(18, 756) = 2.58, MSE = 109.06. Overall, error rates varied between 1% and 3% on the smaller subtraction facts but ranged from 4%–8% on those at minuend 11 and beyond. There was also a Minuend × Report Group interaction, F(36, 756) = 1.68. This interaction was probably attributable to participants in one of the report conditions who had an error rate of 12% at minuend 11, as compared with rates of 2% to 4.4% among the other groups. Inspection of individual participants’ errors revealed that the error pattern was general for the condition rather than being attributable to a subset of inaccurate individuals.

Overall, participants reported using a strategy other than retrieval on 15% of all trials (8% used counting, 6% used transformation, and less than 1% used another type of strategy). The types of strategies reported were generally consistent with those described by Geary et al. (1993). More interestingly, the verbal reports also showed the discontinuous pattern between small and large facts found in the other performance measures. Reported strategy use ranged between 0.7% and 7.7% for small problems (M = 3.2%) but ranged between 28% and 46% for large problems (M = 33.4%), across-minuend, F(18, 504) = 18.21, MSE = 431.64. At the critical adjacent pair of minuends, reported strategy use jumped from 2.8% at minuend 10 to 34% at minuend 11. Figure 3 displays percentages of reported nonretrieval strategies across minuends; frequencies of retrieval and nonretrieval reports on a problem-by-problem basis are shown in the Appendix.
Interestingly, the nonretrieval percentages obtained here were considerably smaller than those reported by Campbell and Xue (2001; 27% for small problems and 58% for large problems).

With such a substantial percentage of performance attributed to nonretrieval, it is possible that the problem size effects found in Experiments 1 and 2 were largely or even completely due to slow, effortful strategies. In like manner to LeFevre et al.’s (1996) procedure, we reanalyzed RTs from the verbal report groups here, examining only those trials classified as “retrieval.” Figure 4 presents the results of this analysis, the top curve showing the problem size effect on RT for all trials and the lower curve showing the effect for retrieval-only trials. Although a considerable part of the now-familiar stair-step function is clearly due to slower strategies, there just as clearly remains a prominent increase in the RT function when retrieval-only trials are examined: F(18, 504) = 20.35, MSE = 144,172, for the retrieval-only analysis across minuends. Furthermore, the differences in RTs between the two curves were significant according to a simple ANOVA of difference scores (overall RT minus RT for retrieval-only trials), F(18, 504) = 9.17, MSE = 227,113. Processing through reconstructive strategies was significantly slower than retrieval, as is commonly reported, and clearly accounted for part of the stair-step increase in RTs across problem size. There was nonetheless a substantial “residual stair step” (the increase in RTs that remained after nonretrieval trials had been excluded) that cannot be easily attributed to such strategies.

Figure 3. Mean percentages of trials reported as solved by a strategy other than retrieval, by minuend: Experiment 3.

We have several reasons to believe in the validity and reliability of these verbal report data.
First, intercoder reliability for strategy coding was .98, suggesting that there was little doubt as to how particular reports should be categorized. Second, 30% of our reporting participants claimed to have used retrieval exclusively, and another 20% reported using retrieval on at least 90% of their trials. Reactivity to the report requirement (e.g., Russo et al., 1989) was apparently not widespread, if it occurred at all. Finally, there were no significant differences between the report and silent control groups in terms of RTs, standard deviations, or error rates. The absence of such differences, the high intercoder reliability, and the converging evidence for the familiar stair-step pattern across minuends together support the veridicality of the verbal strategy reports.

Figure 4. Mean reaction times (RTs), by minuend, on elementary subtraction facts for all correct trials and trials reported as “retrieval”: Experiment 3.

Session 2

As noted previously, Session 2 was an exploratory session in which the usefulness of verbal reports across repeated testing was investigated, along with a manipulation of speed versus accuracy emphasis in the instructions. The Session 2 data are not directly comparable to the results presented so far because of the inherent practice effect of multiple sessions; thus, we limit our discussion of this session to two general characteristics. First, regardless of report condition, all three groups once again showed the familiar stair-step function at minuend 11, on both RTs, F(18, 756) = 48.85, MSE = 201,831, and errors, F(18, 756) = 3.69, MSE = 84.99. These results clearly rule out lack of recent practice as an explanation for the discontinuous function found in Experiments 1–3. Second, verbal strategy reports also showed the discontinuity, with reports of strategy use for problems at minuends 10 and 11 jumping from 3.4% to 41% in the speeded condition and from 4% to 34% in the accuracy condition. Thus, the same marked increase in strategy reports for minuends 11–17 was found in both sessions. As a result, lack of recent practice is also an inadequate explanation of participants’ reported reliance on strategies in Session 1. Participants’ use of reconstructive strategies on roughly one third of the larger subtraction facts appears to reflect their normal problem-solving procedures and does not appear to be some short-lived effect due to relative inaccessibility or lack of practice.

Correlation Findings

All variables showed high test–retest correlations across sessions (e.g., r = .89 for RTs, r = .81 for strategy use, and r = .90 for math anxiety scores). The analysis supported the general findings that strategy use is associated with slower performance (e.g., r = .60 for Session 1 RTs and strategy use) and that slower performance is associated with lower math fluency scores (e.g., r = −.61 for Session 1 RTs and math fluency scores). As found in studies of simple addition and multiplication (e.g., Faust, Ashcraft, & Fleck, 1996), the correlations between math anxiety and performance on basic subtraction facts were either weak (r = .40 for error rates in Session 1) or nonsignificant. No other important outcomes were observed in the correlations, so this analysis is not pursued further here.

Summary

The elevated portion of the problem size effect evident in RTs and errors corresponded exactly with the self-reported increase in the use of strategies for problem solution. The problem size effect in basic subtraction, according to the subsequent analyses, was partially a function of slower, reconstructive strategy use, although it retained a distinctive stair-step shape when strategy trials were eliminated from consideration. We pursue the source of this “residual stair step” in the General Discussion section.

Experiment 4

Given that strategy-based solutions to simple arithmetic problems are considerably slower and more error prone than retrieval-based solutions (e.g., Geary et al., 1993; LeFevre et al., 1996), it seems especially likely that these solutions would place a particularly heavy load on WM. We tested this hypothesis in Experiment 4.

Two methods for testing the involvement of WM are in general use. In the individual-differences approach, participants are given a WM span assessment and are then tested on some task of interest in a between-groups manipulation of span. Group differences on the task are then interpreted as due to differences in WM capacity assessed by span tests. In the dual-task approach, performance on a primary task is examined while participants perform a concurrent secondary task, one known to place demands on WM. As the difficulty of the secondary task increases, performance on the primary task is expected to suffer to the extent that it depends on WM capacity for successful execution, or, likewise, secondary task performance begins to suffer as the primary task increases in difficulty. Thus, in general, this approach looks for an interaction between task (dual vs. control) and processing difficulty (where difficulty pertains to the primary task, the secondary task, or both).

We elected to use both approaches here (see Rosen & Engle, 1997, for a comparable design). That is, we performed a dual-task experiment and also obtained WM span scores for the participants, categorizing them into low, medium, and high memory span groups. Specifically, we tested subtraction (primary task) concurrently with performance on a short-term memory letter recall task (secondary task) of graded difficulty.
Large subtraction facts, those identified as frequently being performed with reconstructive strategies, were expected to suffer particularly when the secondary task placed heavy demands on WM. That is, if the strategies used frequently for large subtraction facts indeed rely on conscious, WM-intensive processing, then performance on those facts should suffer under heavy memory loads due to the secondary task; alternatively, trials that load WM most heavily in the secondary task should suffer the greatest interference from subtraction if subtraction relies on WM resources. Furthermore, we expected groups formed on the basis of memory span to be differentially affected by the graded manipulations of difficulty in the subtraction and letter recall tasks. On the other hand, if the strategies reported for larger problems are relatively well practiced and automatic, as might be expected after years of practice (e.g., Siegler & Jenkins, 1989), then there might be only minimal interference from a demanding secondary task and little or no effect of the categorization into low, medium, or high span groups.

Method

Participants

Thirty lower level undergraduates from the Cleveland State University psychology department participant pool received course credit for taking part.

Instruments

Participants were assessed with the Listening Span (L-span) task of the Salthouse–Babcock (1990) WM capacity test. This task requires the participant to hold and then recall an increasing amount of information in WM while simultaneously processing test information; thus, it requires both storage and processing operations to occur concurrently. After completing the span assessment, participants were tested on the basic facts of subtraction in the same production task format as in the first three experiments.

Procedure

In the L-span task, the participants were presented sentences orally, beginning with 3 one-sentence sets, then 3 two-sentence sets, and so forth.
In the case of the one-sentence sets, participants heard the sentence and then answered a question based on its meaning (e.g., The boy ran with the dog. Who ran? The boy). The question (e.g., Who ran?) was presented in a multiple-choice format on the front of the answer sheet. To complete the trial successfully, participants had to answer the question correctly (The boy) and then recall the final word of the sentence (dog), writing this word on the opposite side of the answer sheet. If at least two trials were completed successfully at the one-sentence level, the participant moved to the two-sentence level. At this level, two sentences were presented successively, each with a question to be answered, after which the final words of both sentences had to be recalled. As before, if at least two of the three trials at this level were completed correctly, the participant was tested with three sentences, and so on. If the participant passed the three-sentence test but failed at the four-sentence level, his or her WM span score was 3, the hardest level at which the participant succeeded. After completing the L-span task, participants completed the subtraction task on the computer. The subtraction phase was divided into three conditions, the dual-task condition along with the two control conditions, subtraction only and letters only. For all three tasks, participants were urged to respond rapidly while maintaining accuracy; equal emphasis was placed on subtraction accuracy and letter recall accuracy. Each of the 36 dual-task trials consisted of three main events. After a 1-s fixation point, the letter set was presented (Event a), and participants were encouraged to name the letters aloud once; the 2-, 4-, and 6-letter sets were shown for 1.5, 2.5, and 3.5 s, respectively. The letters were then removed from the screen, and after a 1-s interval the subtraction problem was presented (Event b), centered on the screen. 
Participants responded verbally, as in the previous experiments. After a 1-s delay, the words “RECALL LETTERS” appeared, prompting recall of the previously presented letter set (Event c). The experimenter recorded letter recall data, keyed in the participant’s response to the subtraction problem, and then triggered the next trial. The two component tasks were assessed separately to obtain reliable baseline estimates for control purposes. In the subtraction-only task (36 trials), participants responded to the set of subtraction facts in regular fashion. To control for overall verbalizations, we showed participants letter sets (Event a) comparable to those in the dual task before the subtraction problem (Event b) and asked them to verbalize the letter set again (Event c) after they gave the subtraction answer. To eliminate the memory demand of the letter task, we replaced the words “RECALL LETTERS” with the actual letter set so that participants had only to read the letters off the screen. Similarly, in the letters-only control condition (24 trials), participants saw and recited a 2-, 4-, or 6-letter set (Event a), saw a subtraction problem and verbalized its answer (Event b), and then had to recall the letter set (Event c). To eliminate the mental processing of subtraction, we showed the answer along with the problem during Event b so that participants could merely read the answer off the screen. Thus, both control tasks included verbalizations of the letters and subtraction answers, but selectively omitted either the secondary letter recall task or the primary subtraction task. Finally, we had to prevent participants in the letters-only task from adopting a short-cut strategy—naming the subtraction answer very rapidly and thereby shortening the retention interval for the letters—for improving their letter recall. 
To accomplish this objective, we kept the subtraction problem and answer on the screen for 1.5 s and required participants to read the entire subtraction problem out loud before the screen advanced to the recall instruction.

Letter sequences were randomly constructed by selecting 2, 4, or 6 consonants and then excluding any combinations that contained meaningful abbreviations or acronyms and reordering sequences as necessary to avoid obvious alphabetic sequences; different letter sets were used in each task. We randomly sampled 36 subtraction facts for use, excluding from the pool ties and problems with a minuend of 0 or 10; the computer software randomized the order of presentation in each task. The order of tasks (letters only, subtraction only, or dual task) was counterbalanced across participants.

After completing the computer-based subtraction test, participants completed the math anxiety assessment and then were debriefed and excused. Because no noteworthy results were obtained with this assessment, the results are not considered here.

Results

Listening Span Task

Participants’ scores on the L-span task ranged from 1 to 5. For purposes of analysis, we classified the 12 participants with scores of 1 or 2 as low capacity, the 11 participants with a score of 3 as medium capacity, and the 7 participants with scores of 4 or 5 as high capacity.

Error Analysis

Examination of the subtraction errors committed in the subtraction-only and dual-task conditions revealed a pattern that was completely redundant with the patterns shown in Experiments 1 and 2, so this analysis is not pursued further here.

Chronometric Measures

In all three tasks, a trial was scored as an error if the subtraction answer was incorrect or if the participant failed to recall the entire letter sequence in order. Subtraction errors accounted for 11.5% of trials in the subtraction-only condition and 12.9% of trials in the dual-task condition.
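The L-span scoring rule from the Procedure (advance when at least two of the three trials at a level succeed; the score is the hardest level passed) and the span-group classification used in the Results can be sketched in Python as follows. This is an illustrative sketch, not the original scoring materials: the function names are hypothetical, and the handling of a participant who fails the one-sentence level (score 0) is an assumption, because observed scores ranged from 1 to 5.

```python
def lspan_score(successes_by_level, trials_needed=2):
    """Return the L-span score from per-level success counts.

    successes_by_level[0] is the number of successful trials (0-3) at the
    one-sentence level, [1] at the two-sentence level, and so on.
    Testing stops at the first level with fewer than `trials_needed`
    successes; the score is the hardest level passed before that point.
    """
    score = 0  # assumption: 0 if even the one-sentence level is failed
    for level, successes in enumerate(successes_by_level, start=1):
        if successes < trials_needed:
            break
        score = level
    return score


def span_group(score):
    """Classify a score as in Experiment 4: 1-2 low, 3 medium, 4-5 high."""
    if not 1 <= score <= 5:
        # Scores outside the observed 1-5 range are not covered by the source.
        raise ValueError(f"score {score} is outside the observed range 1-5")
    if score <= 2:
        return "low"
    if score == 3:
        return "medium"
    return "high"


if __name__ == "__main__":
    # Passed levels 1-3, failed level 4: the example given in the Procedure.
    s = lspan_score([3, 2, 2, 1])
    print(s, span_group(s))
```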
Letter recall errors occurred on 13.1% of the letters-only trials but 28.9% of the dual-task trials. Despite instructions to treat both tasks as equally important, participants here clearly demonstrated the interfering effect of concurrent processing more on the letter recall task. An additional 4% of subtraction-only RTs and 7.2% of dual-task RTs were spoiled as a result of extraneous noises or microphone sensitivity difficulties. Finally, 2.9% of the subtraction-only trials were excluded because of extreme RTs (p < .01, Dixon’s test). The comparable extreme score rate in the dual-task condition (0.07%) was much lower, largely because trials with especially long RTs commonly ended with incorrect letter recall and so had already been dropped from the analysis.

Control Conditions

The control conditions were analyzed first, in an ANOVA with WM span group (low, medium, or high) as a between-subjects variable and problem size (small or large) and set size (2, 4, or 6 letters) as within-subject variables. Briefly, recall errors in the letters-only task yielded a main effect of set size, F(2, 54) = 54.01, MSE = 228.77; error rates were 1.1%, 8.6%, and 29.6% for the 2-, 4-, and 6-letter sets, respectively. In the subtraction-only analyses, both correct RTs and subtraction error rates showed the familiar stair-step pattern of problem size effects, F(1, 27) = 24.14, MSE = 412,123.01, and F(1, 27) = 23.4, MSE = 88.70, respectively.

Subtraction errors also revealed an interaction between problem size and span group, F(2, 27) = 3.38, MSE = 88.70. The low span group averaged 12.5% errors on small problems, as compared with error rates of 7.5% and 4.0%, respectively, for the medium and high capacity groups. The groups did not differ systematically on large problems; mean error rates ranged from 12.1% to 16.7%.

Dual-Task Analyses

In the dual-task analyses, the task factor (dual vs.
either subtraction only or letters only) was added to the design just described, yielding a four-factor design with one between-subjects variable.

RT evidence. The ANOVA focusing on correct RTs compared dual-task performance and subtraction-only performance. There was a significant (504 ms) problem size effect, F(1, 27) = 27.97, MSE = 772,290, and a 159-ms increase in average latencies in the dual-task condition, F(1, 27) = 7.47, MSE = 288,339. This slowing of RT in the dual-task condition was also observed in the Task × Set Size interaction, F(2, 54) = 3.25, MSE = 51,646. Subtraction performance was 70 ms slower overall in the dual-task condition than in the subtraction-only condition when the letter set task involved only 2 letters. However, RTs were approximately 200 ms slower for 4- and 6-letter trials in the dual task.

Errors in subtraction. The analysis of subtraction errors revealed a significant problem size effect, F(1, 27) = 22.2, MSE = 201.05; error rates averaged 8.6% for small problems and 15.8% for large problems. The Task × Set Size interaction was also significant, F(2, 54) = 3.31, MSE = 179.62, showing a somewhat steeper increase in subtraction errors for 6-letter trials than for either 2- or 4-letter trials. Finally, the three-way interaction among task, problem size, and WM span group was significant, F(2, 27) = 4.28, MSE = 107.38. The source of the interaction appeared to be a shallower problem size effect (an increase of 3.2%) for high span participants in the dual-task condition than for either low or medium span participants in that condition (9.7% and 9.6% increases, respectively), along with the surprisingly inaccurate performance of the low span participants in both tasks; their error rates for small and large problems were 12.5% and 16.2%, respectively, in the subtraction-only task and 10.7% and 20.4%, respectively, in the dual task.
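As a small worked check of the figures just reported, the problem size effect on errors (large minus small) for the low span group can be recomputed from the four error rates given in the text. The Python sketch below uses only those reported percentages; the variable and function names are hypothetical.

```python
# Low span group's subtraction error rates (%), as reported in the text.
low_span_errors = {
    "subtraction_only": {"small": 12.5, "large": 16.2},
    "dual_task": {"small": 10.7, "large": 20.4},
}


def problem_size_effect(rates):
    """Problem size effect on errors: large-problem rate minus small-problem rate."""
    return round(rates["large"] - rates["small"], 1)


effects = {task: problem_size_effect(r) for task, r in low_span_errors.items()}

# How much larger the problem size effect is under the dual task.
dual_task_increase = round(effects["dual_task"] - effects["subtraction_only"], 1)

print(effects, dual_task_increase)
```

The dual-task value recovered this way (9.7 percentage points) matches the increase reported for the low span group; the subtraction-only value (3.7 points) is not stated in the text and is simply derived from the two reported rates.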
These results are consistent with the hypothesis that larger subtraction problems depend more heavily on WM resources, a situation that puts low span participants at a special disadvantage.

Errors in letter recall. The analysis of letter recall errors revealed considerable evidence of dual-task interference (see Table 2 for the ANOVA summary). The percentage of trials with letter recall errors increased significantly in the dual-task condition (28.9%) relative to the letters-only condition (13.1%) and increased substantially as set sizes grew larger; error rates were 4.6%, 14.0%, and 44.5%, respectively, for the 2-, 4-, and 6-letter sets. Span groups also differed significantly in their overall error rates; the low span group averaged 27.5% errors overall, as compared with 21.5% for the medium span group and 14.0% for the high span group.

Table 2
Analysis of Variance Summary for Letter Recall Errors: Experiment 4

| Source | df | F | MSE | p |
|---|---|---|---|---|
| Between subjects | | | | |
| Listening span group (G) | 2 | 4.58 | | <.025 |
| Subjects (S) within-group error | 27 | | 1,066.25 | |
| Within subjects | | | | |
| Task (T) | 1 | 134.94 | | <.001 |
| T × G | 2 | 4.71 | | <.025 |
| T × S within-group error | 27 | | 157.16 | |
| Problem size (P) | 1 | 3.42 | | <.076 (a) |
| P × G | 2 | 1.76 | | ns |
| P × S within-group error | 27 | | 193.47 | |
| Letter set size (L) | 2 | 123.10 | | <.001 |
| L × G | 4 | 3.06 | | <.025 |
| L × S within-group error | 54 | | 402.02 | |
| T × P | 1 | 12.16 | | <.005 |
| T × P × G | 2 | 2.30 | | ns |
| T × P × S within-group error | 27 | | 184.09 | |
| T × L | 2 | 25.50 | | <.001 |
| T × L × G | 4 | 0.35 | | ns |
| T × L × S within-group error | 54 | | 168.11 | |
| P × L | 2 | 3.27 | | <.05 |
| P × L × G | 4 | 1.23 | | ns |
| P × L × S within-group error | 54 | | 105.12 | |
| T × P × L | 2 | 0.46 | | ns |
| T × P × L × G | 4 | 0.66 | | ns |
| T × P × L × S within-group error | 54 | | 133.11 | |

(a) Computed p value.

These main effects, as well as the nearly significant main effect of problem size, were all qualified by several two-way interactions, as shown in Table 2. Problem size interacted with set size in the analysis. A moderate (5%) problem size effect was obtained on the 2- and 4-letter trials, with error rates in the 2% to 16% range.
On 6-letter trials, however, recall errors were quite high for both small (45.1%) and large (43.9%) problems.

Two important double interactions were obtained with the task variable, the Task × Problem Size interaction and the Task × Set Size interaction; both indicated the damaging effects that subtraction exerted on letter recall as a result of competition for processing capacity. In the first interaction, shown in Figure 5, it is clear that the burden of dual processing fell especially heavily on large subtraction problems. This is consistent with the prediction that larger problems will suffer more from dual-task interference, because they are performed more frequently by means of reconstructive processing that consumes WM resources. Similarly, the Task × Set Size interaction, shown in Figure 6, showed both consistently worse recall in the dual-task condition and stronger interference at the larger set sizes; error rates on 2-letter trials differed by only 6% between tasks, as compared with a 30% difference for 6-letter trials.

Figure 5. Mean percentages of letter recall errors for the control versus dual-task conditions as a function of problem size: Experiment 4.

Figure 6. Mean percentages of letter recall errors for the control versus dual-task conditions as a function of letter set size: Experiment 4.

Finally, two significant interactions involved the between-subjects effect of memory span, further buttressing evidence for a strong WM component in subtraction performance. First, the increasing interference due to heavier memory loads was characteristic of all three groups but was especially pronounced for the low capacity group, as shown by the Span Group × Set Size interaction depicted in Figure 7. No group differences were apparent at the smallest memory load. But at the maximal 6-letter load, high span participants averaged 31.2% errors, as compared with error rates of 45.8% and 56.4%, respectively, among medium and low span participants. Clearly, low span participants experienced stronger interference due to a heavy concurrent load on WM. Second, the increase in errors due to the dual task was apparent in all groups but was especially strong for the low span group (see the Task × Span Group interaction depicted in Figure 8). As would be expected if both difficult subtraction and difficult letter recall depend on WM capacity, concurrent processing led to greater interference, in the form of disrupted recall of the memory sets. This interference was particularly pronounced for low span participants, especially when concurrent processing involved a heavy WM load.

Figure 7. Mean percentages of letter recall errors for the low, medium, and high working memory capacity groups as a function of letter set size: Experiment 4.

Figure 8. Mean percentages of letter recall errors for the low, medium, and high working memory capacity groups as a function of control versus dual task: Experiment 4.

One final aspect of the results bears brief mention. In the most difficult condition here, large problems performed under a 6-letter memory load, high span participants averaged 44% errors in their letter recall. Low span participants approached this level of inaccuracy in their letter recall in the letters-only condition, with an error rate of 38% on 6-letter trials. That is, when they were not under the demands of the dual task, the low span group showed only slightly better performance than the high span participants did under the dual-task demands (for comparison, the low span group committed 74% errors in this condition in the dual task). This is reminiscent of the results of Rosen and Engle’s (1997) study, in which the demands of the dual task reduced high span participants’ performance to the level achieved by low span participants in a single task setting. The plausible reason for our result is also comparable to that offered by Rosen and Engle. Large subtraction problems require considerable WM capacity, as do maintenance and recall of a heavy memory load. The combination of the tasks puts all groups at a disadvantage as a result of competition for processing resources. Low memory span participants are particularly disadvantaged, however, because they have fewer processing resources to begin with. Indeed, the letter recall task by itself damaged low span participants’ performance, whereas for high span participants, a comparable level of damage was reached only when they performed the most difficult conditions concurrently.

Summary

The patterns of interference observed in Experiment 4 indicated a heavy reliance on WM during the processing of subtraction facts, especially those with minuends of 11 or greater. This, of course, is the same problem size range that yielded the stair-step functions in Experiments 1–3 and the dramatic increase in reported strategy use in Experiment 3. These interference effects demonstrate the importance of WM processing in subtraction, in that subtraction and letter storage–recall were clearly competing for the same processing resources. Thus, elementary subtraction relies heavily on WM. It disrupts concurrent memory load tasks, especially when those tasks impose a heavy memory load and especially when participants of low WM capacity are tested.

General Discussion

We conclude with a brief discussion of three points. First, adults’ mental subtraction seems to consist more heavily of reconstructive, strategy-based processing than either addition or multiplication (see also Campbell & Xue, 2001).
Kirk and Ashcraft (2001) found, with controlled verbal report techniques, that the bulk of adults’ processing of addition and multiplication can be attributed to retrieval characterized by a conventional, monotonic problem size effect. Subtraction, however, seems to rely more heavily on reconstructive strategies; fully one third of the large problem trials in Experiment 3 seem to have been performed through such strategies, along with 58% in Campbell and Xue’s Canadian sample. Experiment 4 demonstrated an additional effect, that solving these larger problems depends heavily on WM capacity. Second, Siegler’s distribution of associations model seems to be the most accommodating theoretical framework for the present results (e.g., Siegler & Jenkins, 1989). According to this model, problems and strategies accrue associative strength on the basis of experience, as do problems and answers. If a particular strategy is repeatedly used for a particular problem, the model suggests that the accrued strength of the problem–strategy association can easily be higher than the direct association between problem and answer. If so, then the more likely solution method for that problem would be application of the strategy rather than retrieval from memory. Such a strategy could be selected and initiated more rapidly than retrieval for the problem, as a result of higher associative strength, although execution of the multistep strategy would probably still yield a higher rate of errors. Finally, although the present results are consistent with this theoretical position, and although they indeed suggest that strategies are common and dominant in adults’ mental representations of larger subtraction facts, there are some difficulties with this explanation. 
A strong test of the Siegler and Jenkins (1989) model would require an a priori prediction about which problem–strategy pairs attain sufficient strength for any given participant and which strategies are most likely for different problems. It is not clear how to derive such predictions independent of obtained performance data. Furthermore, at least three competing explanations can be offered for the “residual stair step” observed in Experiment 3. First, it might simply be that our verbal report methods did not elicit full reporting of strategy use, although the congruence of the RT and error patterns between reporting and silent groups argues against this interpretation. Second, and somewhat more plausibly, the residual stair-step pattern might reflect selection and execution of a “retrieve then check” strategy, which would match the “addition reference” reports we observed. Participants might retrieve an answer to a large subtraction fact but, because of their relatively low confidence in the answer, follow this with an answer-checking process in which they retrieve the corresponding addition fact (e.g., for 15 − 8, responding “7 + 8 is 15, so 7”). Although this would represent a genuine multistep process with implications for RTs and errors, the “check” itself would involve another retrieval rather than reconstructive processing (unless the corresponding addition fact itself needed to be processed by means of a strategy; e.g., see LeFevre et al., 1996). A third alternative is that the verbal report technique accurately identified the nonretrieval trials, and the residual stair step depicts a time- and accuracy-based profile of genuine retrieval from long-term memory.
Under this alternative, retrieval of larger subtraction facts would simply be especially slow and effortful, whether as a result of lower associative strength of problem–answer pairs, greater competition with strategy associations, or greater competition from other, similar associative pathways (e.g., Campbell, 1987). Such an explanation has in fact been offered in a rather different context; Rosen and Engle’s (1997) high WM capacity participants were said to augment their automatic semantic memory search with a more controlled, deliberate, and relatively slow retrieval process. Note, however, that it may be difficult to establish this possibility in the context of arithmetic, in which a procedural, computational solution is possible. Although the present results do not contradict the differences between subtraction and other operations found in cognitive neuropsychological research (e.g., Cohen & Dehaene, 2000; Lee, 2000), they also do not speak directly to such reported differences. It is possible, or even probable, that strategy-based performance on larger facts would be discriminable from retrieval in neuroimaging studies, although to our knowledge such results have not yet been reported (but see El Yagoubi, Lemaire, & Besson, 2003, for event-related potential evidence discriminating between two strategies used in verifying complex addition equations). It is sufficient here to report the full set of data so that other investigators may consider these chronometric and strategy-based results more precisely in future cognitive neuroscience studies of mathematical cognition, perhaps searching for the retrieval–strategy differences we are reporting. In any event, it is somewhat surprising that a simple, elementary arithmetic operation routinely taught in second grade could show such provocative and complex patterns of performance years later, when normal college adults are tested. References Ashcraft, M. H. (1992). Cognitive arithmetic: A review of data and theory. 
Cognition, 44, 75–106.

Ashcraft, M. H. (1995). Cognitive psychology and simple arithmetic: A review and summary of new directions. Mathematical Cognition, 1, 3–34.

Ashcraft, M. H., & Battaglia, J. (1978). Cognitive arithmetic: Evidence for retrieval and decision processes in mental addition. Journal of Experimental Psychology: Human Learning and Memory, 4, 527–538.

Ashcraft, M. H., & Fierman, B. A. (1982). Mental addition in third, fourth, and sixth graders. Journal of Experimental Child Psychology, 3, 216–234.

Banks, W. P. (1977). Encoding and processing of symbolic information in comparative judgments. In G. H. Bower (Ed.), The psychology of learning and motivation (Vol. 11, pp. 101–159). New York: Academic Press.

Campbell, J. I. D. (1987). Network interference and mental multiplication. Journal of Experimental Psychology: Learning, Memory, and Cognition, 13, 109–123.

Campbell, J. I. D., & Xue, Q. (2001). Cognitive arithmetic across cultures. Journal of Experimental Psychology: General, 130, 299–315.

Cohen, L., & Dehaene, S. (2000). Calculating without reading: Unsuspected residual abilities in pure alexia. Cognitive Neuropsychology, 17, 563–583.

Compton, B. J., & Logan, G. D. (1991). The transition from algorithm to retrieval in memory-based theories of automaticity. Memory & Cognition, 19, 128–151.

Cooney, J., Swanson, H. L., & Ladd, S. F. (1988). Acquisition of mental multiplication skill: Evidence for the transition between counting and retrieval strategies. Cognition and Instruction, 5, 349–364.

Crutcher, R. J. (1994). Telling what you know: The use of verbal report methodologies in psychological research. Psychological Science, 5, 241–244.

Dehaene, S., & Cohen, L. (1995). Towards an anatomical and functional model of number processing. Mathematical Cognition, 1, 83–120.

Dixon, W. J. (1953). Processing data for outliers. Biometrics, 9, 74–89.

El Yagoubi, R. E., Lemaire, P., & Besson, M. (2003).
Different brain mechanisms mediate two strategies in arithmetic: Evidence from event-related brain potentials. Neuropsychologia, 41, 855–862.

Ericsson, K. A., & Kirk, E. P. (2000). Instructions for giving concurrent and retrospective verbal reports. Unpublished manuscript.

Ericsson, K. A., & Kirk, E. P. (2001). Validity of verbal reports on retrieval as a function of report instructions: A regression approach. Unpublished manuscript.

Ericsson, K. A., & Simon, H. A. (1993). Protocol analysis: Verbal reports as data (rev. ed.). Cambridge, MA: Bradford Books/MIT Press.

Faust, M. W., Ashcraft, M. H., & Fleck, D. E. (1996). Mathematics anxiety effects in simple and complex addition. Mathematical Cognition, 2, 25–62.

French, J. W., Ekstrom, R. B., & Price, I. A. (1963). Kit of reference tests for cognitive factors. Princeton, NJ: Educational Testing Service.

Fuson, K. C. (1992). Research on whole number addition and subtraction. In D. A. Grouws (Ed.), Handbook of research on mathematics teaching and learning (pp. 243–275). New York: Macmillan.

Geary, D. G. (1994). Children’s mathematical development. Washington, DC: American Psychological Association.

Geary, D. G., Frensch, P. A., & Wiley, J. G. (1993). Simple and complex mental subtraction: Strategy choice and speed of processing in younger and older adults. Psychology and Aging, 8, 242–256.

Groen, G. J., & Parkman, J. M. (1972). A chronometric analysis of simple addition. Psychological Review, 79, 329–343.

Kirk, E. P., & Ashcraft, M. H. (2001). Telling stories: The perils and promise of using verbal reports to study math strategies. Journal of Experimental Psychology: Learning, Memory, and Cognition, 27, 157–175.

Kučera, H., & Francis, W. N. (1967). Computational analysis of present-day American English. Providence, RI: Brown University Press.

Lee, K. M. (2000). Cortical areas differentially involved in multiplication and subtraction: A functional imaging study and correlation with a case of selective acalculia.
Annals of Neurology, 48, 657–661.

LeFevre, J., Sadesky, G. S., & Bisanz, J. (1996). Selection of procedures in mental addition: Reassessing the problem size effect in adults. Journal of Experimental Psychology: Learning, Memory, and Cognition, 22, 216–239.

Meck, W. H., & Church, R. M. (1983). A mode control model of counting and timing processes. Journal of Experimental Psychology: Animal Behavior Processes, 9, 320–334.

Payne, J. W. (1994). Thinking aloud: Insights into information processing. Psychological Science, 5, 241–248.

Robinson, K. M. (2001). The validity of verbal reports in children’s subtraction. Journal of Educational Psychology, 93, 211–222.

Rosen, V. M., & Engle, R. W. (1997). The role of working memory capacity in retrieval. Journal of Experimental Psychology: General, 126, 211–227.

Russo, J. E., Johnson, E. J., & Stephens, D. L. (1989). The validity of verbal protocols. Memory & Cognition, 17, 759–769.

Salthouse, T. A., & Babcock, R. L. (1990). Computation span and listening span tasks. Unpublished manuscript, Georgia Institute of Technology, Atlanta.

Schneider, W. (1988). Micro Experimental Laboratory: An integrated system for IBM PC compatibles. Behavior Research Methods, Instruments, & Computers, 20, 206–217.

Siegler, R. S. (1987). Strategy choices in subtraction. In J. A. Slobada & D. Rogers (Eds.), Cognitive processes in mathematics (pp. 81–106). Oxford, England: Clarendon Press.

Siegler, R. S., & Jenkins, E. (1989). How children discover new strategies. Hillsdale, NJ: Erlbaum.

Siegler, R. S., & Shrager, J. (1984). Strategy choices in addition and subtraction: How do children know what to do? In C. Sophian (Ed.), Origins of cognitive skills (pp. 229–293). Hillsdale, NJ: Erlbaum.

VanLehn, K. (1990). Mind bugs: The origins of procedural misconceptions. Cambridge, MA: MIT Press.

Whalen, J., Gallistel, C. R., & Gelman, R. (1999). Nonverbal counting in humans: The psychophysics of number representation.
Psychological Science, 10, 130–137.

Wilson, T. D. (1994). The proper protocol: Validity and completeness of verbal reports. Psychological Science, 5, 249–252.

Woods, S. S., Resnick, L. B., & Groen, G. J. (1975). An experimental test of five process models for subtraction. Journal of Educational Psychology, 67, 17–21.

Wynn, K. (1992). Addition and subtraction by human infants. Nature, 358, 749–750.

Appendix

Basic Facts of Subtraction

| Remainder | Subtrahend: | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Minuend | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
| | RT | 822 | 894 | 839 | 793 | 783 | 787 | 789 | 778 | 849 | 825 |
| | SD | 178 | 296 | 186 | 159 | 127 | 166 | 165 | 156 | 234 | 143 |
| | % Error | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | RET | 29 | 27 | 29 | 29 | 29 | 28 | 29 | 26 | 29 | 29 |
| | Non-RET | 0 | 0 | 1 | 1 | 1 | 0 | 2 | 1 | 0 | 1 |
| 1 | Minuend | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
| | RT | 918 | 833 | 799 | 829 | 821 | 961 | 848 | 856 | 846 | 970 |
| | SD | 270 | 238 | 166 | 324 | 244 | 981 | 266 | 201 | 220 | 304 |
| | % Error | 7 | 0 | 0 | 0 | 0 | 2 | 2 | 7 | 0 | 0 |
| | RET | 24 | 30 | 29 | 28 | 29 | 28 | 28 | 29 | 28 | 28 |
| | Non-RET | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | Minuend | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
| | RT | 919 | 861 | 837 | 934 | 981 | 879 | 1,014 | 966 | 904 | 1,422 |
| | SD | 351 | 174 | 344 | 315 | 331 | 261 | 390 | 337 | 210 | 1,065 |
| | % Error | 0 | 7 | 2 | 2 | 0 | 2 | 2 | 11 | 0 | 13 |
| | RET | 22 | 28 | 25 | 24 | 24 | 23 | 25 | 25 | 25 | 15 |
| | Non-RET | 1 | 1 | 1 | 2 | 4 | 4 | 3 | 5 | 2 | 7 |
| 3 | Minuend | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
| | RT | 870 | 875 | 895 | 880 | 1,196 | 957 | 1,041 | 990 | 1,530 | 1,194 |
| | SD | 232 | 285 | 305 | 362 | 338 | 337 | 446 | 457 | 792 | 570 |
| | % Error | 2 | 0 | 2 | 0 | 2 | 0 | 2 | 0 | 2 | 15 |
| | RET | 22 | 29 | 29 | 27 | 26 | 23 | 27 | 28 | 13 | 13 |
| | Non-RET | 0 | 0 | 0 | 2 | 3 | 2 | 1 | 1 | 13 | 11 |
| 4 | Minuend | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
| | RT | 815 | 825 | 1,029 | 1,060 | 850 | 957 | 951 | 1,520 | 1,582 | 1,532 |
| | SD | 218 | 209 | 313 | 503 | 241 | 272 | 258 | 961 | 1,372 | 827 |
| | % Error | 13 | 2 | 2 | 9 | 2 | 2 | 2 | 30 | 7 | 9 |
| | RET | 28 | 26 | 28 | 25 | 29 | 27 | 28 | 13 | 20 | 19 |
| | Non-RET | 0 | 1 | 1 | 4 | 0 | 0 | 0 | 12 | 9 | 11 |
| 5 | Minuend | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
| | RT | 880 | 859 | 1,015 | 992 | 1,009 | 856 | 1,113 | 1,450 | 1,696 | 1,400 |
| | SD | 315 | 212 | 536 | 455 | 416 | 290 | 392 | 882 | 1,023 | 591 |
| | % Error | 7 | 2 | 0 | 2 | 4 | 0 | 11 | 17 | 17 | 15 |
| | RET | 29 | 29 | 27 | 25 | 27 | 28 | 23 | 16 | 13 | 18 |
| | Non-RET | 0 | 0 | 2 | 4 | 2 | 1 | 6 | 10 | 10 | 9 |
| 6 | Minuend | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
| | RT | 881 | 940 | 1,048 | 1,201 | 1,126 | 1,337 | 1,102 | 1,614 | 1,764 | 1,428 |
| | SD | 305 | 284 | 292 | 461 | 513 | 709 | 467 | 1,179 | 944 | 527 |
| | % Error | 4 | 0 | 4 | 4 | 2 | 2 | 7 | 2 | 15 | 22 |
| | RET | 29 | 28 | 24 | 24 | 26 | 20 | 26 | 17 | 13 | 18 |
| | Non-RET | 0 | 0 | 6 | 6 | 1 | 6 | 2 | 7 | 14 | 8 |
| 7 | Minuend | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |
| | RT | 857 | 903 | 999 | 967 | 1,763 | 1,322 | 1,477 | 1,079 | 1,630 | 1,723 |
| | SD | 315 | 248 | 393 | 523 | 1,105 | 662 | 664 | 518 | 1,055 | 1,065 |
| | % Error | 0 | 2 | 2 | 2 | 4 | 9 | 11 | 9 | 22 | 17 |
| | RET | 28 | 30 | 22 | 15 | 16 | 18 | 19 | 23 | 16 | 11 |
| | Non-RET | 0 | 0 | 5 | 0 | 8 | 8 | 6 | 4 | 11 | 12 |
| 8 | Minuend | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 |
| | RT | 854 | 840 | 912 | 1,627 | 1,515 | 1,747 | 1,618 | 1,637 | 1,050 | 1,536 |
| | SD | 203 | 176 | 396 | 936 | 853 | 806 | 651 | 891 | 694 | 798 |
| | % Error | 4 | 0 | 0 | 26 | 15 | 9 | 13 | 9 | 7 | 11 |
| | RET | 27 | 27 | 26 | 12 | 20 | 15 | 16 | 18 | 26 | 15 |
| | Non-RET | 0 | 2 | 2 | 12 | 8 | 10 | 12 | 11 | 1 | 13 |
| 9 | Minuend | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 |
| | RT | 898 | 862 | 1,400 | 1,502 | 1,900 | 1,578 | 1,818 | 1,951 | 1,533 | 1,162 |
| | SD | 301 | 183 | 720 | 809 | 1,026 | 722 | 967 | 934 | 706 | 501 |
| | % Error | 0 | 0 | 9 | 15 | 15 | 17 | 13 | 26 | 7 | 4 |
| | RET | 28 | 29 | 21 | 21 | 17 | 19 | 17 | 15 | 14 | 26 |
| | Non-RET | 1 | 0 | 6 | 6 | 10 | 10 | 8 | 9 | 13 | 2 |

Note. The 100 cells depicted correspond to the 100 basic facts of subtraction; the minuend is the first entry in each cell, the subtrahend is the column header, and the remainder is the row header. Thus, the bottom-most right cell corresponds to the problem 18 − 9 = 9. The next three entries in each cell are the mean reaction time (RT), standard deviation, and error rate for that problem (1,162 ms, 501 ms, and 4%), averaged across all participants in Experiments 1 and 2 (N = 46). The final two entries in each cell are the reported number of retrieval (RET) trials for that problem and the reported number of non-RET trials, based on the 30 participants in the verbal report groups of Experiment 3, Session 1; because of errors and occasional nonreports, these values do not necessarily sum to 30.

Received April 2, 2002
Revision received April 7, 2003
Accepted April 11, 2003
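For readers who wish to work with the Appendix data computationally, the cell layout described in the Appendix note can be sketched as follows. This is only an illustrative sketch: the function name `basic_subtraction_facts` is hypothetical and not part of the original study materials. It relies solely on the relation stated in the note, namely that each cell's minuend equals its subtrahend (column) plus its remainder (row).

```python
def basic_subtraction_facts():
    """Enumerate the 100 basic facts as (minuend, subtrahend, remainder) tuples.

    Hypothetical helper: rows are remainders 0-9, columns are subtrahends 0-9,
    and the minuend of each cell is subtrahend + remainder.
    """
    facts = []
    for remainder in range(10):        # row header in the Appendix
        for subtrahend in range(10):   # column header in the Appendix
            minuend = subtrahend + remainder
            facts.append((minuend, subtrahend, remainder))
    return facts


facts = basic_subtraction_facts()
print(len(facts))   # 100
print(facts[-1])    # (18, 9, 9), the bottom-most right cell: 18 - 9 = 9
```

Iterating by remainder first and subtrahend second reproduces the row-by-row reading order of the Appendix, so the resulting list can be paired directly with the RT, SD, and error values in each cell.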