Biometrika, pp. 1–18

© 2011 Biometrika Trust

Printed in Great Britain

Advance Access publication on ???

Square-root Lasso: Pivotal Recovery of Sparse Signals via Conic Programming

BY A. BELLONI

Duke University, Fuqua School of Business, Decision Science Group. 100 Fuqua Street,

Durham, North Carolina 27708

abn5@duke.edu

V. CHERNOZHUKOV

MIT, Department of Economics and Operations Research Center. 52 Memorial Drive,

Cambridge, Massachusetts 02142

vchern@mit.edu

AND L. WANG

MIT, Department of Mathematics. 77 Massachusetts Avenue, Cambridge, Massachusetts 02139

liewang@math.mit.edu

SUMMARY

We propose a pivotal method for estimating high-dimensional sparse linear regression models, where the overall number of regressors p is large, possibly much larger than n, but only s regressors are significant. The method is a modification of Lasso, called square-root Lasso. The method neither relies on the knowledge of the standard deviation σ of the regression errors nor does it need to pre-estimate σ. Despite not knowing σ, square-root Lasso achieves near-oracle performance, attaining the prediction norm convergence rate $\sigma\sqrt{(s/n)\log p}$, and thus matching the performance of the Lasso that knows σ. Moreover, we show that these results are valid for both Gaussian and non-Gaussian errors, under some mild moment restrictions, using moderate deviation theory. Finally, we formulate the square-root Lasso as a solution to a convex conic programming problem. This formulation allows us to implement the estimator using efficient algorithmic methods, such as interior point and first order methods specialized to conic programming problems of a very large size.

Some key words: conic programming; high-dimensional sparse model; unknown sigma.

1. INTRODUCTION

We consider the following classical linear regression model for outcome $y_i$ given fixed p-dimensional regressors $x_i$:

$$y_i = x_i'\beta_0 + \sigma\epsilon_i, \quad i = 1, \ldots, n, \tag{1.1}$$

with independent and identically distributed noise $\epsilon_i$, $i = 1, \ldots, n$, having law $F_0$ such that

$$E_{F_0}[\epsilon_i] = 0 \quad \text{and} \quad E_{F_0}[\epsilon_i^2] = 1. \tag{1.2}$$


Vector $\beta_0 \in \mathbb{R}^p$ is the unknown true parameter value, and σ > 0 is the unknown standard deviation. The regressors $x_i$ are p-dimensional,

$$x_i = (x_{ij}, \ j = 1, \ldots, p),$$

where the dimension p is possibly much larger than the sample size n. Accordingly, the true parameter value $\beta_0$ lies in a very high-dimensional space $\mathbb{R}^p$. However, the key assumption that makes the estimation possible is the sparsity of $\beta_0$, which says that only s < n regressors are significant, namely

$$T = \mathrm{supp}(\beta_0) \ \text{has } s \text{ elements}. \tag{1.3}$$

The identity T of the significant regressors is unknown. Throughout, without loss of generality, we normalize

$$\frac{1}{n}\sum_{i=1}^{n} x_{ij}^2 = 1 \quad \text{for all } j = 1, \ldots, p. \tag{1.4}$$

In making asymptotic statements below we allow for s → ∞ and p → ∞ as n → ∞.

Clearly, the ordinary least squares estimator is not consistent for estimating $\beta_0$ in the setting with p > n. The Lasso estimator considered in Tibshirani (1996) can achieve consistency under mild conditions by penalizing the sum of absolute parameter values:

$$\bar\beta \in \arg\min_{\beta\in\mathbb{R}^p} \hat Q(\beta) + \frac{\lambda}{n}\cdot\|\beta\|_1, \tag{1.5}$$

where $\hat Q(\beta) := n^{-1}\sum_{i=1}^{n}(y_i - x_i'\beta)^2$ and $\|\beta\|_1 = \sum_{j=1}^{p}|\beta_j|$. The Lasso estimator is computationally attractive because it minimizes a structured convex function. Moreover, when errors are normal, $F_0 = N(0,1)$, and suitable design conditions hold, if one uses the penalty level

$$\lambda = \sigma\cdot c\cdot 2\sqrt{n}\cdot\Phi^{-1}(1-\alpha/2p) \tag{1.6}$$

for some numeric constant c > 1, this estimator achieves the near-oracle performance, namely

$$\|\bar\beta - \beta_0\|_2 \lesssim \sigma\sqrt{\frac{s\log(2p/\alpha)}{n}}, \tag{1.7}$$

with probability at least 1 − α. Remarkably, in (1.7) the overall number of regressors p shows up only through a logarithmic factor, so that if p is polynomial in n, the oracle rate is achieved up to a factor of $\sqrt{\log n}$. Recall that the oracle knows the identity T of significant regressors, and so it can achieve the rate of $\sigma\sqrt{s/n}$. The result above was demonstrated by Bickel et al. (2009), and closely related results were given in Meinshausen & Yu (2009), Zhang & Huang (2008), Candes & Tao (2007), and van de Geer (2008). Bunea et al. (2007), Zhao & Yu (2006), Huang et al. (2008), Lounici et al. (2010), Wainwright (2009), and Zhang (2009) contain other fundamental results obtained for related problems; please see Bickel et al. (2009) for further references.

Despite these attractive features, the Lasso construction (1.5)-(1.6) relies on the knowledge of the standard deviation of the noise σ. Estimation of σ is a non-trivial task when p is large, particularly when p ≫ n, and remains an outstanding practical and theoretical problem. The estimator we propose in this paper, the square-root Lasso, is a modification of Lasso that completely eliminates the need to know or pre-estimate σ. In addition, by using moderate deviation theory, we can dispense with the normality assumption $F_0 = \Phi$ under some conditions.


The Square-root Lasso estimator of $\beta_0$ is defined as the solution to the following optimization problem:

$$\hat\beta \in \arg\min_{\beta\in\mathbb{R}^p} \sqrt{\hat Q(\beta)} + \frac{\lambda}{n}\cdot\|\beta\|_1, \tag{1.8}$$

with the penalty level

$$\lambda = c\cdot\sqrt{n}\cdot\Phi^{-1}(1-\alpha/2p), \tag{1.9}$$

for some numeric constant c > 1. We stress that the penalty level in (1.9) is independent of σ, in contrast to (1.6). Furthermore, under reasonable conditions, the proposed penalty level (1.9) will also be valid asymptotically without imposing normality $F_0 = \Phi$, by virtue of moderate deviation theory (this side result is new and is of independent interest for other problems with ℓ1-penalization).
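As a minimal sketch of how the penalty level (1.9) could be evaluated, the snippet below uses only the Python standard library. The function name `asymptotic_penalty` and the sizes n = 100, p = 500 are illustrative assumptions; the defaults c = 1.1 and α = 0.05 follow the recommendations made later in the paper. The upper bound it prints is the Gaussian tail bound that the paper states in (2.13).

```python
from math import log, sqrt
from statistics import NormalDist


def asymptotic_penalty(n: int, p: int, alpha: float = 0.05, c: float = 1.1) -> float:
    """Penalty level (1.9): c * sqrt(n) * Phi^{-1}(1 - alpha / (2p))."""
    return c * sqrt(n) * NormalDist().inv_cdf(1.0 - alpha / (2.0 * p))


# Illustrative sizes only (assumed, not taken from the paper's experiments).
n, p, alpha, c = 100, 500, 0.05, 1.1
lam = asymptotic_penalty(n, p, alpha, c)

# Gaussian tail bound: Phi^{-1}(1 - alpha/2p) <= sqrt(2 log(2p/alpha)).
upper = c * sqrt(2.0 * n * log(2.0 * p / alpha))
print(f"lambda = {lam:.3f} (upper bound {upper:.3f}); sigma was never used")
```

Note that no estimate of σ enters the computation, which is the practical content of the pivotality claim.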

We will show that the Square-root Lasso estimator achieves the near-oracle rates of convergence under suitable design conditions and suitable conditions on $F_0$ that extend significantly beyond normality:

$$\|\hat\beta - \beta_0\|_2 \lesssim \sigma\sqrt{\frac{s\log(2p/\alpha)}{n}}, \tag{1.10}$$

with probability approaching 1 − α. Thus, this estimator matches the near-oracle performance of

Lasso, even though it does not know the noise level σ. This is the main result of this paper. It is

important to emphasize here that this result is not a direct consequence of the analogous result for

Lasso. Indeed, for a given value of the penalty level, the statistical structure of Square-root Lasso

is different from that of Lasso, and so our proofs are also different.

Importantly, despite taking the square root of the least squares criterion function, the problem (1.8) retains global convexity, making the estimator computationally attractive. In fact, the second main result of this paper is to formulate Square-root Lasso as a solution of a conic programming problem. Conic programming problems can be seen as linear programming problems with conic constraints, so they generalize canonical linear programming with non-negative orthant constraints. Conic programming inherits a rich set of theoretical properties and algorithmic methods from linear programming. In our case, the constraints take the form of a second order cone, leading to a particular, highly tractable form of conic programming. In turn, this formulation allows us to implement the estimator using efficient algorithmic methods, such as interior point methods, which provide polynomial-time bounds on computational time (Nesterov & Nemirovskii, 1993; Renegar, 2001), and modern first order methods that have been recently extended to handle conic programming problems of a very large size (Nesterov, 2005, 2007; Lan et al., 2011; Beck & Teboulle, 2009; Becker et al., 2010a).

NOTATION. In what follows, all true parameter values, such as $\beta_0$, σ, $F_0$, are implicitly indexed by the sample size n, but we omit the index in our notation whenever this does not cause confusion. The regressors $x_i$, i = 1, ..., n, are taken to be fixed throughout. This includes random design as a special case, where we condition on the realized values of the regressors. In making asymptotic statements, we assume that n → ∞ and $p = p_n \to \infty$, and we also allow for $s = s_n \to \infty$. The notation o(·) is defined with respect to n → ∞. We use the notation $(a)_+ = \max\{a, 0\}$, a ∨ b = max{a, b} and a ∧ b = min{a, b}. The ℓ2-norm is denoted by $\|\cdot\|_2$, and the ℓ∞-norm by $\|\cdot\|_\infty$. Given a vector $\delta \in \mathbb{R}^p$ and a set of indices T ⊂ {1, ..., p}, we denote by $\delta_T$ the vector in which $\delta_{Tj} = \delta_j$ if j ∈ T, $\delta_{Tj} = 0$ if j ∉ T. We also use $E_n[f] := E_n[f(z)] := \sum_{i=1}^{n} f(z_i)/n$. We use a ≲ b to denote a ≤ cb for some constant c > 0 that does not depend on n.


2. THE CHOICE OF PENALTY LEVEL

2·1. The General Principle and Heuristics

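The key quantity determining the choice of the penalty level for Square-root Lasso is the score, the gradient of $\sqrt{\hat Q}$ evaluated at the true parameter value $\beta = \beta_0$:

$$\tilde S := \nabla\sqrt{\hat Q}(\beta_0) = \frac{\nabla\hat Q(\beta_0)}{2\sqrt{\hat Q(\beta_0)}} = \frac{E_n[x\sigma\epsilon]}{\sqrt{E_n[\sigma^2\epsilon^2]}} = \frac{E_n[x\epsilon]}{\sqrt{E_n[\epsilon^2]}}.$$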

The score S̃ does not depend on the unknown standard deviation σ or the unknown true parameter

value β0 , and therefore, the score is pivotal with respect to these parameters. Under the classical

normality assumption, namely F0 = Φ, the score is in fact completely pivotal, conditional on

X. This means that in principle we know the distribution of S̃ in this case, or at least we can

compute it by simulation.
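The pivotality of the score with respect to σ can be checked numerically. The sketch below, under the assumption of a small simulated Gaussian design (sizes and seed are arbitrary), computes the score from residuals σε for two very different values of σ and confirms that the result is the same up to rounding. The helper name `score` is hypothetical.

```python
import random
from math import sqrt


def score(X, resid):
    """S~ = E_n[x * r] / sqrt(E_n[r^2]) for residuals r = y - x'beta0 = sigma * eps."""
    n, p = len(X), len(X[0])
    root_mean_sq = sqrt(sum(r * r for r in resid) / n)
    return [sum(X[i][j] * resid[i] for i in range(n)) / n / root_mean_sq for j in range(p)]


rng = random.Random(0)
n, p = 50, 8  # illustrative sizes (assumed)
X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
eps = [rng.gauss(0.0, 1.0) for _ in range(n)]

s_small = score(X, [0.1 * e for e in eps])   # sigma = 0.1
s_large = score(X, [25.0 * e for e in eps])  # sigma = 25

# sigma cancels between numerator and denominator, so the two scores agree.
max_gap = max(abs(a - b) for a, b in zip(s_small, s_large))
print(f"largest difference between the two scores: {max_gap:.2e}")
```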

The score S̃ summarizes the estimation noise in our problem, and we may set the penalty level

λ/n to dominate the noise. For efficiency reasons, we set λ/n at a smallest level that dominates

the estimation noise, namely we choose the smallest λ such that

$$\lambda > c\Lambda, \quad \text{for } \Lambda := n\|\tilde S\|_\infty, \tag{2.11}$$

with a high probability, say 1 − α, where Λ is the maximal score scaled by n, and c > 1 is a

theoretical constant of Bickel et al. (2009) to be stated later. We note that the principle of setting

λ to dominate the score of the criterion function is motivated by Bickel et al. (2009)’s choice

of penalty level for Lasso. In fact, this is a general principle that carries over to other convex

problems, including ours, and that leads to the optimal, near-oracle performance of other ℓ1-penalized estimators.

In the case of Square-root Lasso the maximal score is pivotal, so the penalty level in (2.11) must be pivotal too. In fact, we used the square-root transformation in the Square-root Lasso formulation (1.8) precisely to guarantee this pivotality. In contrast, for Lasso, the score $S = \nabla\hat Q(\beta_0) = 2\sigma\cdot E_n[x\epsilon]$ is obviously non-pivotal, since it depends on σ. Thus, the penalty level for Lasso must be non-pivotal. These theoretical differences translate into obvious practical differences: in Lasso, we need to guess conservative upper bounds σ̄ on σ, or we need to use preliminary estimation of σ using a pilot Lasso, which uses a conservative upper bound σ̄ on σ. In Square-root Lasso, none of the above is needed. Finally, we note that the use of the pivotality principle for constructing the penalty level is also fruitful in other problems with pivotal scores, for example, median regression (Belloni & Chernozhukov, 2010).

The rule (2.11) is not practical yet, since we do not observe Λ directly. However, we can

proceed as follows.

1. When we know the distribution of errors exactly, e.g. F0 = Φ, we propose to set λ as c times

the (1 − α)-quantile of Λ given X and F0 . This choice of the penalty level precisely implements (2.11), and we can easily compute it by simulation.

2. When we do not know $F_0$ exactly, but instead know that $F_0$ is an element of some family $\mathcal{F}$, we can rely on either finite-sample or asymptotic upper bounds on quantiles of Λ given X. For example, as mentioned in the introduction, under some mild conditions on $\mathcal{F}$, we can use $c\sqrt{n}\,\Phi^{-1}(1-\alpha/2p)$ as a valid asymptotic choice.

What follows below elaborates these approaches more formally.
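The first approach, computing the (1 − α)-quantile of Λ given X by simulation, can be sketched as follows for Gaussian errors. This is only an illustration under stated assumptions: the design is a simulated Gaussian matrix, the sizes, seeds, and number of replications are arbitrary, and the helper names are hypothetical. The asymptotic value $\sqrt{n}\,\Phi^{-1}(1-\alpha/2p)$ is printed alongside for comparison.

```python
import random
from math import ceil, sqrt
from statistics import NormalDist


def normalize_columns(X):
    """Rescale columns so that (1/n) * sum_i x_ij^2 = 1, as in (1.4)."""
    n, p = len(X), len(X[0])
    scale = [sqrt(sum(X[i][j] ** 2 for i in range(n)) / n) for j in range(p)]
    return [[X[i][j] / scale[j] for j in range(p)] for i in range(n)]


def max_scaled_score(X, eps):
    """Lambda = n * max_j |E_n[x_j eps]| / sqrt(E_n[eps^2])."""
    n, p = len(X), len(X[0])
    root_mean_sq = sqrt(sum(e * e for e in eps) / n)
    return max(abs(sum(X[i][j] * eps[i] for i in range(n))) for j in range(p)) / root_mean_sq


def exact_quantile(X, alpha=0.05, reps=500, seed=0):
    """Empirical (1 - alpha)-quantile of Lambda given X under N(0, 1) errors."""
    rng = random.Random(seed)
    n = len(X)
    draws = sorted(
        max_scaled_score(X, [rng.gauss(0.0, 1.0) for _ in range(n)]) for _ in range(reps)
    )
    return draws[min(reps - 1, ceil((1.0 - alpha) * reps) - 1)]


rng = random.Random(1)
n, p, alpha = 40, 20, 0.05  # illustrative sizes (assumed)
X = normalize_columns([[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)])

q_exact = exact_quantile(X, alpha)
q_asym = sqrt(n) * NormalDist().inv_cdf(1.0 - alpha / (2.0 * p))
print(f"simulated quantile = {q_exact:.2f}, asymptotic value = {q_asym:.2f}")
```

Multiplying either number by the constant c then gives a candidate penalty level λ.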

Finally, before proceeding to formal constructions, it is useful to mention some simple heuristics for the principle (2.11). These heuristics arise from considering the simplest case, where


none of the regressors are significant so that $\beta_0 = 0$. We want our estimator to perform at a near-oracle level in all cases, including this case, but here the oracle estimator β̃ knows that none of the regressors are significant, and so it sets $\tilde\beta = \beta_0 = 0$. We also want $\hat\beta = \beta_0 = 0$ in this case, at least with a high probability 1 − α. From the subgradient optimality conditions of (1.8), in order for this to be true we must have

$$-\tilde S_j + \lambda/n > 0 \quad \text{and} \quad \tilde S_j + \lambda/n > 0, \quad \text{for all } 1 \le j \le p.$$

We can only guarantee this by setting the penalty level λ/n such that $\lambda > n\max_{1\le j\le p}|\tilde S_j| = n\|\tilde S\|_\infty$ with probability at least 1 − α. This is precisely the rule (2.11) appearing above, and, as it turns out, this rule delivers near-oracle performance more generally, when $\beta_0 \neq 0$.
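The heuristic above can be illustrated with a small check of the subgradient condition at β = 0. In the sketch below, which assumes simulated data with $\beta_0 = 0$ and an arbitrary σ = 3, the function `zero_is_optimal` (a hypothetical name) tests whether λ/n dominates every coordinate of the gradient of $\sqrt{\hat Q}$ at zero. The code uses the weak inequality, which is what optimality of zero requires; the strict version in the text implies it.

```python
import random
from math import sqrt


def scaled_max_score_at_zero(X, y):
    """n * max_j |E_n[x_j y]| / sqrt(E_n[y^2]); equals Lambda when beta0 = 0."""
    n, p = len(X), len(X[0])
    root_q = sqrt(sum(v * v for v in y) / n)  # sqrt(Q_hat(0))
    return max(abs(sum(X[i][j] * y[i] for i in range(n))) for j in range(p)) / root_q


def zero_is_optimal(X, y, lam):
    """Subgradient condition for beta_hat = 0 in (1.8): lam >= n * ||grad sqrt(Q_hat)(0)||_inf."""
    return lam >= scaled_max_score_at_zero(X, y)


rng = random.Random(2)
n, p, sigma = 30, 6, 3.0  # illustrative values (assumed)
X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
y = [sigma * rng.gauss(0.0, 1.0) for _ in range(n)]  # beta0 = 0, so y = sigma * eps

Lam = scaled_max_score_at_zero(X, y)
print("lambda slightly above Lambda:", zero_is_optimal(X, y, 1.01 * Lam))
print("lambda slightly below Lambda:", zero_is_optimal(X, y, 0.99 * Lam))
```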

2·2. The Formal Choice of Penalty Level and Its Properties

In order to describe our choice of λ formally, define for 0 < α < 1

$$\Lambda(1-\alpha \mid X, F) := (1-\alpha)\text{-quantile of } \Lambda \mid X, F_0 = F, \tag{2.12}$$

$$\Lambda(1-\alpha) := \sqrt{n}\,\Phi^{-1}(1-\alpha/2p) \le \sqrt{2n\log(2p/\alpha)}. \tag{2.13}$$

We can compute the first quantity by simulation.

When F0 = Φ, we can set λ according to either of the following options:

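$$\begin{array}{ll} \text{exact} & \lambda = c\Lambda(1-\alpha \mid X, \Phi) \\ \text{asymptotic} & \lambda = c\Lambda(1-\alpha) := c\sqrt{n}\,\Phi^{-1}(1-\alpha/2p) \end{array} \tag{2.14}$$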

The parameter 1 − α is a confidence level which guarantees near-oracle performance with probability 1 − α; we recommend 1 − α = 95%. The constant c > 1 is a theoretical constant of Bickel

et al. (2009), which is needed to guarantee a regularization event introduced in the next section;

we recommend c = 1.1. The options in (2.14) are valid either in finite or large samples under

the conditions stated below. They are also supported by the finite-sample experiments reported

in Section 5. Moreover, we recommend using the exact option over the asymptotic option, because by construction the former is better tailored to the given sample size n and design matrix

X. Nonetheless, the asymptotic option is easier to compute. In the next section, our theoretical results show that the options in (2.14) lead to near-oracle rates of convergence.

For the asymptotic results, we shall impose the following growth condition:

CONDITION G. We have that $\log^2(p/\alpha)\log(1/\alpha) = o(n)$ and p/α → ∞ as n → ∞.

The following lemma shows that the exact and asymptotic options in (2.14) implement the

regularization event λ > cΛ in the Gaussian case with the exact or asymptotic probability 1 − α

respectively. The lemma also bounds the magnitude of the penalty level for the exact option,

which will be useful for stating bounds on the estimation error later. We assume throughout the

paper that 0 < α < 1 is bounded away from 1, but we allow for α to approach 0 as n grows.

LEMMA 1 (PROPERTIES OF THE PENALTY LEVEL IN THE GAUSSIAN CASE). Suppose that $F_0 = \Phi$. (i) The exact option in (2.14) implements λ > cΛ with probability at least 1 − α. (ii) Assume that p/α > 8. For any $1 < \ell < \sqrt{n/\log(1/\alpha)}$, the asymptotic option in (2.14) implements λ > cΛ with probability at least

$$1 - \alpha\cdot\tau, \quad \text{with} \quad \tau = \left(1 + \frac{1}{\log(p/\alpha)}\right)\frac{\exp\left(2\log(2p/\alpha)\,\ell\sqrt{\log(1/\alpha)/n}\right)}{1 - \ell\sqrt{\log(1/\alpha)/n}} - \alpha^{\ell^2/4 - 1},$$

where under condition G we have τ = 1 + o(1).


(iii) Assume that p/α > 8 and n > 4 log(2/α). Then we have

$$\Lambda(1-\alpha \mid X, \Phi) \le \nu\cdot\Lambda(1-\alpha) \le \nu\cdot\sqrt{2n\log(2p/\alpha)}, \quad \text{with} \quad \nu = \frac{\sqrt{1 + 2/\log(2p/\alpha)}}{1 - 2\sqrt{\log(2/\alpha)/n}},$$

where under condition G we have ν = 1 + o(1).
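To get a feel for the factor ν in part (iii), the following sketch simply evaluates its formula along a hypothetical sequence with p = 2n and α = 0.05 (both choices are assumptions made for illustration). The function name `nu_factor` is also hypothetical. The values printed shrink toward 1 as n grows, in line with ν = 1 + o(1).

```python
from math import log, sqrt


def nu_factor(n: int, p: int, alpha: float) -> float:
    """nu = sqrt(1 + 2/log(2p/alpha)) / (1 - 2*sqrt(log(2/alpha)/n)) from Lemma 1 (iii)."""
    if not (p / alpha > 8 and n > 4 * log(2.0 / alpha)):
        raise ValueError("Lemma 1 (iii) assumes p/alpha > 8 and n > 4 log(2/alpha)")
    return sqrt(1.0 + 2.0 / log(2.0 * p / alpha)) / (1.0 - 2.0 * sqrt(log(2.0 / alpha) / n))


alpha = 0.05
sizes = [100, 10_000, 1_000_000]  # illustrative sample sizes (assumed)
values = [nu_factor(n, 2 * n, alpha) for n in sizes]
for n, v in zip(sizes, values):
    print(f"n = {n:>9}, p = {2 * n:>9}: nu = {v:.4f}")
```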

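In the non-normal case, we can set λ according to one of the following options:

$$\begin{array}{ll} \text{exact} & \lambda = c\Lambda(1-\alpha \mid X, F) \\ \text{semi-exact} & \lambda = c\max_{F\in\mathcal{F}}\Lambda(1-\alpha \mid X, F) \\ \text{asymptotic} & \lambda = c\Lambda(1-\alpha) := c\sqrt{n}\,\Phi^{-1}(1-\alpha/2p) \end{array} \tag{2.15}$$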

We set the confidence level 1 − α and the constant c > 1 as before. The exact option is applicable when we know that F0 = F , as for example in the previous normal case. The semi-exact

option is applicable when we know that F0 is a member of some family F, or that the family F

will give a more conservative penalty level. We also assume that F in (2.15) is either finite or,

more generally, that the maximum in (2.15) is well defined. For example, in applications, where

the regression errors ²0i s are thought of having a potentially wide range of tail behavior, it is

useful to set F = {t(4), t(8), t(∞)} where t(k) denotes the Student distribution with k degrees

of freedom. As stated previously, we can compute the quantiles Λ(1 − α|X, F ) by simulation.

Therefore, we can implement the exact option easily, and if F is not too large, we can also implement the semi-exact option easily. Finally, the asymptotic option is applicable when F0 and

design X satisfy some moment conditions stated below. We recommend using either the exact

or semi-exact options, when applicable, since they are better tailored to the given design X and

sample size n. However, the asymptotic option also has attractive features, namely it is trivial

to compute, and it often provides a very similar, albeit slightly more conservative, penalty level

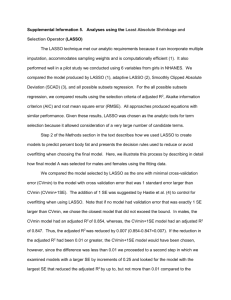

as the exact or semi-exact options do; indeed, Fig. 1 provides a numerical illustration of this

phenomenon.

Fig. 1. The asymptotic bound Λ(0.95) and realized values of Λ(0.95|X, F ) sorted in increasing order, shown for

100 realizations of X = [x1 , ..., xn ]0 , and for three cases of error distribution, F = t(4), t(8), and t(∞). For each

realization, we have generated X = [x1 , ..., xn ]0 as independent and identically distributed draws of x ∼ N (0, Σ)

with Σjk = (1/2)|j−k| .
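A rough sketch of the semi-exact option for the family {t(4), t(8), t(∞)} is given below. It is an illustration rather than the authors' code: the sizes, seeds, and replication count are assumptions, and the helper names are hypothetical. The design is drawn with correlation $(1/2)^{|j-k|}$ through an AR(1) recursion across columns, mirroring the setup in the caption of Fig. 1. The simulated errors are not rescaled to unit variance, since Λ is invariant to the scale of the errors.

```python
import random
from math import ceil, sqrt


def student_t(rng, df):
    """Draw from Student t(df); df=None stands for t(infinity), i.e. N(0, 1)."""
    z = rng.gauss(0.0, 1.0)
    if df is None:
        return z
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-square with df degrees of freedom
    return z / sqrt(chi2 / df)


def ar1_row(rng, p, rho=0.5):
    """One draw of x ~ N(0, Sigma) with Sigma_jk = rho^|j-k| via an AR(1) recursion."""
    row = [rng.gauss(0.0, 1.0)]
    for _ in range(p - 1):
        row.append(rho * row[-1] + sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0))
    return row


def normalize_columns(X):
    """Rescale columns so that (1/n) * sum_i x_ij^2 = 1, as in (1.4)."""
    n, p = len(X), len(X[0])
    scale = [sqrt(sum(X[i][j] ** 2 for i in range(n)) / n) for j in range(p)]
    return [[X[i][j] / scale[j] for j in range(p)] for i in range(n)]


def quantile_of_Lambda(X, df, alpha=0.05, reps=300, seed=0):
    """Empirical (1 - alpha)-quantile of Lambda given X when errors follow t(df)."""
    rng = random.Random(seed)
    n, p = len(X), len(X[0])
    draws = []
    for _ in range(reps):
        eps = [student_t(rng, df) for _ in range(n)]
        root_mean_sq = sqrt(sum(e * e for e in eps) / n)
        top = max(abs(sum(X[i][j] * eps[i] for i in range(n))) for j in range(p))
        draws.append(top / root_mean_sq)
    draws.sort()
    return draws[min(reps - 1, ceil((1.0 - alpha) * reps) - 1)]


rng = random.Random(4)
n, p, c = 30, 10, 1.1  # illustrative sizes (assumed); c = 1.1 as recommended in the text
X = normalize_columns([ar1_row(rng, p) for _ in range(n)])

family = {"t(4)": 4, "t(8)": 8, "t(inf)": None}
quantiles = {name: quantile_of_Lambda(X, df) for name, df in family.items()}
lam_semi_exact = c * max(quantiles.values())
print(quantiles, lam_semi_exact)
```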


For the asymptotic results in the non-normal case, we shall impose the following moment

conditions.

CONDITION M. There exist finite constants q > 2 and $n_0 > 0$ such that the following holds: The law $F_0$ is an element of the family $\mathcal{F}$ such that $\sup_{n > n_0, F\in\mathcal{F}} E_F[|\epsilon|^q] < \infty$; the design X obeys $\sup_{n > n_0, 1\le j\le p} E_n[|x_j|^q] < \infty$.

We also have to restrict the growth of p relative to n, and we also assume that α is either bounded away from zero or approaches zero not too rapidly.

CONDITION R. We have that $p \le \alpha n^{\eta(q-2)/2}/2$ for some constant 0 < η < 1, and $\alpha^{-1} = o\left(n^{[(q/2-1)\wedge(q/4)]\vee(q/2-2)}/(\log n)^{q/2}\right)$ as n → ∞, where q > 2 is given in Condition M.

The following lemma shows that the options (2.15) implement the regularization event λ > cΛ in the non-Gaussian case with exact or asymptotic probability 1 − α. In particular, conditions R and M, through relations (A12) and (A14), imply that for any fixed c > 0,

$$\mathrm{pr}(|E_n[\epsilon^2] - 1| > c \mid F_0 = F) = o(\alpha). \tag{2.16}$$

The lemma also bounds the magnitude of the penalty level λ for the exact and semi-exact options,

which is useful for stating bounds on the estimation error later.

LEMMA 2 (PROPERTIES OF THE PENALTY LEVEL IN THE NON-GAUSSIAN CASE). (i) The exact option in (2.15) implements λ > cΛ with probability at least 1 − α, if $F_0 = F$. (ii) The semi-exact option in (2.15) implements λ > cΛ with probability at least 1 − α, if either $F_0 \in \mathcal{F}$ or $\Lambda(1-\alpha \mid X, F) > \Lambda(1-\alpha \mid X, F_0)$ for some $F \in \mathcal{F}$. Suppose further that conditions M and R hold. Then, (iii) the asymptotic option in (2.15) implements λ > cΛ with probability at least 1 − α + o(α), and (iv) the magnitude of the penalty level of the exact and semi-exact options in (2.15) satisfies the inequality

$$\max_{F\in\mathcal{F}}\Lambda(1-\alpha \mid X, F) \le \Lambda(1-\alpha)(1+o(1)) \le \sqrt{2n\log(2p/\alpha)}\,(1+o(1)).$$

The lemma says that all of the asymptotic conclusions reached in Lemma 1 about the penalty

level in the Gaussian case continue to hold in the non-Gaussian case, albeit under more restricted

conditions on the growth of p relative to n. The growth condition depends on the number of

bounded moments q of regressors and the error terms – the higher q is, the more rapidly p can

grow with n. We emphasize that the condition given above is only one possible set of sufficient

conditions that guarantees the Gaussian-like conclusions of Lemma 2; in a companion work, we

are providing a more detailed, more exhaustive set of sufficient conditions.

3. FINITE-SAMPLE AND ASYMPTOTIC BOUNDS ON THE ESTIMATION ERROR OF SQUARE-ROOT LASSO

3·1. Conditions on the Gram Matrix

We shall state convergence rates for $\hat\delta := \hat\beta - \beta_0$ in the Euclidean norm $\|\delta\|_2 = \sqrt{\delta'\delta}$ and also in the prediction norm

$$\|\delta\|_{2,n} := \sqrt{E_n[(x'\delta)^2]} = \sqrt{\delta' E_n[xx']\delta}.$$

The latter norm directly depends on the Gram matrix $E_n[xx']$. The choice of penalty level described in Section 2 ensures the regularization event λ > cΛ, with probability 1 − α or with


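probability approaching 1 − α. This event will in turn imply another regularization event, namely that $\hat\delta$ belongs to the restricted set $\Delta_{\bar c}$, where

$$\Delta_{\bar c} = \{\delta\in\mathbb{R}^p : \|\delta_{T^c}\|_1 \le \bar c\,\|\delta_T\|_1, \ \delta \neq 0\}, \quad \text{for} \quad \bar c := \frac{c+1}{c-1}.$$

Accordingly, we will state the bounds on estimation errors $\|\hat\delta\|_{2,n}$ and $\|\hat\delta\|_2$ in terms of the following restricted eigenvalues of the Gram matrix $E_n[xx']$:

$$\kappa_{\bar c} := \min_{\delta\in\Delta_{\bar c}}\frac{\sqrt{s}\,\|\delta\|_{2,n}}{\|\delta_T\|_1} \quad \text{and} \quad \tilde\kappa_{\bar c} := \min_{\delta\in\Delta_{\bar c}}\frac{\|\delta\|_{2,n}}{\|\delta\|_2}. \tag{3.17}$$

These restricted eigenvalues can depend on n and T, but we suppress the dependence in our notation.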
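The objects just defined can be made concrete with a toy computation. The sketch below, which assumes a tiny design with orthogonal normalized columns and a hypothetical support T = {0}, checks membership in the restricted set and evaluates the two ratios from (3.17) at a single δ. Because the restricted eigenvalues are minima over the whole set, each ratio at one δ is only an upper bound on the corresponding eigenvalue. All function names are illustrative.

```python
from math import sqrt


def prediction_norm(X, delta):
    """||delta||_{2,n} = sqrt(E_n[(x'delta)^2])."""
    n = len(X)
    return sqrt(sum(sum(x * d for x, d in zip(row, delta)) ** 2 for row in X) / n)


def in_restricted_set(delta, T, c_bar):
    """Membership in Delta_cbar: ||delta_{T^c}||_1 <= c_bar * ||delta_T||_1 and delta != 0."""
    l1_on = sum(abs(d) for j, d in enumerate(delta) if j in T)
    l1_off = sum(abs(d) for j, d in enumerate(delta) if j not in T)
    return any(d != 0 for d in delta) and l1_off <= c_bar * l1_on


def ratios(X, delta, T):
    """The two ratios minimised in (3.17), evaluated at one delta."""
    pn = prediction_norm(X, delta)
    l1_on = sum(abs(d) for j, d in enumerate(delta) if j in T)
    l2 = sqrt(sum(d * d for d in delta))
    return sqrt(len(T)) * pn / l1_on, pn / l2


# Toy design with orthogonal, normalised columns, so E_n[xx'] is the identity.
X = [[1.0, 1.0], [1.0, -1.0], [1.0, 1.0], [1.0, -1.0]]
T = {0}                    # hypothetical support, s = 1
c = 1.1
c_bar = (c + 1) / (c - 1)  # about 21

delta = [1.0, 0.5]
r_l1, r_l2 = ratios(X, delta, T)
print(in_restricted_set(delta, T, c_bar), r_l1, r_l2)
print(in_restricted_set([0.01, 1.0], T, c_bar))
```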

In making simplified asymptotic statements, such as the ones appearing in the introduction,

we will often invoke the following condition on the restricted eigenvalues:

CONDITION RE. There exist finite constants $n_0 > 0$ and κ > 0, such that the restricted eigenvalues obey $\kappa_{\bar c} > \kappa$ and $\tilde\kappa_{\bar c} > \kappa$ for all $n > n_0$.

The restricted eigenvalues (3.17) are simply variants of the restricted eigenvalues introduced in Bickel et al. (2009). Even though the minimal eigenvalue of the Gram matrix $E_n[xx']$ is zero whenever p > n, Bickel et al. (2009) show that its restricted eigenvalues can in fact be bounded away from zero. Bickel et al. (2009) and others provide a detailed set of sufficient primitive conditions for this, which are sufficiently general to cover many fixed and random designs of interest, and which allow for reasonably general (though not arbitrary) forms of correlation between regressors. This makes conditions on restricted eigenvalues useful for many applications. Consequently, we take the restricted eigenvalues as primitive quantities and Condition RE as a primitive condition. Note also that the restricted eigenvalues are tightly tailored to the ℓ1-penalized estimation problem. Indeed, $\kappa_{\bar c}$ is the modulus of continuity between the estimation norm and the penalty-related term computed over the restricted set, containing the deviation of the estimator from the true value; and $\tilde\kappa_{\bar c}$ is the modulus of continuity between the estimation norm and the Euclidean norm over this set.

It is useful to recall at least one simple sufficient condition for bounded restricted eigenvalues. If, for m = s log n, the m-sparse eigenvalues of the Gram matrix $E_n[xx']$ are bounded away from zero and from above for all $n > n_0$:

$$0 < k \le \min_{\|\delta_{T^c}\|_0 \le m,\ \delta\neq 0}\frac{\|\delta\|_{2,n}^2}{\|\delta\|_2^2} \le \max_{\|\delta_{T^c}\|_0 \le m,\ \delta\neq 0}\frac{\|\delta\|_{2,n}^2}{\|\delta\|_2^2} \le k' < \infty, \tag{3.18}$$

for some positive finite constants k, k', and $n_0$, then Condition RE holds once n is sufficiently large. In words, (3.18) only requires the eigenvalues of certain "small" m × m submatrices of the large p × p Gram matrix to be bounded from above and below. The sufficiency of (3.18) for Condition RE follows from Bickel et al. (2009), and many sufficient conditions for (3.18) are provided by Bickel et al. (2009), Zhang & Huang (2008), and Meinshausen & Yu (2009).

3·2. Finite-Sample and Asymptotic Bounds on Estimation Error

We now present the main result of this paper. Recall that we are not assuming that the noise is

(sub) Gaussian, nor that σ is known.

THEOREM 1 (FINITE SAMPLE BOUNDS ON ESTIMATION ERROR). Consider the model described in (1.1)-(1.4). Let c > 1, $\bar c = (c+1)/(c-1)$, and suppose that λ obeys the growth restriction $\lambda\sqrt{s} \le n\kappa_{\bar c}\rho$, for some ρ < 1. If λ > cΛ, then $\hat\delta = \hat\beta - \beta_0$ obeys

$$\|\hat\delta\|_{2,n} \le \frac{2(1+1/c)}{1-\rho^2}\,\sigma\sqrt{E_n[\epsilon^2]}\,\frac{\lambda\sqrt{s}}{n\kappa_{\bar c}}.$$

In particular, if λ > cΛ with probability at least 1 − α, and $E_n[\epsilon^2] \le \omega^2$ with probability at least 1 − γ, then with probability at least 1 − α − γ, $\hat\delta = \hat\beta - \beta_0$ obeys

$$\|\hat\delta\|_{2,n} \le \frac{2(1+1/c)}{1-\rho^2}\,\sigma\omega\,\frac{\lambda\sqrt{s}}{n\kappa_{\bar c}} \quad \text{and} \quad \|\hat\delta\|_2 \le \frac{2(1+1/c)}{1-\rho^2}\,\sigma\omega\,\frac{\lambda\sqrt{s}}{n\kappa_{\bar c}\tilde\kappa_{\bar c}}.$$

This result provides a finite-sample bound for $\hat\delta$ that is similar to that for the Lasso estimator that knows σ, and this result leads to the same rates of convergence as in the case of Lasso. It is important to note some differences. First, for a given value of the penalty level, the statistical structure of Square-root Lasso is different from that of Lasso, and so our proof of Theorem 1 is also different. Second, in the proof we have to invoke the additional growth restriction, $\lambda\sqrt{s} < n\kappa_{\bar c}$, which is not present in the Lasso analysis that treats σ as known. We may think of this restriction as the price of not knowing σ in our framework. However, this additional condition is very mild and holds under typical conditions if (s/n) log(p/α) → 0, as the corollaries below indicate, and this condition is independent of the noise level σ. In comparison, for the Lasso estimator, if we treat σ as unknown and attempt to estimate σ consistently using a pilot Lasso, which uses an upper bound σ̄ > σ instead of σ, a similar growth condition σ̄(s/n) log(p/α) → 0 would have to be imposed, but this condition depends on the noise level σ via the inequality σ̄ > σ. It is also clear that this growth condition is more restrictive than our growth condition (s/n) log(p/α) → 0 when σ̄ is large.

Theorem 1 immediately implies various finite-sample bounds by combining the finite-sample bounds on λ specified in the previous section with the finite-sample bounds on quantiles of the sample average $E_n[\epsilon^2]$. The theorem also implies various asymptotic bounds when combined with Lemma 1, Lemma 2, and the application of a law of large numbers for arrays.

We proceed to note the following immediate corollary of the result.

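COROLLARY 1 (FINITE SAMPLE BOUNDS IN THE GAUSSIAN CASE). Consider the model described in (1.1)-(1.4). Suppose further that $F_0 = \Phi$, λ is chosen according to the exact option in (2.14), p/α > 8 and n > 4 log(2/α). Let c > 1, $\bar c = (c+1)/(c-1)$, $\nu = \sqrt{1 + 2/\log(2p/\alpha)}/(1 - 2\sqrt{\log(2/\alpha)/n})$, and for any ℓ such that $1 < \ell < \sqrt{n/\log(1/\alpha)}$, set $\omega^2 = 1 + \ell(\log(1/\alpha)/n)^{1/2} + \ell^2\log(1/\alpha)/(2n)$ and $\gamma = \alpha^{\ell^2/4}$. Then, if $c\nu\sqrt{2s\log(2p/\alpha)} \le \sqrt{n}\,\kappa_{\bar c}\,\rho$ for some ρ < 1, with probability at least 1 − α − γ we have that $\hat\delta = \hat\beta - \beta_0$ obeys

$$\|\hat\delta\|_{2,n} \le 2(1+c)\,\frac{\nu\omega}{1-\rho^2}\,\frac{\sigma\sqrt{2s\log(2p/\alpha)}}{\sqrt{n}\,\kappa_{\bar c}} \quad \text{and} \quad \|\hat\delta\|_2 \le 2(1+c)\,\frac{\nu\omega}{1-\rho^2}\,\frac{\sigma\sqrt{2s\log(2p/\alpha)}}{\sqrt{n}\,\kappa_{\bar c}\tilde\kappa_{\bar c}}.$$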
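The quantities in Corollary 1 can be evaluated numerically once the restricted eigenvalues are supplied. The sketch below is a worked evaluation under explicit assumptions: the values of the restricted eigenvalues (`kappa`, `kappa_tilde`), the sample sizes, and ℓ = 2 are all hypothetical inputs, and ρ is taken to be the smallest value satisfying the growth restriction, which gives the tightest bound the corollary allows.

```python
from math import log, sqrt


def corollary1_bounds(n, p, s, alpha, c, ell, sigma, kappa, kappa_tilde):
    """Evaluate nu, omega, gamma, rho and the two error bounds of Corollary 1."""
    if not (p / alpha > 8 and n > 4 * log(2.0 / alpha)):
        raise ValueError("requires p/alpha > 8 and n > 4 log(2/alpha)")
    if not (1 < ell < sqrt(n / log(1.0 / alpha))):
        raise ValueError("requires 1 < ell < sqrt(n / log(1/alpha))")
    root_term = sqrt(2.0 * s * log(2.0 * p / alpha))
    nu = sqrt(1.0 + 2.0 / log(2.0 * p / alpha)) / (1.0 - 2.0 * sqrt(log(2.0 / alpha) / n))
    omega = sqrt(1.0 + ell * sqrt(log(1.0 / alpha) / n) + ell ** 2 * log(1.0 / alpha) / (2.0 * n))
    gamma = alpha ** (ell ** 2 / 4.0)
    # Smallest rho with c * nu * sqrt(2 s log(2p/alpha)) <= sqrt(n) * kappa * rho.
    rho = c * nu * root_term / (sqrt(n) * kappa)
    if rho >= 1:
        raise ValueError("growth restriction fails: rho must be below 1")
    pred = 2.0 * (1.0 + c) * nu * omega / (1.0 - rho ** 2) * sigma * root_term / (sqrt(n) * kappa)
    return {"nu": nu, "omega": omega, "gamma": gamma, "rho": rho,
            "prediction_bound": pred, "l2_bound": pred / kappa_tilde}


# Hypothetical inputs: kappa and kappa_tilde are assumed, not derived from a design.
out = corollary1_bounds(n=10_000, p=20_000, s=5, alpha=0.05, c=1.1,
                        ell=2.0, sigma=1.0, kappa=1.0, kappa_tilde=1.0)
out_2sigma = corollary1_bounds(n=10_000, p=20_000, s=5, alpha=0.05, c=1.1,
                               ell=2.0, sigma=2.0, kappa=1.0, kappa_tilde=1.0)
print(out)
```

Only the final bounds scale with σ; the penalty level and the growth restriction do not involve it.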

The corollary above derives finite-sample bounds for the classical Gaussian case. It is instructive to state an asymptotic version of the corollary above.

COROLLARY 2 (ASYMPTOTIC BOUNDS IN THE GAUSSIAN CASE). Consider the model described in (1.1)-(1.4) and suppose that $F_0 = \Phi$, Conditions RE and G hold, and (s/n) log(p/α) → 0. Let λ be specified according to either the exact or asymptotic option in (2.14). Fix any µ > 1. Then, for n large enough, $\hat\delta = \hat\beta - \beta_0$ obeys

$$\|\hat\delta\|_{2,n} \le 2(1+c)\mu\,\frac{\sigma}{\kappa}\sqrt{\frac{2s\log(2p/\alpha)}{n}} \quad \text{and} \quad \|\hat\delta\|_2 \le 2(1+c)\mu\,\frac{\sigma}{\kappa^2}\sqrt{\frac{2s\log(2p/\alpha)}{n}},$$


with probability 1 − α(1 + o(1)).

A similar corollary applies in the non-Gaussian case.

COROLLARY 3 (ASYMPTOTIC BOUNDS IN THE NON-GAUSSIAN CASE). Consider the model described in (1.1)-(1.4). Suppose that Conditions RE, M, and R hold, and (s/n) log(p/α) → 0. Let λ be specified according to the asymptotic, exact, or semi-exact option in (2.15). Fix any µ > 1. Then, for n large enough, $\hat\delta = \hat\beta - \beta_0$ obeys

$$\|\hat\delta\|_{2,n} \le 2(1+c)\mu\,\frac{\sigma}{\kappa}\sqrt{\frac{2s\log(2p/\alpha)}{n}} \quad \text{and} \quad \|\hat\delta\|_2 \le 2(1+c)\mu\,\frac{\sigma}{\kappa^2}\sqrt{\frac{2s\log(2p/\alpha)}{n}},$$

with probability at least 1 − α(1 + o(1)).

As in Lemma 2, in order to achieve Gaussian-like asymptotic conclusions in the non-Gaussian

case, we impose stronger conditions on the growth of p relative to n. The result exploits that

these conditions imply that the average square of the noise concentrates around its expectation

as stated in (2.16).

4. COMPUTATIONAL PROPERTIES OF SQUARE-ROOT LASSO

The second main result of this paper is to formulate the Square-root Lasso as a conic programming problem, with constraints given by a second order cone (also informally known as the ice-cream cone). This formulation allows us to implement the estimator using efficient algorithmic methods, such as interior point methods, which provide polynomial-time bounds on computational time (Nesterov & Nemirovskii, 1993; Renegar, 2001), and modern first order methods that have been recently extended to handle conic programming problems of a very large size (Nesterov, 2005, 2007; Lan et al., 2011; Beck & Teboulle, 2009; Becker et al., 2010a). Before describing the details, it is useful to recall that a conic programming problem takes the form $\min_u c'u$ subject to Au = b and u ∈ C, where C is a cone. This formulation naturally nests classical linear programming as the case in which the cone is simply the non-negative orthant. Conic programming has a tractable dual form $\max_w b'w$ subject to w'A + s = c and $s \in C^*$, where $C^* := \{s : s'u \ge 0, \text{ for all } u \in C\}$ is the dual cone of C. A particularly important, highly tractable class of problems arises when C is the ice-cream cone, $C = Q^{n+1} = \{(v, t) \in \mathbb{R}^n\times\mathbb{R} : t \ge \|v\|\}$, which is self-dual, $C = C^*$.

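Square-root Lasso is precisely a conic programming problem with second-order conic constraints. Indeed, we can reformulate (1.8) as follows:

$$\min_{t,\,v,\,\beta^+,\,\beta^-}\ \frac{t}{\sqrt{n}} + \frac{\lambda}{n}\sum_{j=1}^{p}\left(\beta_j^+ + \beta_j^-\right) \quad\text{subject to}\quad \begin{array}{l} v_i = y_i - x_i'\beta^+ + x_i'\beta^-,\quad i = 1,\ldots,n,\\ (v,t)\in Q^{n+1},\quad \beta^+\in\mathbb{R}^p_+,\quad \beta^-\in\mathbb{R}^p_+. \end{array} \qquad (4.19)$$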
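To make the reformulation concrete, the sketch below lifts an arbitrary $\beta$ to a feasible point of (4.19) and checks that the two objective values coincide. It assumes that the objective in (1.8), which is stated outside this section, is $\sqrt{\hat Q(\beta)} + (\lambda/n)\|\beta\|_1$ with $\hat Q(\beta) = E_n[(y_i - x_i'\beta)^2]$; the function names and the toy data are ours.

```python
import math

def sqrt_lasso_objective(X, y, beta, lam):
    """Assumed form of (1.8): sqrt(Q(beta)) + (lam / n) * ||beta||_1."""
    n = len(y)
    resid = [y[i] - sum(xij * bj for xij, bj in zip(X[i], beta)) for i in range(n)]
    return math.sqrt(sum(r * r for r in resid) / n) + lam / n * sum(abs(b) for b in beta)

def lift_to_conic(X, y, beta):
    """Map beta to a feasible point (t, v, beta_plus, beta_minus) of the conic program."""
    beta_plus = [max(b, 0.0) for b in beta]      # positive parts
    beta_minus = [max(-b, 0.0) for b in beta]    # negative parts
    v = [y[i] - sum(X[i][j] * (beta_plus[j] - beta_minus[j]) for j in range(len(beta)))
         for i in range(len(y))]
    t = math.sqrt(sum(r * r for r in v))         # smallest t with (v, t) in the cone
    return t, v, beta_plus, beta_minus

def conic_objective(t, beta_plus, beta_minus, lam, n):
    """Objective of the conic program: t / sqrt(n) + (lam / n) * sum(beta_plus + beta_minus)."""
    return t / math.sqrt(n) + lam / n * (sum(beta_plus) + sum(beta_minus))

# Toy data, chosen only for illustration.
X = [[1.0, 0.5], [0.0, 1.0], [2.0, -1.0]]
y = [1.0, -1.0, 0.5]
beta = [0.3, -0.7]
lam = 2.0

t, v, beta_plus, beta_minus = lift_to_conic(X, y, beta)
primal_value = sqrt_lasso_objective(X, y, beta, lam)
conic_value = conic_objective(t, beta_plus, beta_minus, lam, len(y))
```

At an optimum, $t$ is pushed down to $\|v\|$ and at most one of $\beta_j^+$, $\beta_j^-$ is non-zero for each $j$, which is why the lifting above loses nothing.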

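Furthermore, we can show that this problem admits the following dual problem:

$$\max_{a\in\mathbb{R}^n}\ \frac{1}{n}\sum_{i=1}^{n} y_i a_i \quad\text{subject to}\quad \begin{array}{l} \left|\sum_{i=1}^{n} x_{ij}a_i/n\right| \le \lambda/n,\quad j = 1,\ldots,p,\\ \|a\| \le \sqrt{n}. \end{array} \qquad (4.20)$$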
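Weak duality between the two problems can be checked directly: any $a$ that satisfies the constraints in (4.20) gives a lower bound on the primal objective at every $\beta$. The sketch below is illustrative only. It again assumes the objective $\sqrt{\hat Q(\beta)} + (\lambda/n)\|\beta\|_1$ for (1.8), and the helper names, simulated data, and choice of $\lambda$ are ours.

```python
import math
import random

def primal_value(X, y, beta, lam):
    """Assumed square-root Lasso objective sqrt(Q(beta)) + (lam / n) * ||beta||_1."""
    n = len(y)
    resid = [y[i] - sum(xij * bj for xij, bj in zip(X[i], beta)) for i in range(n)]
    return math.sqrt(sum(r * r for r in resid) / n) + lam / n * sum(abs(b) for b in beta)

def dual_value(y, a):
    """Dual objective (1 / n) * sum_i y_i a_i."""
    return sum(yi * ai for yi, ai in zip(y, a)) / len(y)

def constraint_values(X, a):
    """Return (||a||, max_j |sum_i x_ij a_i / n|), the two quantities constrained in the dual."""
    n, p = len(X), len(X[0])
    norm_a = math.sqrt(sum(v * v for v in a))
    max_corr = max(abs(sum(X[i][j] * a[i] for i in range(n))) / n for j in range(p))
    return norm_a, max_corr

def make_dual_feasible(X, a, lam):
    """Rescale a so that ||a|| <= sqrt(n) and max_j |sum_i x_ij a_i / n| <= lam / n."""
    n = len(X)
    norm_a, max_corr = constraint_values(X, a)
    scale = min(math.sqrt(n) / norm_a if norm_a > 0 else math.inf,
                (lam / n) / max_corr if max_corr > 0 else math.inf)
    if math.isinf(scale):
        scale = 1.0
    return [scale * v for v in a]

rng = random.Random(1)
n, p, lam = 20, 5, 3.0
X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
y = [rng.gauss(0.0, 1.0) for _ in range(n)]

a = make_dual_feasible(X, y, lam)        # y itself is a natural direction to try
lower_bound = dual_value(y, a)

# The dual value never exceeds the primal value, whatever beta is.
gaps = []
for _ in range(200):
    beta = [rng.gauss(0.0, 1.0) for _ in range(p)]
    gaps.append(primal_value(X, y, beta, lam) - lower_bound)
```

The inequality follows from $\frac{1}{n}y'a = \frac{1}{n}(y - X\beta)'a + \frac{1}{n}\beta'X'a \le \|y - X\beta\|\,\|a\|/n + \|\beta\|_1\|X'a/n\|_\infty$, which is the argument behind the non-negative gaps.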

From a statistical perspective, this dual problem maximizes the sample correlation of the score variable $a_i$ with the outcome variables $y_i$ subject to the constraint that the score $a_i$ is approximately uncorrelated with the covariates $x_{ij}$. The optimal scores $\hat a_i$ are in fact equal to the residuals $y_i - x_i'\hat\beta$, for all $i = 1,\ldots,n$, up to a renormalization factor. The optimal score plays a key role in deriving sparsity bounds on $\hat\beta$.

We say that strong duality holds between a primal and its dual problem if their optimal values

are the same (i.e. no duality gap). This is typically an assumption for interior point methods and

first order methods to work. We formalize the preceding discussion in the following theorem.

THEOREM 2. The Square-root Lasso problem (1.8) is equivalent to the conic programming problem (4.19), which admits the dual problem (4.20), for which strong duality holds. The solution $\hat\beta$ to the problem (1.8), the solution $\hat\beta^+, \hat\beta^-, \hat v = (\hat v_1,\ldots,\hat v_n)$ to (4.19), and the solution $\hat a$ to (4.20) are related via $\hat\beta = \hat\beta^+ - \hat\beta^-$, $\hat v_i = y_i - x_i'\hat\beta$, $i = 1,\ldots,n$, and $\hat a = \sqrt{n}\,\hat v/\|\hat v\|$.

Recall that strong duality holds between a primal and its dual problem if their optimal values are the same, that is, there is no duality gap. The strong duality demonstrated in the theorem is in fact required for the use of both the interior point and first order methods adapted to conic programs. We have implemented these methods, as well as a coordinatewise method, for Square-root Lasso and made the code available through the authors' webpages. Interestingly, our implementations of Square-root Lasso run at least as fast as the corresponding implementations of these methods for Lasso, for instance, the Sdpt3 implementation of the interior point method (Toh et al., 1999, 2010) and the Tfocs implementation of first order methods (Becker et al., 2010b). The running times are recorded in an extended working version of this paper.
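The coordinatewise method is not described in this section, so the following is only a minimal sketch under our own assumptions, not the authors' code. It uses a closed-form coordinate update that we derived from the first-order condition of the objective in (1.8), taken here to be $\sqrt{\hat Q(\beta)} + (\lambda/n)\|\beta\|_1$. The names `sqrt_lasso_cd` and `duality_check`, the simulated data, the penalty choice, and the number of sweeps are all illustrative. After the sweeps, the code forms $\hat a = \sqrt{n}\,\hat v/\|\hat v\|$ as in Theorem 2 and compares primal and dual values.

```python
import math
import random
from statistics import NormalDist

def sqrt_lasso_cd(X, y, lam, sweeps=200):
    """Cyclic coordinate descent for ||y - X b|| / sqrt(n) + (lam / n) * ||b||_1."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    resid = list(y)                          # current residual y - X beta
    mu = lam / math.sqrt(n)                  # penalty after scaling the objective by sqrt(n)
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) for j in range(p)]
    for _ in range(sweeps):
        for j in range(p):
            # Partial residual with the contribution of coordinate j removed.
            part = [resid[i] + X[i][j] * beta[j] for i in range(n)]
            a = sum(X[i][j] * part[i] for i in range(n))
            A = sum(v * v for v in part)
            B = col_sq[j]
            if abs(a) <= mu * math.sqrt(A):
                new = 0.0                    # zero satisfies the subgradient condition
            else:
                # Stationarity of sqrt(A - 2ab + B b^2) + mu |b| in b, solved in closed form.
                u = mu * math.sqrt(max(A * B - a * a, 0.0) / (B - mu * mu))
                new = math.copysign(abs(a) - u, a) / B
            beta[j] = new
            resid = [part[i] - X[i][j] * new for i in range(n)]
    return beta

def duality_check(seed=0, n=50, p=10, sigma=0.5, alpha=0.05, c=1.1):
    """Fit on simulated data; return (primal value, dual value, max dual constraint, lam / n)."""
    rng = random.Random(seed)
    X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
    beta0 = [1.0, 1.0] + [0.0] * (p - 2)
    y = [sum(X[i][j] * beta0[j] for j in range(p)) + sigma * rng.gauss(0.0, 1.0)
         for i in range(n)]
    lam = c * math.sqrt(n) * NormalDist().inv_cdf(1.0 - alpha / (2.0 * p))
    beta = sqrt_lasso_cd(X, y, lam)
    v = [y[i] - sum(X[i][j] * beta[j] for j in range(p)) for i in range(n)]
    norm_v = math.sqrt(sum(r * r for r in v))
    a_hat = [math.sqrt(n) * r / norm_v for r in v]       # candidate dual solution
    primal = norm_v / math.sqrt(n) + lam / n * sum(abs(b) for b in beta)
    dual = sum(y[i] * a_hat[i] for i in range(n)) / n
    max_corr = max(abs(sum(X[i][j] * a_hat[i] for i in range(n))) / n for j in range(p))
    return primal, dual, max_corr, lam / n

primal, dual, max_corr, bound = duality_check()
```

If the iteration has converged, `primal` and `dual` should agree up to numerical error and `max_corr` should not exceed `bound`, which is what strong duality in Theorem 2 predicts. The update is only valid while the residual is non-zero, since the loss is not differentiable at a perfect fit.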

5. EMPIRICAL PERFORMANCE OF SQUARE-ROOT LASSO RELATIVE TO LASSO

In this section we use Monte Carlo experiments to assess the finite-sample performance of the following estimators: (a) the Infeasible Lasso, which uses knowledge of σ that is unavailable outside the experiments, (b) post Infeasible Lasso, which applies ordinary least squares to the model selected by Infeasible Lasso, (c) Square-root Lasso, which does not know σ, and (d) post-Square-root Lasso, which applies ordinary least squares to the model selected by Square-root Lasso.

We set the penalty level for Infeasible Lasso and Square-root Lasso according to the asymptotic options (1.6) and (1.9) respectively, with 1 − α = 0.95 and c = 1.1. We have also performed experiments where we set the penalty levels according to the exact option. The results

are similar to the case with the penalty level set according to the asymptotic option, so we only

report the results for the latter.
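For orientation, the penalty level used in these experiments can be computed as follows. This is a sketch under an assumption: option (1.9) is defined outside this section, and we take it to be $\lambda = c\sqrt{n}\,\Phi^{-1}(1 - \alpha/2p)$, which matches the threshold $c\sqrt{n}\,t_n$ with $t_n = \Phi^{-1}(1 - \alpha/2p)$ that appears in the proof of Lemma 1. The function name is ours.

```python
import math
from statistics import NormalDist

def asymptotic_penalty(n, p, alpha=0.05, c=1.1):
    """Return (lam, t_n) with lam = c * sqrt(n) * t_n and t_n = Phi^{-1}(1 - alpha / (2p)).

    The formula is assumed from the proofs; check it against (1.9) before relying on it.
    """
    t_n = NormalDist().inv_cdf(1.0 - alpha / (2.0 * p))
    return c * math.sqrt(n) * t_n, t_n

# Design used in the experiments: n = 100, p = 500, 1 - alpha = 0.95, c = 1.1.
lam, t_n = asymptotic_penalty(n=100, p=500)

# The proof of Lemma 1 uses log(p / alpha) < t_n^2 < 2 log(2p / alpha) when p / alpha > 8.
lower, upper = math.log(500 / 0.05), 2.0 * math.log(2 * 500 / 0.05)
```

Note that the penalty does not involve σ, which is the pivotal property the paper emphasizes.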

We use the linear regression model stated in the introduction as a data-generating process, with either standard normal or t(4) errors:

$$\text{(a) } \epsilon_i \sim N(0,1) \quad\text{or}\quad \text{(b) } \epsilon_i \sim t(4)/\sqrt{2},$$

so that $E[\epsilon_i^2] = 1$ in either case. We set the true parameter value as

$$\beta_0 = [1, 1, 1, 1, 1, 0, \ldots, 0]', \qquad (5.21)$$

and the standard deviation as σ. We vary the parameter σ between 0.25 and 3. The number of regressors is p = 500, the sample size is n = 100, and we used 1000 simulations for each design. We generate regressors as $x_i \sim N(0,\Sigma)$ with the Toeplitz correlation matrix $\Sigma_{jk} = (1/2)^{|j-k|}$. We use as a benchmark the performance of the oracle estimator, which knows the true support of $\beta_0$; this support is unknown outside the experiment.
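One draw from this data-generating process could be simulated as in the sketch below. It is not the authors' simulation code: the function names are ours, the Toeplitz design is produced through a stationary AR(1) recursion (one of several equivalent ways to obtain $\Sigma_{jk} = (1/2)^{|j-k|}$), and the t(4) variate is built from its definition as a normal divided by the root of a scaled chi-square.

```python
import math
import random

def draw_design(rng, n, p, rho=0.5):
    """Rows x_i ~ N(0, Sigma) with Sigma_jk = rho^|j-k|, generated as a stationary AR(1) path."""
    scale = math.sqrt(1.0 - rho * rho)
    X = []
    for _ in range(n):
        row = [rng.gauss(0.0, 1.0)]
        for _ in range(p - 1):
            row.append(rho * row[-1] + scale * rng.gauss(0.0, 1.0))
        X.append(row)
    return X

def draw_errors(rng, n, kind="normal"):
    """Unit-variance errors: N(0, 1) for kind='normal', otherwise t(4) / sqrt(2)."""
    if kind == "normal":
        return [rng.gauss(0.0, 1.0) for _ in range(n)]
    errors = []
    for _ in range(n):
        chi2 = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(4))   # chi-square, 4 d.f.
        t4 = rng.gauss(0.0, 1.0) / math.sqrt(chi2 / 4.0)
        errors.append(t4 / math.sqrt(2.0))                       # t(4) has variance 2
    return errors

def simulate(rng, n=100, p=500, sigma=1.0, kind="normal"):
    """Return (X, y, beta0) with y_i = x_i' beta0 + sigma * eps_i and beta0 as in (5.21)."""
    beta0 = [1.0] * 5 + [0.0] * (p - 5)
    X = draw_design(rng, n, p)
    eps = draw_errors(rng, n, kind)
    y = [sum(X[i][j] for j in range(5)) + sigma * eps[i] for i in range(n)]
    return X, y, beta0

X, y, beta0 = simulate(random.Random(0), n=100, p=500, sigma=1.0, kind="t4")
```

Repeating `simulate` 1000 times for each value of σ between 0.25 and 3 would reproduce the structure of the experiment, although the random number stream will differ from the authors'.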

[Figure 2 plot. Left panel: $\epsilon_i \sim N(0,1)$; right panel: $\epsilon_i \sim t(4)/\sqrt{2}$. Vertical axis: relative empirical risk (0 to 5); horizontal axis: σ (0 to 3). Series: Infeasible Lasso, Square-root Lasso, post Infeasible Lasso, post Square-root Lasso.]

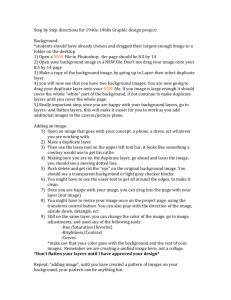

Fig. 2. The average relative empirical risk of the estimators with respect to the oracle estimator, that knows the true

support, as a function of the standard deviation of the noise σ.

Fig. 3. Let $T = \mathrm{supp}(\beta_0)$ denote the true unknown support and $\hat T = \mathrm{supp}(\hat\beta)$ denote the support selected by the square-root Lasso estimator or Infeasible Lasso. As a function of the noise level σ, we display the average number of regressors missed from the true support, $E|T \setminus \hat T|$, and the average number of regressors selected outside the true support, $E|\hat T \setminus T|$.

We present the results of computational experiments for designs (a) and (b) in Figs. 2 and 3. For each model, the figures show the following quantities as a function of the standard deviation of the noise σ for each estimator $\tilde\beta$:


• Figure 2: the relative average empirical risk with respect to the oracle estimator $\beta^*$,

$$E\left[\sqrt{E_n[(x_i'(\tilde\beta - \beta_0))^2]}\right] \Big/ E\left[\sqrt{E_n[(x_i'(\beta^* - \beta_0))^2]}\right],$$

• Figure 3: the average number of regressors missed from the true model and the average number of regressors selected outside the true model, respectively

$$E[|\mathrm{supp}(\beta_0) \setminus \mathrm{supp}(\tilde\beta)|] \quad\text{and}\quad E[|\mathrm{supp}(\tilde\beta) \setminus \mathrm{supp}(\beta_0)|].$$
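The two reported quantities can be computed per simulation draw and then averaged. The helpers below are a sketch with names of our choosing; the outer expectations $E[\cdot]$ are approximated by Monte Carlo averages over draws.

```python
import math

def prediction_norm(X, beta, beta0):
    """sqrt(E_n[(x_i'(beta - beta0))^2]): the empirical risk of one estimate."""
    n = len(X)
    diffs = [sum(X[i][j] * (beta[j] - beta0[j]) for j in range(len(beta0))) for i in range(n)]
    return math.sqrt(sum(d * d for d in diffs) / n)

def support(beta, tol=0.0):
    """Indices of the coefficients whose absolute value exceeds tol."""
    return {j for j, b in enumerate(beta) if abs(b) > tol}

def support_errors(beta, beta0):
    """Return (missed, extra): sizes of supp(beta0) minus supp(beta) and of the reverse difference."""
    s, s0 = support(beta), support(beta0)
    return len(s0 - s), len(s - s0)

def relative_risk(risks_estimator, risks_oracle):
    """Ratio of Monte Carlo averages, mirroring the quantity plotted in Figure 2."""
    mean_est = sum(risks_estimator) / len(risks_estimator)
    mean_oracle = sum(risks_oracle) / len(risks_oracle)
    return mean_est / mean_oracle

# Small illustration with made-up numbers.
example_norm = prediction_norm([[1.0, 0.0], [0.0, 1.0]], [1.0, 0.0], [0.0, 0.0])
example_errors = support_errors([0.0, 2.0, 3.0], [1.0, 1.0, 0.0])
example_ratio = relative_risk([2.0, 4.0], [1.0, 2.0])
```

Note that the Figure 2 quantity is a ratio of averages, not an average of per-draw ratios, which is why `relative_risk` takes the two lists separately.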

Figure 2, left panel, shows the empirical risk for the normal case. We see that, for a wide range of the standard deviation of the noise σ, Infeasible Lasso and Square-root Lasso perform similarly in terms of empirical risk, although Infeasible Lasso somewhat outperforms Square-root Lasso. At the same time, post-Square-root Lasso somewhat outperforms post Infeasible Lasso, and in fact outperforms all of the estimators considered. Overall, we are pleased with the performance of Square-root Lasso and post-Square-root Lasso relative to Lasso and post-Lasso. Despite not knowing σ, Square-root Lasso performs comparably to the standard Lasso that knows σ. These results are in close agreement with our theoretical results, which state that the upper bounds on empirical risk for Square-root Lasso asymptotically approach the analogous bounds for Infeasible Lasso.

Figure 3 provides additional insight into the performance of the estimators. The finite-sample differences in empirical risk between Infeasible Lasso and Square-root Lasso arise primarily because Square-root Lasso has a larger bias than Infeasible Lasso. This bias arises because Square-root Lasso uses an effectively heavier penalty, induced by $\hat Q(\hat\beta)$ in place of $\sigma^2$. In these experiments, as we changed the standard deviation of the noise, the average values of $\sqrt{\hat Q(\hat\beta)}/\sigma$ were between 1.18 and 1.22. Figure 3 shows that this heavier penalty translates into Square-root Lasso achieving better sparsity than Infeasible Lasso. This in turn translates into post-Square-root Lasso outperforming post Infeasible Lasso in terms of empirical risk, as we saw in Fig. 2.

Finally, Figure 2, right panel, shows the empirical risk for the t(4) case. We see that the results

for the Gaussian case carry over to the t(4) case, with nearly undetectable changes. In fact, the

performance of Infeasible Lasso and Square-root Lasso under t(4) errors nearly coincides with

their performance under Gaussian errors. This is exactly what is predicted by our theoretical

results, using moderate deviation theory.

ACKNOWLEDGEMENT

We would like to thank Isaiah Andrews, Denis Chetverikov, JB Doyle, Ilze Kalnina, and James

MacKinnon for very helpful comments.

APPENDIX 1

Proofs of Theorems 1 and 2

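Proof of Theorem 1. Step 1. In this step we show that $\hat\delta = \hat\beta - \beta_0 \in \Delta_{\bar c}$ under the prescribed penalty level. By definition of $\hat\beta$,

$$\sqrt{\hat Q(\hat\beta)} - \sqrt{\hat Q(\beta_0)} \le \frac{\lambda}{n}\|\beta_0\|_1 - \frac{\lambda}{n}\|\hat\beta\|_1 \le \frac{\lambda}{n}\left(\|\hat\delta_T\|_1 - \|\hat\delta_{T^c}\|_1\right), \qquad (A1)$$

where the last inequality holds because

$$\|\beta_0\|_1 - \|\hat\beta\|_1 = \|\beta_{0T}\|_1 - \|\hat\beta_T\|_1 - \|\hat\beta_{T^c}\|_1 \le \|\hat\delta_T\|_1 - \|\hat\delta_{T^c}\|_1. \qquad (A2)$$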


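Also, if $\lambda \ge cn\|\tilde S\|_\infty$ then

$$\sqrt{\hat Q(\hat\beta)} - \sqrt{\hat Q(\beta_0)} \ge \tilde S'\hat\delta \ge -\|\tilde S\|_\infty\|\hat\delta\|_1 \ge -\frac{\lambda}{cn}\left(\|\hat\delta_T\|_1 + \|\hat\delta_{T^c}\|_1\right), \qquad (A3)$$

where the first inequality holds by convexity of $\sqrt{\hat Q}$. Combining (A1) with (A3) we obtain

$$-\frac{\lambda}{cn}\left(\|\hat\delta_T\|_1 + \|\hat\delta_{T^c}\|_1\right) \le \frac{\lambda}{n}\left(\|\hat\delta_T\|_1 - \|\hat\delta_{T^c}\|_1\right), \qquad (A4)$$

that is

$$\|\hat\delta_{T^c}\|_1 \le \frac{c+1}{c-1}\cdot\|\hat\delta_T\|_1 = \bar c\,\|\hat\delta_T\|_1, \quad\text{or}\quad \hat\delta \in \Delta_{\bar c}. \qquad (A5)$$

Step 2. In this step we derive bounds on the estimation error. We shall use the following relations: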

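$$\hat Q(\hat\beta) - \hat Q(\beta_0) = \|\hat\delta\|_{2,n}^2 - 2E_n[\sigma\epsilon x'\hat\delta], \qquad (A6)$$

$$\hat Q(\hat\beta) - \hat Q(\beta_0) = \left(\sqrt{\hat Q(\hat\beta)} + \sqrt{\hat Q(\beta_0)}\right)\left(\sqrt{\hat Q(\hat\beta)} - \sqrt{\hat Q(\beta_0)}\right), \qquad (A7)$$

$$2|E_n[\sigma\epsilon x'\hat\delta]| \le 2\sqrt{\hat Q(\beta_0)}\,\|\tilde S\|_\infty\|\hat\delta\|_1, \qquad (A8)$$

$$\|\hat\delta_T\|_1 \le \frac{\sqrt{s}\,\|\hat\delta\|_{2,n}}{\kappa_{\bar c}} \quad\text{for } \hat\delta \in \Delta_{\bar c}, \qquad (A9)$$

where (A8) holds by Hölder's inequality and the last inequality holds by the definition of $\kappa_{\bar c}$.

Using (A1) and (A6)-(A9) we obtain

$$\|\hat\delta\|_{2,n}^2 \le 2\sqrt{\hat Q(\beta_0)}\,\|\tilde S\|_\infty\|\hat\delta\|_1 + \left(\sqrt{\hat Q(\hat\beta)} + \sqrt{\hat Q(\beta_0)}\right)\frac{\lambda}{n}\left(\frac{\sqrt{s}\,\|\hat\delta\|_{2,n}}{\kappa_{\bar c}} - \|\hat\delta_{T^c}\|_1\right). \qquad (A10)$$

Also using (A1) and (A9) we obtain

$$\sqrt{\hat Q(\hat\beta)} \le \sqrt{\hat Q(\beta_0)} + \frac{\lambda}{n}\left(\frac{\sqrt{s}\,\|\hat\delta\|_{2,n}}{\kappa_{\bar c}} - \|\hat\delta_{T^c}\|_1\right). \qquad (A11)$$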

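Combining inequalities (A11) and (A10), we obtain

$$\|\hat\delta\|_{2,n}^2 \le 2\sqrt{\hat Q(\beta_0)}\,\|\tilde S\|_\infty\|\hat\delta\|_1 + 2\sqrt{\hat Q(\beta_0)}\,\frac{\lambda\sqrt{s}}{n\kappa_{\bar c}}\|\hat\delta\|_{2,n} + \left(\frac{\lambda\sqrt{s}}{n\kappa_{\bar c}}\|\hat\delta\|_{2,n}\right)^2 - 2\sqrt{\hat Q(\beta_0)}\,\frac{\lambda}{n}\|\hat\delta_{T^c}\|_1.$$

Since $\lambda \ge cn\|\tilde S\|_\infty$, we obtain

$$\|\hat\delta\|_{2,n}^2 \le 2\sqrt{\hat Q(\beta_0)}\,\|\tilde S\|_\infty\|\hat\delta_T\|_1 + 2\sqrt{\hat Q(\beta_0)}\,\frac{\lambda\sqrt{s}}{n\kappa_{\bar c}}\|\hat\delta\|_{2,n} + \left(\frac{\lambda\sqrt{s}}{n\kappa_{\bar c}}\|\hat\delta\|_{2,n}\right)^2,$$

and then using (A9) we obtain

$$\left[1 - \left(\frac{\lambda\sqrt{s}}{n\kappa_{\bar c}}\right)^2\right]\|\hat\delta\|_{2,n}^2 \le 2\left(\frac{1}{c} + 1\right)\sqrt{\hat Q(\beta_0)}\,\frac{\lambda\sqrt{s}}{n\kappa_{\bar c}}\|\hat\delta\|_{2,n}.$$

Provided that $\lambda\sqrt{s}/(n\kappa_{\bar c}) \le \rho < 1$ and solving the inequality above we obtain the bound stated in the theorem. □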
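The final step, solving the last inequality, can be spelled out as follows. This is a sketch of the algebra only: the shorthand $x$ is ours, and the last display is presumably the form of the bound in Theorem 1, whose statement lies outside this section.

```latex
\[
x := \frac{\lambda\sqrt{s}}{n\kappa_{\bar c}} \le \rho < 1
\quad\Longrightarrow\quad 1 - x^2 \ge 1 - \rho^2 > 0 .
\]
If $\|\hat\delta\|_{2,n} = 0$ there is nothing to prove. Otherwise, divide
\[
(1 - x^2)\,\|\hat\delta\|_{2,n}^2
\le 2\Bigl(\tfrac{1}{c} + 1\Bigr)\sqrt{\hat Q(\beta_0)}\; x\,\|\hat\delta\|_{2,n}
\]
by $(1 - x^2)\,\|\hat\delta\|_{2,n} > 0$ to get
\[
\|\hat\delta\|_{2,n}
\le \frac{2(1 + 1/c)}{1 - x^2}\sqrt{\hat Q(\beta_0)}\; x
\le \frac{2(1 + 1/c)}{1 - \rho^2}\sqrt{\hat Q(\beta_0)}\,
    \frac{\lambda\sqrt{s}}{n\kappa_{\bar c}} .
\]
```

The second inequality uses only that $x \mapsto 1/(1 - x^2)$ is increasing on $[0, 1)$.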

Proof of Theorem 2. The equivalence of Square-root Lasso problem (1.8) and the conic programming problem (4.19) follows immediately from the definitions. To establish the duality, for $e = (1,\ldots,1)'$, we can write (4.19) in the matrix form as

$$\min_{t,\,v,\,\beta^+,\,\beta^-}\ \frac{t}{\sqrt{n}} + \frac{\lambda}{n}e'\beta^+ + \frac{\lambda}{n}e'\beta^- \quad\text{subject to}\quad \begin{array}{l} v + X\beta^+ - X\beta^- = Y,\\ (v,t)\in Q^{n+1},\quad \beta^+\in\mathbb{R}^p_+,\quad \beta^-\in\mathbb{R}^p_+. \end{array}$$


By the conic duality theorem, this has the dual

$$\max_{a,\,s^t,\,s^v,\,s^+,\,s^-}\ Y'a \quad\text{subject to}\quad \begin{array}{l} s^t = 1/\sqrt{n},\quad a + s^v = 0,\\ X'a + s^+ = \lambda e/n,\quad -X'a + s^- = \lambda e/n,\\ (s^v, s^t)\in Q^{n+1},\quad s^+\in\mathbb{R}^p_+,\quad s^-\in\mathbb{R}^p_+. \end{array}$$

The constraints $X'a + s^+ = \lambda e/n$ and $-X'a + s^- = \lambda e/n$ lead to $\|X'a\|_\infty \le \lambda/n$. The conic constraint $(s^v, s^t) \in Q^{n+1}$ leads to $1/\sqrt{n} = s^t \ge \|s^v\| = \|a\|$. By scaling the variable $a$ by $n$ we obtain the stated dual problem.

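Since the primal problem is strongly feasible, strong duality holds by Theorem 3.2.6 of Renegar (2001). Thus, by strong duality, we have $\frac{1}{n}\sum_{i=1}^n y_i\hat a_i = \frac{\|Y - X\hat\beta\|}{\sqrt{n}} + \frac{\lambda}{n}\sum_{j=1}^p |\hat\beta_j|$. Since $\frac{1}{n}\sum_{i=1}^n x_{ij}\hat a_i\hat\beta_j = \lambda|\hat\beta_j|/n$ for every $j = 1,\ldots,p$, we have

$$\frac{1}{n}\sum_{i=1}^n y_i\hat a_i = \frac{\|Y - X\hat\beta\|}{\sqrt{n}} + \sum_{j=1}^p \frac{1}{n}\sum_{i=1}^n x_{ij}\hat a_i\hat\beta_j = \frac{\|Y - X\hat\beta\|}{\sqrt{n}} + \frac{1}{n}\sum_{i=1}^n \hat a_i\sum_{j=1}^p x_{ij}\hat\beta_j.$$

Rearranging the terms we have $\frac{1}{n}\sum_{i=1}^n\left[(y_i - x_i'\hat\beta)\hat a_i\right] = \|Y - X\hat\beta\|/\sqrt{n}$. Since $\|\hat a\| \le \sqrt{n}$, the equality can only hold for $\hat a = \sqrt{n}(Y - X\hat\beta)/\|Y - X\hat\beta\| = (Y - X\hat\beta)/\sqrt{\hat Q(\hat\beta)}$. □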

APPENDIX 2

Proofs of Lemmas 1 and 2

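Proof of Lemma 1. Statement (i) holds by definition. To show statement (ii), we define $t_n = \Phi^{-1}(1 - \alpha/2p)$ and $0 < r_n = \ell\sqrt{\log(1/\alpha)/n} < 1$. It is known that $\log(p/\alpha) < t_n^2 < 2\log(2p/\alpha)$ when $p/\alpha > 8$. Then

$$\begin{aligned} \mathrm{pr}(c\Lambda > c\sqrt{n}\,t_n \mid X, F_0 = \Phi) &\le p\max_{1\le j\le p}\mathrm{pr}(|n^{1/2}E_n[x_j\epsilon]| > t_n(1 - r_n) \mid F_0 = \Phi) + \mathrm{pr}\left(\sqrt{E_n[\epsilon^2]} < 1 - r_n \mid F_0 = \Phi\right)\\ &\le 2p\,\bar\Phi(t_n(1 - r_n)) + \mathrm{pr}\left(\sqrt{E_n[\epsilon^2]} < 1 - r_n \mid F_0 = \Phi\right). \end{aligned}$$

By Chernoff tail bound for $\chi^2(n)$, Lemma 1 in Laurent & Massart (2000), we know that

$$\mathrm{pr}\left(\sqrt{E_n[\epsilon^2]} < 1 - r_n \mid F_0 = \Phi\right) \le \exp(-nr_n^2/4),$$

and

$$\begin{aligned} 2p\,\bar\Phi(t_n(1 - r_n)) &\le 2p\,\frac{\phi(t_n(1 - r_n))}{t_n(1 - r_n)} = 2p\,\frac{\phi(t_n)}{t_n}\cdot\frac{\exp(t_n^2 r_n - \frac{1}{2}t_n^2 r_n^2)}{1 - r_n}\\ &\le 2p\,\bar\Phi(t_n)\,\frac{1 + t_n^2}{t_n^2}\cdot\frac{\exp(t_n^2 r_n - \frac{1}{2}t_n^2 r_n^2)}{1 - r_n} \le \alpha\left(1 + \frac{1}{t_n^2}\right)\frac{\exp(t_n^2 r_n)}{1 - r_n}\\ &\le \alpha\left(1 + \frac{1}{\log(p/\alpha)}\right)\frac{\exp(2\log(2p/\alpha)\,r_n)}{1 - r_n}. \end{aligned}$$

For statement (iii), it is sufficient to show that $\mathrm{pr}(c\Lambda > cv\sqrt{n}\,t_n \mid X, F_0 = \Phi) \le \alpha$. It can be seen that there exists a $v'$ such that $v' > \sqrt{1 + 2/\log(2p/\alpha)}$ and $1 - v'/v > 2\sqrt{\log(2/\alpha)/n}$ so that

$$\begin{aligned} \mathrm{pr}(c\Lambda > cv\sqrt{n}\,t_n \mid X, F_0 = \Phi) &\le p\max_{1\le j\le p}\mathrm{pr}(|n^{1/2}E_n[x_j\epsilon]| > v't_n \mid F_0 = \Phi) + \mathrm{pr}\left(\sqrt{E_n[\epsilon^2]} < v'/v \mid F_0 = \Phi\right)\\ &= 2p\,\bar\Phi(v't_n) + \mathrm{pr}\left(\sqrt{E_n[\epsilon^2]} < v'/v \mid F_0 = \Phi\right). \end{aligned}$$

Similarly, by Chernoff tail bound for $\chi^2(n)$, Lemma 1 in Laurent & Massart (2000),

$$\mathrm{pr}\left(\sqrt{E_n[\epsilon^2]} < v'/v \mid F_0 = \Phi\right) \le \exp(-n(1 - v'/v)^2/4) \le \alpha/2.$$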


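And

$$\begin{aligned} 2p\,\bar\Phi(v't_n) &\le 2p\,\frac{\phi(v't_n)}{v't_n} = 2p\,\frac{\phi(t_n)}{t_n}\cdot\frac{\exp(-\frac{1}{2}t_n^2(v'^2 - 1))}{v'}\\ &\le 2p\,\bar\Phi(t_n)\left(1 + \frac{1}{t_n^2}\right)\cdot\frac{\exp(-\frac{1}{2}t_n^2(v'^2 - 1))}{v'} = \alpha\left(1 + \frac{1}{t_n^2}\right)\frac{\exp(-\frac{1}{2}t_n^2(v'^2 - 1))}{v'}\\ &\le \alpha\left(1 + \frac{1}{\log(p/\alpha)}\right)\frac{\exp(-\log(2p/\alpha)(v'^2 - 1))}{v'} \le 2\alpha\exp(-\log(2p/\alpha)(v'^2 - 1)) < \alpha/2. \end{aligned}$$

Putting them together, we know that $\mathrm{pr}(c\Lambda > cv\sqrt{n}\,t_n \mid X, F_0 = \Phi) \le \alpha$.

Finally, the asymptotic result follows directly from the finite sample bounds and noting that $p/\alpha \to \infty$ and that under the growth condition we can choose $\ell \to \infty$ so that $\ell\log(p/\alpha)\sqrt{\log(1/\alpha)} = o(\sqrt{n})$. □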
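The proof of Lemma 1 relies twice on the standard Gaussian tail bounds $\bar\Phi(t) \le \phi(t)/t \le \bar\Phi(t)(1 + t^2)/t^2$ for $t > 0$. The sketch below simply checks these inequalities numerically at a few points; the function names and the grid of $t$ values are ours.

```python
import math

def phi(t):
    """Standard normal density."""
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)

def upper_tail(t):
    """Phi-bar(t) = 1 - Phi(t), computed via erfc for accuracy in the far tail."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))

def mills_bounds_hold(t):
    """Check Phi-bar(t) <= phi(t) / t <= Phi-bar(t) * (1 + t^2) / t^2 for t > 0."""
    ratio = phi(t) / t
    tail = upper_tail(t)
    return tail <= ratio <= tail * (1.0 + t * t) / (t * t)

# 3.89 is close to t_n for the design p = 500, alpha = 0.05 used in Section 5.
checks = {t: mills_bounds_hold(t) for t in (0.5, 1.0, 2.0, 3.89, 6.0)}
```

The two bounds become tight as $t$ grows, which is the relation $\phi(t)/t \sim \bar\Phi(t)$ invoked in the proof of Lemma 2.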

Proof of Lemma 2. Statements (i) and (ii) hold by definition. To show statement (iii), consider first the case of $2 < q \le 8$, and define $t_n = \Phi^{-1}(1 - \alpha/2p)$ and $r_n = \alpha^{-2/q}\,n^{-[(1 - 2/q)\wedge 1/2]}\,\ell_n$, for some $\ell_n$ which grows to infinity but so slowly that the condition stated below is satisfied. Then for any $F = F_n$ and $X = X_n$ that obey Condition M:

$$\begin{aligned} \mathrm{pr}(c\Lambda > c\sqrt{n}\,t_n \mid X, F_0 = F) &\le_{(1)} p\max_{1\le j\le p}\mathrm{pr}(|n^{1/2}E_n[x_j\epsilon]| > t_n(1 - r_n) \mid F_0 = F) + \mathrm{pr}\left(\sqrt{E_n[\epsilon^2]} < 1 - r_n \mid F_0 = F\right)\\ &\le_{(2)} p\max_{1\le j\le p}\mathrm{pr}(|n^{1/2}E_n[x_j\epsilon]| > t_n(1 - r_n) \mid F_0 = F) + o(\alpha)\\ &=_{(3)} 2p\,\bar\Phi(t_n(1 - r_n))(1 + o(1)) + o(\alpha)\\ &=_{(4)} 2p\,\frac{\phi(t_n(1 - r_n))}{t_n(1 - r_n)}(1 + o(1)) + o(\alpha)\\ &= 2p\,\frac{\phi(t_n)}{t_n}\,\frac{\exp(t_n^2 r_n - t_n^2 r_n^2/2)}{1 - r_n}(1 + o(1)) + o(\alpha)\\ &=_{(5)} 2p\,\frac{\phi(t_n)}{t_n}(1 + o(1)) + o(\alpha) =_{(6)} 2p\,\bar\Phi(t_n)(1 + o(1)) + o(\alpha) = \alpha(1 + o(1)), \end{aligned}$$

where (1) holds by the union bound; (2) holds by the application of either Rosenthal's inequality (Rosenthal, 1970) for the case of $q > 4$ or the von Bahr–Esseen inequalities (von Bahr & Esseen, 1965) for the case of $2 < q \le 4$,

$$\mathrm{pr}\left(\sqrt{E_n[\epsilon^2]} < 1 - r_n \mid F_0 = F\right) \le \mathrm{pr}(|E_n[\epsilon^2] - 1| > r_n \mid F_0 = F) \lesssim \alpha\,\ell_n^{-q/2} = o(\alpha), \qquad (A12)$$

(4) and (6) by $\phi(t)/t \sim \bar\Phi(t)$ as $t \to \infty$; (5) by $t_n^2 r_n = o(1)$, which holds if $\log(p/\alpha)\,\alpha^{-2/q}\,n^{-[(1 - 2/q)\wedge 1/2]}\,\ell_n = o(1)$. Under our condition $\log(p/\alpha) = O(\log n)$, this condition is satisfied for some slowly growing $\ell_n$, if

$$\alpha^{-1} = o\left(n^{(q/2 - 1)\wedge q/4}/(\log n)^{q/2}\right). \qquad (A13)$$

To verify relation (3), note that by Condition M and Slastnikov's theorem on moderate deviations, Slastnikov (1982) and Rubin & Sethuraman (1965), we have that uniformly in $0 \le |t| \le k\sqrt{\log n}$ for some $k^2 < q - 2$, uniformly in $1 \le j \le p$ and for any $F = F_n \in \mathcal{F}$,

$$\frac{\mathrm{pr}(n^{1/2}|E_n x_j\epsilon| > t \mid F_0 = F)}{2\bar\Phi(t)} \to 1,$$

so the relation (3) holds for $t = t_n(1 - r_n) \le \sqrt{2\log(2p/\alpha)} \le \sqrt{\eta(q - 2)\log n}$ for $\eta < 1$ by assumption. We apply Slastnikov's theorem to $n^{-1/2}|\sum_{i=1}^n z_{i,n}|$ for $z_{i,n} = x_{ij}\epsilon_i$, where we allow the design $X$, the law $F$, and index $j$ to be (implicitly) indexed by $n$. Slastnikov's theorem then applies provided $\sup_{n,\,j\le p} E_n E_{F_n}|z_n|^q = \sup_{n,\,j\le p} E_n|x_j|^q\,E_{F_n}|\epsilon|^q < \infty$, which is implied by our Condition M, and where we used the condition that the design is fixed, so that $\epsilon_i$ are independent of $x_{ij}$. Thus, we obtained the moderate deviation result uniformly in $1 \le j \le p$ and for any sequence of distributions $F = F_n$ and designs $X = X_n$ that obey our Condition M.

Next suppose that $q > 8$. Then the same argument applies, except that now relation (2) could also be established by using Slastnikov's theorem on moderate deviations. In this case redefine $r_n = k\sqrt{\log n/n}$; then, for some constant $k^2 < (q/2) - 2$ we have

$$\mathrm{pr}\left(\sqrt{E_n[\epsilon^2]} < 1 - r_n \mid F_0 = F\right) \le \mathrm{pr}(|E_n[\epsilon^2] - 1| > r_n \mid F_0 = F) \lesssim n^{-k^2}, \qquad (A14)$$

so the relation (2) holds if

$$1/\alpha = o(n^{k^2}). \qquad (A15)$$

This applies whenever $q > 4$, and this results in weaker requirements on $\alpha$ if $q > 8$. The relation (5) then follows if $t_n^2 r_n = o(1)$, which is easily satisfied for the new $r_n$, and the result follows.

Combining conditions in (A13) and (A15) to give the weakest restrictions on the growth of $\alpha^{-1}$, we obtain the growth conditions stated in the lemma.

To show statement (iv) of the lemma, we note that it suffices to show that for any $\nu' > 1$, $\mathrm{pr}(c\Lambda > c\nu'\sqrt{n}\,t_n \mid X, F_0 = F) = o(\alpha)$, which follows analogously to the proof of statement (iii); we omit the details for brevity. □

REFERENCES

BECK, A. & TEBOULLE, M. (2009). A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM Journal on Imaging Sciences 2, 183–202.

BECKER, S., CANDÈS, E. & GRANT, M. (2010a). Templates for convex cone problems with applications to sparse signal recovery. ArXiv.

BECKER, S., CANDÈS, E. & GRANT, M. (2010b). Tfocs v1.0 user guide (beta).

BELLONI, A. & CHERNOZHUKOV, V. (2010). ℓ1-penalized quantile regression for high dimensional sparse models. Accepted at the Annals of Statistics.

BICKEL, P. J., RITOV, Y. & TSYBAKOV, A. B. (2009). Simultaneous analysis of Lasso and Dantzig selector. Annals of Statistics 37, 1705–1732.

BUNEA, F., TSYBAKOV, A. B. & WEGKAMP, M. H. (2007). Aggregation for Gaussian regression. The Annals of Statistics 35, 1674–1697.

CANDÈS, E. & TAO, T. (2007). The Dantzig selector: statistical estimation when p is much larger than n. Ann. Statist. 35, 2313–2351.

HUANG, J., HOROWITZ, J. L. & MA, S. (2008). Asymptotic properties of bridge estimators in sparse high-dimensional regression models. The Annals of Statistics 36, 587–613.

LAN, G., LU, Z. & MONTEIRO, R. D. C. (2011). Primal-dual first-order methods with O(1/ε) iteration-complexity for cone programming. Mathematical Programming, 1–29.

LAURENT, B. & MASSART, P. (2000). Adaptive estimation of a quadratic functional by model selection. Ann. Statist. 28, 1302–1338.

LOUNICI, K., PONTIL, M., TSYBAKOV, A. B. & VAN DE GEER, S. (2010). Taking advantage of sparsity in multi-task learning. arXiv:0903.1468v1 [stat.ML].

MEINSHAUSEN, N. & YU, B. (2009). Lasso-type recovery of sparse representations for high-dimensional data. Annals of Statistics 37, 2246–2270.

NESTEROV, Y. (2005). Smooth minimization of non-smooth functions. Mathematical Programming 103, 127–152.

NESTEROV, Y. (2007). Dual extrapolation and its applications to solving variational inequalities and related problems. Mathematical Programming 109, 319–344.

NESTEROV, Y. & NEMIROVSKII, A. (1993). Interior-Point Polynomial Algorithms in Convex Programming. Philadelphia: Society for Industrial and Applied Mathematics (SIAM).

RENEGAR, J. (2001). A Mathematical View of Interior-Point Methods in Convex Optimization. Philadelphia: Society for Industrial and Applied Mathematics (SIAM).

ROSENTHAL, H. P. (1970). On the subspaces of Lp (p > 2) spanned by sequences of independent random variables. Israel J. Math. 8, 273–303.

RUBIN, H. & SETHURAMAN, J. (1965). Probabilities of moderate deviations. Sankhyā Ser. A 27, 325–346.

SLASTNIKOV, A. D. (1982). Large deviations for sums of nonidentically distributed random variables. Teor. Veroyatnost. i Primenen. 27, 36–46.

TIBSHIRANI, R. (1996). Regression shrinkage and selection via the lasso. J. Roy. Statist. Soc. Ser. B 58, 267–288.


TOH, K. C., TODD, M. J. & TUTUNCU, R. H. (1999). Sdpt3 — a Matlab software package for semidefinite programming. Optimization Methods and Software 11, 545–581.

TOH, K. C., TODD, M. J. & TUTUNCU, R. H. (2010). On the implementation and usage of Sdpt3 — a Matlab software package for semidefinite-quadratic-linear programming, version 4.0. In Handbook of semidefinite, cone and polynomial optimization: theory, algorithms, software and applications, edited by M. Anjos and J. B. Lasserre.

VAN DE GEER, S. A. (2008). High-dimensional generalized linear models and the lasso. Annals of Statistics 36, 614–645.

VON BAHR, B. & ESSEEN, C.-G. (1965). Inequalities for the rth absolute moment of a sum of random variables, 1 ≤ r ≤ 2. Ann. Math. Statist. 36, 299–303.

WAINWRIGHT, M. (2009). Sharp thresholds for noisy and high-dimensional recovery of sparsity using ℓ1-constrained quadratic programming (lasso). IEEE Transactions on Information Theory 55, 2183–2202.

ZHANG, C.-H. & HUANG, J. (2008). The sparsity and bias of the lasso selection in high-dimensional linear regression. Ann. Statist. 36, 1567–1594.

ZHANG, T. (2009). On the consistency of feature selection using greedy least squares regression. Journal of Machine Learning Research 10, 555–568.

ZHAO, P. & YU, B. (2006). On model selection consistency of lasso. J. Machine Learning Research 7, 2541–2567.

[Received September 2010. Revised January 2011]