TR_twocol

advertisement

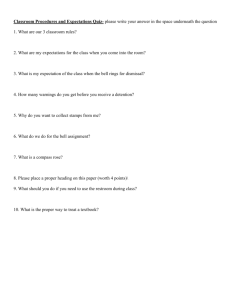

Setting the Record Straight on Quantum Entanglement

Caroline H Thompson*

(Started March 16, 2004)

Abstract

All too often, in serious as well as popular articles, we see statements to the effect that “quantum entanglement” of separated particles is an experimentally established fact and the world cannot be explained using the methods of “local realism”. The experts, however, know full well that there are “loopholes” in the experiments concerned, the “Bell tests”. What they do not appear to know is just how serious the loopholes are, how readily they allow for alternative “local realist” explanations for the actual observations, or how to conduct valid tests for their presence. I set out, with the aid of an intuitive model, to explain how the “fair sampling” loophole works and introduce briefly some other less well known ones. Early attempts at exploiting loopholes in order to produce “realist” explanations for the observations were mostly in fact unrealistic, created in ignorance of certain important experimental facts. Prestigious journals have been driven in desperation to reject all papers on the Bell test loopholes as being of no interest to physics. The situation has now changed, and ought to be of interest to physics, since new realist work has two vital consequences: it restores the principle of local causality, necessary for the rational conduct of science, and redeems the wave model of light, which has been quite unnecessarily replaced by the “photon” model. There is also a practical consideration: further development of applications that are currently claimed as involving quantum entanglement will be easier if it is admitted that they do not do so, any “success” they achieve being in fact due to ordinary correlations: shared values set at the source.
1: INTRODUCTION

Zeilinger and Greenberger, in their widely publicised “Petition to the American Physical Society” of April 10, 2002¹, typify the attitude of the establishment towards the supposed fact of “quantum entanglement”. They not only take it for granted that it really happens but proceed to build on the idea, implying that it is an essential feature of phenomena as diverse as quantum computing, neutron interferometry and Bose-Einstein Condensates. Yet they, as experts in the field, know that there is no firm evidence, no actual experimental result to which they can point and say: “Here we really do see entanglement.” They know that their interpretation of the experimental evidence (the observed infringements of “Bell inequalities”) rests on assumptions that they consider “plausible” but which cannot be justified scientifically. They believe that, in view of the supposed universal success of quantum mechanics (QM) in other areas, some day a perfect, “loophole-free”, experiment will be conducted, and this will prove once and for all that you cannot model separated “entangled particles” realistically: you cannot assign separate real properties (“hidden variables”) to each. It seems clear to me that they have not fully understood just how readily their assumptions can fail, or what an important role they play in the experiments.

Fig. 1: Anne, Bob and the Chaotic Ball. The letter S is visible while N, opposite to it, is out of sight. a and b are the directions in which the assistants are viewing the ball; φ is the angle between them.

The main purpose of the present paper is to make available to all the means to understand exactly what is involved in the best-known loophole, variously known as the “fair sampling”, “efficiency” or “variable detection probability” one.
I do this by means of an analogy that is, like the loophole itself, very straightforward, demanding no knowledge of QM, no mathematics, just geometry and some common sense facts about how probabilities work. Before introducing my “Chaotic Ball” model (see fig. 1), though, I feel it necessary to say something about the effects that belief in QM and the supposed impossibility of local realist models have had on a section of the scientific community.

* Email: ch.thompson1@virgin.net; Web site: http://freespace.virgin.net/ch.thompson1/

D:\106742796.doc, 15/02/2016

1.1: The consequences of belief in “entanglement”

Let me make it quite clear from the outset: I do not regard entanglement of separated particles as physically possible. I class it as one of the indications that QM is not a fully logical theory². Where I appear to cast the blame for the present sorry state of affairs on a few individuals, therefore, this is not really so. I recognise that illogical decisions are the inevitable result of trying to conduct science within an illogical framework.

Ever since Alain Aspect’s experiments of 1981-2³, people who have believed what the scientific press has told them (that we now have experimental evidence for quantum entanglement) have searched in desperation for a means to reconcile “violation of the Bell inequality” with local realism. Everyone, experts included, would like to save local realism if they can. The theorists’ approach is varied. Zeilinger, for instance, says that nothing mysterious really happens in his experiments, as it is just a matter of the natural behaviour of conditional probabilities: the effect of change of information⁴. Some argue that Bell’s logic was wrong, that he should, for example, have used Bayesian instead of traditional statistical methods⁵. Many are confused by inappropriate notation⁶.
Others argue that some kind of faster-than-light signals must be being transferred between the two sides of the experiments, though quite how these could produce the observations has never been spelled out⁷. Yet others suggest a real link between the two sides, analogous to a connecting pipe⁸.

One would naturally assume that “experts”, at least, have access to the full facts, but the writers of popular accounts certainly do not, which makes the task of the intelligent amateur unfairly difficult. How are they to make a rational assessment of the situation when they have been misinformed about which test has been infringed (note that Bell’s original versions have never been used), or are assured that Aspect, for instance, produced coincidence curves that covered almost the full range from 0.0 to 1.0 (they covered this range only after both subtraction of accidentals and “normalisation”)? Regarding local realist explanations, most accounts suggest just the one possibility: the basic model covering Bohm’s thought-experiment and resulting in a straight line prediction. They are not told of the curve, remarkably similar to the QM prediction, that comes from the standard local realist assumptions for the actual experiments.

The attempt to live with entanglement, combined with belief in the photon as an indivisible particle, appears also to have had grave consequences on scientific method. The QM model for the Bell test experiments does not have (and, indeed, cannot have without drastic change) enough parameters. The result is that the experimenter is free to choose certain key settings of his apparatus, those most relevant to the present article concerning the intensity of light used and the detailed specification of the photodetectors. He can choose both the make and the settings of his photodetectors as he wishes, justifying his choice by the apparently laudable aim of producing one click for each input photon.
But he cannot fail to notice that his choice in fact affects the value he obtains for his Bell test statistic. QM tells him that all he is doing by choosing a different setting is changing the “quantum efficiency” (the probability of detection per photon), which ought to have no effect on the result. Rather than challenge the theory, though, the experimenter takes advantage of the resulting flexibility. He publishes just one result, not all the others obtained in preliminary runs with different detector settings or different beam intensities⁹. His aim seems to have become not to search for the best possible explanation for all his observations but to see if he can find particular conditions in which his apparatus seems to obey the QM formula.

A further publication problem, common to the whole scientific endeavor, is the failure to publish both null results and anomalous ones that do not reach “statistical significance”. There are occasions on which it is the anomalies that are critical. Clearly these should be investigated, increasing replication if necessary. A few are to be found in the PhD thesis of the most famous of the Bell test experimenters, Alain Aspect, of which more in Section 2.2 below.

1.2: Background to the Bell tests, the “fair sampling” assumption and the Chaotic Ball model

Bell devised the original test in 1964¹⁰. His inequality was designed to settle experimentally a dispute that had been going on since the 1930s, centered around Einstein, Podolsky and Rosen’s 1935 paper¹¹, which had attempted to clarify the consequences of acceptance of the “nonseparable” formula for separated particles that was implied by the quantum formalism. The situation Bell had in mind was that discussed by Bohm¹², in which atoms that had previously been part of the same molecule separated and were detected after passage between pairs of “Stern-Gerlach” magnets. It seemed reasonable for him to assume that every atom was detected.
It would be categorised either as “spin up” or “spin down” according to which way it had been deflected.

When it came to real experiments, however, it was found that the only practical ones¹³ were those involving pairs of light signals, treated as “photons”. It was recognised that these were not all detected: photodetectors only register a proportion of the input photons. There was a problem: it was not known in advance how many pairs of photons were produced by the source. Clauser, Horne, Shimony and Holt published in 1969 a paper that has been interpreted as proposing what has now become the standard test, the CHSH test. The authors did not in fact recommend it, saying that in practice a different test (effectively the version published by Clauser and Horne in 1974¹⁴, the CH74 test) should be used instead, and until Aspect’s first 1982 experiment this is what was used. Pearle in 1970¹⁵ had explained just why the CHSH test was unsatisfactory: unless the detection efficiency was very high, it was possible there could be “variable detection probabilities” and these could cause a local realist model to violate it. Pearle’s paper, however, does not appear to have been widely read. It is highly mathematical, with little appeal to the intuition.

One may well ask why the community reverted after 1982 to the CHSH test, having previously rejected it. Aspect considered it to be closer to Bell’s original than the CH74 one, the latter using only one of the two possible outputs from the polarisers and requiring extra experimental runs with polarisers absent, but this is not sufficient reason. The rejected test can be derived independently, is equally valid and is, in view of its non-dependency on fair sampling, in most situations superior. A possible reason, however, has recently come to my attention. There exist alternative derivations of the CH74 inequality. It can be derived in a way that parallels that of the CHSH one, copying a whole group of assumptions that depend on fair sampling. Aspect had seen one of these, and perhaps thought it the only one. He thought (and apparently continues to think¹⁶) that the CH74 test is not only a departure from Bell’s intentions but, if possible, subject to even more potential bias than the CHSH one. Where Aspect has led, others have followed.

Again unfortunately so far as the pursuit of truth is concerned, the community has become convinced that it is not possible to test for fair sampling. In fact it is possible to do considerably more in this direction than has become customary. Aspect is among those who did perform the minimum test (see later), looking for constancy of the observed total coincidence count, but after finding (as reported in his PhD thesis¹⁷) that there were slight variations, he did not decide to abandon the Bell test. Instead he devised a further modification that he thought would correct for any bias. Nobody appears to have checked his assumptions here. His test may not have been correct.

Later workers appear to have followed Aspect’s example without much question, assuming the constancy of the total counts without necessarily fully testing it. As will be shown, the greatest variation is expected to be between detector settings midway between those used in the Bell tests. They are frequently not even investigated. A test of constancy for just the “Bell test angles” is not a test of fair sampling at all: the coincidence rates are expected in local realist models as well as quantum theory to all be equal, by symmetry. Though variations in total counts are small and so perhaps difficult to establish, a recent paper by Adenier and Khrennikov¹⁸ suggests a related test, using a straightforward subsidiary experiment, that should demonstrate more clearly the failure of the fair sampling assumption for real optical Bell tests.

The main features of the CHSH and CH74 tests are set out in Appendix A. The critical difference is that the CHSH test relies on “fair sampling”, whilst the CH74 one does not. The latter requires only the relatively innocuous assumption of “no enhancement”: the presence of a polariser does not, for any hidden variable value, increase the probability of detection.

The Chaotic Ball model presented here was designed in 1994 in order to move the above discussion to an intuitive level. The model does not pretend to represent any real experiment, only the principle involved. For the real experiments, it is better to work with algebraic models, introduced briefly later (see Section 2.3). This is because of the geometrical differences between spins (for which it is natural to assume each represented by a vector in three dimensions) and polarisation, which is defined in just two dimensions, with the directions diametrically opposite being equivalent. For the full generality of a local realist model it is best to use computer simulation, modelling each event as it happens¹⁹.

1.3: Other Bell test loopholes

Other loopholes are covered briefly below, but for more information the reader is referred to papers available at http://arxiv.org/abs/quant-ph/ (9711044, 9903066, 9912082 and 0210150). The second of these covers the matter of “subtraction of accidentals”, which can be shown to be of crucial importance in certain experiments. The background to this is covered informally in a paper published in Accountability in Research²⁰.

2: THE “CHAOTIC BALL”

Much of the following material has been available electronically for some time, at http://arxiv.org/abs/quant-ph/0210150. The reader is reminded that the ball model as it stands corresponds to experiments that have never actually been done. It illustrates a principle only.
Let us consider Bohm’s thought experiment, commonly taken as the standard example of the entanglement conundrum that Einstein, Podolsky and Rosen discussed in their seminal 1935 paper. A molecule is assumed to split into two atoms, A and B, of opposite spin, that separate in opposite directions. They are sent to pairs of “Stern-Gerlach” magnets, whose orientations can be chosen by the experimenter, and counts taken of the various “coincidences” of spin “up” and spin “down”.

The obvious “realist” assumption is that each atom leaves the source with its own well-defined spin (a vector pointing in any direction), and it is the fact that the spins are opposite that accounts for the observed coincidence pattern. (The realist notion of spin cannot be the same as the quantum theory one, since in quantum theory “up” and “down” are concepts defined with respect to the magnet orientations, which can be varied. Under quantum mechanics, the particles exist in a superposition of up and down states until measured.)

Bell’s original inequality was designed to apply to the estimated “quantum correlation”²¹ between the particles. He proved that the realist assumption, based on the premise that the detection events for a given pair of particles are independent, leads to statistical limits on this correlation that are exceeded by the QM prediction.

However, as mentioned above, his inequality depended on the assumption that all particles were detected. When detection is perfect there is no problem, but when it is not, the “detection loophole” creeps in. What assumptions can we reasonably make? Under quantum theory, the most natural one is that all emitted particles have an equal chance of non-detection (the sample detected is “fair”, not varying with the settings of the detectors). The realist picture, however, is different.
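As a small aside on the statement that the QM prediction exceeds the realist limit, the following Python sketch evaluates Bell's 1964 inequality, 1 + E(b, c) ≥ |E(a, b) − E(a, c)|, using the standard quantum prediction E = −cos(angle) for opposite-spin pairs. Neither formula is written out in the present paper; both are quoted from the standard literature, and the function names and the choice of coplanar settings (0°, 60°, 120°) are illustrative assumptions only.

```python
import math


def qm_singlet_correlation(angle_deg):
    """Standard QM prediction for opposite-spin pairs: E = -cos(angle between magnets)."""
    return -math.cos(math.radians(angle_deg))


def bell_1964_gap(correlation, a_deg, b_deg, c_deg):
    """Return |E(a,b) - E(a,c)| - (1 + E(b,c)).

    `correlation` is any function of the angle between two settings.
    A positive return value means the 1964 limit is exceeded.
    """
    e_ab = correlation(b_deg - a_deg)
    e_ac = correlation(c_deg - a_deg)
    e_bc = correlation(c_deg - b_deg)
    return abs(e_ab - e_ac) - (1.0 + e_bc)


if __name__ == "__main__":
    # Hypothetical choice of settings: three coplanar directions 60 degrees apart.
    gap = bell_1964_gap(qm_singlet_correlation, 0.0, 60.0, 120.0)
    print("amount by which the limit is exceeded:", gap)
```

The sketch assumes perfect detection, which is exactly the assumption questioned in the remainder of this section.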
Let us replace the detectors by two assistants, Anne (A) and Bob (B), the source of particles by a large ball on which are marked, at opposite points on the surface, an N and an S (fig. 1). The assistants look at the ball, which turns randomly about its centre (the term “chaotic”, though bearing little relation to the modern use of the term, is retained for historical reasons). They record, at agreed times, whether they see an N or an S. When sufficient records have been made they get together and compile a list of the coincidences: the numbers of occurrences of NN, SS, NS and SN, where the first letter is Anne’s and the second Bob’s observation.

The astute reader will notice that, if the vector from S to N corresponds to the “spin” of the atom, the model covers the case in which the spins on the A and B sides are identical, not opposite. Anne and Bob are looking at identical copies of the ball, which can conveniently be represented as a single one. This simplification aids visualisation whilst having no significant effect on the logic. The difference mathematically is just a matter of change of sign, with no effect on numerical values. In point of fact, the assumption of identical spins makes the model better suited to some of the actual optical experiments. Aspect’s, for example, involved plane-polarised “photons” (not, incidentally, circularly polarised, as frequently reported²²) with parallel, not orthogonal, polarisation directions.

With this simplification, geometry dictates that if the ball takes up all possible orientations with equal frequency (there is “rotational invariance”) then the relative frequencies of the four different coincidence types will correspond to four areas on the surface of an abstract fixed sphere as shown in fig. 2. Anne’s observations correspond to two hemispheres, Bob’s to a different pair, the dividing circles being determined by the positions of the assistants.

Fig. 2: The registered coincidences: Chaotic Ball with perfect detectors. The first letter of each pair denotes what Anne records, the second Bob, when the S is in the region indicated.

We conduct a series of experiments, each with fixed lines of sight (“detector settings”) a and b. It can readily be verified that the model will reproduce the standard “deterministic local realist” prediction, with linear relationship between the number of coincidences and φ, the angle between the settings²³. This is shown in fig. 3, which also shows the quantum mechanical prediction, a sine curve.

Fig. 3: Predicted coincidence curves. The straight line gives the local realist prediction for the probability that both Anne and Bob see an S, if there are no missing bands; the curve is the QM prediction, ½ cos²(φ/2).

What happens, though, if the assistants do not both make a record at every agreed time? If the only reason they miss a record is that they are very easily distracted, this poses little problem. So long as the probability of non-detection can be taken to be random, the expected pattern of coincidences will remain unaltered. What if the reason for the missing record varies with the orientation of the ball, though: with the “hidden variable”, λ, the vector from S to N?

Suppose the ball is so large that the assistants cannot see the whole of the hemisphere nearest to them. The picture changes to that shown in fig. 4, in which the shaded areas represent the regions in which, when occupied by the S, coincidences will be recorded as indicated. The ratios between the areas, which are what matter in Bell tests, change; indeed, some areas may disappear altogether. If the bands are very large, there will be certain positions of the assistants for which the estimated quantum correlation (E, equation (1) below) is not even defined, since there are no coincidences.

Fig. 4: Chaotic Ball with missing bands. There is no coincidence unless both assistants make a record, so some data is thrown away.

New decisions are required. Whereas before it was clear that if we wanted to normalise our coincidence rates we would divide by the total number of observations, which would correspond to the area of the whole surface, there is now a temptation to divide instead by the total shaded area. The former is correct if we want the proportion of coincidences to emitted pairs, but it is, regrettably, the latter that has been chosen in actual Bell test experiments.

It is easily shown that the model will now inevitably, for a range of parameter choices, infringe the relevant Bell test if our estimates of “quantum correlation” are the usual ones, namely,

$$E(a, b) = \frac{\mathrm{NN} + \mathrm{SS} - \mathrm{NS} - \mathrm{SN}}{\mathrm{NN} + \mathrm{SS} + \mathrm{NS} + \mathrm{SN}}, \tag{1}$$

where the terms NN etc. stand for counts of coincidences in a self-evident manner. The Bell test in question is the CHSH test referred to above. It takes the form −2 ≤ S ≤ 2, where the test statistic is

$$S = E(a, b) - E(a, b') + E(a', b) + E(a', b'). \tag{2}$$

The parameters a, a′, b and b′ are the detector settings: to evaluate the four terms four separate sub-experiments are needed. The settings chosen for the Bell test are those that produce the greatest difference between the QM and standard local realist predictions, namely a = 0, a′ = 90º, b = 45º and b′ = 135º. Since we are assuming rotational invariance, the value of E does not depend on the individual values of the parameters but on their difference, φ = b − a, which is 45º for three of the terms and 135º for the fourth. We can therefore immediately read off the required values from a graph such as that of fig. 5, where the curve is calculated from the geometry of fig. 4 (see next section and Appendix B).

Fig. 5: Predicted quantum correlation, E, versus angle. The curve corresponds to (moderate-sized) missing bands, the straight line to none. See Appendix B for the formula for the central section of the curve.
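The counting procedure behind equations (1) and (2) can be sketched as a small event-by-event simulation. This is an illustrative sketch only, not the author's own program: the function names, the number of trials, the seed, and the way a "missing band" is encoded (a band of the stated angular width, centred on the edge of each assistant's visible hemisphere, in which no record is made) are assumptions introduced here.

```python
import math
import random


def random_mark_position(rng):
    """Uniformly random position of the S mark on the unit sphere."""
    while True:
        x, y, z = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)
        r = math.sqrt(x * x + y * y + z * z)
        if r > 1e-12:
            return x / r, y / r, z / r


def observe(s, setting_deg, band_deg):
    """Record of an assistant looking along `setting_deg` (in the x-y plane).

    Returns 'S', 'N', or None when the marks lie in the missing band.
    """
    t = math.radians(setting_deg)
    dot = s[0] * math.cos(t) + s[1] * math.sin(t)
    edge = math.sin(math.radians(band_deg) / 2.0)  # half-width of the band
    if dot > edge:
        return "S"
    if dot < -edge:
        return "N"
    return None


def correlation(a_deg, b_deg, band_deg, trials, rng):
    """Estimate E(a, b) as in equation (1): divide by the coincidences seen."""
    same = diff = 0
    for _ in range(trials):
        s = random_mark_position(rng)
        anne = observe(s, a_deg, band_deg)
        bob = observe(s, b_deg, band_deg)
        if anne is None or bob is None:
            continue  # no coincidence unless both make a record
        if anne == bob:
            same += 1
        else:
            diff += 1
    if same + diff == 0:
        raise ValueError("E is undefined: no coincidences were recorded")
    return (same - diff) / (same + diff)


def chsh(band_deg, trials=50_000, seed=1):
    """CHSH statistic of equation (2) at a = 0, a' = 90, b = 45, b' = 135 degrees."""
    rng = random.Random(seed)
    a, a2, b, b2 = 0.0, 90.0, 45.0, 135.0
    return (correlation(a, b, band_deg, trials, rng)
            - correlation(a, b2, band_deg, trials, rng)
            + correlation(a2, b, band_deg, trials, rng)
            + correlation(a2, b2, band_deg, trials, rng))
```

Calling `chsh(0.0)` models perfect detection and should return a value close to 2, up to sampling noise, while `chsh(30.0)` models bands subtending 30° and should return a value clearly above 2.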
When there are no missing bands it is clear that the numerical value of each term is 0.5 and that they are all positive. Thus with no missing bands the model shows that we have exact equality, with S actually equalling 2. If we do have missing bands, however, although the four terms are still all equal and all positive, each will have increased! The Bell test will be infringed. An “imperfection” has increased the correlation, in contradiction to the opinion, voiced among others by Bell himself, that imperfections are unlikely ever to do this.

It is not hard to imagine real situations, especially in the optical experiments, in which something like “missing bands” will occur, biasing this version of Bell’s test in favour of quantum mechanics. Note that the “visibility” test used in recent experiments such as Tittel’s long-distance Bell tests²⁴ is equally unsatisfactory, biased from this same cause. The realist upper limit on the standard test statistic when there is imperfect detection is 4, not 2, well above the quantum-mechanical one of 2√2 ≈ 2.8. The visibility can be as high as 1, not limited to the maximum of 0.5 that follows from the commonly-accepted assumptions.

2.1: Detailed Predictions for the basic model

For the case of “hard-edged” symmetrical missing bands, the predictions can be given exactly for any choice of missing band width and angle between detectors. The formula for the coincidence rate P_SS (fig. 6) is given in Appendix B, though qualitative predictions can be made just by inspection of diagrams such as fig. 4. The graphs below show the results when the missing bands subtend an angle of 30º at the centre of the ball, corresponding to an angle of 75° = 5π/12 in the notation of the appendix.

Fig. 6: Predicted coincidence rate, P_SS. Note that the curve is actually zero for certain angles.

This has the interesting consequence that under certain conditions the quantum correlation is not even defined. These correspond to cases in which Anne and Bob are very close to the ball so that each sees only a small circle. Their circles may not overlap: they may score no coincidences. In real experiments this would never quite be seen to happen, since there are always “dark counts”, but no useful Bell test could be conducted: the variance of the statistic would be too large.

Fig. 7: Total coincidence rate, T_obs/N.

The total coincidence rate, T_obs/N = (NN + SS + NS + SN)/N, where N is the total number of observations, is illustrated for this model in fig. 7. The fact that it is not constant provides a useful, though not quite conclusive, test for unfair sampling: the presence of something equivalent to our missing bands. In real situations, in which there are no hard edges to the bands but a gradation from white to black, the curve will be smoother and the contrast between maximum and minimum perhaps not so great, but if the “detection loophole” is in operation and is causing infringements of the CHSH inequality, some difference between the total at 0º and that at 90º should be present and detectable. It is important to notice, though, that no difference is to be expected between the “Bell test angles”, 45º and 135º, a fact that can be deduced from Pearle’s paper of 1970 but which seems now to have been forgotten. (See also Section 2.2 below.)

We can derive the expected value of the ordinary “normalised” quantum correlation (1), in which division is by NN + SS + NS + SN, with results as shown in fig. 5, but it is of interest to look also at the “un-normalised” one, (NN + SS – NS – SN)/N, with expected value P_NN + P_SS – P_NS – P_SN, plotted in fig. 8.

Fig. 8: Un-normalised “quantum correlation”. The match with the QM prediction is considerably less impressive, the curve not reaching the maximum of 1 and not having the feature of a zero slope for parallel detectors.

Whilst for the chosen example (missing bands subtending 30°) the model gives the CHSH test statistic of S = 3.331 > 2, the un-normalised estimate will never exceed 2 because the values at the “Bell test angles” will always all be numerically less than 0.5. Clearly (NN + SS – NS – SN)/N is an unbiased estimate of the quantum correlation; the usual expression, (NN + SS – NS – SN)/(NN + SS + NS + SN), is not. Bell’s inequality assumes the use of unbiased estimates.

2.2: Discussion

Is the kind of missing band effect modelled by the Chaotic Ball likely to occur in real experiments? The answer is, effectively, “Yes.” If an experiment using Stern-Gerlach magnets and spin-1/2 particles were ever to be possible, then perhaps all particles would be detected so the problem would not arise, though if there were to be any non-detections, is it not likely that they would occur mostly for those particles whose spin was almost orthogonal to the direction determined by the magnets, so that it was not clear in which direction they “should” be deflected?

The vast majority of real experiments to date, though, have used light, with the direction of plane polarisation used in place of spin. In these, the sampling will be biased unless Malus’ Law (that transmitted intensity is proportional to cos²θ, where θ is the angle between the polarisation direction and the polariser axis) is obeyed exactly, for all intensities of input signal. (Note that I am assuming that individual “photons” are really classical pulses of light, and these can vary in intensity.) The situation that gives rise to effectively missing bands and hence to high values of the CHSH test statistic is one in which the probabilities of detection are (whether because of the behaviour at the polariser or at the detector) lower than given by Malus’ Law for angles of polarisation of around 45º and less.

Little or no effort seems to be made by most experimenters to check the true operating characteristics of their apparatus, which is, in practice, not perfect.
Whilst in most contexts imperfections can simply be accepted, in this case, where they can bias the results, the true characteristics need to be built into the model.

Is enough done to test for the presence of missing bands (or regions of the hidden variable space that have less than the full probability of detection)? The answer would seem to be that, as mentioned in the introductory sections, in recent experiments understanding of what is needed has somehow become lost. Though, for example, Fattal et al.²⁵ check that the total coincidence count is constant for their Bell test angles, they do not report looking at any others. From fig. 7 above it is immediately clear that this is not enough: the total for the Bell test angles is not expected to vary. For a test of constancy of total coincidence count to be of any value, other angles, preferably 0 and 90º (0 and 45º in polarisation experiments), must be included. Note that testing for constancy of the singles rates is by no means sufficient: it is constant for the ball model with missing bands, yet the total coincidence count varies.

The CHSH test must, I think, be taken to be unreliable, probably always biased towards the quantum theory prediction. As already mentioned, Clauser and Horne devised a test (the CH74 test, see Appendix A) that is, so long as there is no “enhancement”, not intrinsically biased. It is, in my view unsurprisingly, not so readily violated. Most early experiments did violate it to some extent, but not by the margins achieved for the CHSH test, the reasons for violation lying, I believe, in more subtle loopholes such as synchronisation problems (see below).

The fact that this alternative test is available and less prone to bias is yet another truth that seems to have been lost. I have already suggested a possible reason: perhaps Alain Aspect’s belief is typical. He states¹⁶ that the CH74 test depends on the fair sampling assumption.
This is not in fact true, or at least, not in a way that directly causes bias. It is clear from the referenced paper that his belief comes from use of a derivation that does depend on fair sampling. Clauser and Horne’s derivation, however, does not. It follows that the test itself does not.

2.3: Generalising the ball model

As it stands, the model explains the fact that Aspect observed slight variations in the total coincidence count. It can readily be generalised to explain another “anomaly” mentioned in his PhD thesis in relation to his two-channel experiment, namely the fact that his counts equivalent to my NS and SN were not quite equal. There was a small difference, not quite reaching “statistical significance”. This can be explained if we allow for two asymmetries in his actual setup: the fact that his polarisers did not split exactly 50-50 and the fact that the “photons” on the two sides were of different wavelengths, requiring different photodetectors whose characteristics could not be expected to be identical. The situation thus corresponds, in principle, to one in which the missing bands for Anne and Bob are of unequal width and are not centralised.

To achieve a better fit with the QM prediction, we can make the edges of the ball “fuzzy”, so that the probability of detection varies gradually from 0 to 1. There is no purpose, however, in carrying this out in detail, since what is really needed is a model for the two-dimensional case with equivalence between opposite points that corresponds to the real optical experiments. We turn instead to the general realist model, coming directly from Bell’s assumptions so long as there are no problems with synchronisation etc. The coincidence probability is:

$$P(a, b) = \int d\lambda \, \rho(\lambda) \, p_A(a, \lambda) \, p_B(b, \lambda) \qquad (3)$$

where the integration is performed over the complete “hidden variable” space spanned by $\lambda$, and the weighting factor, $\rho(\lambda)$, represents the relative frequencies of the different “states”, $\lambda$, of the source. $p_A$ and $p_B$ are the probabilities of detection, given the detector setting ($a$ or $b$) and $\lambda$. The simplest assumption is that $p_A$ and $p_B$ are $\cos^2(a - \lambda)$ and $\cos^2(b - \lambda)$ respectively, reflecting adherence to Malus’ law on passage through the polariser, together with “perfect” detectors, the probability of detection being exactly proportional to the input intensity. $\rho$ is constant if we have “rotational invariance” of the source. The predicted quantum correlation for this case is shown in fig. 9. Marshall et al. [26] have shown in their article of 1983 how replacing the cosine-squared terms by rather more general expressions can produce realist predictions arbitrarily close to the quantum theory curve.

Fig. 9: Local realist prediction for “quantum correlation” for (perfect) optical Bell tests. The full curve is the realist prediction, the dotted curve the QM one.

For yet further generality, covering problems such as the presence of accidentals or matters to do with synchronisation (see next section), recourse to computer simulation, taking full account of the specific experimental details, is likely to be needed. (See endnote 19.) The principle is always the same, and always straightforward. There should be no need to call in an expert.

3: OTHER LOOPHOLES

The detection loophole is, at least among professionals, well known, but the fact that it affects some versions of Bell’s test and not others is perhaps less well understood. Different loopholes apply to different versions, for each version comes with its attendant assumptions. Some come very much under the heading of “experimental detail” and are, as such, of little interest to the theoretician. If we wish to decide on the value to be placed on a Bell test, however, such details cannot be ignored.

I. Subtraction of “accidentals”: Adjustment of the data by subtraction of “accidentals”, though standard practice in many applications, can bias Bell tests in favour of quantum theory. After a period in which this fact has been ignored by some experimenters, it is now once again accepted [27]. The reader should be aware, though, that it invalidates many published results [28].

II. Failure of rotational invariance: The general form of a Bell test does not assume rotational invariance, but a number of experiments have been analysed using a simplified formula that depends upon it. It is possible that there has not always been adequate testing to justify this. Even where, as is usually the case, the actual test applied is general, if the hidden variables are not rotationally invariant, i.e. if some values are favoured more than others, this can result in misleading descriptions of the results. Graphs may be presented, for example, of coincidence rate against the difference between the settings a and b, but if a more comprehensive set of experiments had been done it might have become clear that the rate depended on a and b separately [29]. Cases in point may be Weihs’ experiment, presented as having closed the “locality” loophole [30], and Kwiat’s demonstration of entanglement using an “ultrabright photon source” [31].

III. Synchronisation problems: There is reason to think that in a few experiments bias could be caused when the coincidence window is shorter than some of the light pulses involved [32]. These include one of historical importance, that of Freedman and Clauser in 1972 [33], which used a test not sullied by either of the above possibilities.

IV. “Enhancement”: Tests such as that used by Freedman and Clauser (essentially the CH74 test) are subject to the assumption that there is “no enhancement”, i.e. that there is no hidden variable value for which the presence of a polariser increases the probability of detection. This assumption is considered suspect by some authors, notably Marshall and Santos, but in practice, in the few instances in which the CH74 inequality has been used, the test has been invalidated by other more evident loopholes such as the subtraction of accidentals.

V. Asymmetry: Whilst not necessarily invalidating Bell tests, the presence of asymmetry (for instance, the different frequencies of the light on the two sides of Aspect’s experiments) increases the options for local realist models [34].

A loophole that is notably absent from the above list is the so-called “timing”, “locality” or “light-cone” one, whereby some unspecified mechanism is taken as conveying additional information between the two detectors so as to increase their correlation above the classical limit. In the view of many realists, this has never been a serious contender. John Bell supported Aspect’s investigation of it (see page 109 of Speakable and Unspeakable [35]) and had some active involvement with the work, being on the examining board for Aspect’s PhD. Weihs improved upon the test in his experiment of 1998 [30], but nobody has ever put forward plausible ideas for the mechanism. Its properties would have to be quite extraordinary, as it is required to explain “entanglement” in a great variety of geometrical setups, including over a distance of several kilometres in the Geneva experiments of 1997-8 [24, 28].

There may well be yet more loopholes. For instance, in many experiments the electronics is such that simultaneous ‘+’ and ‘–’ counts from both outputs of a polariser can never occur, only one or the other being recorded. Under QM, they will not occur anyway, but under a wave theory the suppression of these counts will cause even the basic realist prediction to yield “unfair sampling”. The effect is negligible, however, if the detection efficiencies are low, since the three- or four-fold coincidences involved (two on one side, one or more on the other) then hardly ever happen.
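As a numerical illustration of equation (3) in section 2.3, the following Python sketch evaluates the integral for the simplest case described there: a rotationally invariant source (constant weighting, here taken as 1/π on the interval [0, π)) and Malus' law with perfect detectors. The function names, the midpoint-rule integration and the sin² form used for the '–' channel of a two-channel polariser are assumptions made for this example only; they are not taken from any of the experiments discussed. Under these assumptions the coincidence probability should come out as 1/4 + (1/8) cos 2(a – b), giving a two-channel correlation of ½ cos 2(a – b), half the amplitude of the QM curve, and a CHSH statistic of √2 at the usual polarisation angles.

```python
import math


def coincidence_probability(a, b, p_a, p_b, steps=3600):
    """Equation (3) with a uniform source density rho = 1/pi on [0, pi).

    p_a and p_b are detection probabilities p(setting, lam).
    The integral is approximated by the midpoint rule.
    """
    total = 0.0
    for k in range(steps):
        lam = (k + 0.5) * math.pi / steps  # midpoint of the k-th slice
        total += p_a(a, lam) * p_b(b, lam)
    return total / steps  # mean over lam equals the integral weighted by 1/pi


def plus(setting, lam):
    """Malus' law with a perfect detector: the '+' channel."""
    return math.cos(setting - lam) ** 2


def minus(setting, lam):
    """Assumed complementary '-' channel of a two-channel polariser."""
    return math.sin(setting - lam) ** 2


def correlation(a, b):
    """Two-channel estimate E(a, b) built from the four coincidence terms."""
    pp = coincidence_probability(a, b, plus, plus)
    mm = coincidence_probability(a, b, minus, minus)
    pm = coincidence_probability(a, b, plus, minus)
    mp = coincidence_probability(a, b, minus, plus)
    return (pp + mm - pm - mp) / (pp + mm + pm + mp)


def chsh(a, a2, b, b2):
    """CHSH statistic S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return (correlation(a, b) - correlation(a, b2)
            + correlation(a2, b) + correlation(a2, b2))


if __name__ == "__main__":
    deg = math.pi / 180.0
    for diff in (0.0, 22.5, 45.0, 67.5, 90.0):
        p = coincidence_probability(0.0, diff * deg, plus, plus)
        e = correlation(0.0, diff * deg)
        qm = math.cos(2 * diff * deg)
        print(f"{diff:5.1f} deg  P++ = {p:.4f}  E = {e:+.4f}  QM E = {qm:+.4f}")
    s = chsh(0.0, 45 * deg, 22.5 * deg, 67.5 * deg)
    print(f"CHSH S = {s:.4f} (local realist bound 2)")
```

Passing different functions for `p_a` and `p_b` is a simple way to explore departures from Malus' law or imperfect detectors, in the spirit of the more general expressions mentioned in section 2.3.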
4: CONCLUSION

The “Chaotic Ball” models a hypothetical Bell test experiment in a manner that encourages the use of intuition and realism. It illustrates the fact, well known to those working in the field, that if not all particles are detected there is risk of bias in the standard tests used, which are no longer able to discriminate between the nonseparable quantum-mechanical model and local realism. Knowledge of an alternative test, and of the fact that this test does not suffer from the same bias, appears to have been lost, as has understanding of a reasonable check that could at least indicate when the observed coincidences are not a fair sample.

Perhaps too much emphasis has been placed on Bell tests at the expense of ordinary scientific method, which would have led to comprehensive investigation of the relative merits of the QM versus local realist models. As suggested above, there may be deviations from Malus’ Law that become noticeable when the light is weaker or the detectors less efficient. Should not the intensity of the light and characteristics of the detectors be parameters of a genuine physical model?

Contrary to the expressed opinion of such authorities as Bell (page 109 of Speakable and Unspeakable) and Clauser and Shimony [36], the Chaotic Ball model tells us that imperfections do not always decrease quantum correlations. Less efficient detectors are likely to result in wider effective missing bands and hence stronger estimated correlations.

Hopefully, I have convinced readers that not all local realist models are contrived, or are as weird as quantum theory. They do not, as expressed for example by Prof Laloë [37], require conspiracies between the detectors. Nor are they complicated. The basic formula (equation (3) above), valid in the absence of complicating factors such as “accidentals” or synchronisation problems, has been known all along. It is a straightforward consequence of the assumption that the observed correlations originate from shared properties acquired at the source, and that the individual detection events are independent.

The realist model for the optical experiments seems to require that we model light as a wave [38], assuming the energy of each individual light pulse (“photon”) to be split at the polariser, something no photon can do. The intensity of the emerging pulse then influences statistically the probability of the detector firing. Despite the success of the photon model of light in many applications of quantum optics, this success is at the price of recognised conceptual difficulties. The possibility that wave models that allow for the idiosyncrasies of the apparatus used (deviations from Malus’ Law, for example) may be able to account in a much more straightforward manner for all “quantum optical” effects is the subject of ongoing research.

The spin-offs from experiments related to attempted applications of quantum entanglement (improved technology, for example, in the areas of optical and nanoscale communications and computing) justify continued research in this area. The technology stands in its own right. The theory behind it, though, remains an open question. It is likely to remain so for some time to come, since, by the indiscriminate rejection of all papers on the Bell test loopholes (see Appendix C), the prestigious journals are inadvertently suppressing physically plausible local realist explanations.

A frequent objection is that local realism cannot match quantum theory when it comes to accurate quantitative predictions. True, it cannot easily match exactly the quantum-mechanical coincidence formulae (the ball model, illustrating principles only, does not even attempt to), but what is required is surely a match with experimental results, not with the quantum theory predictions. Though the quantum-mechanical predictions have generally been presented as being correct, do they remain correct when the experimental conditions are slightly altered? Do they correctly predict the whole observed coincidence curve? The QM formula can be, and frequently has been, altered to allow for a few changes in conditions, but such adaptations require considerable expertise in the formalism. Adapting the local realist model, on the other hand, requires no special training in any formalism, only understanding of chains of cause and effect.

“Any theory will account for some facts; but only the true explanation will satisfy all the conditions of the problem …” (William Crookes, 1875 [39])

ACKNOWLEDGEMENTS

Thanks are due to Franck Laloë for encouragement to air again the Chaotic Ball model. The work would not have been completed without the moral support of David Falla and Horst Holstein of the University of Wales, Aberystwyth, and of the many who have expressed appreciation of my web site or contributions to Internet discussions. My use, from 1993 to 2003, of the computer and library facilities at Aberystwyth was by courtesy of the Department of Computer Science, of which I was an associate member.

APPENDIX A: COMPARISON OF CHSH AND CH74 TESTS

| | CHSH | CH74 |
|---|---|---|
| Source | Attributed to CHSH 1969 paper. Never in fact supported by authors. Best derivation: appendix to (reproduced in quant-ph/9903066). | CH74 paper |
| Experimental design | Two-channel [40] | Single-channel |
| Formula | $-2 \le S \le 2$, where $S = E(a, b) - E(a, b') + E(a', b) + E(a', b')$ and $E(a, b) = \dfrac{N_{++} + N_{--} - N_{+-} - N_{-+}}{N_{++} + N_{--} + N_{+-} + N_{-+}}$ | $S \le 0$, where $S = P(a, b) - P(a, b') + P(a', b) + P(a', b') - P(a', \infty) - P(\infty, b)$ and $P(a, b) = \dfrac{N(a, b)}{N(\infty, \infty)}$, the symbol $\infty$ indicating absence of polariser [41] |
| Used | Variants of this and the related “visibility” test have been used in the majority of experiments since 1982. | Variants were used in all experiments up to 1982. |
| Advantages | Relatively easy to violate. | Does not depend on fair sampling. |
| Disadvantages | Depends on the fair sampling assumption, which implies among other things: $N_{++} + N_{--} + N_{+-} + N_{-+} = N$ | Assumes “no enhancement”. Hard to violate. |

APPENDIX B: CALCULATED PREDICTIONS OF THE CHAOTIC BALL MODEL

Fig. B1: Definition of angles used in equation (B1).

The main formula for the proportion $P_{SS}$ of “like” coincidences such as SS with respect to the number of emitted pairs N comes from the area of overlap of two circles on the surface of a sphere (see figs. 4 and B1). The result, as calculated by H. Holstein [42], is:

$$P_{SS}(\theta, \gamma) = \frac{1}{\pi}\left(\cos^{-1}\!\left(\frac{\sin\theta}{\sin\gamma}\right) - \cos^{-1}\!\left(\frac{\tan\theta}{\tan\gamma}\right)\cos\gamma\right), \qquad (B1)$$

where $\theta = \phi/2$ ($\phi$ being the angle between the viewing directions a and b) and $\gamma$ is the half-angle defining the proportion of the surface for which each assistant makes a definite reading (zero corresponds to none; $\pi/2$ to the whole surface). $P_{SS}$ achieves a maximum of $\tfrac{1}{2}(1 - \cos\gamma)$ when $\theta = 0$, which is less than the QM prediction of 0.5 unless $\gamma$ is $\pi/2$. When $\theta \ge \gamma$, it is zero (see fig. 6 of main text).

APPENDIX C: POLICY STATEMENT

Attempts at publishing the core of the current paper in American Physical Society journals have failed due to application of the following editorial policy statement:

“In 1964, John Bell proved that local realistic theories led to an upper bound on correlations between distant events (Bell's inequality) and that quantum mechanics had predictions that violated that inequality. Ten years later, experimenters started to test in the laboratory the violation of Bell's inequality (or similar predictions of local realism). No experiment is perfect, and various authors invented "loopholes" such that the experiments were still compatible with local realism. Of course nobody proposed a local realistic theory that would reproduce quantitative predictions of quantum theory (energy levels, transition rates, etc.).

This loophole hunting has no interest whatsoever in physics. It tells us nothing on the properties of nature. It makes no prediction that can be tested in new experiments. Therefore I recommend not to publish such papers in Physical Review A. Perhaps they might be suitable for a journal on the philosophy of science.”

The above attitude has also caused failure of another important paper, that on the “Subtraction of Accidentals”.

Despite my protestations that the loopholes are there to be discovered, not “invented”; that it is unreasonable to expect a paper that explains the Bell test results (essentially a matter of logic and experimental method) also to discuss energy levels and transition rates; that my ideas do lead to new physics (in that they give new reason to replace the photon model of light by a wave model); that they do make testable predictions; and that it is not philosophers of science who need to know about them but experimenters and theorists, there seems never to have been any chance of acceptance of my submissions. I am not alone in this experience. Though I should be the first to admit that most so-called “realist” papers on the subject fully deserve rejection, to hold rigidly to the above policy statement cannot be in the long-term interests of physics.

[1] A. Zeilinger and D.
Greenberger, “Petition to the American Physical Society for the Creation of a Topical Group on Quantum Information, Concepts, and Computation (Quicc)”, New York, April 10, 2002, http://www.sci.ccny.cuny.edu/~greenbgr/letter.html

[2] If QM were logical, would we find both Bohr and Feynman telling us that nobody understands it?

[3] A. Aspect, et al., Phys. Rev. Lett. 47, 460 (1981); 49, 91 (1982) and 49, 1804 (1982). The two 1982 papers are available electronically at http://fangio.magnet.fsu.edu/~vlad/pr100/

[4] A. Zeilinger et al., Physics Today, February 1999, pp 11-15 and 89-92, correspondence re Goldstein’s article, Physics Today, March 1998, pp 42-46. What Zeilinger and others do not seem to realise is that, if the results really can be fully explained as the consequences of change of information, then this amounts to admission that the Bell inequality being used is not a genuine one. If it were, then no such simple explanation would be possible.

[5] A. F. Kracklauer (private communication) argues that Bell “misused the chain rule” of probability theory. [To me, his arguments amount to evidence that he has not understood the role of hidden variables.] E. T. Jaynes argues that Bell should have used Bayesian methods. See his article: “Clearing up the mysteries (the original goal)”, pp. 1-27 of Maximum Entropy and Bayesian Methods, J. Skilling, Editor, Kluwer Academic Publishers, Dordrecht, Holland (1989), http://bayes.wustl.edu/etj/articles/cmystery.pdf [Much as I admire Jaynes, he is wrong here. Indeed, his simple example of balls in a “Bernoulli urn” is not appropriate, since in the real experiments we effectively have sampling with replacement, not without.]

[6] Bell in his original paper (ref 10 below) used Boolean notation, A for ‘+’ outcome, Ā for ‘–’. This precludes a zero or null outcome or (a possibility rarely even mentioned) the simultaneous registering of a ‘+’ and a ‘–’ from the two output ports of the same polariser. Though it is possible to adapt Bell’s notation to cover zeros, it is better by far to abandon it completely when dealing with the optical experiments and switch to Clauser and Horne’s 1974 approach. C & H concentrate on just the ‘+’ outcomes and use the notation p(λ, a) for the probability of a detection of a “photon” with hidden variable λ by an analyser set at angle a.

[7] M. Wolff, Exploring the Physics of the Unknown Universe, Technotran Press, California 1990.

[8] D. Aerts et al., “The Violation of Bell Inequalities in the Macroworld”, http://arxiv.org/abs/quant-ph/0007044 or the original (1982) exposition of Aerts’ linked-vessel analogy, item 11 at http://www.vub.ac.be/CLEA/aerts/publications/chronological.html

[9] It should be noted that, under a wave model of light, there is more than one way to alter a beam intensity. The number of light pulses per second can be altered, or the intensity per pulse, or both at once. If all that is altered is the number per second, keeping the intensity per pulse fixed, then QM is quite correct: this should have no effect on the Bell test. If, however, the apparatus is manipulated (by means of focusing, filters or whatever) so that the intensity per pulse is changed, this can affect the result, since real photodetectors are not quite as “linear” as they should be.

[10] Bell, John S, “On the Einstein-Podolsky-Rosen paradox”, Physics 1, 195 (1964), reproduced as Ch. 2, pp 14-21, of J. S. Bell, Speakable and Unspeakable in Quantum Mechanics, (Cambridge University Press 1987).

[11] Einstein, A., B. Podolsky, and N. Rosen, “Can Quantum-Mechanical Description of Physical Reality be Considered Complete?”, Phys. Rev. 47, 777 (1935).

[12] Bohm, D., Quantum Mechanics, Prentice-Hall 1951.

[13] There have been several attempts at Bell tests using particles but none has been satisfactory. They are much more difficult both to conduct and to interpret, the interpretation invariably depending strongly on theory. See for example M. Lamehi-Rachti and W. Mittig, “Quantum Mechanics and hidden variables: a test of Bell’s inequality by the measurement of the spin correlation in low-energy proton scattering”, Phys. Rev. D 14, 2543-2555 (1976).

[14] J. F. Clauser and M. A. Horne, “Experimental consequences of objective local theories”, Phys. Rev. D 10, 526-35 (1974).

[15] Pearle, P, “Hidden-Variable Example Based upon Data Rejection”, Phys. Rev. D 2, 1418-25 (1970).

[16] Alain Aspect, “Bell’s theorem: the naïve view of an experimentalist”, Text prepared for a talk at a conference in memory of John Bell, held in Vienna in December 2000. Published in “Quantum [Un]speakables – From Bell to Quantum information”, edited by R. A. Bertlmann and A. Zeilinger, Springer (2002); http://arxiv.org/abs/quant-ph/0402001

[17] A. Aspect, Trois tests expérimentaux des inégalités de Bell par mesure de corrélation de polarisation de photons, PhD thesis No. 2674, Université de Paris-Sud, Centre D’Orsay, (1983).

[18] G. Adenier and A. Khrennikov, “Testing the Fair Sampling Assumption for EPR-Bell Experiments with Polarizer Beamsplitters”, http://arXiv.org/abs/quant-ph/0306045

[19] Note that it is possible to model any real Bell test on a computer by taking each event as it happens: pairs of light signals are generated, with correlated (equal?) polarisation directions; the intensity of each is reduced by passage through a polariser; the resulting signal interacts with a detector and is either detected or not, at a time that is partly random; coincidence circuitry tests whether or not the detection times of the two signals are within a chosen time window. This procedure, as the author admits (private correspondence), is not carried out in Kracklauer’s reported realist “simulation” in “Betting on Bell”, http://arxiv.org/abs/quant-ph/0302113 . Given his method, it comes as no surprise that he comes to a false conclusion: that a local realist model that obeys Malus’ Law exactly can reproduce the QM formula.

[20] C. H. Thompson, “The Tangled Methods of Quantum Entanglement Experiments”, Accountability in Research, 6 (4), 311-332 (1999); http://freespace.virgin.net/ch.thompson1/Tangled/tangled.html

[21] The definition that Bell gave (page 15 of ref 35) for quantum correlation was the “expectation” value of the product of the “outcomes” on the two sides, where the “outcome” is defined to be +1 or –1 according to which of two possible cases is observed. It is to be assumed that he was using the word “expectation” in its usual statistical sense and that an unbiased estimate would be used.

[22] See for example Johnjoe McFadden, Quantum Evolution: Life in the Multiverse, (Flamingo, London, 2000) page 200.

[23] The prediction of a linear relationship for the “perfect” case is most easily verified by drawing diagrams of the ball as seen from above. The dividing circles are then straight lines through the centre and the areas required are proportional to the angles between them.

[24] W. Tittel et al., “Experimental demonstration of quantum-correlations over more than 10 kilometers”, Phys. Rev. A, 57, 3229 (1997), http://arxiv.org/abs/quant-ph/9707042

[25] D. Fattal et al., “Entanglement formation and violation of Bell’s inequality with a semiconductor single photon source”, Phys. Rev. Lett. 92, 037903 (2004), http://arxiv.org/abs/quant-ph/0305048

[26] T. W. Marshall, E. Santos and F. Selleri: “Local Realism has not been Refuted by Atomic-Cascade Experiments”, Phys. Lett. A 98, 5-9 (1983).

[27] W. Tittel et al., “Long-distance Bell-type tests using energy-time entangled photons”, http://arxiv.org/abs/quant-ph/9809025 (1998).

[28] C. H. Thompson, “Rotational invariance, phase relationships and the quantum entanglement illusion”, http://xxx.lanl.gov/abs/quant-ph/9912082 (1999).

[29] C. H. Thompson, “Rotational invariance, phase relationships and the quantum entanglement illusion”, http://xxx.lanl.gov/abs/quant-ph/9912082 (1999).

[30] G. Weihs, et al., “Violation of Bell’s inequality under strict Einstein locality conditions”, Phys. Rev. Lett. 81, 5039 (1998) and http://arXiv.org/abs/quant-ph/9910080, and private correspondence.

[31] P. G. Kwiat et al., “Ultrabright source of polarization-entangled photons”, Phys. Rev. A 60 (2), R773-R776 (1999), http://arXiv.org/abs/quant-ph/9810003

[32] C. H. Thompson, “Timing, ‘accidentals’ and other artifacts in EPR Experiments” (1997), http://arxiv.org/abs/quant-ph/9711044

[33] S. J. Freedman and J. F. Clauser, Phys. Rev. Lett. 28, 938 (1972).

[34] S. Caser, “Objective local theories and the symmetry between analysers”, Phys. Lett. A 102, 152-8 (1984).

[35] J. S. Bell, Speakable and Unspeakable in Quantum Mechanics, (Cambridge University Press 1987).

[36] J. F. Clauser and A. Shimony, “Bell’s theorem: experimental tests and implications”, Reports on Progress in Physics 41, 1881 (1978).

[37] F. Laloë, “Do we really understand quantum mechanics? Strange correlations, paradoxes and theorems”, Am. J. Phys., 69(6), 655-701, (June 2001).

[38] Adenier and Khrennikov (ref 18 above) have devised a photon model with variable detection probabilities, but it does not make realistic assumptions about the behaviour at polarisers. It assumes that the light that emerges has a wide spread of possible polarisation directions, which is known experimentally not to be the case.

[39] W. Crookes, “The Mechanical Action of Light”, Quarterly Journal of Science VI, 337-352 (July 1875).

[40] Though intended for use with two-channel detectors, the CHSH test can, with a little ingenuity, be used for single-channel experiments. See for example P. G. Kwiat et al., “Ultrabright source of polarization-entangled photons”, Phys. Rev. A 60 (2), R773-R776 (1999), http://arXiv.org/abs/quant-ph/9810003

[41] Though the derivation of the CH74 inequality is in terms of probabilities, the actual test (as Clauser and Horne recognised) could be conducted on the raw counts, since the limit is zero. Normalising by division by N(∞, ∞) is for convenience when comparing experiments.

[42] H. Holstein, private communication, 2002.
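Endnote 19 remarks that a Bell test can be modelled on a computer by taking each event as it happens. As a minimal sketch of that idea for the Chaotic Ball of Appendix B, the Python example below assumes that the ball's S pole points in a uniformly random direction, and that an assistant records S when the pole lies within the half-angle gamma of the viewing direction, N when it lies within gamma of the opposite direction, and nothing otherwise (the missing band). All function names and parameters are hypothetical, chosen for this illustration. The helper `cap_overlap` is the standard closed form for the overlap of two equal spherical caps, which is what Appendix B describes equation (B1) as computing; it is included here as an assumption so that the simulated proportion of SS coincidences can be compared with it.

```python
import math
import random


def random_pole(rng):
    """Direction of the ball's S pole, uniform over the sphere."""
    z = rng.uniform(-1.0, 1.0)
    azimuth = rng.uniform(0.0, 2.0 * math.pi)
    r = math.sqrt(1.0 - z * z)
    return (r * math.cos(azimuth), r * math.sin(azimuth), z)


def reading(pole, view, cos_gamma):
    """'S' or 'N' if a pole is seen clearly, None inside the missing band."""
    d = sum(p * v for p, v in zip(pole, view))
    if d > cos_gamma:
        return "S"
    if d < -cos_gamma:
        return "N"
    return None


def simulate(phi, gamma, pairs=100_000, seed=1):
    """Coincidence and singles counts for viewing directions phi apart."""
    rng = random.Random(seed)
    anne = (0.0, 0.0, 1.0)
    bob = (math.sin(phi), 0.0, math.cos(phi))
    cos_gamma = math.cos(gamma)
    counts = {"SS": 0, "NN": 0, "SN": 0, "NS": 0}
    singles = {"anne": 0, "bob": 0}
    for _ in range(pairs):
        pole = random_pole(rng)
        ra = reading(pole, anne, cos_gamma)
        rb = reading(pole, bob, cos_gamma)
        if ra is not None:
            singles["anne"] += 1
        if rb is not None:
            singles["bob"] += 1
        if ra is not None and rb is not None:
            counts[ra + rb] += 1
    return counts, singles


def cap_overlap(phi, gamma):
    """Fraction of the sphere shared by two caps of half-angle gamma
    whose centres are an angle phi apart (assumed form of the SS proportion)."""
    theta = phi / 2.0
    if theta >= gamma:
        return 0.0
    first = math.acos(math.sin(theta) / math.sin(gamma))
    second = math.cos(gamma) * math.acos(math.tan(theta) / math.tan(gamma))
    return (first - second) / math.pi


if __name__ == "__main__":
    gamma = math.radians(60.0)  # illustrative width of the definite-reading cap
    pairs = 100_000
    for degrees in (0, 45, 90, 135, 180):
        counts, singles = simulate(math.radians(degrees), gamma, pairs)
        total = sum(counts.values()) / pairs
        print(f"{degrees:3d} deg  total coincidences = {total:.3f}  "
              f"singles = {singles['anne'] / pairs:.3f}, {singles['bob'] / pairs:.3f}")
```

With these assumptions the singles rates stay the same at every angle while the total coincidence rate is lower at 90° than at 0°, and the totals at 45° and 135° agree. This is the behaviour the main text relies on when it asks for the constancy check to include angles other than the Bell test ones.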