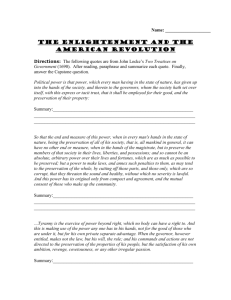

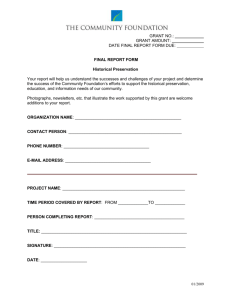

REPORT on Long Term Preservation

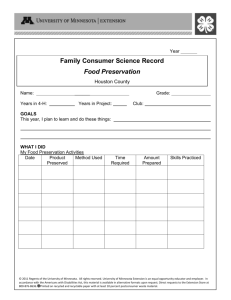

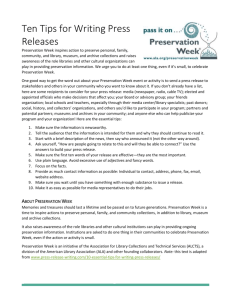
