Logic Argument of Research Article


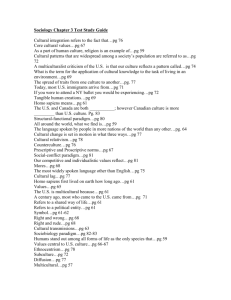

Chapter 3-4. Logic and Errors

Hume’s Refutation of Induction

Definition. Induction: Inference of a generalized conclusion from particular instances. [Merriam-Webster, 1999]

When asked what logical construct is used in medical research, some would claim it is induction. They would say that by the process of replicating studies, we establish the validity of our theories.

Researchers trained in Philosophy of Science, by contrast, are aware that the philosopher David Hume refuted the logic of induction in 1739. Lanes [1988, p.60] states

“How can we establish which exposures are causes of disease? This question has been asked in a more general form for many centuries. In 1739, Hume challenged the question’s premise:

We are never able, in a single instance, to discover any power or necessary connection, any quality which binds the effect to the cause...we only find that one does, in fact, follow the other.[2]

Hume reasoned that there is no logical way to establish an association as causal because causal associations possess no unique empirical quality. This contention conflicts with the goal of establishing causality that has guided medical science for the past century.[1,3] It also calls into question the scientific basis of the many causes of disease that have been discovered in pursuit of this goal. According to Hume, repeated observation only influences our belief that an association is causal. But, since replication carries no implication for validity (because errors may be repeated), our beliefs are without logical justification. The so-called logic of induction, which asserts that through replication we achieve confirmation, is not a logic at all.”

------------------------------------
1. Koch, R. Die aetiologie der Tuberculose. Mitteilungen aus dem Kaiserlichen Gesundheitsamte 1884;2:1.
2. Hume D. Treatise of Human Nature. London: John Noon, 1739; revised and reprinted, Selby-Bigge LA, ed. Oxford: Clarendon Press, 1985.
3. Hutchison GB. The epidemiologic method. In: Schottenfeld D, Fraumeni JF, eds. Cancer Epidemiology and Prevention. Philadelphia: W.B. Saunders Company, 1982:9.

_____________________
Source: Stoddard GJ. Biostatistics and Epidemiology Using Stata: A Course Manual [unpublished manuscript] University of Utah School of Medicine, 2011. http://www.ccts.utah.edu/biostats/?pageId=5385

Chapter 3-4 (revision 19 Aug 2011) p. 1

Popper’s Deductive Testing of Theories

Definition. Deduction: Inference in which the conclusion about particulars follows necessarily from general or universal premises. [Merriam-Webster, 1999]

In 1934, the philosopher Karl Popper published Logik der Forschung, which was translated into English in 1959 as The Logic of Scientific Discovery. This book [Popper, 1968, 2nd ed] played a significant role in popularizing deduction in scientific research.

Popper’s viewpoint was that science progresses by the refutation of theories, however those theories were formed. Using deductive logic and starting with a theory, research studies empirically test the theory. Once a theory is convincingly demonstrated false, refuted with empirical evidence by one or more research studies, a reformulated (and hopefully better) theory takes its place and is presented to researchers to be refuted anew. A currently accepted theory survives until refuted [Popper, 1968].

This deductive refutation of theories, which does not depend on induction at all, is still subject to the same criticism that Hume advanced against induction. That is, how do we know that the studies, however many, that we took as a refutation of a theory were not themselves subject to error?

Proof in Science

Occasionally you will catch a researcher stating “…the study proved that….” Because we can never be certain that we did not make an error, we can never logically claim to have proved anything. This is a principle understood in Philosophy of Science ever since Hume’s refutation of induction two and one-half centuries ago.
In research papers, then, we replace “proved” with the weaker statement “…the study demonstrated that….”

Disjunctive Syllogism

To apply the logical construct known as the disjunctive syllogism, one first creates an exhaustive (all possible) and disjoint (only one can happen at a time) list of explanations for some outcome. Then, after disproving all but one possible explanation, the one remaining explanation must be the true explanation for the outcome. (We are all familiar with this logic, because we use it on multiple-choice examinations in school.)

As with the other logical constructs, we can still make errors when applying the disjunctive syllogism (failing to list all explanations, for one thing).

Logical Argument of a Scientific Paper

In medical research, when we think of theories, we use a bit of induction and a bit more of deduction. At the individual study level, however, we utilize an “operational” version of the disjunctive syllogism (subject to error but good enough for research). It is the logical argument that is presented in scientific papers.

Elwood (1988, p.58) states, “If any association is shown within the study, it must be due to one of four mechanisms: causation, observation bias, confounding or chance.”

Diagrammatically, our possible explanations for the observed effect are:

[Diagram not reproduced: boxes for causation, bias, confounding, and chance as the candidate explanations of the observed effect.]

Since we cannot be sure of causation, we operationally call this an association. That is, our causal factor may simply be a surrogate for whatever cause is underlying what we observe, which takes us back to the confounding box.

Our goal is to eliminate all explanations but the one we are interested in:

[Diagram not reproduced: the same explanations, with all but the association eliminated.]

Having done this, we conclude the observed outcome (or study effect) must be due to the study intervention. Or more generally (applicable to all study designs), we demonstrated an association between the dependent and independent variable that apparently exists in the sampled population.
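The elimination argument described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not part of the course manual: the names `EXPLANATIONS`, `remaining_explanation`, and `ruled_out` are hypothetical, and the candidate list is simply Elwood's four mechanisms with causation reported operationally as association.

```python
from __future__ import annotations

# Elwood's four candidate explanations for an observed effect.
# "association" is the operational stand-in for causation (assumption of this sketch).
EXPLANATIONS = ("bias", "confounding", "chance", "association")


def remaining_explanation(ruled_out: set[str]) -> str | None:
    """Apply the disjunctive syllogism to the candidate list.

    Returns the single explanation left standing, or None when the
    argument cannot conclude yet (zero or several candidates remain).
    """
    unknown = ruled_out - set(EXPLANATIONS)
    if unknown:
        raise ValueError(f"not in the candidate list: {sorted(unknown)}")
    left = [e for e in EXPLANATIONS if e not in ruled_out]
    return left[0] if len(left) == 1 else None


# A paper that has addressed bias, confounding, and chance:
print(remaining_explanation({"bias", "confounding", "chance"}))  # association
# A paper that never addressed confounding cannot conclude:
print(remaining_explanation({"bias", "chance"}))  # None
```

Like the syllogism itself, the sketch is only as good as its list: an explanation that was never written into `EXPLANATIONS` can never be the one returned, which is exactly the error the text warns about.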
Having directly applied a logical construct (the disjunctive syllogism), by going through this process in our research and then describing it in our study report, we are making a “logical” argument. It is the logical argument specifically made in a scientific paper.

Organization of a Scientific Paper

In general, a scientific paper is organized into the following sections: Introduction, Methods, Results, And Discussion (hence, the acronym IMRAD). [Day, 1994]

In the Uniform Requirements for Manuscripts Submitted to Biomedical Journals: Writing and Editing for Biomedical Publication, which is used by all of the major medical journals, you find:

“The text of observational and experimental articles is usually (but not necessarily) divided into sections with the headings Introduction, Methods, Results, and Discussion. This so-called “IMRAD” structure is not simply an arbitrary publication format, but rather a direct reflection of the process of scientific discovery.”

Exercise. Match the two columns (draw lines between the items) to show how scientific papers make a disjunctive syllogism argument:

Logical Framework of Research     IMRAD Organization of a Scientific Paper
a) bias                           a) introduction
b) confounding                    b) methods
c) chance                         c) results
d) association                    d) discussion

In applying this logic, the research article authors eliminate all of the other possible explanations for the study’s observed effect, leaving the association as the only remaining possibility in the Discussion section.

This is the same gambit expert salespersons use. First they handle all of the customer’s objections to making the purchase. Then, with no reason left to say no, the only thing left for the customer to do when handed the pen is to sign the contract. (Hopkins, 1982)

How Good Are Researchers at Applying the Logical Framework of Research?
Regarding statistics and confounding, Zolman (1993, pp.3-4) states that

Critical reviews of papers in medical journals have consistently found that about 50% of published articles used incorrect statistical methods (see Altman, 1983; Godfrey, 1986; Glantz, 1987; Williamson, Goldschmidt, and Colton, 1986). Incorrect analyses can lead to invalid results and inappropriate conclusions. A conservative estimate is that about 25% of medical research is flawed because of incorrect conclusions drawn from confounded experimental designs and the misuse of statistical methods, and that the bias generally favors the treatment over the control (see Hofacker, 1983; Williamson, Goldschmidt, and Colton, 1986).

__________
Altman DG. (1983). Statistics in medical journals. Statistics in Medicine 1:59-71.
Godfrey K. (1986). Comparing the means of several groups. In J.C. Bailar, III, and F. Mosteller (eds.), Medical Use of Statistics, Boston MA, New England Journal of Medicine Books, pp.205-234.
Glantz SA. (1987). Primer of Biostatistics. 2nd ed. New York, McGraw-Hill, pp.6-9.
Hofacker CF. (1983). Abuse of statistical packages: The case of the general linear model. American Journal of Physiology, 245:R299-R302.
Williamson JW, Goldschmidt PG, Colton T. (1986). The quality of medical literature: an analysis of validation assessments. In J.C. Bailar, III, and F. Mosteller (eds.), Medical Use of Statistics, Boston MA, New England Journal of Medicine Books, pp.370-391.

Bias is also a problem. Altman (1994) reports that numerous studies of the medical literature (citations in footnote) have shown that all of the following mistakes are commonly made by researchers:

    Using the wrong techniques (either willfully or in ignorance)
    Using the right techniques wrongly
    Misinterpreting their results
    Reporting their results selectively
    Citing the literature selectively
    Drawing unjustified conclusions

“Why are errors so common?
Put simply, much poor research arises because researchers feel compelled for career reasons to carry out research that they are ill equipped to perform, and nobody stops them.”

_____________
Altman DG. (1983). Statistics in medical journals. Statistics in Medicine 1:59-71.
Pocock SJ, Hughes MD, Lee RJ. (1987). Statistical problems in the reporting of clinical trials. A survey of three medical journals. N Engl J Med 317:426-32.
Smith DG, Clemens J, Crede W, et al. (1987). Impact of multiple comparisons in randomized clinical trials. Am J Med 83:545-50.
Murray GD. (1988). The task of statistical referee. Br J Surg 75:664-7.
Gotzsche PC. (1989). Methodology and overt and hidden bias in reports of 196 double-blind trials of nonsteroidal anti-inflammatory drugs in rheumatoid arthritis. Controlled Clin Trials 10:31-59.
Williams HC, Seed P. (1993). Inadequate size of negative clinical trials in dermatology. Br J Dermatol 128:317-26.
Anderson B. (1990). Methodological errors in medical research. An incomplete catalogue. Oxford, Blackwell.

Recently, there has been a flurry of articles concerned with incorrect conclusions in published research. Much of the concern is about chance findings that arise when researchers mine their data for a significant p value. Because of this, statisticians and journal reviewers are becoming more insistent on pre-specified hypotheses and pre-specified statistical analysis plans.

Two recently published, easy to read, and interesting articles are:

1) Ioannidis (2005) states “It can be proven that most claimed research findings are false.”

2) Lehrer (2010) writes on the reproducibility of findings, “But now all sorts of well-established, multiply confirmed findings have started to look increasingly uncertain. It’s as if our facts were losing their truth: claims that have been enshrined in textbooks are suddenly unprovable.
This phenomenon doesn’t yet have an official name, but it’s occurring across a wide range of fields, from psychology to ecology. In the field of medicine, the phenomenon seems extremely widespread, affecting not only antipsychotics but also therapies ranging from cardiac stents to Vitamin E and antidepressants: Davis has a forthcoming analysis demonstrating that the efficacy of antidepressants has gone down as much as threefold in recent decades.”

Related articles include Halpern et al (2002), Ioannidis et al (2009), and De Long and Lang (1992).

Stoddard’s Aphorism

A little reflection would lead one to suspect that in addition to the 25% of medical journal articles reporting flawed results leading to incorrect conclusions simply from the incorrect use of statistics, there might be at least 25% more which are flawed and reach incorrect conclusions due to other errors such as bias. This follows from the realization that there are many more subtle ways to unwittingly conduct a study poorly than there are ways to use statistics incorrectly. One might assume, then, that at least half (25% + 25%) of the medical literature reports an incorrect conclusion.

Given the fallibility of logical constructs, and researcher errors in conducting studies, perhaps the best approach to evaluating knowledge is simply adopting probabilistic thinking as outlined in the following aphorism.

Stoddard’s Aphorism (perhaps more properly, the Watts-Johnson-Stoddard Aphorism)

Johnson (1998) comments,

We are socialized into a lack of critical thinking. For example, we are not encouraged to critically question the beliefs of the religion we are born into. We must break out of our socialization to adopt critical thinking in our professional lives. The first step to this is to grasp the difference between beliefs and faith.
Alan Watts explains “Belief…is the insistence that the truth is what one would ‘lief’ or wish it to be…Faith…is an unreserved opening of the mind to the truth, whatever it may turn out to be. Faith has no preconceptions; it is a plunge into the unknown. Belief clings, but faith lets go…faith is the essential virtue of science…”

To train students in critical thinking, I reworded the above explanation of Alan Watts from Johnson’s article into the following aphorism.

Aphorism for Critical Thinking

Beliefs are a practice of the intellectually immature. Have no beliefs. Instead, hold each explanation in your mind with its subjective probability, always allowing new explanations to enter and always allowing the probabilities to change. Faith, on the other hand, is a virtue.

--Gregory J. Stoddard

______________________
Johnson F. (1998). Beyond belief: a skeptic searches for an American faith. Harper’s Magazine, September:39-54.

In applying Stoddard’s aphorism, you do not need to actually assign a number for a subjective probability. It is enough to simply consider, “For now, this explanation seems more likely than that explanation.”

So what about something you really do not want to question, but want to just keep as a belief? For whatever that is, you can assign it the following subjective probability,

    P(my “whatever” is false) = 1/∞

That is, the probability that your “whatever” is false is the very smallest number possible. It is the closest you can get to zero, without actually being zero, where zero represents no chance it is false. Then, you never have to think about it again. The probability is so small that for all practical purposes it is zero, allowing you to basically consider it a belief.

However, if you assign it this probability, a wonderful thing happens. You have given yourself permission to question all things, so your mind is free to soar. You might even turn into a critical thinker.
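One way to make the aphorism concrete is with Bayes' rule, which is a technique brought in here for illustration and is not named in the text. The sketch below holds each explanation with a subjective probability, lets the probabilities change with evidence, and lets a new explanation enter. The function names, the likelihood values, and the use of 1e-9 as a stand-in for the text's 1/∞ are all assumptions of this example.

```python
from __future__ import annotations


def update(beliefs: dict[str, float], likelihoods: dict[str, float]) -> dict[str, float]:
    """Bayes' rule: posterior is proportional to prior times likelihood."""
    unnormalized = {h: p * likelihoods[h] for h, p in beliefs.items()}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("evidence is impossible under every explanation held")
    return {h: v / total for h, v in unnormalized.items()}


def add_explanation(beliefs: dict[str, float], name: str, prob: float) -> dict[str, float]:
    """Let a new explanation enter with probability `prob`, scaling the others down."""
    if not 0 <= prob < 1:
        raise ValueError("prob must be in [0, 1)")
    scaled = {h: p * (1 - prob) for h, p in beliefs.items()}
    scaled[name] = prob
    return scaled


# Hypothetical evidence that is a million times more likely if the idea is false.
EVIDENCE = {"true": 1e-6, "false": 1.0}

near_certain = {"true": 1 - 1e-9, "false": 1e-9}  # tiny but nonzero doubt
dogma = {"true": 1.0, "false": 0.0}               # a belief: no doubt at all

for _ in range(2):  # the same kind of evidence arrives twice
    near_certain = update(near_certain, EVIDENCE)
    dogma = update(dogma, EVIDENCE)

print(near_certain["false"] > 0.99)  # True
print(dogma["false"])                # 0.0
```

The contrast is the point of the passage: a probability of exactly zero can never be moved by any amount of evidence, while even a vanishingly small one leaves the mind free to change.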
More Philosophy of Science

Paradigm Eras

paradigm = (Kuhn, 1970, p. viii) universally recognized scientific achievements that for a time provide model problems and solutions to a community of practitioners.

paradigm = (Lincoln and Guba, 1985, p.15) A set of basic or metaphysical beliefs constituted into a system of ideas that “either give us some judgment about the nature of reality, or a reason why we must be content with knowing something less than the nature of reality, along with a method for taking hold of whatever can be known” (Reese, 1980, p.352)

“A paradigm is a world view, a general perspective, a way of breaking down the complexity of the real world. As such, paradigms are deeply embedded in the socialization of adherents and practitioners: paradigms tell them what is important, legitimate, and reasonable. Paradigms are also normative, telling the practitioner what to do without the necessity of long existential or epistemological consideration. But it is this aspect of paradigms that constitutes both their strength and their weakness--their strength in that it makes action possible, their weakness in that the very reason for action is hidden in the unquestioned assumptions of the paradigm.” (Patton, 1978, p.203)

Lincoln and Guba (1985, p.15) take the posture that inquiry, in both the physical and social sciences, has passed through a number of “paradigm eras”, periods in which certain sets of basic beliefs guided inquiry in quite different ways. These periods are the prepositivist, positivist, and postpositivist, each period having its own unique set of “basic beliefs” or metaphysical principles in which its adherents believed and upon which they acted.

The Prepositivist Era

This era lasted 2000 years, from the time of Aristotle (384-322 B.C.) to that of (but not including) David Hume (1711-1776).
Science was essentially at a standstill during this period because Aristotle (and many others) took the stance of “passive observer”. What there was in “physics,” Aristotle argued, occurred “naturally”. Attempts by humans to learn about nature were interventionist and unnatural, and so distorted what was learned.

“Aristotle believed in natural motion. Humans’ interference produced discontinuous and unnatural movements. And, to Aristotle, such movements were not God’s way. For example, Aristotle envisioned the idea of “force.” The heavy cart being drawn along the road by the horse is an unnatural movement. That is why the horse is struggling so. That is why the motion is so jerky and uneven. The horse must exert a “force” to get the cart moving. The horse must continue to exert a “force” to keep the cart moving. As soon as the horse stops pulling, it stops exerting a “force” on the cart. Consequently, the cart comes to its natural place, which is at rest on the road.” (Wolf, 1981, p.22)

Aristotle contributed two laws to logic:

1) Law of Contradiction: no proposition can be both true and false at the same time

2) Law of the Excluded Middle: every proposition must be either true or false.

When applied to passive observations, these two laws seemed sufficient to generate the entire gamut of needed scientific understandings. There was no need for science to experiment or try ideas, as this would distort the truths in nature.

The Positivist Era

When scientists began to reach out and touch, to try ideas and see if they worked, they became active observers and science passed into the positivist period.

This began to happen with three astronomers (Copernicus, 1473-1543; Galileo, 1564-1642; Kepler, 1571-1630) and a logician/mathematician (Descartes, 1596-1650), all of whom came from essentially noninterventionist fields. Their work did little to challenge the prevailing paradigm of inquiry, but it did pave the way for that challenge.
Positivism may be defined as “a family of philosophies characterized by an extremely positive evaluation of science and scientific method” (Reese, 1980, p.450).

The Postpositivist Era

Although biomedicine (scientific medicine), including epidemiology and pharmaceutical science, continues to operate in the positivist paradigm, many quantum physicists, biologists, and social scientists have entered the third paradigm era (postpositivist era).

A prominent paradigm of the postpositivist era is the Naturalistic Paradigm. A contrast of the two paradigms can be made by considering their axioms (axiom: statement accepted as true to form a basis for argument or inference).

Table 1.1 Contrasting Positivist and Naturalist Axioms
======================================================================================================
Axioms About                Positivist Paradigm                   Naturalist Paradigm
------------------------------------------------------------------------------------------------------
The nature of reality       Reality is single, tangible,          Realities are multiple,
                            and fragmentable.                     constructed, and holistic.

The relationship of knower  Knower and known are independent,     Knower and known are interactive,
to the known                a dualism.                            inseparable.

The possibility of          Time- and context-free                Only time- and context-bound
generalization              generalizations (nomothetic           working hypotheses (idiographic
                            statements) are possible.             statements) are possible.

The possibility of causal   There are real causes, temporally     All entities are in a state of
linkages                    precedent to or simultaneous with     mutual simultaneous shaping, so that
                            their effects.                        it is impossible to distinguish
                                                                  causes from effects.

The role of values          Inquiry is value-free.                Inquiry is value-bound.
------------------------------------------------------------------------------------------------------
Source: table in Lincoln and Guba, 1985, p. 37

Those who have studied Eastern Philosophy will recognize its influence on the Naturalist Paradigm.
Paradigm Transitions (Scientific Revolutions)

Although the transition is occurring in biomedicine, it will be a difficult change. Biomedicine still treats disease in a single body system, without considering the whole body, mind, emotions, or social environment (axiom 1). One of the primary tenets of epidemiology, if not the foremost, is that a cause precedes the disease.

At some point, biomedicine will have to follow quantum mechanics’ lead and enter the postpositivist era. Quantum mechanics has proposed the non-reality of particles, replacing them with electromagnetic field interactions. That is, matter is nothing more than energy complexes, which interact in convoluted and intricate ways (Lincoln and Guba, 1985, p.147).

Fritjof Capra explains, “Quantum theory has abolished the notion of fundamentally separated objects, has introduced the concept of participator to replace that of the observer, and has come to see the universe as an interconnected web of relations whose parts are only defined through their connections to the whole.” (Wilber, 1985, p.40)

Lincoln and Guba (1985, p.16) give this caveat: “Since all theories and other leading ideas of scientific history have, so far, been shown to be false and unacceptable, so surely will any theories that we expound today.”

It is difficult for a fish to understand the limitations of water when its whole life has been spent in water, just as it is difficult for a scientist trained in the biomedical model to understand how that model could be based on an incorrect paradigm. Perhaps we can more easily give up our “reluctant mind” if we take a lesson from the past (our predecessors’ transition from Prepositivism to Positivism).

“Harre’s (1981) reference to the ‘Paduan professors who refused to look through Galileo’s telescope’ is a famous instance of what might be called the ‘reluctant mind,’ the mind that refuses to accept the need for a new paradigm....
Galileo, it will be recalled, had gotten himself into trouble with the Church (it was only in 1983 that the Church admitted having committed a major blunder in Galileo’s case; Time, May 23, 1983, p.58) because of his publications outlining a heliocentric universe, a formulation clearly at odds with the Church’s position that the universe was geocentric. Ordered to an inquisition in 1633, he was censured and actually condemned to death, although the sentence was suspended in view of his abject recantation. But prior to the official ‘hearing,’ Galileo was visited in Padua by a group of inquisitors, composed (to the eternal shame of all academics) mainly of professors from the nearby university. According to the story, which may well be apocryphal, Galileo invited his visitors to look through his telescope at the moon and see for themselves the mountains and the craters that the marvelous instrument revealed for the first time. But his inquisitors refused to do so, arguing that whatever might be visible through the telescope would be a product not of nature but of the instrument. How consistent this position is with Aristotelian ‘naturalism’! Galileo’s active intervention in building the telescope and viewing the moon through it were, to the inquisitors’ prepositivist minds, an exact illustration of how the active observer could distort the ‘real’ or ‘natural’ order. Their posture was perhaps not so much a ‘glorying in ignorance,’ as Harre claims, but adherence to the best ‘scientific’ principles of the time.” (Lincoln and Guba, 1985, p.45)

Blood Letting: To Summarize

The practice of bloodletting as a tried and true therapy lasted for 1,000 years. It seems surprising that bloodletting could last so long as a standard of practice.
It is just another example of applying the scientific paradigm of the Prepositivist era; the physicians practicing that therapy were no different from the Paduan professors in the Galileo story above. Bloodletting was simply not put to the test of questioning with empiric data during that era.

This brings us back to beliefs (see Stoddard’s aphorism above). Wouldn’t you say that holding on to a belief is behaving consistently with Prepositivism?

References

Altman DG. (1994). The scandal of poor medical research. BMJ 308:283-284.
Day RA. (1994). How to Write and Publish a Scientific Paper. 4th ed. Phoenix AZ, The Oryx Press, pp.4-7.
De Long JB, Lang K. (1992). Are all economic hypotheses false? Journal of Political Economy 100(6):1257-1272.
Elwood JM. (1988). Causal Relationships in Medicine: a Practical System for Critical Appraisal. New York, Oxford University Press.
Halpern SD, Karlawish JHT, Berlin JA. (2002). The continuing unethical conduct of underpowered clinical trials. JAMA 288:358-362.
Harre R. (1981). The positivist-empiricist approach and its alternative. In Reason P and Rowan J (eds), Human Inquiry: a Sourcebook of New Paradigm Research. New York, John Wiley.
Hopkins T. (1982). How to Master the Art of Selling. 2nd ed. New York, Warner Books.
Ioannidis JPA. (2005). Why most published research findings are false. PLoS Med 2(8):e124, pp.696-701.
Ioannidis JPA, Allison DB, Ball CA, et al. (2009). Repeatability of published microarray gene expression analyses. Nature Genetics 41(2):149-155.
Kuhn TS. (1970). The Structure of Scientific Revolutions. 2nd ed, Enlarged. Chicago, The University of Chicago Press.
Lanes SF. (1988). The logic of causal inference in medicine. In Rothman KJ (ed), Causal Inference. Chestnut Hill, MA, Epidemiology Resources, Inc.
Last JM. (1995). A Dictionary of Epidemiology. New York, Oxford University Press.
Lehrer J. (2010). The truth wears off. The New Yorker, December 13:52-57.
Lincoln YS, Guba EG. (1985). Naturalistic Inquiry. Newbury Park, Sage Publications.
Merriam-Webster’s Collegiate Dictionary, 10th ed. (1999). Springfield MA, Merriam-Webster, Inc.
Patton MQ. (1978). Utilization-Focused Evaluation. Beverly Hills, Calif, Sage Publications.
Popper KR. (1968). The Logic of Scientific Discovery. New York, Harper and Row, pp.32-34.
Reese WL. (1980). Dictionary of Philosophy and Religion. Atlantic Highlands, NJ, Humanities.
Wilber K. (1985). No Boundary. Boston, Shambhala Publications, Inc.
Wolf FA. (1981). Taking the Quantum Leap. San Francisco, Harper & Row.
Zolman JF. (1993). Biostatistics: Experimental Design and Statistical Inference. New York, Oxford University Press.