In-Memory Computing – A Solution to the Von Neumann Bottleneck


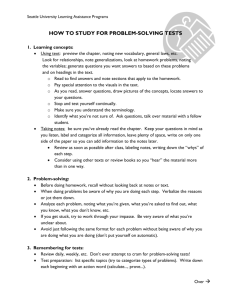

Sylvain EUDIER, MSCS Candidate, Union College
Graduate Seminar | In-Memory Computing: A Solution to the Von Neumann Bottleneck
Winter 2004

I. Introduction to a new architecture:

1. The von Neumann architecture:

Today's computers are all based on the von Neumann architecture, and this concept is essential to the rest of this paper. A computer, as we see it, is a von Neumann machine made of three parts:
- a central processing unit, also called the CPU,
- a store, usually the RAM,
- a connecting tube that transmits a single word of data (or an address) between the store and the CPU.

J. W. Backus proposed to call this tube the von Neumann bottleneck: "The task of a program is to change the store in a major way; when one considers that this task must be accomplished entirely by pumping single words back and forth through the von Neumann bottleneck, the reason for its name becomes clear."

We can naturally wonder what influence the von Neumann bottleneck has on today's architectures. The following paragraphs shed light on this.

2. A little bit of history:

For many years, processor performance has doubled every 18 to 24 months, closely following Moore's law (strictly, the observation that the number of transistors on a chip doubles roughly every 18 to 24 months, commonly paraphrased as processing power doubling every 18 months; CPUs have followed this trend closely for many years). This increase in performance has been possible partly because die sizes increased as well. As a consequence, a larger die increases the maximal distance, counted in clock cycles, between two points on the processor. To solve this problem, pipelining was invented and is now widely used.
However, this solution has drawbacks: it increases some latencies, such as cache access; it penalizes branch prediction (when a prediction turns out to be wrong, all the instructions already in the pipeline must be flushed); and it obviously complicates the processor design.

A second major problem in today's architectures is the growing gap between processor speeds and memory speeds. While processor performance has increased by about 66% a year, memory performance (essentially bandwidth) has increased by only about 7% a year. This gap is a serious problem for data-intensive applications.

Figure 1: Evolution of the performance gap between memory and processors from 1980 to today

3. Some solutions:

Many methods have been proposed to bridge these growing gaps.

On the processor side:
- Caching: caching is the most widely used technique to reduce this gap. The idea is to keep data that is likely to be reused (typically recently accessed locations and their neighbors) in a very fast, small memory with higher bandwidth, so that the processor can access it without going to the main memory, which is much slower but also much larger.
- Prefetching: a form of prediction in which data is fetched into the cache before it has been requested.
- Multithreading: an attempt to hide latencies by working on several threads at the same time. If a thread needs data before it can continue, the processor pauses it and switches to another thread whose data is available. Intel recently refined this idea with its Hyper-Threading technology. Because the Pentium 4 has a very long pipeline, it was hard to keep the CPU 100% busy, even with processing-intensive applications. Hyper-Threading presents two logical processors so that they can share the work and balance CPU utilization.
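The caching idea described above can be illustrated with a toy model. The C++ sketch below is a minimal direct-mapped cache with assumed parameters (the number of lines and the line size in words are hypothetical and not taken from any real processor). It only counts hits and misses, which is enough to show why sequential access benefits from a cache while two addresses that map to the same line keep evicting each other.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Minimal direct-mapped cache model with illustrative parameters:
// `lines` cache lines, each holding `line_words` consecutive words.
// Only hits and misses are tracked; no data is stored.
class DirectMappedCache {
public:
    DirectMappedCache(std::size_t lines, std::size_t line_words)
        : line_words_(line_words), tags_(lines, ~std::uint64_t{0}) {  // all lines invalid
        assert(lines > 0 && line_words > 0);
    }

    // Returns true on a hit; on a miss the whole line is (notionally)
    // fetched from main memory and replaces whatever was there.
    bool access(std::uint64_t word_addr) {
        std::uint64_t block = word_addr / line_words_;                  // memory block number
        std::size_t index = static_cast<std::size_t>(block % tags_.size());
        if (tags_[index] == block) {
            ++hits_;
            return true;
        }
        tags_[index] = block;  // evict the previous block and refill
        ++misses_;
        return false;
    }

    std::size_t hits() const { return hits_; }
    std::size_t misses() const { return misses_; }

private:
    std::size_t line_words_;
    std::vector<std::uint64_t> tags_;  // full block number used as the tag
    std::size_t hits_ = 0;
    std::size_t misses_ = 0;
};
```

With 8 lines of 4 words, reading 64 consecutive words costs 16 misses (one per line fetched) and 48 hits, whereas alternating between word 0 and word 32, which map to the same line, misses on every access.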
Despite all these improvements, some of them are not necessarily useful for data-intensive applications. Memory designers have also tried to narrow these gaps.

On the memory side:
- Access times: this was the first step for improvement. When a processor wants to access a word in memory, it sends an address and gets back a word of data. However, because of the way most programs are written, a program that accesses data at address n is likely to access the data at n+1 next. Memory specifications are therefore based on access times, usually four numbers giving how many cycles are needed to access the first, second, third and fourth words (for example 3-1-1-1).
- DDR-SDRAM: DDR-SDRAM (Double Data Rate SDRAM) is an evolution of the classic SDRAM (Synchronous Dynamic Random Access Memory) in the way data is accessed. With DDR memory, data is transferred on both the rising and falling edges of the clock, which doubles the effective bandwidth.
- RAMBUS: a different interface based on a simplified bus that carries data at higher frequencies. Using two independent channels doubles the bandwidth.

To summarize the impact of all these performance disparities and architectural differences, let's have a look at the following chart.

Figure 2: Memory bandwidth in a computer based on 256 MB of 16 MB, 50 ns DRAM chips and a 100 MHz CPU

We clearly see that, despite all these improvements, the bottleneck is still present in this architecture. Even though Rambus technology offers a wide bandwidth, the bandwidth available at the sense amplifiers inside the memory is almost a thousand times larger. Can we avoid the bottleneck and take advantage of this enormous internal bandwidth?

4. A new architecture:

Instead of focusing on the processor-centric architecture, researchers proposed a few years ago to switch to a memory-centric architecture. This followed the observation by D. Elliott² that memory chips have a huge internal bandwidth and that the memory pins are what degrades it. The main idea was then to merge the storage and the processing elements on a single chip, creating memories with processing capacity. Several names have been used to describe this; the most common are intelligent RAM (IRAM), processor in memory (PIM) and smart memories. As we will see later, the name is related to the application and design of these chips: main processor of the system, special-purpose unit, and so on. To increase the amount of memory, current smart memories often use SDRAM instead of SRAM.

² Duncan Elliott started this work at the University of Toronto and continues his research at the University of Alberta, Canada.

II. Different architectures

1. The IRAM architecture:

The IRAM acronym stands for Intelligent RAM. It was developed at the University of California, Berkeley. IRAM was designed as a stand-alone chip, so that the memory can be the only processing element in devices such as PDAs or cell phones. A prototype was taped out in October 2002 by IBM, with a total of 72 chips on the wafer. I sent an email to the testing group, and the only reply I received was that testing is still in progress. However, some estimates give an idea of the processing power available from these memories: for a 200 MHz memory, the expected processing power is 200 MHz × 2 ALUs × 8 data per clock cycle = 3.2 Gops for 32-bit data, which gives 1.6 GFLOPS on 32-bit data.
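The IRAM estimate above is a straightforward product. The worked version below reads "8 data per clock cycle" as eight 32-bit operations per ALU per cycle, an interpretation (assumed here) that matches the eight 32-bit units a vector unit can be split into (Figure 4). It reproduces only the 3.2 Gops figure, since the text does not detail how the 1.6 GFLOPS value is derived.

```latex
\[
P_{\text{peak}}
  = \underbrace{200\times 10^{6}}_{\text{cycles/s}}
  \times \underbrace{2}_{\text{ALUs}}
  \times \underbrace{8}_{\text{32-bit ops per ALU per cycle}}
  = 3.2\times 10^{9}\ \text{ops/s}
  = 3.2\ \text{Gops}
\]
```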
Figure 3: The IRAM design

Figure 4: The IRAM block diagram

This block diagram shows the two ALUs of the IRAM and the vector architecture used for the processors. The vector functional units can be partitioned into several smaller units, depending on the precision required. For example, a vector unit can be partitioned into four 64-bit units or eight 32-bit units.

2. The RAW architecture:

The RAW architecture was designed at MIT. Here the approach is different, because the processing power relies on a mesh topology: several processors are connected together by networks. The aim is rather a specific parallel-computing memory with high scalability. A typical tile contains a RISC (Reduced Instruction Set Computer) processor, 128 KB of SRAM, an FPU (Floating Point Unit) and a communication processor.

Figure 5: The RAW design

The tiles communicate through two kinds of networks:
- 2 static networks: communication on these networks is performed by a switch processor in each tile. This processor provides the tile processor with a throughput of one word per cycle, with an additional latency of three cycles between nearest neighbors.
- 2 dynamic networks: when these networks are used, the data is sent to another tile in a packet, with a header and data (as in a conventional network).

The memory is located at the periphery of the mesh and can be accessed through either kind of network. The expected performance of this design, for a memory running at 300 MHz, is about 3.2 GFLOPS for 32-bit data.

3. The CRAM architecture:

The CRAM (Computational RAM) architecture was designed at the University of Alberta by Professor Elliott, and the work continued at Carleton University in Ottawa. It is probably the oldest of these designs: four prototypes have been built since 1996, and a new one is currently under design. For this architecture, the plan was to offer massively parallel processing power at low cost, with the highest available bandwidth. The PEs (Processing Elements) are therefore implemented directly at the sense amplifiers and are very simple elements (1-bit serial) designed to perform basic operations. This makes CRAM a multipurpose, highly parallel processing memory.

Figure 6: The CRAM design

All the PEs can communicate with each other through a left/right shift register. The next section describes how CRAM performs against current computers and in which applications the design shows its power.

III. The CRAM Architecture

In this part we focus on the CRAM architecture for two main reasons. First, it is one of the most advanced projects, with four working prototypes, which supports the validity of the performance results. Second, CRAM is designed primarily for multipurpose applications and is therefore more likely to be widely used.

1. Applications

CRAM is very efficient for parallel computation, especially when the algorithms are parallel reducible: the greater the degree of parallelism of a computation, the better. Because of the way the PEs are combined with the memory, a bigger computation does not necessarily take more time: if the problem fits in the memory, it is simply computed by more PEs. As a consequence, more memory means more available processing power.
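The claim that a larger problem is simply spread over more PEs can be illustrated with a toy SIMD model. The C++ sketch below is a simplification that assumes one PE per memory column and counts one step per broadcast instruction; the class and function names are hypothetical and not part of any CRAM toolkit. Every broadcast updates all PEs at once, so the step count depends on the program, not on how many elements are stored.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Toy model of a CRAM-like memory: one PE per column, each holding one value.
// A "step" is one instruction broadcast to every PE at the same time.
class SimdMemory {
public:
    explicit SimdMemory(std::vector<std::uint8_t> columns) : cols_(std::move(columns)) {
        assert(!cols_.empty());
    }

    // Broadcast one operation to all PEs. The host-side loop only simulates
    // what the hardware does in parallel, so it counts as a single step.
    void broadcast(const std::function<std::uint8_t(std::uint8_t)>& op) {
        for (auto& v : cols_) v = op(v);
        ++steps_;
    }

    std::size_t steps() const { return steps_; }
    const std::vector<std::uint8_t>& data() const { return cols_; }

private:
    std::vector<std::uint8_t> cols_;
    std::size_t steps_ = 0;
};

// A small "program": three broadcast instructions, whatever the data size.
void run_program(SimdMemory& mem) {
    mem.broadcast([](std::uint8_t v) { return static_cast<std::uint8_t>(v + 1); });
    mem.broadcast([](std::uint8_t v) { return static_cast<std::uint8_t>(v * 2); });
    mem.broadcast([](std::uint8_t v) { return static_cast<std::uint8_t>(v - 1); });
}
```

Both an 8-column and a 65,536-column memory finish this program in 3 steps. On real hardware the time per step would also depend on the operand width, since the PEs are bit-serial.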
However, even when a problem does not lend itself to parallel computing, placing the PEs directly at the sense amplifiers still provides a very high bandwidth, which offsets part of the performance loss.

To get an idea of how this PIM performs, we will compare results from different applications on different architectures. The tests and applications cover three fields: image processing, database searches and multimedia compression. These tests are interesting for three reasons:
- the different fields they represent demonstrate the general-purpose nature of CRAM,
- they use different computation models and have different complexities,
- they are based on practical problems.

Application details:

Image processing: I chose two tests for their different purposes and computation models.
- Brightness adjustment is the typical parallel-reducible algorithm, perfect for CRAM computation. The image is placed in the memory and all the PEs work together on each row of the image. This is pure CRAM computation, thanks to the parallel design.
- The average filter is a very different algorithm because it requires the PEs to communicate with their neighbors. It computes the value of a center pixel by averaging the values surrounding it. Thus, even though the PEs have parallel work to do, they must also pass their results to their neighbors (the computation is by nature done over 3 columns and uses the shift register).

Database searches: here we search, for example, for the maximum value in a randomly generated list of numbers. This test is interesting because both the conventional computer and the CRAM have to go through all the elements to decide which one is the largest: the search takes linear time on both.
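One common way to find a maximum on bit-serial SIMD hardware is to scan the bits from most to least significant while each PE keeps a "still a candidate" flag. The source does not describe the exact CRAM routine, so this is an illustrative sketch under assumptions: one value per PE, values that fit in `bits` bits, and a global "any PE responds" (wired-OR) line, which is assumed here rather than taken from the CRAM description. Every element is still examined, as in the sequential scan, but all PEs examine their bit at the same time, so the number of steps equals the word width.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Emulates a bit-serial SIMD maximum search: one value per PE, `bits` wide.
// Assumption: values fit in `bits` bits and the PE array has a global
// wired-OR line telling the controller whether any PE answered "1".
// Returns the maximum; `steps` receives the number of bit-serial steps.
std::uint32_t simd_max(const std::vector<std::uint32_t>& pe_values, unsigned bits,
                       std::size_t& steps) {
    assert(!pe_values.empty() && bits >= 1 && bits <= 32);
    std::vector<bool> candidate(pe_values.size(), true);  // one flag per PE
    std::uint32_t result = 0;
    steps = 0;
    for (int bit = static_cast<int>(bits) - 1; bit >= 0; --bit) {
        // All PEs test the same bit position; the wired-OR combines the answers.
        bool any_one = false;
        for (std::size_t pe = 0; pe < pe_values.size(); ++pe)
            if (candidate[pe] && ((pe_values[pe] >> bit) & 1u)) any_one = true;
        if (any_one) {
            result |= (1u << bit);
            // Candidates with a 0 in this position cannot be the maximum.
            for (std::size_t pe = 0; pe < pe_values.size(); ++pe)
                if (((pe_values[pe] >> bit) & 1u) == 0) candidate[pe] = false;
        }
        ++steps;
    }
    return result;
}
```

For the values {3, 200, 17, 199} stored as 8-bit numbers, the search returns 200 after 8 steps, and it would still take 8 steps with thousands of PEs, as long as each value sits in its own PE.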
Multimedia compression: this algorithm has to determine which parts of a movie actually move between two images. It therefore involves a lot of communication among the PEs, because of the image processing. However, the computation also contains a lot of redundant work, since it operates on blocks of pixels, which gives it good parallelism properties. This test shows how communication among the PEs affects performance when other advantages come into play.

2. Performance

a. Preliminary observations

Before looking at the tests, let's examine the processing complexity of basic operations on the CRAM.

Figure 7: Complexity of basic operations in CRAM

Because the PEs are 1-bit serial processing units, addition is performed in time linear in the number of bits, as are logical operations. Multiplication and division, however, have quadratic complexity, so their theoretical performance is reduced by a quadratic factor.

b. Test configurations

The tests were run on three different platforms:
- a Pentium 133 MHz with 32 MB of RAM,
- a Sun SPARCstation 167 MHz with 64 MB of RAM,
- 32 MB of CRAM at 200 MHz with 64K PEs on a Pentium 133 MHz (simulated).

The CRAM was simulated because no chips were available for the tests. Nevertheless, the simulated results are close to reality, as some comparisons with a real chip confirmed.

c. Basic operations test

Before the application tests, let's look at how the CRAM performs on basic operations compared to these computers:

Figure 8: Basic operations comparison

These figures clearly show that the CRAM dominates the other machines, with speedups ranging from 30 to 8,500 times.
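The linear and quadratic costs listed in Figure 7 follow from the 1-bit serial PEs. The C++ sketch below emulates a single bit-serial PE under a simplified, assumed cost model (one cycle per bit-level full-adder step, memory reads and writes ignored), so its counts are illustrative rather than CRAM's actual cycle counts. Addition walks the b bits once with a carry flag; shift-and-add multiplication performs one b-bit addition per multiplier bit, giving b and b² cycles respectively. As in a SIMD machine, the addition is executed even when the multiplier bit is 0, because all PEs follow the same instruction stream.

```cpp
#include <cassert>
#include <cstdint>

// Cycle counter for a hypothetical 1-bit serial PE: one cycle per
// bit-level full-adder step (memory access costs are ignored).
struct BitSerialPE {
    std::uint64_t cycles = 0;

    static std::uint32_t mask(unsigned b) {
        return b == 32 ? 0xFFFFFFFFu : ((1u << b) - 1u);
    }

    // b-bit addition, least significant bit first, one bit per cycle.
    std::uint32_t add(std::uint32_t x, std::uint32_t y, unsigned b) {
        assert(b >= 1 && b <= 32);
        std::uint32_t sum = 0, carry = 0;
        for (unsigned i = 0; i < b; ++i) {
            std::uint32_t xi = (x >> i) & 1u, yi = (y >> i) & 1u;
            sum |= (xi ^ yi ^ carry) << i;            // full-adder sum bit
            carry = (xi & yi) | (carry & (xi ^ yi));  // full-adder carry out
            ++cycles;
        }
        return sum;  // result modulo 2^b (the final carry is dropped)
    }

    // b-bit shift-and-add multiplication: one b-bit addition per multiplier bit.
    std::uint32_t mul(std::uint32_t x, std::uint32_t y, unsigned b) {
        assert(b >= 1 && b <= 32);
        std::uint32_t acc = 0;
        for (unsigned i = 0; i < b; ++i) {
            // Partial product x * 2^i truncated to b bits, or 0 if bit i of y is clear.
            std::uint32_t partial = ((y >> i) & 1u) ? ((x << i) & mask(b)) : 0u;
            acc = add(acc, partial, b);  // always executed: b cycles per iteration
        }
        return acc;
    }
};
```

Under this model, going from 32-bit to 8-bit operands cuts an addition from 32 to 8 cycles (4×) and a multiplication from 1,024 to 64 cycles (16×), which is why the choice of data width matters so much on a bit-serial memory.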
The basic-operation results of Figure 8, however, are not very meaningful for everyday applications, even if they suggest good performance. Another fact to notice is that switching from 32-bit to 8-bit data has a linear impact on CRAM performance, whereas the execution time on the PC and the workstation does not even halve. As a consequence, programmers working with CRAM must be careful about the data types they use. In C or Java, the usual integer type is much wider than most programs need, because this precision costs little on a PC.

d. Image processing test

Figure 9: Low-level image processing performance

The low-level image processing test gives some very interesting results. First, as expected, the speedup of the CRAM over the two computers is very different for the two processes. Brightness adjustment is intrinsically suited to parallel systems, hence the 800-fold speedup. In comparison, the average filter, which requires communication between the PEs, lowers the performance by a factor of 8.

Second, note the difference between "CRAM without overhead" and "CRAM with overhead". To evaluate performance, the tests were simulated as if the CRAM were used either as the main memory (without overhead) or as an extension card (with overhead). If the CRAM is not the main memory, the data has to be transferred from the host to the CRAM, with all the overhead this implies. However, for the kind of processing applied here, i.e. low-level image processing, the result with overhead matters little in real use: this kind of filtering is usually the first pass of a multi-filter process, so the overhead is shared among several operations.
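The neighbor communication that slows down the average filter can be sketched as follows. This C++ sketch assumes one PE per pixel column of a single image row and a shift register that moves every PE's value one position left or right in one step. Edge PEs reuse their own value, a boundary choice made here for illustration that the source does not specify. Only a horizontal 3-pixel average is shown; the function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Row = std::vector<std::uint8_t>;  // one pixel per PE (one column each)

// One shift step: every PE receives its right neighbour's value
// (the rightmost PE keeps its own value).
Row shift_from_right(const Row& r) {
    Row out(r);
    for (std::size_t i = 0; i + 1 < r.size(); ++i) out[i] = r[i + 1];
    return out;
}

// One shift step: every PE receives its left neighbour's value
// (the leftmost PE keeps its own value).
Row shift_from_left(const Row& r) {
    Row out(r);
    for (std::size_t i = 1; i < r.size(); ++i) out[i] = r[i - 1];
    return out;
}

// Horizontal 3-pixel average: two communication steps, then the same
// add-and-divide executed by every PE on its own column.
Row average3(const Row& row) {
    assert(!row.empty());
    Row left = shift_from_left(row);    // communication step 1
    Row right = shift_from_right(row);  // communication step 2
    Row out(row.size());
    for (std::size_t i = 0; i < row.size(); ++i)
        out[i] = static_cast<std::uint8_t>((left[i] + row[i] + right[i]) / 3);
    return out;
}
```

For the row {0, 3, 6, 9} this produces {1, 3, 6, 8}. Brightness adjustment needs neither shift step, which is consistent with the lower speedup the source reports for the average filter.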
If the CRAM is used as an extension card, however, the figures show that brightness adjustment suffers much more from the overhead than average filtering: brightness is processed so quickly that the transfer time becomes very close to the processing time.

e. Database searches test:

Figure 10: Database search performance

The database searches give another picture of the CRAM's capabilities. In this problem, the search must be applied to the whole list of numbers to find the extreme values. Since the list is randomly generated, the computation is linear in any case, and the CRAM behaves like the conventional computers, except for a 200-fold speedup in favor of the CRAM. Here, the CRAM is simply showing its huge bandwidth.

f. Multimedia compression test:

Figure 11: Multimedia performance

The multimedia test produces results between those of the two previous tests. It takes advantage both of the parallel nature of motion estimation and of the bandwidth inside the CRAM. Without overhead, the CRAM offers a gain of 1500% over the conventional processor-based machines. This is mainly due to the redundant computation inherent in the problem. Even with overhead, performance is still good, because motion estimation is applied to blocks of pixels, which reduces the relative overhead.

g. Overall performance

Summarizing the results of all these applications, we see a certain consistency. The CRAM performs best on simple, massively parallel computation, but it can also perform at a high level when it relies only on its memory bandwidth.
We can conclude from these figures that processing in memory is very efficient at equivalent clock frequencies: it always achieves a significant speedup over processor-centered machines, and sometimes outperforms them by a very large factor in its favorite field, memory-intensive and parallel computation.

3. Implications

a. Software design

The CRAM requires a new way of writing programs and new interfaces to work with current languages. The first change is obviously that everything is performed in parallel, so the programmer must be given CRAM-specific parallel functions. Program design for CRAM has three levels, from high to low: algorithm, high-level language (C++) and low-level language (assembly). Let's see how a program is created for a CRAM-based architecture.

i) Algorithm design

Figure 12: Pseudo code for brightness adjustment

This is the first step in designing a program for a CRAM architecture: the program has to exploit the parallel characteristics of the problem as much as possible. For brightness adjustment, the loop is simplified: instead of looping over all the elements of the image, we compute the new brightness values in parallel. This can yield much clearer code, because it is close to an English sentence: "Apply this brightness value to all the pixels of this image" corresponds to "On every pixel, do the computation in parallel".

ii) C++ code development

Figure 13: C++ code for brightness adjustment

Libraries have been developed for C/C++ to write programs for the CRAM architecture and for parallel patterns in general. For example, a parallel if statement, 'cif', performs a comparison in parallel, and parallel variable types such as 'cint' are provided.
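To give a feel for this programming style without the actual CRAM library, whose API is not reproduced in this paper, the sketch below emulates a parallel integer type and a parallel if in plain C++. The names `cint` and `cif` follow the text, but their definitions here are hypothetical: `cint` holds one value per PE, and `cif` evaluates a condition in every PE and applies an assignment only where it holds, the way SIMD machines use an activity mask. The example adjusts brightness and clamps the result to the 0 to 255 pixel range.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical emulation of a CRAM parallel integer: one int per PE.
struct cint {
    std::vector<int> v;
    explicit cint(std::vector<int> values) : v(std::move(values)) {}
};

// Element-wise addition of a scalar broadcast by the host (all PEs at once).
cint operator+(const cint& a, int s) {
    cint r = a;
    for (int& x : r.v) x += s;
    return r;
}

// Hypothetical parallel if: every PE evaluates `cond`; only the PEs where it
// is true (the active set) execute `then_op`, the others keep their value.
void cif(cint& target, const std::function<bool(int)>& cond,
         const std::function<int(int)>& then_op) {
    for (int& x : target.v)
        if (cond(x)) x = then_op(x);
}

// Brightness adjustment in the data-parallel style: no explicit pixel loop here.
cint adjust_brightness(const cint& image, int delta) {
    assert(!image.v.empty());
    cint out = image + delta;                                          // every pixel at once
    cif(out, [](int p) { return p > 255; }, [](int) { return 255; });  // clamp high
    cif(out, [](int p) { return p < 0; }, [](int) { return 0; });      // clamp low
    return out;
}
```

The loops inside `operator+` and `cif` stand in for what the hardware would do in a single broadcast. The calling code in `adjust_brightness` reads like the English sentence quoted above: add the brightness value to every pixel, then clamp where needed.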
In the Figure 13 example, the CRAM program is more expressive than the standard version, because it hides the fact that we work on all the pixels: everything is done in parallel, from the type declaration to the comparison and the assignment.

iii) Optimization with host/CRAM code

Figure 14: Host/CRAM assembly code

There are not yet any complete compilers able to translate programs efficiently and to balance CRAM code with host code: some computations may run faster in the CRAM and others on the host, depending on whether the CRAM is used as the main memory or as an extension card, or on the precision required. Programmers therefore still have to write some assembly code to state which part of the program is executed in the CRAM, the rest being executed on the host. This is written with the keywords 'CRAM' and 'END CRAM', with CRAM-specific instructions inside the block. Designing a CRAM program is not really cumbersome, but some principles must be followed to produce efficient code (parallel processing and the precision needed).

b. Energy consumption:

Thanks to the integration of all the elements on a single chip (memory, bus, processor), energy consumption is very low. The main advantage of this processing-in-memory design is that no external bus is required, which saves a lot of energy. Typically, when data is sent on a bus, a lot of overhead is needed to specify the destination of the data and to add error-control codes, so energy is wasted on useless data transport. In CRAM, the PEs are integrated at the sense amplifiers, so almost all the data bits driven are used in the computation. A study on energy consumption compares a 200 MHz Pentium and a 16 Kb CRAM chip, also at 200 MHz, each with a standard electrical interface.
The results are impressive: the Pentium consumes 110 pJ per data bit, while the CRAM consumes about 5.5 pJ, a reduction by a factor of 20. For today's computers, saving energy is a major concern because of the heat produced by the processors: a Pentium 4 can consume around 100 W and therefore needs a powerful and noisy cooling system.

IV. The future

This analysis of the CRAM architecture raises some questions about its evolution, its adoption by the mass market and its use in the professional market.

First, among the three architectures presented (CRAM, IRAM and RAW), we can wonder whether one of them will lead the market or become an everyday technology. On this question, we should remember the Rambus case: Rambus memory technology was better than SDRAM and DDR-SDRAM, and it had important sponsors (Intel among them), yet the product did not reach the expected sales. The main reason was probably its high price: despite its obvious qualities, the market rejected it. An architecture needs more than sheer performance to reach the top and win over users; it must also be easy to implement and to program, and offer steady improvement rather than huge speedups in a few very specific domains.

Second, we may see these memories as the end of today's processor-centered architectures. Some researchers strongly believe in this and even see it as the only possible evolution of today's computers: it offers higher performance, reduces energy consumption and provides a highly scalable architecture. Looking at supercomputers, we can easily imagine that cooling 2000 processors is not an easy task, and such solutions would be welcome.
Another important point about these memories is the possibility of reaching a petaops (10^15 operations per second) with CRAM. This is actually possible with current technology, and a study came up with interesting results: four scientists from the University of Alberta in Canada (Prof. Elliott among them) found that with 500 MHz SRAM and one PE for every 512 bytes, 1 TB of RAM would be enough to reach a processing power of one petaops. While this may seem a crazy idea, the main memory of some current supercomputers is already close to a terabyte. Reaching the petaops has been one of the most challenging goals for supercomputers in the last few years, ever since its realization became conceivable.

Finally, to emphasize the usefulness and power of these PIM designs, I will present an IBM project called Blue Gene. A supercomputer is needed to simulate protein folding, a problem of enormous complexity.

Figure 15: Blue Gene/P architecture

This project is interesting for this discussion because the supercomputer is based on PIMs. The main idea is to implement the processor and the memory on the same chip and to put as many chips as possible together: literally, to create arrays of PIMs. IBM's plan is based on three supercomputers: Blue Gene/C, L and then P. The first one, Blue Gene/C, was released last year and is ranked 73rd among the 500 most powerful computers. IBM wants to release the next version, Blue Gene/L, in 2005, with about 200 to 360 TeraFlops. To give an idea of its power, it will be faster than the combined computing power of today's 500 most powerful supercomputers. Then, in 2007, the final version, Blue Gene/P, will be released and will finally reach the PetaFlops. Compared to the famous Deep Blue, this is 1000 times faster.
To continue with numbers, this is also equivalent to 6 times the processing power of today's most powerful supercomputer, the Earth Simulator in Japan. Its energy consumption will be 1/15th of the Earth Simulator's, and its footprint 10 times smaller (just half a tennis court). Thanks to this reduced heat production, this supercomputer will be air-cooled, which is uncommon enough to be highlighted. Coming back to personal computers, we would need 2 million of them to equal this power.

Figure 16: Supercomputers and the latest Apple G5 processor compared

To sum up, the technology for processing in memory is ready and working. IBM has released the first version of this architecturally new supercomputer, and the figures obtained are very encouraging: more power in less space, more scalability and lower power consumption. More than a prototype, Blue Gene is a good preview of the future of supercomputers and personal computers.

Bibliography:
1. Mark Scheffer, "Liberation from the Von-Neumann bottleneck?"
2. Duncan Elliott, "Computational RAM", http://www.eecg.toronto.edu/~dunc/cram/
3. The IRAM project, University of California, Berkeley: http://iram.cs.berkeley.edu
4. Peter M. Nyasulu, "System Design for a Computational-RAM Logic in memory Parallel Processing Machine"
5. CRAM information, University of Toronto: http://www.eecg.toronto.edu/~dunc/cram/
6. CRAM information, University of Alberta: http://www.ece.ualberta.ca/~elliott/
7. IRAM, University of California, Berkeley: http://iram.cs.berkeley.edu/
8. IRAM publications: http://iram.cs.berkeley.edu/publications.html
9. PIM documentation: http://www.nd.edu/~pim/
10. ACM Digital Library: http://www.acm.org/dl/
11. The top 500 supercomputers in the world: www.top500.org
12. Carlos Carvalho, "The Gap between Processor and Memory Speeds"
13. David Patterson and Katherine Yelick, "Bridging the processor-memory gap"