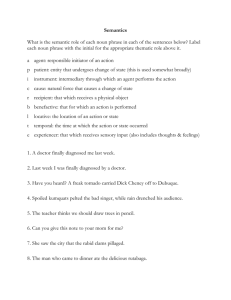

Evaluation of Evidence

Professor Martina Morris

Notes for Papers

Ideally, social science methodology consists of rules that are used to construct and evaluate the adequacy of proposed explanations. The purpose of methodology is to eliminate unsupported differences (often termed biases) between individuals. It allows you to trust another person's research conclusions in the sense that you could expect to reach the same conclusions if you had conducted the research yourself in an objective manner. If two social scientists follow accepted methodology in their research, they should obtain the same findings with respect to the same data. For diverse reasons, research conducted by different people varies in insight and adequacy. Rules of methodology serve as guidelines for critically evaluating the relative adequacy of research arguments proposed by different people. Rules of methodology enable you to determine the extent to which you can trust the conclusions presented in a study.

In a brief paper (3 to 5 TYPED pages) you are to justify your trust in one or more of the conclusions reached in the assigned article. It is up to you to identify a theme, an angle, for your paper. Use your imagination, but here are some points that are likely to be useful guides to selecting a paper topic:

Focus your attention on the most central propositions in the research argument. Avoid tangential or trivial conclusions. A proposition is central to the extent that it is a basis for other propositions, or the conclusion. A datum or class of data is central to the extent that it is the only evidence available to support the most central propositions and conclusions. In evaluating a research argument, extensive flaws in tangential conclusions are less significant than a single flaw in a central conclusion. Go for the throat of the argument.

Focus on bias rather than noise. Random errors in observation are worth noting, but they merely attenuate the strength of empirical evidence.
Systematic errors are much more critical.

Focus on possible confounding variables that have been ignored. However, remember that it is not enough to argue that a variable may have been overlooked. You must argue that this variable would change the findings, explain how you would use it to do so, and (if possible) present some evidence suggesting your argument is supported.

Where possible, present your analysis as one of two (or more) arguments explaining the same data. This strategy has been explicit in class. Given the many arguments that are possible and plausible explanations for a social fact, it is less informative to present evidence on one plausible argument than it is to evaluate the relative merits of alternative arguments purporting to explain the same data.

I recommend three sections at a minimum:

(PURPOSE) In the first paragraph of your paper, briefly state the argument made in the article you are evaluating, and the argument that you plan to make. You are not writing a mystery novel; put your conclusions up front.

(ARGUMENT) The body of your paper should be a logical sequence of paragraphs, where each paragraph describes an element in your argument and presents your evidence on that element.

(CONCLUSION) Spend a paragraph restating your conclusion and summarizing the overall strength of the evidence presented for your conclusion.

RE-READ THE PRECEDING POINTS. Far too many students write ineffective papers because they do not take a little time to organize what they wish to communicate with their paper. If you cannot state the purpose of your paper in a sentence, you cannot communicate it to others effectively. Stop. Condense your remarks down to a key theme. Make that theme explicit in your opening and closing paragraphs.

Before you turn in your paper, check it for the following common errors:

Never use the word PROVE except in logic and mathematics.
Evidence confirms, supports or illustrates an argument (rather than proving the argument). Same goes for DISPROVES.

Avoid anthropomorphizing. Papers don't argue, people do. The author is responsible for the argument. The paper is merely the vehicle for delivering the argument.

Data are plural. Datum is singular. Data typically refer to past events and so should be discussed in the past tense. Arguments are present tense unless you are commenting on an already published argument.

Be extremely careful with the language of causation. The typical social science causal variable is presented as a sufficient cause, not a necessary one.

Minimize expressions of personal judgment and maximize argument. Avoid statements such as the following: "Durkheim is brilliant." "Durkheim is fundamentally flawed."

Check your spelling. There is no excuse for spelling incorrectly at this stage in your career with the many tools you have at your disposal. Spelling errors make you look foolish.

Some key elements of a research argument (and useful references for more detailed discussion of the elements) are listed below. The elements, and the critical questions that they imply, can be raised as issues in evaluating the adequacy of an argument. They are central issues in many research arguments, so may be useful to you in formulating topics for your papers. You need not consider all of them, nor even any of them, but then you should have something better. The list is not exhaustive. The definitions are not proposed as the only definitions accepted among social scientists. They are often accepted definitions.

Remember, this is a course in the evaluation of evidence. Data, observations, are no more than facts. Do not become obsessed with facts. In later courses, you will obtain a familiarity with sophisticated methodology that social scientists invoke to ensure precision in data analysis. Evidence is the central concern here.
Evidence is a fact placed appropriately in a logical argument.

ELEMENTS IN THEORY

concept -- an unobserved property of a unit of analysis that discriminates among units

definition -- a concept or set of concepts that gives precise meaning to each element of an argument.

proposition or hypothesis -- a connection between two or more concepts indicating how categories of one concept are associated with categories of other concepts

unit of analysis -- an entity within which a proposition operates and across which concepts can vary (e.g., people, informal groups, corporations, societies)

universe -- the set of units of analysis for which a proposition is argued to be true.

ELEMENTS IN DATA

covariation or correlation -- a tendency for values/categories of one variable to occur together with specific values/categories of another variable.

variable -- an observed property of a piece of data that takes on different values in the data set, i.e., it varies from one piece of data to the next.

measurement -- the assignment of observations to categories of a concept (also termed "operationalization", should be reliable and valid)

reliability -- the extent to which a measurement could be replicated by other people at other times (should be high)

validity -- the extent to which a measurement produces a variable that measures what it purports to measure (should be high)

hypothesis -- a connection, deduced from one or more propositions, between categories of variables (e.g., predicted covariation)

empirical generalization -- an observed, but not explained, connection between categories of variables (e.g., often observed, but unexplained, covariation)

population -- a group (or set of units of analysis) that one wants to study and make conclusions about.

sample -- a subset of units in a population drawn to "represent" the population of interest.
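
The definitions of covariation and reliability can be made concrete with a small simulation. The sketch below is only an illustration under invented assumptions: the variables, the noise levels, and the function names `pearson` and `simulate_attenuation` are hypothetical and do not come from any assigned article. It shows the sense in which random measurement error "merely attenuates" evidence: an unreliable measurement of X weakens the observed correlation with Y without creating a relation that is not there.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_attenuation(n=5000, seed=0):
    """Return (r with X measured exactly, r with X measured unreliably)."""
    rng = random.Random(seed)
    # Assumed data-generating process: Y depends on X plus other influences.
    x = [rng.gauss(0, 1) for _ in range(n)]
    y = [xi + rng.gauss(0, 1) for xi in x]
    # Unreliable measurement: the recorded X is the true X plus random error.
    x_recorded = [xi + rng.gauss(0, 1) for xi in x]
    return pearson(x, y), pearson(x_recorded, y)


if __name__ == "__main__":
    r_exact, r_noisy = simulate_attenuation()
    # Under this model the theoretical values are about 0.71 and 0.50.
    print(f"correlation with exact X:      {r_exact:.2f}")
    print(f"correlation with unreliable X: {r_noisy:.2f}")
```

The noisy correlation is weaker but has the same sign, which is why noise is worth noting in a paper but is rarely the strongest line of criticism.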
ELEMENTS IN ANALYSIS

operationalize -- to translate a concept into something that can be measured.

face validity -- an appeal to common sense.

typology -- an explanatory classification.

controls -- factors held constant so as to reveal social facts or covariation independent of the factors held constant (i.e., factors on which individuals must be homogeneous before they can be meaningfully compared)

proxy variable -- an available substitute for an unmeasured variable of interest.

confounding variable -- an uncontrolled variable Z that distorts the true association between variables X and Y, making it appear that there is a relation when there is none (a spurious relation), or making it appear that there is no relation when there is one (a suppressive effect).

spurious effect -- an association between X and Y that disappears when the true causes of Y are held constant

deductive reasoning -- reasoning from theory to evidence by generating a prediction.

inductive reasoning -- reasoning from evidence to theory by making a generalization.

verification -- a search for evidence supporting a hypothesis

falsification -- a search for evidence contradicting a hypothesis

disconfirming evidence -- evidence that conclusively demonstrates that a hypothesis is incorrect.

confirming evidence -- evidence that supports, but does not prove, a hypothesis.

counterfactual -- a thought experiment that generates a (testable) prediction by imagining an alternative initial condition.

SOME METHODOLOGICAL QUESTIONS

WHY WAS THE STUDY CONDUCTED? What is the general issue that it addresses? How did the authors come to the propositions that they test and the data that they analyze? What was the hoped-for result? Could this hope have biased the presentation of evidence or the adequacy of the conclusions?

HOW ADEQUATE ARE THE DEFINITIONS? Are the key terms in the argument clearly defined?
Are there ambiguities or inconsistencies in the definitions?

HOW ADEQUATE IS THE CONNECTION BETWEEN THEORY AND DATA? Are the theoretical propositions clearly stated so that concepts, units of analysis and hypotheses can be stated clearly and easily? Is the presentation of hypotheses logical and consistent in light of available research and the propositions under consideration?

HOW ADEQUATE IS THE MEASUREMENT? Are concepts adequately operationalized? What evidence of validity is presented? How might the variables be invalid measures of the concepts under discussion? What evidence is presented on reliability? How might the measured variables be unreliable?

HOW ADEQUATE IS THE SELECTION OF OBSERVATIONS FOR THE STUDY? Do the samples adequately represent the population that the authors claim to be interested in? Or are they biased in some manner such that evidence supporting hypotheses could be exaggerated (or understated)?

HOW ADEQUATE IS THE SEARCH FOR EVIDENCE? Are the controls appropriate? Do the authors bury evidence pertinent (supportive or antagonistic) to the hypothesis being tested? Are the authors effective in holding constant relevant confounding variables?

HOW ADEQUATE IS THE VERIFICATION? What hypotheses are supported? How strong is the evidence in support of the accepted hypotheses? Have hypotheses implied by the proposition under test not been subjected to empirical tests -- even though they could have been verified with the available data?

HOW ADEQUATE IS THE FALSIFICATION? What hypotheses are rejected? Is the evidence against rejected hypotheses as strong as that supporting accepted hypotheses? Is it strong enough to justify rejection? Have hypotheses implied by the proposition under test not been subjected to empirical tests -- even though they could have been falsified by the available data?

HOW ADEQUATE IS THE STUDY AS A WHOLE? Not every element in a research argument will be as solid as every other element. Some are much stronger than others.
To what extent are the most central elements in the research argument well presented and supported with evidence?

2/15/2016
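
As a supplement to the definitions of confounding variable, spurious effect and controls under ELEMENTS IN ANALYSIS, here is a minimal simulated sketch of what "holding Z constant" can look like. Everything in it is an assumption made for illustration: the binary confounder Z, the effect sizes, and the function names `pearson` and `simulate_confounding` are hypothetical. X and Y have no direct connection in this setup; both depend only on Z.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_confounding(n=6000, seed=1):
    """Return (overall r of X and Y, {z value: r of X and Y within that z})."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.choice([0, 1])          # assumed binary confounder
        x = 2 * z + rng.gauss(0, 1)     # X depends on Z only
        y = 2 * z + rng.gauss(0, 1)     # Y depends on Z only, not on X
        rows.append((z, x, y))

    overall = pearson([r[1] for r in rows], [r[2] for r in rows])

    # "Controlling" for Z here means comparing only units with the same Z.
    within = {}
    for level in (0, 1):
        stratum = [r for r in rows if r[0] == level]
        within[level] = pearson([r[1] for r in stratum],
                                [r[2] for r in stratum])
    return overall, within


if __name__ == "__main__":
    overall, within = simulate_confounding()
    # In theory: overall r is about 0.5, within-stratum r is about 0.
    print(f"X-Y correlation ignoring Z: {overall:.2f}")
    for level, r in within.items():
        print(f"X-Y correlation with Z = {level}: {r:.2f}")
```

A paper that raises an ignored confounder is making the claim this sketch illustrates: that the reported association would shrink or vanish once units are compared within levels of Z. The paper still has to argue why that would happen in the study at hand.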