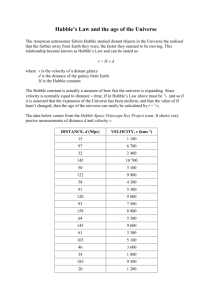

Optimizing Science with the Hubble Space Telescope

Brad Whitmore, whitmore@stsci.edu

One of the primary goals of the Space Telescope Science Institute (STScI) is to optimize science with the Hubble Space Telescope. This mantra has been with me since I joined the Institute some 27 years ago. It infuses everything we do at the Institute, from organizing the telescope allocation process, to scheduling the telescope, to calibrating and characterizing the instruments, to making the data available through the archives.

I was recently reminded of this by a presentation given by Riccardo Giacconi at the 20th anniversary Hubble Fellowship Symposium. He talked about the early days when he was the Institute’s Director, and about the “science system engineering” view we took to optimizing science from Hubble. He has expanded on this topic in his book, Secrets of the Hoary Deep: A Personal History of Modern Astronomy. In the context of the symposium, Giacconi explained that this “system” approach even included the creation of the Hubble Fellowship program.

I recently became the Institute’s Project Scientist for Hubble. I was not expecting to apply for this position, but when the job advertisement came out and included statements like “explore potential science-driven enhancements that STScI can make to increase the impact of Hubble,” I found that it resonated. I felt it was a description of all the positions I have held over the years while at the Institute. In preparing for the job interview I started thinking about the various facets of optimizing science, not realizing that this was simply following the system-engineering approach that had been ingrained in me since arriving at the Institute.

I found myself thinking about a metric called “discovery efficiency,” which I first heard used by Holland Ford to characterize the improvement in science that the planned Advanced Camera for Surveys (ACS) would have over the existing Wide Field Planetary Camera 2 (WFPC2).
The discovery efficiency is the product of the quantum efficiency (QE) and the field of view (FOV) of a detector. Unless a proposed new instrument improves the discovery efficiency by at least an order of magnitude over its predecessor, it is hard to generate the enthusiasm needed to win over a review committee. In a recent example, the discovery efficiency in the ultraviolet for Wide Field Camera 3 (WFC3) is about 50 times better than that of WFPC2 (primarily due to the better QE) and better by a similar margin than that of the ACS (primarily due to the smaller FOV of the High Resolution Camera on ACS). The discovery efficiency of WFC3 in the infrared is also about 30 times better than that of NICMOS, due to a combination of QE and FOV.

This concept of a science metric led me to develop the following framework for optimizing science with Hubble, based on three categories of performance: telescope capabilities, project turnaround time, and user bandwidth.

Component 1. Telescope Capabilities

The primary attributes defining the telescope capabilities are, of course, the capabilities of the instruments themselves. The QE and FOV of the detectors play major roles, but so do instrument modes: which ones are enabled, and how well they are calibrated (for example, at the 10, 1, or 0.1% level). Often, hard tradeoffs must be made on only partial information. For example, does the science that would result from enabling a rarely used mode justify the observing time and analysis effort needed to calibrate and characterize the mode?

Capabilities can also be improved by utilization factors, such as maximizing the scheduling efficiency, making good use of special observing modes like snapshots and parallels, and maximizing the lifetime of the instruments (health and safety issues are always paramount). For example, starting in Cycle 17, we improved our utilization of Hubble by enabling a new way of performing pure parallel observations.
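
The discovery-efficiency metric defined above (QE times FOV) lends itself to a small worked sketch. The Python below is only an illustration of the arithmetic: the `Detector` class, the function names, and above all the numbers are assumptions made up for this example, and they are not the measured values for WFPC2, ACS, WFC3, NICMOS, or any other instrument.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detector:
    """A detector described by the two quantities used in the metric."""
    name: str
    quantum_efficiency: float  # fraction of incident photons detected, 0 to 1
    field_of_view: float       # sky area covered, e.g. in square arcminutes


def discovery_efficiency(detector: Detector) -> float:
    """Return QE times FOV, the metric as defined in the text."""
    return detector.quantum_efficiency * detector.field_of_view


def improvement_factor(new: Detector, old: Detector) -> float:
    """Ratio of the new detector's discovery efficiency to the old one's."""
    return discovery_efficiency(new) / discovery_efficiency(old)


# Placeholder numbers chosen only to make the arithmetic easy to follow;
# they are NOT the real values for any Hubble instrument.
old_camera = Detector("old camera (hypothetical)", quantum_efficiency=0.25, field_of_view=4.0)
new_camera = Detector("new camera (hypothetical)", quantum_efficiency=0.5, field_of_view=24.0)

factor = improvement_factor(new_camera, old_camera)
print(f"Improvement in discovery efficiency: {factor:.1f}x")
print("At least an order of magnitude:", factor >= 10.0)
```

With these made-up inputs, a factor of 2 in QE and a factor of 6 in FOV combine to a factor of 12, which illustrates how a gain can come from either quantity or from both together.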
It is clear that parallel observations have the potential to greatly enhance the overall science from the telescope, since in principle nearly twice as much data can be taken. In practice, the gain is somewhat less, since parallel observations cannot be attached to all primary observations. There are two classes of parallel observations: coordinated parallels, where an observer includes the parallel observations in their own proposal, and pure parallels, where (before Cycle 17) the schedulers added parallel observations based on a generic algorithm (e.g., if in the galactic plane, use this set of filters, but if out of the plane, use a different set of filters). In practice, the science gains for the pure parallels have been relatively minor, partly because there was no “owner” who had specifically designed the observations for their project.

In Cycle 17, we changed the way we do pure parallels. The basic difference is that opportunities for deep parallel observations are now identified after the Phase I allocation (e.g., long primary COS and STIS exposures where several orbits are available for parallel observations of “blank fields”), and these opportunities are then matched to specific projects that have been allocated time for deep parallel observations.

Component 2. Project Turnaround Time

Typically, the turnaround time for a Hubble observing project, defined as the time from the initial idea to the published paper, is several years. Our goal is to reduce the turnaround time by eliminating unnecessary delays in the timeline and improving the efficiency of data analysis. Observers should be spending most of their time designing observations and interpreting the results, rather than waiting on the clock or calendar, or performing routine aspects of the analysis, like flat-fielding.

Over the years, we have cut several months off the time delay between the selection of proposals and when observations actually start.
Efficiency improvements have included providing high-quality data products via the calibration pipeline to reduce redundancy, and developing a set of generic analysis software tools for the community (Space Telescope Science Data Analysis Software, STSDAS). While scientists will always need to write some unique software code that is specific to their own project, the goal is to minimize the redundancy and provide standardized tools for the parts of the analysis that are repeated by a large number of users.

Reducing project turnaround times was the primary goal of developing the Hubble Legacy Archive (HLA) in recent years. The HLA makes it easier to determine whether some observation already exists in the archive, and provides general-use, value-added products that go a step beyond anything available in the past (e.g., combined astrometrically corrected images and mosaics, color images, and source lists). Many users can go directly to the data products they need and skip the whole proposal–observation–analysis cycle.

Component 3. User Bandwidth

The third component of our science optimization framework is “user bandwidth.” While this component is more nebulous than the others, we might paraphrase it as the attempt to find the best mix of proposals from the broadest spectrum of observers for as many years as possible.

The Telescope Allocations Committee (TAC) is charged with recommending the highest-quality science program. Over the years, the Institute has developed a set of procedures and guidelines to ensure a good mix of small proposals (allowing quick, innovative research) and large proposals (survey-type studies involving large statistical samples). Similarly, we strive to have a diverse set of users, including theorists and observers, experienced users and novice users with fresh ideas, a wide range of scientific disciplines, and a multi-wavelength user base.

Another aspect of bandwidth relates to science opportunities.
We want the Calls for Proposals to shape the allocation of Hubble to achieve the best total science. We want to keep Hubble operating long enough to overlap with Webb, which would provide powerful synergy. Also, we want to ensure that Hubble data will be readily available to future generations via the archives.

An example of a recent development in this area is the Multi-Cycle Treasury Program (MCTP), an extension of the original “Key Projects,” which were completed in the early cycles (e.g., Distance Scale, QSO Absorption Lines, and Medium-Deep Survey), and of the more recent Treasury Program. The goal of MCTP is to make it possible for very large projects to compete more effectively by designating a certain fraction of the observing time for them.

Figure 1. The footprint page from the Hubble Legacy Archive (www.hla.stsci.edu) for a search of 30 Dor. The positions of available images are shown for all instruments. In this particular case, a WFPC2 image has been selected by the user (the yellow outline).

Figure 2. The color image from WFPC2 identified by the yellow outline in Fig. 1. For a complete description, see the HubbleObserver Facebook page (www.facebook.com/pages/HubbleObserver/276537282901/; May 24, 2010 post).

First Thoughts & What You Can Do

Other frameworks for optimizing Hubble science can be, and have been, developed, of course. One example is an end-to-end or cradle-to-grave approach, where each step in the project lifecycle is scrutinized and optimized (i.e., proposal submission → allocation → phase II development → scheduling → calibration → archiving → analysis → publication). This approach largely reflects how the Institute is organized, with separate branches for most of the steps. The framework outlined above is designed to take a somewhat orthogonal approach, which may identify opportunities that were missed in the past and might now be explored.
The focus of this framework is more on long-term than on day-to-day considerations, especially in the user bandwidth component. For example, it is important to ensure that our data products are compatible with the Virtual Observatory, and ready for full integration. This bandwidth consideration will ensure that data products will be easily available and fully useful for future generations of astronomers. As another facet of this goal, we must capture as much documentation describing the instruments and calibration of the data as possible, to ensure current knowledge and expertise is not lost.

Another innovation is the focus on project turnaround time, which promotes a user viewpoint. For example, developing a tool or a data product that greatly simplifies a common analysis step for many users might reduce the average turnaround time, and hence, have a very high science impact.

One important factor in optimizing science with Hubble is keeping our users informed. This Newsletter is one channel. On shorter timescales, we use e-mail or post items on the Institute web pages. Two new methods are the HubbleObserver Facebook and Twitter pages, which enable observers to stay informed about the latest relevant Hubble information without requiring them to scout it out. Examples of topics highlighted in the past few months have ranged from proposal statistics, instrument news, and recent press releases, to Data Release 4 of the HLA.

One of the main reasons for this article is to elicit your help in thinking about potential ways to optimize the science coming from Hubble, both now and for the future. If you have some pet idea (or perhaps reading this has inspired some new possibility), please pass it along for consideration (hubbleobserver@stsci.edu). While we will be scaling back our level of support for Hubble over the next several years as we ramp up to support Webb, we are always looking for new ideas where the cost-benefit ratio is advantageous.
I plan to come back in a year or so and report on changes we have made based on your feedback.

Figure 3. A snapshot of the HubbleObserver Facebook page, taken in April 2010.