Introduction and Synchronization Mechanisms (Chapters 1&2)


Topics of discussion

Distributed operating systems

Multiprocessor operating systems

Database operating systems

Real-time operating systems


Synchronization Mechanisms


Process

A process can be thought of as a program whose execution has started and not yet terminated.

A process can be in three states:

Running: The process is using a processor to execute instructions.

Ready: The process is executable, but other processes are executing and all processors are currently in use.

Blocked: The process is waiting for an event to occur.

Each process uses a Process Control Block (PCB) data structure to store complete information about the process. The PCB contains the following information:

Process name and id, processor state (program counter, register contents, interrupt masks, etc.), process state, priority, privileges, virtual memory address translation maps, information about other resources owned by the process, etc.
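The PCB fields listed above map naturally onto a record type. The following Python sketch is only an illustration: the class and field names, the `ProcessState` enum, and the `transition` helper are hypothetical, and the allowed transitions encode the usual three-state model rather than any particular kernel (a real kernel keeps this information in a C structure, such as Linux's `task_struct`).

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class ProcessState(Enum):
    RUNNING = auto()   # using a processor to execute instructions
    READY = auto()     # executable, but all processors are busy
    BLOCKED = auto()   # waiting for an event to occur


# Typical legal state changes (an assumption of this sketch, not an OS API).
ALLOWED = {
    (ProcessState.READY, ProcessState.RUNNING),    # dispatched to a processor
    (ProcessState.RUNNING, ProcessState.READY),    # preempted
    (ProcessState.RUNNING, ProcessState.BLOCKED),  # waits for an event
    (ProcessState.BLOCKED, ProcessState.READY),    # the awaited event occurred
}


@dataclass
class PCB:
    name: str
    pid: int
    state: ProcessState = ProcessState.READY
    priority: int = 0
    privileges: set = field(default_factory=set)
    # processor state, saved and restored on a context switch
    program_counter: int = 0
    registers: dict = field(default_factory=dict)
    interrupt_mask: int = 0
    # virtual memory address translation map: virtual page -> physical frame
    page_table: dict = field(default_factory=dict)
    # other resources owned by the process (open files, devices, ...)
    resources: list = field(default_factory=list)


def transition(pcb: PCB, new_state: ProcessState) -> None:
    """Change a process's state, rejecting moves the three-state model forbids."""
    if (pcb.state, new_state) not in ALLOWED:
        raise ValueError(f"illegal transition {pcb.state.name} -> {new_state.name}")
    pcb.state = new_state


editor = PCB(name="editor", pid=42, priority=5)
transition(editor, ProcessState.RUNNING)
transition(editor, ProcessState.BLOCKED)
transition(editor, ProcessState.READY)
print(editor.state.name)  # READY
```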


Concurrent Processes

Two processes are concurrent if their execution can overlap in time.

In multiprocessor systems, concurrent processes can use different CPUs at the same time.

In single-processor systems, concurrent processing can be thought of as one process using the CPU while another process uses system I/O, etc. If a CPU interleaves the execution of several processes, logical concurrency is obtained.

Processes are serial if the execution of one must be complete before the execution of the other can start.

Concurrent processes interact using the following mechanisms:

Shared variables.

Message passing.


Threads

Threads are mini processes within a process and therefore share the address space of that process.

Every process has at least one thread.

A thread executes a portion of the program and cooperates with other threads concurrently executing within the same process and address space.

Because threads share the same address space, a context switch between two threads of the same process is cheaper than a context switch between two separate processes (the address space does not have to be switched).

Each thread has its own program counter, register set, stack, and control block.


Critical Sections

In multiprocessing systems, processes or threads may compete with each other for system resources that can only be used exclusively.

The CPU is a good example of this type of resource, as only one process or thread can execute instructions on a single CPU at a time.

Processes may also cooperate with each other by using shared memory or message passing.

In both cases, mutual exclusion is necessary to ensure that only one process or thread at a time holds a resource or modifies shared memory.

A critical section is a code segment in a process in which some shared resource is accessed.
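To make the critical-section idea concrete, here is a minimal Python sketch (the names `counter` and `worker` are illustrative). `counter += 1` is a read-modify-write on shared memory, so two unsynchronized threads can interleave and lose updates; wrapping it in a `threading.Lock` turns the increment into a critical section that only one thread executes at a time.

```python
import threading

counter = 0                      # shared variable
counter_lock = threading.Lock()  # guards the critical section


def worker(iterations: int) -> None:
    global counter
    for _ in range(iterations):
        with counter_lock:       # entry: acquire exclusive access
            counter += 1         # critical section: read-modify-write of shared data
        # leaving the with-block is the exit: the lock is released


threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # 40000
```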


A solution to the mutual exclusion problem must satisfy the following requirements:

Only one process can execute its critical section at any one time.

When no process is executing in its critical section, any process that requests entry to its critical section must be permitted to enter without delay.

When two or more processes compete to enter their respective critical sections, the selection cannot be postponed indefinitely (freedom from deadlock).

No process can prevent any other process from entering its critical section indefinitely (freedom from starvation).


Some Mechanisms for Ensuring Mutual Exclusion

Polling or Busy waiting

Disabling interrupts

Semaphores

8

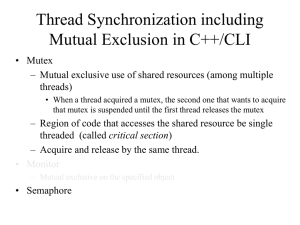

Semaphores

A semaphore is an integer variable S and an associated group of waiting processes for which only two operations may be performed:

Wait operation P(S): if S ≥ 1 then S := S - 1
        else the executing process joins the wait queue for S and forfeits the processor (it blocks);
        endif

Signal operation V(S): if the wait queue for S is not empty then remove a process from the queue and give it the resource
        else S := S + 1
        endif
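The P/V definition above can be sketched in Python as follows. `QueueSemaphore` is a hypothetical class written for this illustration: a lock makes P and V indivisible, and each blocked caller waits on its own `threading.Event`, so V hands the resource directly to the oldest waiter instead of incrementing S. (Python's built-in `threading.Semaphore` instead increments its counter and lets a woken thread re-check it.)

```python
import threading
from collections import deque


class QueueSemaphore:
    """Semaphore with an explicit FIFO wait queue, following the P/V definition."""

    def __init__(self, initial: int) -> None:
        self._lock = threading.Lock()   # makes P and V indivisible
        self._s = initial
        self._waiters = deque()         # the wait queue for S

    def P(self) -> None:
        with self._lock:
            if self._s >= 1:
                self._s -= 1
                return
            granted = threading.Event()
            self._waiters.append(granted)   # join the wait queue for S
        granted.wait()                      # blocked until some V hands over the resource

    def V(self) -> None:
        with self._lock:
            if self._waiters:
                self._waiters.popleft().set()   # give the resource to the oldest waiter
            else:
                self._s += 1

    @property
    def value(self) -> int:
        with self._lock:
            return self._s


mutex = QueueSemaphore(1)
total = 0


def add(n: int) -> None:
    global total
    for _ in range(n):
        mutex.P()
        total += 1          # critical section
        mutex.V()


threads = [threading.Thread(target=add, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(total, mutex.value)  # 4000 1
```

Because V never increments S while a process is waiting, a newly arriving process cannot overtake a woken waiter, so waiters are served in FIFO order.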


A semaphore can be used for synchronization and mutual exclusion.

Synchronization: Coordination of various tasks or processes.

Mutual exclusion: Protection of one or more resources, or implementation of critical sections among several competing processes sharing the critical section.

There are three types of semaphores:

Binary semaphores

Mutual exclusion semaphores (mutex)

Counting semaphores


Binary Semaphores

The simplest form of semaphore.

Primarily used for synchronization. Can also be used for mutual exclusion.

Using a binary semaphore, a process can wait (block or pend) on an event that triggers the release of the semaphore.

Should be used in place of polling.

- Polling is an inefficient way of synchronizing or waiting for an event because it consumes processor time.

- Using semaphores, the process is in a blocked or pended state while other processes use the processor.

- Upon the occurrence of the event, the pended process becomes ready to execute.
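A minimal Python sketch of pending on an event, assuming a simple one-producer, one-consumer setup (the names are illustrative). Python has no separate binary-semaphore type, so `threading.Semaphore(0)` is used and only ever holds 0 or 1 here; the consumer blocks in `acquire()` without consuming processor time until the producer's `release()` makes it ready.

```python
import threading

data_ready = threading.Semaphore(0)   # used as a binary semaphore: starts "taken"
shared = {}


def consumer() -> None:
    data_ready.acquire()              # pend until the event occurs; no polling loop
    shared["seen"] = shared["value"] * 2


def producer() -> None:
    shared["value"] = 21
    data_ready.release()              # signal the event: the pended consumer becomes ready


c = threading.Thread(target=consumer)
p = threading.Thread(target=producer)
c.start()
p.start()
c.join()
p.join()
print(shared["seen"])  # 42
```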


Mutual Exclusion Semaphores

Used to provide resource consistency in a system for resources shared simultaneously by multiple tasks.

- Shared resources can include shared data, files, hardware devices, etc.

To protect against inconsistency in a multitasking system, each task must obtain exclusive access to a shared resource.


One common problem that can occur in a multitasking system sharing resources simultaneously, when mutual exclusion is not designed properly, is known as a “race condition”.

- The system's behavior differs depending on which task reaches the resource first.

- Race conditions can go undetected in a system for a very long time and can be very difficult to detect and fix.

When using mutual exclusion semaphores, beware of deadlock.

- Use only one mutual exclusion semaphore to protect resources that are used together.

When using mutual exclusion semaphores, avoid starvation situations.

- Do not include unnecessary code in the critical section.

Mutual exclusion semaphores cannot be used in interrupt service routines, because an interrupt service routine must never block waiting for a semaphore.
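The guideline about using a single mutual exclusion semaphore for resources that are used together can be illustrated with a hypothetical two-account transfer in Python. With one lock per account, `transfer("A", "B", ...)` could lock A then B while `transfer("B", "A", ...)` locks B then A, and the two could deadlock; one lock guarding both balances removes that possibility, and the critical section contains only the shared updates.

```python
import threading

accounts = {"A": 100, "B": 100}
accounts_lock = threading.Lock()   # ONE mutex for both balances, which are always updated together


def transfer(src: str, dst: str, amount: int) -> None:
    with accounts_lock:            # critical section holds only the shared updates
        if accounts[src] >= amount:
            accounts[src] -= amount
            accounts[dst] += amount


threads = [
    threading.Thread(target=transfer, args=("A", "B", 7) if i % 2 == 0 else ("B", "A", 7))
    for i in range(100)
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(accounts["A"] + accounts["B"])  # 200
```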


Counting Semaphores

Yet another means to implement task synchronization and mutual exclusion.

A counting semaphore is just like a binary semaphore, except that it keeps track of the number of times the semaphore has been given or taken.

- Counting semaphores use a counter to keep track of the available semaphore units, hence the name counting semaphore.

- The semaphore is created with a fixed number of units available.

- Every time the semaphore is taken, its count is decremented (a task that tries to take it when the count is zero blocks).

- When the semaphore is given back, if a task is blocked on the semaphore, that task takes it; otherwise, the count is incremented.

Counting semaphores are generally used to guard resources with multiple instances.
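A counting semaphore guarding a pool of identical instances might look like this in Python (a sketch; `POOL_SIZE` and the bookkeeping variables are illustrative). `threading.Semaphore(POOL_SIZE)` starts with three units, each `with slots:` takes one (blocking at zero), and leaving the block gives it back; the `peak` counter confirms that no more than three tasks ever hold an instance at once.

```python
import threading
import time

POOL_SIZE = 3
slots = threading.Semaphore(POOL_SIZE)  # counting semaphore created with 3 units
stats_lock = threading.Lock()
in_use = 0
peak = 0


def use_instance() -> None:
    global in_use, peak
    with slots:                         # take: count decremented, or block while it is 0
        with stats_lock:
            in_use += 1
            peak = max(peak, in_use)
        time.sleep(0.01)                # pretend to use one instance of the resource
        with stats_lock:
            in_use -= 1
    # leaving the with-block gives the unit back, waking one blocked task if there is one


threads = [threading.Thread(target=use_instance) for _ in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(peak <= POOL_SIZE)  # True
```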


Classical Synchronization Problems

The dining philosophers problem

The producer-consumer problem

The readers-writers problem

Reader’s priority

Writer’s priority
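As an example of one of these problems, the classic bounded-buffer solution to the producer-consumer problem uses two counting semaphores and a mutex. This Python sketch assumes one producer, one consumer, and a capacity of 4 (all illustrative choices): `empty` counts free slots, `full` counts filled slots, and `mutex` protects the buffer itself.

```python
import threading
from collections import deque

CAPACITY = 4
buffer = deque()
empty = threading.Semaphore(CAPACITY)  # counts free slots
full = threading.Semaphore(0)          # counts filled slots
mutex = threading.Lock()               # protects the deque itself


def producer(items) -> None:
    for item in items:
        empty.acquire()          # wait for a free slot
        with mutex:
            buffer.append(item)
        full.release()           # announce a filled slot


def consumer(n: int, out: list) -> None:
    for _ in range(n):
        full.acquire()           # wait for a filled slot
        with mutex:
            item = buffer.popleft()
        empty.release()          # announce a free slot
        out.append(item)


received = []
p = threading.Thread(target=producer, args=(range(20),))
c = threading.Thread(target=consumer, args=(20, received))
p.start()
c.start()
p.join()
c.join()
print(received == list(range(20)))  # True
```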


Language Mechanisms for Synchronization

Monitors

Used in multiprogramming environments.

Abstract data types for defining/accessing shared resources.

Consists of procedures, the shared resource, and other data.

- Procedures are the gateway to the shared resource and are called by the processes needing to access the resource.

Only one process can be active within the monitor at a time. Processes trying to enter the monitor are placed in the monitor’s entry queue.

Procedures of a monitor can only access data local to the monitor.

Variables/data local to a monitor cannot be directly accessed from outside the monitor.


Synchronization of processes is accomplished via two special operations, “wait” and “signal”, executed within the monitor’s procedures.

Execution of a wait operation suspends the caller process, and the caller process relinquishes control of the monitor.

- <condition variable>.wait

When a waiting process is signaled, it resumes execution at the statement immediately following the wait statement.

- <condition variable>.signal

Waiting processes are placed on queues (priority or standard).

- <condition variable>.queue returns true if the queue associated with the condition variable is not empty.

Monitor solution for the reader’s priority problem.


readers-writers: monitor;
begin
    readercount : integer;
    busy : boolean;
    Oktoread, Oktowrite : condition;

    procedure startread;
    begin
        if busy then Oktoread.wait;
        readercount := readercount + 1;
        Oktoread.signal; { once one reader can start reading, then they all can }
    end startread;

    procedure endread;
    begin
        readercount := readercount - 1;
        if readercount = 0 then Oktowrite.signal;
    end endread;

    procedure startwrite;
    begin
        if busy OR readercount ≠ 0 then Oktowrite.wait;
        busy := true;
    end startwrite;

    procedure endwrite;
    begin
        busy := false;
        if Oktoread.queue then Oktoread.signal { reader gets priority over writer, hence reader’s-priority solution }
        else Oktowrite.signal;
    end endwrite;

    begin { Initialization }
        readercount := 0;
        busy := false;
    end;
end readers-writers;
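The monitor above can be approximated in Python with one lock and two `threading.Condition` objects sharing it. This is a sketch, not a literal translation: Python conditions have signal-and-continue (Mesa) semantics rather than the Hoare semantics the pseudocode assumes, so each `if ... wait` becomes a `while` loop; `threading.Condition` has no public equivalent of `Oktoread.queue`, so a hypothetical `_waiting_readers` counter is kept; and writers also wait while readers are blocked, so that readers keep priority under Mesa semantics. The usage code checks that no writer ever overlaps another writer or a reader.

```python
import threading
import time


class ReadersWriters:
    """Reader's-priority readers-writers monitor, translated to Python threading."""

    def __init__(self) -> None:
        self._monitor = threading.Lock()  # only one thread active inside the monitor
        self._ok_to_read = threading.Condition(self._monitor)
        self._ok_to_write = threading.Condition(self._monitor)
        self.readercount = 0
        self.busy = False
        self._waiting_readers = 0         # stands in for Oktoread.queue

    def start_read(self) -> None:
        with self._monitor:
            self._waiting_readers += 1
            while self.busy:              # Mesa semantics: re-test after waking
                self._ok_to_read.wait()
            self._waiting_readers -= 1
            self.readercount += 1

    def end_read(self) -> None:
        with self._monitor:
            self.readercount -= 1
            if self.readercount == 0:
                self._ok_to_write.notify()

    def start_write(self) -> None:
        with self._monitor:
            # writers also defer to blocked readers to keep reader's priority
            while self.busy or self.readercount != 0 or self._waiting_readers:
                self._ok_to_write.wait()
            self.busy = True

    def end_write(self) -> None:
        with self._monitor:
            self.busy = False
            if self._waiting_readers:     # readers get priority over writers
                self._ok_to_read.notify_all()
            else:
                self._ok_to_write.notify()


# Usage: check that a writer never overlaps another writer or any reader.
rw = ReadersWriters()
check = threading.Lock()
active = {"readers": 0, "writers": 0}
violations = []


def reader() -> None:
    rw.start_read()
    with check:
        active["readers"] += 1
        if active["writers"]:
            violations.append("read during write")
    time.sleep(0.001)
    with check:
        active["readers"] -= 1
    rw.end_read()


def writer() -> None:
    rw.start_write()
    with check:
        active["writers"] += 1
        if active["writers"] > 1 or active["readers"]:
            violations.append("unsafe write")
    time.sleep(0.001)
    with check:
        active["writers"] -= 1
    rw.end_write()


threads = [threading.Thread(target=reader if i % 3 else writer) for i in range(15)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(violations)  # []
```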


Serializers

Used in multiprogramming environments.

Abstract data types defined by a set of procedures.

Can encapsulate the shared resource.

Only one process has access to the serializer at a time.

However, procedures of a serializer may have hollow regions wherein multiple processes can be concurrently active. When a process enters a hollow region, it releases possession of the serializer.

- join-crowd (<crowd>) then <body> end

- leave-crowd (<crowd>)

Uses “queues” along with a condition to provide synchronization (delay or block).

- enqueue (<priority>, <queue-name>) until (<condition>)


readerwrite: serializer
var
    readq: queue;
    writeq: queue;
    rcrowd: crowd;  { readers crowd }
    wcrowd: crowd;  { writers crowd }
    db: database;   { the shared resource }

procedure read (k: key; var data: datatype);
begin
    enqueue (readq) until empty(wcrowd);
    joincrowd (rcrowd) then
        data := read-opn(db[k]);
    end
    return (data)
end read;

procedure write (k: key; data: datatype);
begin
    enqueue (writeq) until
        (empty(wcrowd) AND empty(rcrowd) AND empty(readq));
    joincrowd (wcrowd) then
        write-opn (db[k], data);
    end
end write;
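A toy Python model of the serializer can make possession and hollow regions concrete. Everything here is an assumption of the sketch: the `Serializer` class, its `enqueue`/`joincrowd` methods, and the use of a single `threading.Condition` whose lock stands for possession of the serializer. `enqueue` waits in FIFO order until its condition holds, and `joincrowd` releases possession while the body runs, so several readers can be inside `rcrowd` at once. Priorities in `enqueue` are omitted.

```python
import threading
from collections import deque


class Serializer:
    """Toy serializer: possession is a lock; crowds are hollow regions outside it."""

    def __init__(self) -> None:
        self._cv = threading.Condition()  # holding its lock = possessing the serializer
        self._queues = {}                 # queue name -> deque of waiting tickets
        self._crowds = {}                 # crowd name -> number of members

    def __enter__(self):
        self._cv.acquire()                # gain possession of the serializer
        return self

    def __exit__(self, *exc) -> None:
        self._cv.release()

    def queue_empty(self, name: str) -> bool:
        return not self._queues.get(name)

    def crowd_empty(self, name: str) -> bool:
        return not self._crowds.get(name)

    def enqueue(self, name: str, until) -> None:
        """enqueue (name) until (condition): FIFO wait, possession released while waiting."""
        q = self._queues.setdefault(name, deque())
        ticket = object()
        q.append(ticket)
        self._cv.wait_for(lambda: q[0] is ticket and until())
        q.popleft()
        self._cv.notify_all()             # queue contents changed; re-test other conditions

    def joincrowd(self, name: str, body):
        """joincrowd (name) then body end: body runs in a hollow region."""
        self._crowds[name] = self._crowds.get(name, 0) + 1
        self._cv.release()                # give up possession while in the crowd
        try:
            return body()
        finally:
            self._cv.acquire()            # regain possession to leave the crowd
            self._crowds[name] -= 1
            self._cv.notify_all()


ser = Serializer()
db = {}  # the shared resource


def read(k):
    with ser:
        ser.enqueue("readq", until=lambda: ser.crowd_empty("wcrowd"))
        return ser.joincrowd("rcrowd", lambda: db.get(k))


def write(k, data):
    with ser:
        ser.enqueue("writeq", until=lambda: (ser.crowd_empty("wcrowd")
                                             and ser.crowd_empty("rcrowd")
                                             and ser.queue_empty("readq")))
        ser.joincrowd("wcrowd", lambda: db.update({k: data}))


writers = [threading.Thread(target=write, args=(k, k * k)) for k in range(10)]
readers = [threading.Thread(target=read, args=(k,)) for k in range(10)]
for t in writers + readers:
    t.start()
for t in writers + readers:
    t.join()
print([read(k) for k in range(10)])  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```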


Communicating Sequential Processes (CSP)

Processes interact by means of input and output commands (based on message passing, not shared memory).

Communication:

An input command in one process specifies the name of another process as its source.

An output command in the other process specifies the name of the first process as its destination.


Processes are synchronized by delaying an input (output) command in one process until a matching output (input) command is executed by another process.

Communication is not buffered: a message is transferred only when both the sender and the receiver are ready.
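Unbuffered, synchronized communication can be sketched in Python with a condition variable. The `Channel` class is hypothetical: unlike CSP, which names the partner process directly in input and output commands, it uses an anonymous channel object (closer to later channel-based languages), but it keeps the key property that `send` does not complete until a matching `receive` has taken the value.

```python
import threading


class Channel:
    """Unbuffered (synchronous) channel: send completes only when a receiver takes the value."""

    def __init__(self) -> None:
        self._cv = threading.Condition()
        self._value = None
        self._offered = False   # a sender has placed a value
        self._taken = False     # a receiver has taken the offered value

    def send(self, value) -> None:
        with self._cv:
            self._cv.wait_for(lambda: not self._offered)   # one offer at a time
            self._value, self._offered, self._taken = value, True, False
            self._cv.notify_all()
            self._cv.wait_for(lambda: self._taken)         # delay the output command
            self._offered = False
            self._cv.notify_all()                          # let the next sender offer

    def receive(self):
        with self._cv:
            # delay the input command until a matching output command is pending
            self._cv.wait_for(lambda: self._offered and not self._taken)
            self._taken = True
            self._cv.notify_all()
            return self._value


ch = Channel()
received = []


def consumer() -> None:
    for _ in range(5):
        received.append(ch.receive())


t = threading.Thread(target=consumer)
t.start()
for i in range(5):
    ch.send(i)   # each send returns only after the consumer has taken the value
t.join()
print(received)  # [0, 1, 2, 3, 4]
```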

Guarded Commands:

G → CL

“G” is referred to as a guard and is a Boolean expression.

CL is a list of commands.

The command list is executed only if the corresponding guard evaluates to true. Otherwise, the guarded command fails.

An input command can be placed in a guard. In such a situation, the guard is not true until the corresponding output command has been executed by some other process.


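Alternative Commands:

[G1 → CL1 □ G2 → CL2 □ G3 → CL3 □ … □ Gn → CLn]

Specifies execution of exactly one of its constituent guarded commands whose guard is true (chosen arbitrarily if several are true); if all guards fail, the alternative command fails.

Repetitive Commands:

*[G1 → CL1 □ G2 → CL2 □ G3 → CL3 □ … □ Gn → CLn]

Specifies repeated executions of its alternative command until the guards of all guarded commands fail.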
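A repetitive command can be simulated in Python by repeatedly collecting the guarded commands whose guards are true and running one of them. The sketch below (function names are illustrative) computes a GCD with the classic two-guard loop `*[x > y → x := x - y □ y > x → y := y - x]`; it models Boolean guards only, not input commands in guards, and `random.choice` stands in for CSP's arbitrary selection when several guards are true.

```python
import random


def repetitive(state: dict, guarded_commands) -> dict:
    """*[G1 -> CL1 [] ... [] Gn -> CLn]: repeat until every guard fails."""
    while True:
        enabled = [cl for guard, cl in guarded_commands if guard(state)]
        if not enabled:                   # all guards false: the loop terminates
            return state
        random.choice(enabled)(state)     # run any guarded command whose guard is true


def x_minus_y(s: dict) -> None:
    s["x"] -= s["y"]


def y_minus_x(s: dict) -> None:
    s["y"] -= s["x"]


def gcd(x: int, y: int) -> int:
    """GCD of two positive integers: *[x > y -> x := x - y [] y > x -> y := y - x]."""
    state = repetitive({"x": x, "y": y}, [
        (lambda s: s["x"] > s["y"], x_minus_y),
        (lambda s: s["y"] > s["x"], y_minus_x),
    ])
    return state["x"]


print(gcd(12, 18))  # 6
```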

Producer-Consumer problem


Ada Concurrent Programming Mechanisms

Ada Packages

The package is the fundamental program building block.

Package Specification

package Name is
    Declaration of visible procedures (headers only), necessary types, constants, and variables.
end;

Package Body

package body Name is
    Declaration of local procedures, types, constants, and variables.
begin
    Initialization statements to execute for each instantiation.
end;


Ada Tasks

A task is a process.

Task Specification

task [type] Name is
    entry specifications
end;

Task Body

task body Name is
    Declaration of local procedures, types, constants, and variables.
begin
    Statements of the task.
exception
    Exception handlers for this task.
end;


An entry specification has the same syntactic structure as the header of a procedure.

Corresponding to an entry are one or more accept statements in the executable part of the task body.

accept Entryname (formal parameters) do
    Statements forming the body of the entry
end Entryname;

An entry E of a task T is called from other tasks with the form:

T.E (parameters)

An accept statement executes when normal processing reaches it and another task has executed a corresponding entry call statement; whichever of the two arrives first waits for the other, and the caller stays suspended until the body of the accept statement has completed.

This synchronization method is known as the Ada rendezvous and is similar to a Remote Procedure Call (RPC).

Ada offers the select statement for asynchronous and nondeterministic processing.
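The rendezvous can be modeled in Python to show why it resembles RPC. The `Entry` class and its `call`/`accept` methods are hypothetical stand-ins for an Ada entry and accept statement: `call` blocks until some `accept` has run the entry body with the caller's arguments, and `accept` blocks until a call arrives, so whichever side arrives first waits for the other.

```python
import threading
from collections import deque


class Entry:
    """Hypothetical model of an Ada task entry: call() blocks until an accept() serves it."""

    def __init__(self) -> None:
        self._cv = threading.Condition()
        self._calls = deque()             # pending entry calls, served in FIFO order

    def call(self, *args):
        request = {"args": args, "result": None, "done": False}
        with self._cv:
            self._calls.append(request)
            self._cv.notify_all()                       # an accepting task may be waiting
            self._cv.wait_for(lambda: request["done"])  # caller suspended until the body ends
            return request["result"]

    def accept(self, body) -> None:
        with self._cv:
            self._cv.wait_for(lambda: len(self._calls) > 0)  # wait for a caller
            request = self._calls.popleft()
        result = body(*request["args"])   # the rendezvous: body runs with the caller's parameters
        with self._cv:
            request["result"], request["done"] = result, True
            self._cv.notify_all()


double = Entry()


def server_task() -> None:
    for _ in range(3):
        double.accept(lambda n: 2 * n)    # roughly: accept Double (...) do ... end Double;


server = threading.Thread(target=server_task)
server.start()
results = [double.call(n) for n in (1, 2, 3)]  # roughly: Server.Double (...)
server.join()
print(results)  # [2, 4, 6]
```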


Path Expressions

A path expression restricts the set of admissible execution histories of the operations on the shared resource so that no incorrect state is ever reached.

It indicates the order in which operations on a shared resource can be interleaved.

The general form of a path expression is:

path <exp> end;

<exp> denotes possible execution histories with the following possible operators:


Sequencing: x ; y

Synchronizes the beginning of y with the completion of x.

Selection: (<exp> + <exp> + … + <exp>)

In some literature: (<exp>, …, <exp>)

Only one of the operations connected by + can be executed at a time.

Concurrency: { }

Any number of instances of the delimited operation can be in execution at a time.

Weak reader’s priority solution:

path {read} + write end

Writer’s priority solution:

path start-read + {start-write ; write} end

path { start-read ; read} + write end
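Because a path expression defines which execution histories are admissible, it can be checked against a recorded trace. This hypothetical Python helper tests a history of start/end events against `path {read} + write end`: any number of reads may overlap one another, but a write may not overlap a read or another write. It checks only that exclusion constraint, not the full semantics of path expressions.

```python
def admissible(history) -> bool:
    """Check a trace of (operation, event) pairs against 'path {read} + write end'.

    operation is "read" or "write"; event is "start" or "end".
    """
    active = {"read": 0, "write": 0}
    for operation, event in history:
        if event == "start":
            if operation == "write" and (active["read"] or active["write"]):
                return False   # '+' selection: a write excludes everything else
            if operation == "read" and active["write"]:
                return False   # reads may start only while no write is active
            active[operation] += 1
        else:
            active[operation] -= 1
    return True


ok_history = [("read", "start"), ("read", "start"),   # {read}: reads overlap freely
              ("read", "end"), ("read", "end"),
              ("write", "start"), ("write", "end")]
bad_history = [("read", "start"), ("write", "start"),  # write begins during a read
               ("write", "end"), ("read", "end")]
print(admissible(ok_history), admissible(bad_history))  # True False
```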


Axiomatic Verification of Parallel Programs

Helps us prove various properties about a parallel program.

Gives us intuitive guidance concerning the development of correct parallel programs.


The language

Cobegin statement

resource r1 (variable list), . . . , rm (variable list):
cobegin S1 || S2 || . . . || Sn coend

Resource ri is a set of shared variables.

S1, S2, . . . , Sn are statements, called processes, to be executed in parallel.

With-when statement (critical section statement)

with r when B do S

A process executing this statement waits until B is true and no other process is in a critical section for r, and then executes S. This reduces the problems caused by concurrent access to shared variables by ensuring that only one process at a time accesses the variables in a resource.
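The with-when statement behaves like a conditional critical region, which can be sketched in Python with a condition variable. `Resource`, `with_when`, and `cobegin` are hypothetical names for this illustration: `with_when` waits until `B` holds and then runs `S` with exclusive access to the resource's variables, and `cobegin` runs its processes in threads and waits for all of them. The example keeps a shared `count` between 0 and 2.

```python
import threading


class Resource:
    """A resource r: a set of shared variables plus the lock that guards them."""

    def __init__(self, **variables) -> None:
        self.vars = dict(variables)
        self._cv = threading.Condition()

    def with_when(self, B, S) -> None:
        """with r when B do S: wait until B holds, then run S with exclusive access to r."""
        with self._cv:
            self._cv.wait_for(lambda: B(self.vars))
            S(self.vars)
            self._cv.notify_all()   # S may have made another process's B true


def cobegin(*processes) -> None:
    """cobegin S1 || ... || Sn coend: run the processes in parallel and wait for all."""
    threads = [threading.Thread(target=p) for p in processes]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


r = Resource(count=0, produced=0)


def put(v: dict) -> None:
    v["count"] += 1
    v["produced"] += 1


def take(v: dict) -> None:
    v["count"] -= 1


def producer() -> None:
    for _ in range(10):
        r.with_when(lambda v: v["count"] < 2, put)


def consumer() -> None:
    for _ in range(10):
        r.with_when(lambda v: v["count"] > 0, take)


cobegin(producer, consumer)
print(r.vars)  # {'count': 0, 'produced': 10}
```

Here the resource invariant 0 ≤ count ≤ 2 holds whenever no process is inside a critical section for r.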


The Axioms

The invariant for a resource r, I(r), describes the acceptable states of the resource.

I(r) must be true before execution of the parallel program begins, and must remain true during its execution except while the critical section for r is being executed.

Parallel Execution Axiom

If $\{P_1\}\ S_1\ \{Q_1\}, \ldots, \{P_n\}\ S_n\ \{Q_n\}$, no variable free in $P_i$ or $Q_i$ is changed in $S_j$ ($i \neq j$), and all variables in $I(r)$ belong to resource $r$, then

$$\{P_1 \wedge P_2 \wedge \cdots \wedge P_n \wedge I(r)\}\ \ \textbf{resource } r\textbf{: cobegin } S_1 \parallel S_2 \parallel \cdots \parallel S_n \textbf{ coend}\ \ \{Q_1 \wedge Q_2 \wedge \cdots \wedge Q_n \wedge I(r)\}$$

Critical Section Axiom

If $\{I(r) \wedge P \wedge B\}\ S\ \{I(r) \wedge Q\}$, $I(r)$ is the invariant of the cobegin statement for which $S$ is a process, and no variable free in $P$ or $Q$ is changed in any other process, then

$$\{P\}\ \textbf{with } r \textbf{ when } B \textbf{ do } S\ \{Q\}$$
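As a small worked instance of the two axioms (an illustrative example, not from the source), take one shared variable x with invariant I(r): x ≥ 0 and two processes that each add to x inside a critical section. All P_i and Q_i are simply true, so the non-interference conditions hold trivially. The derivation proves only that x stays non-negative; proving its exact final value would need auxiliary variables.

```latex
% Program:   resource r(x): cobegin S_1 || S_2 coend, where
%            S_1 = with r when true do x := x + 1,  S_2 = with r when true do x := x + 2
% Invariant: I(r) \equiv x \ge 0, \qquad P_1 = Q_1 = P_2 = Q_2 = \mathit{true}

% Step 1 (assignment axiom plus consequence, since x \ge 0 \Rightarrow x + 1 \ge 0 and x + 2 \ge 0):
\{x \ge 0 \wedge \mathit{true} \wedge \mathit{true}\}\; x := x + 1 \;\{x \ge 0 \wedge \mathit{true}\}
\{x \ge 0 \wedge \mathit{true} \wedge \mathit{true}\}\; x := x + 2 \;\{x \ge 0 \wedge \mathit{true}\}

% Step 2 (Critical Section Axiom):
\{\mathit{true}\}\; \textbf{with } r \textbf{ when } \mathit{true} \textbf{ do } x := x + 1 \;\{\mathit{true}\}
\{\mathit{true}\}\; \textbf{with } r \textbf{ when } \mathit{true} \textbf{ do } x := x + 2 \;\{\mathit{true}\}

% Step 3 (Parallel Execution Axiom; true has no free variables and x belongs to r):
\{x \ge 0\}\; \textbf{resource } r\textbf{: cobegin } S_1 \parallel S_2 \textbf{ coend} \;\{x \ge 0\}
```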
