Semaphores


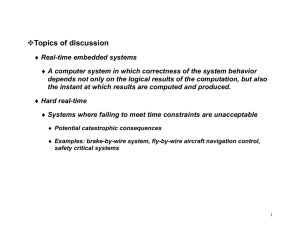

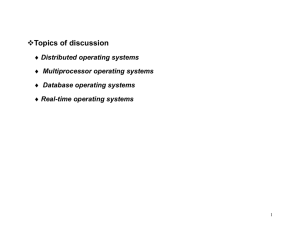

Topics of discussion

Real-time embedded systems

- A computer system in which the correctness of the system behavior depends not only on the logical results of the computation, but also on the instant at which the results are computed and produced.
- Hard real-time: systems in which failing to meet a time constraint is unacceptable.
  - Potential for catastrophic consequences.
  - Examples: brake-by-wire systems, fly-by-wire aircraft navigation control, safety-critical systems.
- Soft real-time: systems in which, under certain circumstances, a certain amount of delay can be tolerated; the delay is acceptable but not desirable.

Distributed Hard Real-Time Embedded Systems

Hard real-time and safety-critical systems

- Heterogeneous distributed systems with heterogeneous processing nodes.
- Several different networks interconnected with each other.
- Two such networks are connected and communicate via a gateway.
- Each function may not be running on a dedicated processing node.

Middleware software

- Used to abstract software functions from the hardware differences of the processing nodes in a heterogeneous embedded system.
- Applications distributed across networks.
- Applications distributed on the same network.
- Or even on the same processing node.

Hard real-time and safety-critical embedded systems are the main focus of the book

- Additional topics added:
  - Distributed systems.
  - Topics in soft real-time software based on the Linux and VxWorks operating systems.
  - A topic on soft real-time Java.

Analysis and synthesis of safety-critical distributed applications implemented using hard real-time embedded systems

- Architecture selection.
- Hardware platform selection and platform-based design.
- Software function specification, using control and dataflow graphs.
- Task mapping and schedule mapping.
- Inter-node and inter-process communications.
- Unified Modeling Language (UML), used for Object-Oriented Analysis (OOA) and Object-Oriented Design (OOD).

The design tasks have to be performed such that the timing constraints of hard real-time applications are satisfied and implementation costs are minimized

- The design of real-time systems, or of any software system, very seldom starts from scratch.
- Systems typically start from an already existing system.
- Software reuse is based on middleware software and a common hardware platform design.
- All systems must be structured such that additional functionality can easily be added and accommodated in the future.
- Incremental design process.

Time-driven systems

- Activation of processes and transmission of messages happen at predetermined points in time.
- Communication protocols used:
  - Time Division Multiple Access (TDMA)
  - Controller Area Network (CAN) protocol
  - Time-Triggered Protocol (TTP)
- Non-preemptive static scheduling approach for both processes and messages.

Event-driven systems

- Activation of processes is done at the occurrence of significant events.

Multi-cluster systems

- Combine time-driven and event-driven systems into a heterogeneous network.

Concurrent Processes

- Two processes are concurrent if their execution can overlap in time.
- In multi-processor systems, concurrent processes can use different CPUs at the same time.
- In single-processor systems, concurrent processing can be thought of as one process using the CPU while another process uses system I/O, etc.
- If a CPU interleaves the execution of several processes, logical concurrency is obtained.
- Processes are serial if the execution of one must be complete before the execution of the other can start.
- Concurrent processes interact using the following mechanisms:
  - Shared variables.
  - Message passing.

Threads

- Threads are mini processes within a process and therefore share the address space of that process.
- Every process has at least one thread.
- A thread executes a portion of the program and cooperates with other threads executing concurrently within the same process and address space.
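As a minimal sketch of the statement that threads within one process share its address space, the Python example below starts a few threads that all write into the same dictionary object. The function name `run_shared_address_space` and the `worker-N` thread names are illustrative assumptions, not part of the slides. Each thread writes to its own key, so this particular example needs no mutual exclusion.

```python
import threading


def run_shared_address_space(n_threads=4):
    """Start n_threads threads that all write into one shared dictionary."""
    shared = {}  # a single object, visible to every thread of this process

    def worker(index):
        # Each thread records its own name under a distinct key.
        shared[index] = threading.current_thread().name

    threads = [
        threading.Thread(target=worker, args=(i,), name=f"worker-{i}")
        for i in range(n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every thread has finished
    return shared


if __name__ == "__main__":
    print(run_shared_address_space())
```

No copying or message passing is involved: the main thread reads the results directly from the object the worker threads modified.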
- Because threads share the same address space, it is more efficient to perform a context switch between two threads of the same process than between two separate processes.
- Each thread has its own program counter and control block.

Critical Sections

- In multiprocessing systems, processes or threads may compete with each other for system resources that can only be used exclusively.
  - The CPU is a good example of this type of resource, as only one process or thread can execute instructions on a single CPU at a time.
- Processes may also cooperate with each other by using shared memory or message passing.
- In both cases, mutual exclusion is necessary to ensure that only one process or thread at a time holds a resource or modifies shared memory.
- A critical section is a code segment in a process in which some shared resource is accessed.

A solution to the mutual exclusion problem must satisfy the following requirements:

- Only one process can execute its critical section at any one time.
- When no process is executing in its critical section, any process that requests entry to its critical section must be permitted to enter without delay.
- When two or more processes compete to enter their respective critical sections, the selection cannot be postponed indefinitely (no deadlock).
- No process can prevent any other process from entering its critical section indefinitely (no starvation).
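The first requirement above (only one thread in its critical section at a time) can be sketched in Python as follows. This is an illustration under assumptions: the function name `run_counter` and the thread and iteration counts are made up for the example, and `threading.Lock` stands in for whatever mutual exclusion primitive a given system provides.

```python
import threading


def run_counter(n_threads=4, increments=1000):
    """Increment one shared counter from several threads inside a critical section."""
    counter = 0
    lock = threading.Lock()  # guards the shared variable `counter`

    def worker():
        nonlocal counter
        for _ in range(increments):
            with lock:        # enter the critical section
                counter += 1  # read-modify-write of shared memory
            # the lock is released here, leaving the critical section

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(run_counter())
```

The critical section contains only the update of the shared variable, which keeps the time other threads spend waiting as short as possible.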
Some Mechanisms for Ensuring Mutual Exclusion

- Polling or busy waiting
- Disabling interrupts
- Semaphores

Semaphores

A semaphore is an integer variable S and an associated group of waiting processes, on which only two operations may be performed:

Wait operation P(S):
    if S ≥ 1 then
        S := S - 1
    else
        the executing process waiting for the semaphore S joins the wait queue for S and forfeits the resource
    endif

Signal operation V(S):
    if the wait queue for S is not empty then
        remove a process from the queue and give it the resource
    else
        S := S + 1
    endif

A semaphore can be used for synchronization and mutual exclusion.

- Synchronization: coordination of various tasks or processes.
- Mutual exclusion: protection of one or more resources, or implementation of critical sections, among several competing processes sharing the critical section.

There are three types of semaphores:

- Binary semaphores
- Mutual exclusion semaphores (mutex)
- Counting semaphores

Binary Semaphores

- Simplest form of the semaphore.
- Primarily used for synchronization.
- Can also be used for mutual exclusion.
- Using a binary semaphore, a process can wait (block or pend) on an event which triggers the release of a semaphore.
- Should be used in place of polling.
  - Polling is an inefficient way of synchronizing or waiting for an event because it consumes processor resources.
  - Using semaphores, the process is in a blocked or pended state while other processes use the processor.
  - Upon the occurrence of the event, the pended process becomes ready to execute.

Mutual Exclusion Semaphores

- Used to provide resource consistency in a system for resources shared simultaneously by multiple tasks.
  - Shared resources can be shared data, files, hardware, devices, etc.
- To protect against inconsistency in a multi-tasking system, each task must obtain an exclusive access right to a shared resource.
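The P(S) and V(S) operations defined earlier in this section can be sketched in Python as below. This is one possible reading of the pseudocode, not a reference implementation: the class name `QueueSemaphore`, the FIFO ordering of the wait queue, and the use of `threading.Event` objects as per-waiter gates are all assumptions made for illustration. The demo uses the semaphore as a binary semaphore created empty, so that a task pends until an event is signaled.

```python
import threading
from collections import deque


class QueueSemaphore:
    """An integer S plus a wait queue, following the P(S)/V(S) pseudocode."""

    def __init__(self, initial=1):
        self._value = initial            # the integer variable S
        self._waiters = deque()          # wait queue (FIFO order is an assumption)
        self._lock = threading.Lock()    # protects _value and _waiters

    def P(self):
        with self._lock:
            if self._value >= 1:
                self._value -= 1         # semaphore available: take it
                return
            gate = threading.Event()     # otherwise join the wait queue
            self._waiters.append(gate)
        gate.wait()                      # block without holding the lock

    def V(self):
        with self._lock:
            if self._waiters:
                # Hand the semaphore straight to the first waiter; S is unchanged.
                self._waiters.popleft().set()
            else:
                self._value += 1


def run_event_demo():
    """A task pends on an empty binary semaphore until the event occurs."""
    event_sem = QueueSemaphore(initial=0)
    log = []

    def waiting_task():
        event_sem.P()                    # pend here instead of polling
        log.append("handled")

    task = threading.Thread(target=waiting_task)
    task.start()
    log.append("event")                  # the event occurs ...
    event_sem.V()                        # ... and releases the semaphore
    task.join()
    return log


if __name__ == "__main__":
    print(run_event_demo())
```

While the waiting task is blocked in `P()`, it consumes no processor time, which is the advantage over polling noted in the binary semaphore discussion.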
- One common problem that can occur when a multi-tasking system shares resources simultaneously without properly designed mutual exclusion is known as a "race condition".
  - The system behavior differs depending on which task gets to the resource first.
  - Race conditions can go undetected for a very long time in a system and can be very difficult to detect and fix.
- When using mutual exclusion semaphores, be aware of race conditions and deadlock.
  - Use only one mutual exclusion semaphore to protect resources that are used together.
- When using mutual exclusion semaphores, avoid starvation situations.
  - Do not include unnecessary code in the critical section.
- Mutual exclusion semaphores cannot be used in interrupt service routines.

Counting Semaphores

- Yet another means of implementing task synchronization and mutual exclusion.
- A counting semaphore is just like a binary semaphore, except that it keeps track of the number of times the semaphore has been given or taken.
  - Counting semaphores use a counter to keep track of the semaphores, hence the name.
  - The semaphore is created with a fixed number of semaphores available.
  - Every time a semaphore is taken, the semaphore count is decremented.
  - When a semaphore is given back, if a task is blocked on the semaphore, that task takes the semaphore. Otherwise, the semaphore count is incremented.
- Counting semaphores are generally used to guard resources with multiple instances.

Classical Synchronization Problems

- The dining philosophers problem
- The producer-consumer problem
- The readers-writers problem
  - Readers' priority
  - Writers' priority
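As a hedged illustration of counting semaphores applied to one of the classical problems listed above, the sketch below solves a single-producer, single-consumer bounded buffer problem in Python. The function name `run_producer_consumer`, the buffer capacity of 3, and the item count are assumptions chosen for the example. Two counting semaphores track free and filled buffer slots, and a separate lock provides mutual exclusion on the buffer itself.

```python
import threading
from collections import deque


def run_producer_consumer(n_items=10, capacity=3):
    """Pass n_items through a bounded buffer guarded by counting semaphores."""
    buffer = deque()
    mutex = threading.Lock()                     # mutual exclusion on the buffer
    empty_slots = threading.Semaphore(capacity)  # counts free slots
    full_slots = threading.Semaphore(0)          # counts filled slots
    consumed = []
    max_fill = 0                                 # largest buffer size observed

    def producer():
        nonlocal max_fill
        for item in range(n_items):
            empty_slots.acquire()                # take a free slot, or block
            with mutex:
                buffer.append(item)
                max_fill = max(max_fill, len(buffer))
            full_slots.release()                 # give one filled slot

    def consumer():
        for _ in range(n_items):
            full_slots.acquire()                 # take a filled slot, or block
            with mutex:
                item = buffer.popleft()
            empty_slots.release()                # give the slot back
            consumed.append(item)

    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed, max_fill


if __name__ == "__main__":
    items, peak = run_producer_consumer()
    print(items, peak)
```

The `empty_slots` semaphore is created with as many counts as the buffer has slots, which matches the use of counting semaphores to guard a resource with multiple instances.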