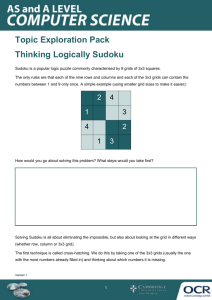

How to Solve Sudoku By Computer


Let me first remind you about the rules of the puzzle game Sudoku: the numbers 1 to 9 have to be placed in the squares in such a way that each row, each column and each 3 x 3 block of squares contains each of those numbers exactly once. A number of squares are given. I handed out two examples (one easy, one hard) for you to try if you get bored with my talk.

But, as you will probably have guessed, this talk is not really about Sudoku. In fact, I am going to use Sudoku as an example of the use of probability theory. Indeed, I will show that the computer can solve Sudoku by making clever guesses!

To see that apparently random guesses can in fact give concrete answers to specific questions, let me first show a simple example. Who knows the area of a circle? It is πr², where r is the radius. One can approximate the value of π by randomly placing a large number of points in a square and counting the number that land inside the circle inscribed in it. Since the ratio of the area of the circle to the area of the square is π/4, it follows that π ≈ 4n/N, where n is the number of points inside the circle and N is the total number of points. This demonstrates that there are in fact Laws of Probability!

The first pioneers of Probability Theory were Fermat, Pascal, Huygens and Bernoulli. Fermat and Pascal exchanged letters about the relation between counting and chances. They realised that the odds of obtaining a certain result are greater if the number of different ways in which this result can be obtained is greater. For example, in throwing two dice, a total of 9 is four times as likely as a total of 2, because 9 can be obtained in 4 different ways: 9 = 6 + 3, 9 = 5 + 4, 9 = 4 + 5 and 9 = 3 + 6. (Of course, 2 can only be obtained as 2 = 1 + 1.) This led Pascal to introduce his famous triangle, in which each number is the sum of the two numbers above it:

1
1 1
1 2 1
1 3 3 1
1 4 6 4 1
1 5 10 10 5 1
1 6 15 20 15 6 1

and so on.
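A minimal Python sketch of the circle experiment, assuming points drawn uniformly from the square [-1, 1] x [-1, 1] with the circle of radius 1 inscribed in it. The function name `estimate_pi` and the fixed random seed are illustrative choices, not part of the talk.

```python
import math
import random


def estimate_pi(n_points, seed=0):
    """Hit-or-miss Monte Carlo estimate of pi.

    Points are drawn uniformly from the square [-1, 1] x [-1, 1]; the circle of
    radius 1 inscribed in it covers a fraction pi/4 of the square's area.
    """
    rng = random.Random(seed)  # fixed seed so a run is reproducible
    inside = 0
    for _ in range(n_points):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:  # the point lies inside (or on) the circle
            inside += 1
    # n/N is close to pi/4, so pi is close to 4 n / N
    return 4.0 * inside / n_points


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        est = estimate_pi(n)
        print(n, est, "error:", abs(est - math.pi))
```

The error typically shrinks like 1/√N, so each extra correct digit of π costs roughly a hundred times more points.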
This has the following meaning. Suppose one throws a coin n times and counts the number of times a head comes up. Then the k-th number in row n of the triangle (counting the top row as row 0) tells us how much more likely it is that the number of heads is k-1 rather than 0. For example, if n = 6 then it is 15 times more likely that 2 heads come up rather than none.

The first published account of the mathematics of chance was by Huygens, a Dutch astronomer. I have heard that many of the early probabilists were astronomers, but I don't know if that is true. Huygens also had good contacts with Leibniz, whom he instructed about probability. Jacob Bernoulli, a Swiss mathematician, learned much from Leibniz's work and corresponded with him. The Bernoulli family was a true dynasty of mathematicians, several of whom made important contributions. Jacob Bernoulli developed probability theory further. In particular, he investigated the coin-tossing problem in more detail and, using Pascal's triangle, proved the so-called Law of Large Numbers. This law says that as the number n of tosses increases, it becomes more and more likely that the fraction of heads is close to 1/2, i.e. that the number of heads differs from n/2 by only a small fraction of n. In fact, he proved more: if the coin is not fair, i.e. if a head has a probability p different from 1/2, then after many tosses the fraction of heads will be close to p (the number of heads close to np) with high probability.

Probability theory became a mature subject in the 18th and 19th centuries. Random variables with a continuum of possible values were also introduced then. Gauss in particular made the bell-shaped curve famous. The Central Limit Theorem, first proved in special cases by de Moivre and Laplace, says roughly that for large n the deviations from the mean, measured in units of √n, are distributed approximately according to the bell-shaped curve. The bell-shaped curve was first applied in physics in 1860 by Maxwell. He argued that the distribution of the molecular velocities in a gas should also be a bell-shaped curve because these velocities are the result of many independent collisions.
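The counting arguments above can be checked by brute force. In this sketch the helper names are hypothetical: `pascal_rows` builds the triangle by adding neighbours, `ways_by_heads` enumerates all 2^n equally likely head/tail sequences, `ways_by_dice_total` enumerates the 36 outcomes of two dice, and `fraction_of_heads` simulates a possibly unfair coin to illustrate the Law of Large Numbers.

```python
import random
from collections import Counter
from itertools import product
from math import comb


def pascal_rows(n_max):
    """Rows 0..n_max of Pascal's triangle; each inner entry is the sum of the two above it."""
    rows = [[1]]
    for _ in range(n_max):
        prev = rows[-1]
        rows.append([1] + [a + b for a, b in zip(prev, prev[1:])] + [1])
    return rows


def ways_by_heads(n_tosses):
    """How many of the 2**n_tosses equally likely H/T sequences contain k heads, for each k."""
    return Counter(seq.count("H") for seq in product("HT", repeat=n_tosses))


def ways_by_dice_total():
    """How many of the 36 equally likely outcomes of two dice give each total."""
    return Counter(a + b for a, b in product(range(1, 7), repeat=2))


def fraction_of_heads(n_tosses, p=0.5, seed=0):
    """Simulate n_tosses of a coin that shows heads with probability p."""
    rng = random.Random(seed)
    heads = sum(rng.random() < p for _ in range(n_tosses))
    return heads / n_tosses


if __name__ == "__main__":
    print(pascal_rows(6)[6])                # [1, 6, 15, 20, 15, 6, 1]
    heads = ways_by_heads(6)
    print(heads[2] / heads[0], comb(6, 2))  # 15.0 15
    dice = ways_by_dice_total()
    print(dice[9], dice[2])                 # 4 1
    for n in (10, 1_000, 100_000):
        print(n, fraction_of_heads(n, p=0.3))  # tends towards 0.3 as n grows
```

The enumeration confirms that the entries of row n are exactly the binomial coefficients `comb(n, k)`, i.e. the number of ways of getting k heads in n tosses.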
Boltzmann generalised this law to general systems at equilibrium. He realised that Maxwell's distribution is of the form exp(-E/T), where T is the (absolute) temperature of the gas and E is the total kinetic energy of the molecules (in units where Boltzmann's constant equals 1). He then argued that this should be true in general, provided the potential energy is taken into account.

A proper axiomatic approach to probability was developed only at the beginning of the 20th century, first by Borel and Cantelli, and in its final form by the great Russian mathematician Andrey N. Kolmogorov. He postulated a few basic axioms and was then able to derive very general results. For example, building on earlier work of Chebyshev, he proved a very general version of the Law of Large Numbers, valid not just for coin tossing but for averages of any independent, identically distributed random variables with a finite mean. This law is also the basis of the Monte Carlo method for approximating π: as N increases, the number of points inside the circle approaches its average or expected value Nπ/4 (more precisely, the fraction n/N approaches π/4) with high probability.

Can we apply the same technique to solving Sudoku? That is, could we simply guess the answer? Let us count the number of possible combinations. In the first example, the first block of 9 squares has 6 unknown squares, the second 4, and so on. If we guess an arbitrary order for the remaining numbers in each block, we have 6! = 6 x 5 x 4 x 3 x 2 x 1 = 720 possibilities for the first block, 4! = 24 for the second, and so on, and therefore the total number of possible choices is

6! x 4! x 6! x 7! x 5! x 7! x 6! x 4! x 6! = 471840035721707520000000 ≈ 4.7 x 10^23.

If a computer could check each possibility in 1 nanosecond, it would take about 4.7 x 10^14 seconds, i.e. about 15 million years, to check all possibilities! This does not sound like a good idea! However, there is a better way. This method has its roots in physics.
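The count of candidate grids is easy to reproduce. This sketch takes the per-block numbers of unknown squares listed above for the first example and assumes, as in the text, one nanosecond per check; the constant names are illustrative.

```python
from math import factorial, prod

# Number of unknown squares in each of the nine 3 x 3 blocks of the first example,
# as listed in the text (6 for the first block, 4 for the second, and so on).
UNKNOWN_PER_BLOCK = [6, 4, 6, 7, 5, 7, 6, 4, 6]

# A block with k unknown squares can be filled in k! orders, and the blocks are
# filled independently, so the counts multiply.
total = prod(factorial(k) for k in UNKNOWN_PER_BLOCK)

NS_PER_CHECK = 1e-9  # assumption from the text: one nanosecond per candidate grid
SECONDS_PER_YEAR = 365.25 * 24 * 3600

seconds = total * NS_PER_CHECK
years = seconds / SECONDS_PER_YEAR

print(f"{total} candidate grids (about {total:.1e})")
print(f"{seconds:.1e} seconds, about {years / 1e6:.0f} million years")
```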
When modern computers were still in their infancy, and few places had access to one, Ulam proposed that one might use them to compute the properties of materials from first principles by simulation. Any material, however, contains an enormous number of molecules, and tracking the motion of each would be impossible. Even if one assumes that the properties do not change significantly when one considers only a small number of molecules, say 1000 or so, the number of possible motions is still huge. Therefore, Mayer and Ulam independently suggested taking an average over a random sample of possible motions. This seems like a reasonable idea, but it is still not good enough. N. Metropolis et al. found a better way of sampling.

To illustrate the basic problem, consider the following example. Suppose we want to approximate the area underneath the curve of the function e^(-x), say from 0 to 100. If we enclose it by a rectangle as before and choose points at random, most of them will lie above the graph of the function, and it will take a long time to get a reasonable approximation. This is because for most values of x the function value is tiny, so a random point (x, y) is very unlikely to have y below the graph.

Metropolis et al. realised that the same is true for the motion of the molecules: certain motions are much more likely than others. Boltzmann had shown that, if the material is at a given temperature T, the likelihood of a particular molecular motion is proportional to the Boltzmann factor exp(-E/T), where E is the total energy of all molecular motions. (This is conserved during the motion.) Therefore, one should make sure that motions with a relatively large Boltzmann factor are chosen proportionately more often than those with a small Boltzmann factor. This is achieved by the Metropolis algorithm, which is used nowadays in a multitude of applications. Let us now see how to apply this method to the problem of solving Sudoku.
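Before turning to Sudoku, here is a minimal sketch of the Metropolis rule on a toy system with a handful of states of given energy (the energies and function names are made up for illustration). It uses the simplest symmetric proposal, picking any state uniformly at random, and accepts a move that raises the energy by dE only with probability exp(-dE/T); the visit frequencies then approach the Boltzmann probabilities.

```python
import math
import random
from collections import Counter


def metropolis_counts(energies, temperature, n_steps, seed=0):
    """Visit counts of a Metropolis random walk over a finite set of states.

    State i has energy energies[i]. Each step proposes a state chosen uniformly at
    random (a symmetric proposal) and accepts it with probability
    min(1, exp(-dE / T)). In the long run each state is visited with frequency
    proportional to its Boltzmann factor exp(-E / T).
    """
    rng = random.Random(seed)
    state = 0
    counts = Counter()
    for _ in range(n_steps):
        proposal = rng.randrange(len(energies))
        d_e = energies[proposal] - energies[state]
        # Downhill (or level) moves are always accepted; uphill moves only sometimes.
        if d_e <= 0 or rng.random() < math.exp(-d_e / temperature):
            state = proposal
        counts[state] += 1  # a rejected move counts the current state again
    return counts


def boltzmann_probabilities(energies, temperature):
    """Exact probabilities proportional to exp(-E / T), for comparison."""
    weights = [math.exp(-e / temperature) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]


if __name__ == "__main__":
    energies = [0.0, 1.0, 2.0, 4.0]
    n = 200_000
    counts = metropolis_counts(energies, temperature=1.0, n_steps=n)
    for i, p in enumerate(boltzmann_probabilities(energies, 1.0)):
        print(f"state {i}: sampled {counts[i] / n:.3f}, Boltzmann {p:.3f}")
```

Note that the sampler never computes the normalising sum: only energy differences enter the acceptance rule, which is what makes the method practical when the number of states is astronomically large.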
First we need to assign a fictitious 'energy' to every possible choice of the numbers in each block. We do this by counting, for each square, the number of other squares in the same row or the same column that contain the same number, and adding the results for all squares. Obviously, the higher this number, the further we are from a solution in some sense; a correct solution has energy 0. We now want to choose successive assignments of the numbers, similar to successive movements of molecules. We do this by repeatedly interchanging two numbers within a 3 x 3 block (only numbers that were not given, of course), so that every block always contains each of the numbers 1 to 9 exactly once.

The next problem is what value to take for the 'temperature'. Here the analogy with molecular motion is useful. If the temperature is high, the molecules move vigorously, which is reflected in the fact that the Boltzmann factor of many possible molecular movements is large, so that there are many equally likely movements. At low temperature, very few movements are possible: the molecules are nearly fixed. Given an arbitrary initial guess for the numbers in each block, if we take the temperature too low, it will take a long time to find a move with a large Boltzmann factor. On the other hand, if the temperature is too high, many moves are allowed, but this does not distinguish well enough between 'good' and 'bad' moves. Curiously, taking a temperature somewhere in between will not do either! This can be illustrated by the following 'graph':

In fact, the best way to find the pattern with the minimal energy is to gradually decrease the temperature. This procedure is well known in materials science, where it is called annealing and is used in practice to obtain perfect crystals. The method explained here is used in many applications, e.g. in elementary particle physics, to calculate the predicted outcome of experiments; in biology, to determine probable relations between gene expression and disease; and in pattern recognition programs of various kinds.
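Putting the pieces together, here is a minimal simulated-annealing sketch in Python. The energy and the swap move follow the description above; the geometric cooling schedule (multiply T by `cooling` after every step, never going below `t_end`) and all parameter values are assumptions for illustration, not tuned settings. The example grid is generated from a formula for a valid Sudoku with a few squares blanked out (0 marks an empty square), since the handout puzzles are not reproduced here.

```python
import math
import random
from collections import Counter


def energy(grid):
    """For every square, count the other squares in its row or column holding the same
    number, and add everything up. A number occurring n times in a row (or column)
    contributes n * (n - 1). A solution has energy 0."""
    lines = [list(row) for row in grid] + [list(col) for col in zip(*grid)]
    return sum(n * (n - 1) for line in lines for n in Counter(line).values())


def block_cells(br, bc):
    """The 9 (row, column) pairs of the 3 x 3 block whose top-left square is (br, bc)."""
    return [(r, c) for r in range(br, br + 3) for c in range(bc, bc + 3)]


def initial_guess(puzzle, rng):
    """Fill each block's empty squares with that block's missing numbers in random order."""
    grid = [row[:] for row in puzzle]
    for br in range(0, 9, 3):
        for bc in range(0, 9, 3):
            cells = block_cells(br, bc)
            present = {grid[r][c] for r, c in cells}
            missing = [v for v in range(1, 10) if v not in present]
            rng.shuffle(missing)
            for r, c in cells:
                if grid[r][c] == 0:
                    grid[r][c] = missing.pop()
    return grid


def anneal(puzzle, t_start=2.0, t_end=0.05, cooling=0.9999, max_steps=200_000, seed=0):
    """Metropolis swaps inside blocks while the temperature slowly falls.
    Returns (grid, energy); energy 0 means the grid is a solution."""
    rng = random.Random(seed)
    grid = initial_guess(puzzle, rng)
    # Only squares that were not given may be swapped; keep blocks with >= 2 of them.
    free_blocks = []
    for br in range(0, 9, 3):
        for bc in range(0, 9, 3):
            free = [(r, c) for r, c in block_cells(br, bc) if puzzle[r][c] == 0]
            if len(free) >= 2:
                free_blocks.append(free)
    e = energy(grid)
    t = t_start
    for _ in range(max_steps):
        if e == 0 or not free_blocks:
            break
        (r1, c1), (r2, c2) = rng.sample(rng.choice(free_blocks), 2)
        grid[r1][c1], grid[r2][c2] = grid[r2][c2], grid[r1][c1]
        new_e = energy(grid)
        d_e = new_e - e
        if d_e <= 0 or rng.random() < math.exp(-d_e / t):
            e = new_e  # accept the swap
        else:
            grid[r1][c1], grid[r2][c2] = grid[r2][c2], grid[r1][c1]  # reject: undo it
        t = max(t * cooling, t_end)  # cool down gradually
    return grid, e


if __name__ == "__main__":
    # Hypothetical test grid: a valid solution from a formula, with six squares blanked.
    solution = [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]
    blanks = {(0, 0), (0, 1), (4, 4), (4, 5), (8, 7), (8, 8)}
    puzzle = [[0 if (r, c) in blanks else solution[r][c] for c in range(9)] for r in range(9)]
    grid, e = anneal(puzzle)
    print("energy:", e)
    for row in grid:
        print(*row)
```

Recomputing the full energy after each swap keeps the code short but is slow; a faster version could update only the two rows and two columns touched by a swap. For hard puzzles the search can also get stuck in a state with small positive energy, in which case one can restart with another seed or cool more slowly.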
As a final note, the method is also useful for computing an optimal table arrangement at dinners or a seating plan for offices as suggested in the following cartoon: