

Video Classification Using Normalized Information Distance

Khalid Kaabneh

Graduate College of Computer Studies

Amman Arab University for Graduate Studies

Amman-Jordan

Abstract— A vast collection of multimedia resources is now available on the net. This has created an opportunity for researchers to explore and advance the science of storing, handling, and retrieving digital videos. Video classification and segmentation are fundamental steps for efficiently accessing, retrieving, browsing, and compressing large amounts of video data. The basic operation in video analysis is to design a system that can accurately and automatically segment video material into shots and scenes. This paper presents a video segmentation technique that builds on previous research, which lacked performance. Because some videos are stored in compressed form, the technique uses the Normalized Information Distance (NID), which approximates the value of a theoretical distance between objects using Kolmogorov complexity theory. The technique produced better results in terms of performance, with high recall and precision.

Keywords— Shot-Boundary Detection, Kolmogorov

complexity, Normalized Information Distance.

Asmaa Abdullah

Zeineb Al-Halalemah

Amman-Jordan

I. INTRODUCTION

Indexing and retrieval of digital video is an active research area in computer science. The increasing availability and use of on-line video has led to a demand for efficient and accurate automated video analysis techniques. In videos, a shot is an unbroken sequence of frames from one camera, whereas a scene is defined as a collection of one or more adjoining shots that focus on an object or objects of interest. The segmentation of video into scenes is far more desirable than simple shot boundary detection [2]. This is because people generally visualize video as a sequence of scenes, not of shots, so shots are really a phenomenon peculiar to video only.

A number of segmentation systems have been devised based on image regions and features such as color, size, shape, and others [ ]. Some techniques make use of color histogram comparison or motion tracking.

This paper investigates the method known as Normalized Information Distance (NID). NID approximates the value of a theoretical distance between frames using Kolmogorov complexity. Kolmogorov complexity is a non-computable function that can be used to measure the similarity between objects, data or anything that can be encoded as finite binary strings. The Kolmogorov complexity K(x) of x is defined as the length of the shortest program that can produce x.

The conditional Kolmogorov complexity of x given y, K(x | y), is the length of the shortest program that produces x when y is given as input [8]. Because Kolmogorov complexity cannot be computed, the Normalized Information Distance (NID) is approximated using binary data and standard compression algorithms. The distance between two images using NID is calculated as:

$$
d(x, y) = \frac{\max\{\,|c(xy)| - |c(y)|,\; |c(yx)| - |c(x)|\,\}}{\max\{\,|c(x)|,\; |c(y)|\,\}} \qquad (1)
$$

where |c(x)| and |c(y)| are the lengths of the compressed data for x and y respectively. |c(xy)| is the length of the compressed data for the concatenation of x and y, and similarly for |c(yx)|. Lower values of d(x, y) indicate a smaller “distance” between the two images, indicating that some similarity has been detected. The question is whether a small distance from this calculation will translate into relevance between the two images.
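As a minimal sketch of how the compression-based distance d(x, y) could be computed, the following Python code uses zlib as the compressor. The choice of zlib, the function names, and the synthetic byte strings standing in for frame data are all assumptions made for illustration; the paper does not state which compressor was used.

```python
import random
import zlib


def compressed_len(data: bytes) -> int:
    """Return |c(data)|: the byte length of the zlib-compressed data (assumed compressor)."""
    return len(zlib.compress(data, 9))


def nid(x: bytes, y: bytes) -> float:
    """Approximate d(x, y) from compressed lengths, following the formula in the text."""
    cx, cy = compressed_len(x), compressed_len(y)
    cxy, cyx = compressed_len(x + y), compressed_len(y + x)
    return max(cxy - cy, cyx - cx) / max(cx, cy)


# Synthetic stand-ins for two unrelated frames (hypothetical data, not real video).
rng = random.Random(0)
frame_a = bytes(rng.randrange(256) for _ in range(4096))
frame_b = bytes(rng.randrange(256) for _ in range(4096))

print("d(a, a) =", nid(frame_a, frame_a))  # identical frames: small distance
print("d(a, b) =", nid(frame_a, frame_b))  # unrelated frames: larger distance
```

A general-purpose compressor with a limited window only approximates the theoretical quantity, so the values depend on the compressor and on the size of the inputs.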

II. RELATED WORK

A great deal of research has been done on content analysis and segmentation of video using different techniques. In this paper, we build on our previous work, in which we experimented with different segmentation techniques [a, b], and on work done by other researchers, such as:

Zhang, Kankanhalli, and Smoliar used a pixel-based difference method, which is one of the easiest ways to detect whether two adjacent frames are significantly different [2,3].

Kasturi and Jain used statistical methods that expand on the idea of pixel differences by breaking the images into regions and comparing statistical measures of the pixels in those regions [4].

Zabih et al. compared the number and position of edges in successive video frames, allowing for global camera motion by aligning edges between frames [10].
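To make the pixel-based difference idea concrete, here is a rough sketch that counts how many pixels change noticeably between two adjacent grayscale frames. It is a generic illustration rather than the exact method of any cited work; the function names and both threshold values are hypothetical.

```python
def changed_fraction(frame_a, frame_b, pixel_threshold=20):
    """Fraction of pixels whose gray level differs by more than pixel_threshold.

    Frames are equal-sized lists of rows of integer gray levels (0-255).
    """
    changed = 0
    total = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for pa, pb in zip(row_a, row_b):
            total += 1
            if abs(pa - pb) > pixel_threshold:
                changed += 1
    return changed / total


def is_cut(frame_a, frame_b, pixel_threshold=20, frame_threshold=0.5):
    """Flag a boundary when more than frame_threshold of the pixels changed."""
    return changed_fraction(frame_a, frame_b, pixel_threshold) > frame_threshold


# Tiny 3x4 example frames (hypothetical data).
dark = [[0] * 4 for _ in range(3)]
bright = [[255] * 4 for _ in range(3)]
print(is_cut(dark, dark), is_cut(dark, bright))
```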


III. OUR TECHNIQUE

We detect shot boundaries by calculating the distance

calculation between the two images in the types of videos, by

the result of calculations we derive how many images and

scenes per type of video with detect the value of d(x,y) when

the image or scene vary from the next image or scene , also

we compared the real calculation with the output from the

distance calculation (we discussed the result in result section)

, the distance equation is :

d(x, y)

max{(| c(xy) | -| c(y)|), ((| c(yx) | -| c(x) |)}

max{| c(x) |, | c(y)|}

are commonly used in the field of information retrieval .Recall

is defined as the proportion of shot boundaries correctly

identified by the system to the total number of shot boundaries

present (correct and missed ).

Precision is the proportion of correct shot boundaries

identified by the system to the total number of shot boundaries

identified by the system. We express recall and precision as:

Recall = Correct Scene Changes

Correct + Missed Scene Changes

Precision = Correct Scene Changes

Correct + False Scene Changes

Where :

| c(xy) | : length of compressed size of concatenated

IV. TEST BED

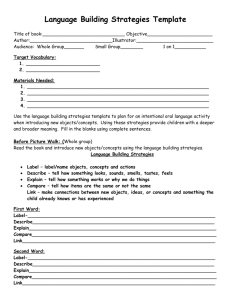

Table 2. Experiment results Recall and Precision

Video Type

Recall

Precision

News

93.3

78.6

Movies

95.3

100

Cookery program

90

100

Sports

88.9

72.7

Documentary

93.8

85

Comedy/ Drama

100

100

Commercials

100

91.7

To evaluate the results of our research, a database of various

compressed video types was used. The video clips were

digitized in Bmp format (total of 25442 frames) and

resolution of 320*240 pixels.

Video Type

No.of frames

News

4314

Movies

4194

Cookery program

3060

Sports

3045

Documentary

5752

Comedy/ Drama

2249

Commercials

2828

Total

25442

Table 1.Video types used & number of frames in each

EXPERIMENTAL RESULT

Results( Recall & Precision)

120

100

80

60

40

20

0

Ne

ws

The following table shows the video types used and the

number of frames were analyses

Sp

or

Do

ts

cu

m

en

Co

ta

m

ry

ed

y/

Dr

am

Co

a

m

m

er

cia

ls

images y and x .

| c(x)| : length of compressed size of image x .

| c(y)| : length of compressed size of image y .

Ideally, both recall and precision should equal 1. This would

indicate that we have identified all existing shot boundaries

correctly, without identifying any false boundaries.

Now we will describe the results obtained from this

experiment, the recall and precision for each video type as in

table 2 and figure 1 below :

M

Co

ov

ok

ie

s

er

y

pr

og

ra

m

images x and y .

| c(yx) | : length of compressed size of concatenated

Recall

Precision

In reporting our experimental results, we use recall and

precision to evaluate system performance. Recall and precision

2

Figure 5. Results obtained from experiment

(Recall&Precision) .
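The detection step and the recall and precision measures described above can be sketched as follows. This is only an illustration under stated assumptions: the paper does not give a decision threshold, so the value 0.5, the function names, and the sample distances and ground-truth boundaries are all hypothetical.

```python
def detect_boundaries(distances, threshold):
    """Return indices i where the distance between frame i and frame i+1 exceeds threshold."""
    return [i for i, d in enumerate(distances) if d > threshold]


def recall_precision(detected, actual):
    """Compute (recall, precision) from detected and actual boundary indices."""
    detected, actual = set(detected), set(actual)
    correct = len(detected & actual)   # boundaries found and real
    missed = len(actual - detected)    # real boundaries not found
    false = len(detected - actual)     # found boundaries that are not real
    recall = correct / (correct + missed) if correct + missed else 0.0
    precision = correct / (correct + false) if correct + false else 0.0
    return recall, precision


# Hypothetical d(x, y) values for consecutive frame pairs and hand-labeled boundaries.
distances = [0.60, 0.12, 0.85, 0.09, 0.11, 0.78, 0.13, 0.70, 0.40]
actual = [2, 5, 8]

detected = detect_boundaries(distances, threshold=0.5)
recall, precision = recall_precision(detected, actual)
print(detected, recall, precision)
```

In this toy input, two of the three labeled boundaries are found and two detections are false, so recall is 2/3 and precision is 1/2. Raising the threshold would trade false detections for missed ones.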

V. CONCLUSION

Video segmentation has been gaining a great deal of attention since the world began relying on the internet for fast access to digital multimedia files such as digital videos. Video segmentation is the basic building block for video indexing, searching, and compression. We have presented a novel video segmentation algorithm and demonstrated its performance. Better features based on dual comparison measurements further improved the segmentation.

REFERENCES

[1] H. J. Zhang, A. Kankanhalli, and S. W. Smoliar, Automatic partitioning of full-motion video, in Multimedia Systems, volume 1, pages 10-28, 1993.

[2] R. Kasturi and R. Jain, Dynamic Vision, in Computer Vision: Principles, R. Kasturi and R. Jain, Editors, IEEE Computer Society Press, Washington, 1991.

[3] H. Ueda, T. Miyatake, and S. Yoshizawa, “IMPACT: An Interactive Natural-motion-picture dedicated Multimedia Authoring System,” in Proceedings of CHI, 1991 (New Orleans, Louisiana, Apr-May, 1991), ACM, New York, 1991, pp. 343-350.

[4] A. Nagasaka and Y. Tanaka, Automatic video indexing and full-video search for object appearances, in Visual Database Systems II, Elsevier Science Publishers, pages 113-117, 1992.

[5] X. U. Cabedo and S. K. Bhattacharjee, Shot detection tools in digital video, in Proceedings Non-linear Model Based Image Analysis 1998, Springer Verlag, pp. 121-126, Glasgow, July 1998.

[6] Swanberg, D., Shu, C.F., and Jain, R., “Knowledge Guided Parsing and Retrieval in Video Databases”, in Storage and Retrieval for Image and Video Databases, Wayne Niblack, Editor, Proc. SPIE 1908, February, 1993, pp. 173-187.

[7] Little, T.D.C., Ahanger, G., Folz, R.J., Gibbon, J.F., Reeve, F.W., Schelleng, D.H., and Venkatesh, D., “A Digital On-Demand Video Service Supporting Content-Based Queries”, Proc. ACM Multimedia 93, Anaheim, CA, August, 1993, pp. 427-436.

[8] R. Zabih, J. Miller, and K. Mai, A feature-based algorithm for detecting and classifying scene breaks, in Proceedings ACM Multimedia 95, pages 189-200, November 1995.

[9] J. Canny, A computational approach to edge detection, in IEEE Transactions on Pattern Analysis and Machine Intelligence 8(6), pages 679-698, 1986.

[10] Y. Gong, C. H. Chuan, and G. Xiaoyi, Image indexing and retrieval based on color histograms, in Multimedia Tools and Applications, volume 2, pages 133-156, 1996.

[11] J. Meng, Y. Juan, and S.-F. Chang, Scene change detection in an MPEG compressed video sequence, in IS&T/SPIE Symposium Proceedings, volume 2419, February 1995.

[12] M. Tague, The pragmatics of Information Retrieval experimentation, in Information Retrieval Experiment, Karen Sparck Jones Ed., Butterworths, pages 59-102, 1981.

[13] Arman, F., Hsu, A., and Chiu, M-Y., “Image Processing on Encoded Video Sequences”, Multimedia Systems (1994) Vol. 1, No. 5, pp. 211-219.

[a] K. Kaabneh and H. Al-Bdour, “A New Segmentation Technique and Evaluation,” Mu’tah Lil-Buhuth wad-Dirasat Journal, 19 (2): 39-51, Jan. 2004.

[b] K. Kaabneh, O. Alia, A. Suleiman, and A. Aburabela, “Video Segmentation via Dual Shot Boundary Detection (DSBD)”, in the Proceedings of the 2nd IEEE International Conference on Information & Communication Technologies (ICTTA’06), April 24 - 28, 2006.