P093 - LAMDA Group


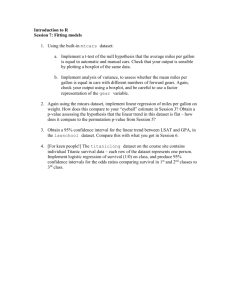

PAKDD Tournament DATA MINING REPORT

Submitted by: PARTICIPANT 93
Group members: Karthik Vikram Yemmanur, Shehzad Hamza, Ravikiran Garimella, Sriram Munnangi and Ajay Cherukuri

INTRODUCTION

The PAKDD tournament provided us with a consumer-finance company’s customer data, with the goal of finding a better solution to the company’s existing cross-selling business problem. We followed the CRISP-DM process to build a predictive model for this problem. The six major phases of the CRISP-DM process are:

1. Business Understanding
2. Data Understanding
3. Data Preparation
4. Modeling
5. Evaluation
6. Deployment

This report describes how we built our model, organized according to these CRISP-DM phases.

BUSINESS UNDERSTANDING

The first step in creating a useful predictive model is to understand the business context of the problem being solved. The data was provided by a consumer-finance company that wants a better solution to a cross-selling business problem. The company currently has a base of credit card customers as well as a base of mortgage customers. The overlap between these two customer bases is very small, and our task is to find a solution that effectively targets the customers who are most likely to request a home loan.

DATA UNDERSTANDING

The modeling dataset has 40,700 observations: 40,000 customers with a “No” response (Target_flag = 0) and 700 customers with a “Yes” response (Target_flag = 1). Target_flag indicates whether a customer opened a home-loan account within a year of opening a credit card account. The dataset contains 42 variables, including Target_flag. The other variables include demographic variables such as Annual Income Range, Monthly Disposable Income, Occupation Code, Number of Dependents and the District the customer applied from.
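As a quick check on the class imbalance just described, the response rate in the modeling dataset, and in a balanced sample that keeps all 700 positives alongside 700 randomly drawn negatives, works out as:

```latex
\text{response rate} = \frac{700}{40\,000 + 700} = \frac{700}{40\,700} \approx 0.0172 = 1.72\%,
\qquad
\text{balanced rate} = \frac{700}{700 + 700} = 0.5
```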
There are also business-specific variables such as Customer Age, Checking Account status, the type of credit cards held, how long the customer has lived at their current residence, how long they have been with their current employer, and a number of variables that count Credit Bureau enquiries in the last 6 and 12 months. Detailed variable descriptions are provided with the modeling dataset, and most of these variables appear relevant to a consumer-finance company. After understanding what the data meant, we assigned a measurement level to each variable based on its description. The variable roles are shown below in Figure 1.

Figure 1

DATA PREPARATION

Our first data preparation step was to decide which variables could be used for modeling and which could be discarded. After exploring the data, we rejected the following variables:

DISP_INC_CODE – rejected due to excessive missing values
B_DEF_UNPD_L12M – rejected because it takes only one value

On further exploration of the dataset, we also found a perfect correlation among the following variables:

B_DEF_PAID_L12M
B_DEF_UNPD_IND
B_DEF_UNPD_L12M
B_DEF_PAID_IND

Since all four captured the same information, we kept B_DEF_PAID_IND as the single representative in our model and rejected the other three.

The tournament dataset is highly skewed, with a response rate of just 1.72%. Because positive responses are so sparse, our first step in building a predictive model was to balance the data. We created a balanced sample that included all 700 “Yes” responses (Target_flag = 1) and a random sample of 700 “No” responses (Target_flag = 0).
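The balancing step can be sketched in Python as below. This is a minimal illustration assuming each record is a dict with a Target_flag key; the function name balanced_sample, the seed and the toy data are hypothetical, and the report does not say which tool performed the sampling.

```python
import random


def balanced_sample(records, target_key="Target_flag", seed=42):
    """Keep every positive record and add an equal-sized simple random
    sample (without replacement) of negative records."""
    positives = [r for r in records if r[target_key] == 1]
    negatives = [r for r in records if r[target_key] == 0]
    rng = random.Random(seed)  # fixed seed makes the sample reproducible
    sample = positives + rng.sample(negatives, len(positives))
    rng.shuffle(sample)  # mix the classes so row order carries no signal
    return sample


# Toy stand-in for the modeling data: 700 "Yes" and 40,000 "No" records.
data = [{"id": i, "Target_flag": 1 if i < 700 else 0} for i in range(40_700)]
sample = balanced_sample(data)
print(len(sample), sum(r["Target_flag"] for r in sample))  # 1400 700
```

Because the sample's 50% response rate is far above the population's 1.72%, probabilities learned on it overstate the real likelihood of a home-loan request, which is why the prior probabilities need to be taken into account afterwards.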
The sample we built our models on therefore had 1,400 observations, half with a “Yes” response and half with a “No” response. We also entered the prior probabilities of the target distribution in the data before building our predictive models.

One key issue was how the Bureau Enquiries variables were coded: valid values ran up to 97, while 98 and 99 were special values. We found that the ‘Z’ response for the B_DEF_PAID_IND variable captured all records whose Bureau Enquiries variables had a value of 98. There were a negligible number of 99s in the dataset and none of them had a “Yes” response, so we chose to ignore them. We therefore filtered the Bureau Enquiries variables to values up to 97. We also filtered extreme values of CURR_EMPL_MONTHS, PREV_EMPL_MONTHS and PREV_RES_MONTHS, and filtered out records in Diner’s Club card membership categories that had fewer than 5 observations.

We also recoded ANNUAL_INCOME_RANGE from a nominal variable with income-range buckets into an ordinal variable with levels 1 to 5.

We also examined the missing values in the dataset and found that only the class (nominal) variables had missing values. Since regression and neural network models do not handle missing values well, we used tree imputation to fill in the missing values of all the class variables.

MODELING

We built several models on our sample dataset to see which performed best, using variable selection and transforming variables where necessary:

1. Logistic Regression (stepwise selection, α = 0.05)
2. Polynomial Regression (stepwise selection, α = 0.05)
3. Gini Decision Tree
4. Entropy Decision Tree
5. Neural Network (3, 4, 6 and 8 hidden layers)
6. Ensemble models combining various models

Our main criterion for selecting a model was its ROC index, followed by its misclassification rate.
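Entering prior probabilities, as described in the data preparation step, amounts to correcting posteriors learned on the 50/50 sample back to the population's 1.72% response rate. A minimal sketch of the standard Bayes-rule correction follows; the function name adjust_to_priors is hypothetical, and the report does not state exactly how its modeling tool applied the priors internally.

```python
def adjust_to_priors(p, true_prior=700 / 40_700, sample_prior=0.5):
    """Rescale a posterior P(Target_flag = 1) estimated on a balanced sample
    to the population's class priors using Bayes' rule.

    p:            probability predicted by a model trained on the sample
    true_prior:   population response rate (700 / 40,700, about 1.72%)
    sample_prior: response rate in the training sample (0.5 when balanced)
    """
    w1 = true_prior / sample_prior               # reweights the "Yes" class
    w0 = (1 - true_prior) / (1 - sample_prior)   # reweights the "No" class
    return p * w1 / (p * w1 + (1 - p) * w0)


# A 50/50 prediction on the balanced sample maps back to the population rate.
print(round(adjust_to_priors(0.5), 4))  # 0.0172
```

Because the adjustment is monotonic in p, it leaves the ranking of customers, and hence the ROC index, unchanged; it changes the probability values themselves and any classification made with a fixed cutoff.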
We also found that the logistic regression model had a high misclassification rate, so we chose not to include it in our final model. We also built an ensemble model combining the Gini Tree, Entropy Tree, Neural Network and Polynomial Regression models. We used variable selection for the neural network and created several ensemble models by grouping models with similar misclassification rates.

EVALUATION

To evaluate the models, we drew a random sample from the main dataset and scored it. Since this sample contained the actual values of the target variable, we used them to calculate each model's ROC index. The model with the highest ROC index on this scoring dataset was the ensemble model. Using an ensemble increased model complexity, but it also substantially increased both the number of customers correctly predicted and the ROC index.

DEPLOYMENT

For the final phase, we scored the dataset provided and produced an Excel file containing, for each customer, the predicted probability that the customer would take up a home loan from the company, arranged by customer ID. This phase will be complete once our results can be validated against the withheld target values.
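The two selection criteria and the ensemble idea can be sketched together in Python: a simple probability-averaging ensemble, the ROC index computed as the fraction of positive/negative pairs ranked correctly, and the misclassification rate at a cutoff. This is a minimal illustration, not the tournament code: averaging is only one way to combine models (the report does not say how its ensemble combined them), the 0.5 cutoff is an assumption, and the function names and toy probabilities are hypothetical.

```python
def average_ensemble(*model_probs):
    """Combine models by averaging their predicted probabilities record by record."""
    return [sum(ps) / len(ps) for ps in zip(*model_probs)]


def roc_index(y_true, scores):
    """ROC index (area under the ROC curve): the fraction of (positive, negative)
    pairs in which the positive record gets the higher score; ties count 1/2.
    This pairwise loop is O(P*N), which is fine for a sketch but slow at scale."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def misclassification_rate(y_true, probs, cutoff=0.5):
    """Share of records whose thresholded prediction differs from the actual target."""
    errors = sum(int(p >= cutoff) != y for y, p in zip(y_true, probs))
    return errors / len(y_true)


# Illustrative toy data only (not the tournament results).
y = [1, 1, 0, 0]
model_a = [0.9, 0.3, 0.4, 0.1]  # e.g. a decision tree's probabilities
model_b = [0.3, 0.9, 0.1, 0.4]  # e.g. a neural network's probabilities
ensemble = average_ensemble(model_a, model_b)

for name, probs in [("A", model_a), ("B", model_b), ("ensemble", ensemble)]:
    print(name, roc_index(y, probs), misclassification_rate(y, probs))
# A 0.75 0.25
# B 0.75 0.25
# ensemble 1.0 0.0
```

In the toy data each model mis-ranks a different record, so averaging cancels their individual errors; this is the intuition behind combining models whose mistakes differ.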