Density Clustering Method for Gene Expression Data

advertisement

Is there a need to footnote P-trees

technology? Or the EIN paper?

Tree-based Clustering for Gene Expression Data

Baoying Wang, William Perrizo

Computer Science Department

North Dakota State University

Fargo, ND 58105, USA

Tel: 1-701-2316257

{baoying.wang, william.perrizo}@ndsu.nodak.edu

ABSTRACT

Data clustering methods have been proven to be a successful

data mining technique in analysis of gene expression data and

many other types of data. However, some concerns and

challenges still remain, e.g., in gene expression clustering. In

this paper, we propose an efficient clustering method using

attractor trees. The combination of the density-based approach

and the similarity-based approach considers clusters with

diverse shapes, densities, and sizes. Experiments on gene

expression datasets demonstrate that our approach is efficient

and scalable with competitive accuracy.

hierarchical clustering using attractor trees, Clustering using

Attractor trese and Merging Processes (CAMP). CAMP consists

of two processes: (1) clustering by local attractor trees (CLA)

and (2) cluster merging based on similarity (MP). The final

clustering result is an attractor tree and a set of bit indexes to

clusters corresponding to each level of the attractor tree. The

attractor tree is composed of leaf nodes, which are the local

attractors of the attractor sub-trees constructed in CLA process,

and interior nodes, which are the virtual attractors resulted from

MP process. Figure 1 is an example of an attractor tree.

Virtual

attractors

Categories and Subject Descriptors

H.3.3 [Information Storage and Retrieval]: information

Search and Retrieval – Clustering.

Local

attractor

General Terms: Algorithms

Keywords: Gene expression data, clustering, microarray.

1. INTRODUCTION

Clustering analysis of mircroarray gene expression data has

been recognized as an important method for gene expression

analysis. However, some concerns and challenges still remain

in gene expression clustering. For example, many traditional

clustering methods originating from non-biological fields may

not work well if the model is not sufficient to capture the

genuine clusters among noisy data.

There are two main groups of clustering methods: similaritybased methods and density-based clustering methods. Most

hierarchical clustering methods belong to the similarity-based

algorithm class. As a result, they can not handle clusters with

arbitrary shapes well. In this paper, we propose an efficient

agglomerative hierarchical clustering method using attractor

trees. Our method combines the features of both the densitybased clustering approach and the similarity-based clustering

approach. It takes consideration clusters with diverse shapes,

densities, and sizes, and is capable of dealing with noisy data.

Experiments on standard gene expression datasets demonstrate

that our approach is very efficient and scalable, with

competitive accuracy.

2. THE METHOD

In this section, we propose an efficient agglomerative

Permission to make digital or hard copies of all or part of this work for

personal or classroom use is granted without fee provided that copies are

not made or distributed for profit or commercial advantage and that

copies bear this notice and the full citation on the first page. To copy

otherwise, or republish, to post on servers or to redistribute to lists,

requires prior specific permission and/or a fee.

SAC’05, March 13-17, 2005, Santa Fe, New Mexico, USA.

Copyright 2005 ACM 1-58113-964-0/05/0003…$5.00.

Figure 1.

The attractor tree

The data set is first grouped into local attractor trees by means

of density-based approach in CLA process. Each local attractor

tree represents a preliminary cluster, the root of which is a

density attractor of the cluster. Then the small clusters are

merged level-by-level in MP process according to their

similarity until the whole data set becomes a cluster.

2.1 Density Function

Given a data point x, the density function of x is defined as the

sum of the influence of all data points in the data space X. If we

divided the neighborhood of x into neighborhood rings, then

points within smaller rings have more influence on x than those

in bigger rings. We define the neighborhood ring as follows:

Definition 1. Neighborhood Ring of a data point c with radii r1

and r2 is defined as the set R(c, r1, r2) = {x X | r1<|c-x| r2},

where |c-x| is the distance between x and c. The number of

neighbors falling in R(c, r1, r2) is denoted as N = || R(c, r1, r2)||.

Definition 2. Equal Interval Neighborhood Ring (EINring)

of a data point c with radii r1=k and r2=(k+1) is defined as the

kth neighborhood ring EINring(c, k, ) = R(c, r1, r2) = R (c, k,

(k+1)), where is a constant. The number of neighbors falling

within the kth EINring is denoted as ||EINring(c, k, )||.

Let y be a data point within the kth EINring of x. The EINringbased influence function of y on x is defined as:

f(y,x) = fk(x) =

1

k

k = 1, 2, ...

(1)

The density function of x is defined as the summation of

influence within every EINring neighborhood of x.

DF(x)=

f k ( x) || EINring( x, k , ) ||

k 1

(2)

Is this coorect?

2.2 Clustering by Local Attractor Trees

3. PERFORMANCE STUDY

The basic idea of clustering by local attractor trees (CLA) is to

partition the data set into clusters in terms of density attractor

trees. Given a data point x, if we follow the steepest density

ascending path, the path will finally lead to a local density

attractor. If x doesn’t have such a path, it can be either a local

attractor or a noise. All points whose steepest ascending paths

lead to the same local attractor form a cluster. The resultant

graph is a collection of local attractor trees with the local

attractor as the root. The leaves are the boundary points of

clusters. An example of a dataset and the attractor trees are

shown in Figure 2.

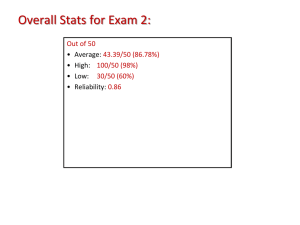

We used three microarray expression datasets: DS1 and DS2

and DS3 from [1,2,3]. DS1 contains expression levels of 8,613

human genes measured at 12 time-points. DS2 is a gene

expression matrix of 6221 80. DS3 is the largest dataset with

13,413 genes under 36 experimental conditions. The total run

times for different algorithms on DS1, DS2 and DS3 are shown

in Figure 3. Note that our approach outperformed k-means,

BIRCH and CAST substantially when the dataset is large. In

particular, our approach performed almost 3 times faster than kmeans and CAST for DS3.

Figure 2.

run time(s)

K-means

A dataset and the attractor trees

2.3 Cluster Merging Process

We consider both relative connectivity and relative closeness,

and define similarity between cluster i and j as follows:

CS(i, j) = (

hi

fi

hj

fj

)

1

d ( Ai , A j )

BIRCH

CAMP

10000

9000

8000

7000

6000

5000

4000

3000

2000

1000

0

DS1

(3)

CAST

Figure 3.

DS2

DS3

Run time comparisons

where hi is the average height of the ith attractor tree; f i is the

average fan-out of the ith attractor tree; d(Ai, Aj) is the Euclidean

distance between two local attractors Ai and Aj. The calculations

of hi and f i are discussed later.

The clustering results are evaluated by means of “Hubert’s

statistic” [3]. The accuracy experiments show CAMP and

CAST both are more accurate than the other two. However,

CAST is not efficient and scalable.

After the local attract trees are built in CLA process, the cluster

merging process (MP) starts combining the most similar subcluster pair level-by-level based on similarity measure. When

two clusters are merged, two local attractor trees are combined

into a new tree, called a virtual local attractor tree. It is called

“virtual” because the new root is not a real attractor. It is only a

virtual attractor which could attract all points of two sub-trees.

The cluster merging is processed recursively by combining

(virtual) attractor trees.

4. CONCLUSION

After merging, we need to compute the new virtual attractor Av,

the average height hv , the average fan-out f v of the new virtual

attractor tree. Take two clusters: Ci and Cj, for example, and

assume the size of Cj is greater or equal than Ci, i.e. ||Cj|| ||Ci||,

we have the following equations:

5. REFERENCES

|| Cj ||

( Ail A jl )

Avl =

|| Ci || || Cj ||

hv = Max{ hi , h j }+

fv =

l = 1, 2 ... d

|| Cj ||

d ( Ai , A j )

|| Ci || || Cj ||

|| Ci || * f i || Cj || * f j

|| Ci || || Cj ||

CAMP combines the features of both the density-based

clustering approach and the similarity-based clustering

approach. The combination of the density-based approach and

the similarity-based approach considers clusters with diverse

shapes, densities, and sizes, and is capable of dealing with

noise. Experiments on standard gene expression datasets

demonstrated that our approach is very efficient and scalable

with competitive accuracy.

1.

Ben-Dor, A., Shamir, R. & Yakhini, Z. “Clustering gene

expression patterns,” Journal of Computational Biology,

Vol. 6, 1999, pp. 281-297.

2.

Tavazoie, S. J. Hughes, D. and et al. “Systematic

determination of genetic network architecture”. Nature

Genetics, 22, pp. 281-285, 1999.

3.

Tseng, V. S. and Kao, C. “An Efficient Approach to

Identifying and Validating Clusters in Multivariate

Datasets with Applications in Gene Expression Analysis,”

Journal of Information Science and Engineering, Vol. 20

No. 4, pp. 665-677. 2004.

4.

Zhang, T., Ramakrisshnan, R. and Livny, M. BIRCH: an

efficient data clustering method for very large databases.

In Proceedings of of Int’l Conf. on Management of Data,

ACM SIGMOD 1996.

(4)

(5)

(6)

where Ail is the lth attribute of the attractor Ai. ||Ci|| is the size of

cluster Ci. d(Ai, Aj) is the distance between two local attractors A i

and Aj. hi and f i are the average height and the average fan-out of

the ith attractor tree respectively.