Probability and Statistics – Theoretical versus Empirical


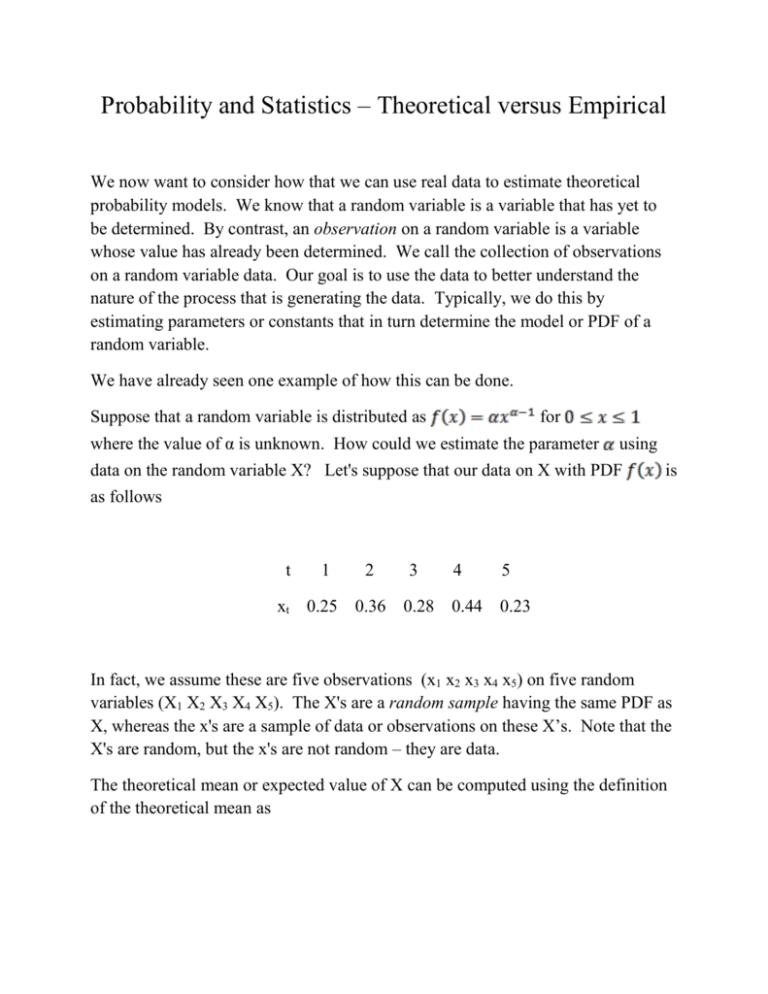

We now want to consider how we can use real data to estimate theoretical probability models. We know that a random variable is a variable whose value has yet to be determined. By contrast, an observation on a random variable is a variable whose value has already been determined. We call the collection of observations on a random variable data. Our goal is to use the data to better understand the nature of the process that is generating the data. Typically, we do this by estimating parameters or constants that in turn determine the model or PDF of a random variable.

We have already seen one example of how this can be done. Suppose that a random variable $X$ has a PDF $f(x)$ that depends on a parameter $\alpha$ whose value is unknown. How could we estimate the parameter $\alpha$ using data on the random variable $X$? Let's suppose that our data on $X$ are as follows:

| $t$ | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| $x_t$ | 0.25 | 0.36 | 0.28 | 0.44 | 0.23 |

In fact, we assume these are five observations $(x_1, x_2, x_3, x_4, x_5)$ on five random variables $(X_1, X_2, X_3, X_4, X_5)$. The $X$'s are a random sample, each having the same PDF as $X$, whereas the $x$'s are a sample of data or observations on these $X$'s. Note that the $X$'s are random, but the $x$'s are not random; they are data.

The theoretical mean or expected value of $X$ can be computed using the definition of the theoretical mean as

$$E[X] = \int x \, f(x) \, dx,$$

where the integral is taken over the range of $X$ and the result is a function of $\alpha$. Next, suppose that we use the data and compute the sample mean as

$$\bar{x} = \frac{1}{N} \sum_{t=1}^{N} x_t = \frac{0.25 + 0.36 + 0.28 + 0.44 + 0.23}{5},$$

which we easily find is $\bar{x} = 0.312$. If we had many observations, we might feel that this sample mean should be close to $E[X]$. Setting $E[X]$ equal to $\bar{x} = 0.312$, we can solve for our estimate of $\alpha$, which we call $\hat{\alpha}$. Doing so tells us that $\hat{\alpha} = 0.2378$ approximately.

The method we have just used to estimate $\alpha$ from data is called the method of moments (MM). There are other, more complicated ways to estimate the value of $\alpha$. We will be studying a particularly useful way of estimating the parameters of a model, which is called ordinary least squares (OLS). In some cases MM is equivalent to OLS.
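The method-of-moments steps can be sketched in a few lines of Python. The formula for $E[X]$ is not reproduced in the text, so this sketch assumes $E[X] = \alpha/(1-\alpha)$. That form is chosen only because it reproduces the reported estimate of about 0.2378 from a sample mean of 0.312; it is an assumption, not necessarily the model the text has in mind. The names `theoretical_mean` and `alpha_hat` are illustrative.

```python
# Method-of-moments sketch for the five observations in the text.
data = [0.25, 0.36, 0.28, 0.44, 0.23]

# Step 1: sample mean, i.e. add the observations and divide by N.
x_bar = sum(data) / len(data)


def theoretical_mean(alpha):
    # ASSUMPTION (not stated in the text): E[X] = alpha / (1 - alpha).
    # Picked because it matches the reported estimate; swap in the
    # true moment function if the model is different.
    return alpha / (1.0 - alpha)


# Step 2: set E[X] equal to the sample mean and solve for alpha.
# Under the assumed form, x_bar = alpha / (1 - alpha) rearranges to
# alpha = x_bar / (1 + x_bar).
alpha_hat = x_bar / (1.0 + x_bar)

print(round(x_bar, 3))      # 0.312
print(round(alpha_hat, 4))  # 0.2378
```

Plugging `alpha_hat` back into `theoretical_mean` returns the sample mean, which is exactly the condition the method of moments imposes.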
Most of the time, however, the MM and OLS estimators are different.

We now turn to estimation in general. A joint PDF will have a number of things associated with it. For example, it will have means, variances, covariances, and correlations. We can make a short table to show the differences between each theoretical object and its empirical counterpart.

| | Theoretical | Empirical |
|---|---|---|
| 1 | Random variable $X$ | Observation or datum $x$ |
| 2 | Random sample $X_1, X_2, \dots, X_N$ | Sample of data $x_1, x_2, \dots, x_N$ |
| 3 | PDF | Frequency function or histogram |
| 4 | Mean | Sample mean |
| 5 | Variance | Sample variance |
| 6 | Covariance | Sample covariance |
| 7 | Correlation | Sample correlation |

There is one last step and you will know all about the basics of probability and statistics. Look at the right-hand side of the table above. Each entry puts data into a formula. To get the sample mean, we just add $x_1, x_2, x_3, \dots, x_N$ together and divide by $N$, the number of observations. This is easy to do. The number we get is called the sample mean, and it is an estimate of the theoretical mean or expected value of $X$.

But what if we instead put a random sample $X_1, X_2, \dots, X_N$ into each entry on the right-hand side of the table above? Of course, whatever we compute is random, since the $X$'s are all random. We call this new thing an estimator. An estimator is also a random variable, since it is a function of random variables. It follows that we can make a table with three columns instead of two, as follows:

| | Theoretical | Estimator | Estimate |
|---|---|---|---|
| 1 | $X$ | $X$ | $x$ |
| 2 | $X_1, \dots, X_N$ | $X_1, \dots, X_N$ | $x_1, \dots, x_N$ |
| 3 | PDF | Frequency function of $X_1, \dots, X_N$ | Frequency function or histogram of $x_1, \dots, x_N$ |
| 4 | Mean $E[X]$ | $\bar{X} = \frac{1}{N}\sum_{t=1}^{N} X_t$ | $\bar{x} = \frac{1}{N}\sum_{t=1}^{N} x_t$ |
| 5 | Variance | Sample variance of $X_1, \dots, X_N$ | Sample variance of $x_1, \dots, x_N$ |
| 6 | Covariance | Sample covariance of the $X$'s and $Y$'s | Sample covariance of the $x$'s and $y$'s |
| 7 | Correlation | Sample correlation of the $X$'s and $Y$'s | Sample correlation of the $x$'s and $y$'s |

We can compute the sample or empirical objects if we have data. The empirical objects represent actual guesses we make of the unknown and unknowable theoretical objects. The data below can be used with GRETL to practice calculating each of the entries in the table above.
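To make the distinction between an estimator and an estimate concrete, the following is a minimal simulation sketch in Python. It treats the sample mean as a rule applied to many different random samples. The normal distribution and the values of `MU`, `SIGMA`, `N`, and `REPS` are assumptions chosen purely for illustration; none of them come from the text.

```python
import random
import statistics

random.seed(0)  # fixed seed so repeated runs give the same draws

# ASSUMPTION: hypothetical "theoretical" values, not taken from the text.
MU, SIGMA = 12.0, 2.7
N = 20        # observations per sample
REPS = 5000   # number of repeated samples


def draw_sample(n):
    """Return one realization (x_1, ..., x_n) of the random sample (X_1, ..., X_n)."""
    return [random.gauss(MU, SIGMA) for _ in range(n)]


# The estimator is the rule "average the sample". Each new sample
# turns that rule into one number, an estimate.
estimates = [statistics.mean(draw_sample(N)) for _ in range(REPS)]

mean_of_estimates = statistics.mean(estimates)
var_of_estimates = statistics.variance(estimates)

print("first three estimates:", [round(e, 3) for e in estimates[:3]])
print("average of the estimates:", round(mean_of_estimates, 3), "vs MU =", MU)
print("variance of the estimates:", round(var_of_estimates, 4),
      "vs SIGMA^2 / N =", round(SIGMA ** 2 / N, 4))
```

Each entry of `estimates` is a fixed number once computed, but the entries differ from sample to sample, which is what it means for the estimator to be a random variable. In a run like this, the average of the estimates should land near `MU` and their variance near `SIGMA ** 2 / N`.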
Suppose our data are as follows:

| obs | x | y |
|---|---|---|
| 1 | 13.24789 | 14.60038 |
| 2 | 14.07515 | 14.83841 |
| 3 | 10.05335 | 13.27980 |
| 4 | 7.89558 | 8.73239 |
| 5 | 7.45343 | 10.02690 |
| 6 | 15.66481 | 17.70391 |
| 7 | 13.62395 | 16.01292 |
| 8 | 14.00609 | 14.68675 |
| 9 | 10.06575 | 12.24594 |
| 10 | 12.44744 | 12.93126 |
| 11 | 15.62270 | 17.02429 |
| 12 | 12.56344 | 14.24604 |
| 13 | 9.580510 | 9.76837 |
| 14 | 8.015410 | 8.57754 |
| 15 | 13.35889 | 14.76019 |
| 16 | 14.22871 | 15.90185 |
| 17 | 11.78969 | 12.91621 |
| 18 | 16.36569 | 16.88889 |
| 19 | 13.04588 | 12.86995 |
| 20 | 9.867530 | 12.43598 |

The number of observations is $N = 20$. Let us use GRETL to calculate each estimate in the table.

(1) sample mean of x = 12.149; sample mean of y = 13.522

(2) sample variance of x = 7.2366; sample variance of y = 7.2087

(3) sample covariance of x and y = 6.8081

(4) correlation of x and y = 0.94246

Problems:

#1. How is a random sample different from a sample of data?

#2. How do you construct a histogram from data $x_1, \dots, x_N$?

#3. Explain the difference between an estimate and an estimator.

#4. Suppose that $E[X] = \mu$. Now suppose that you have a random sample $X_1, X_2, \dots, X_N$, all independent. Form the estimator for $\mu$ as $\bar{X} = \frac{1}{N}\sum_{t=1}^{N} X_t$. Now prove that $E[\bar{X}] = \mu$. We say that $\bar{X}$ is an unbiased estimator of $\mu$.

#5. Suppose that $E[X] = \mu$ and $\mathrm{Var}[X] = \sigma^2$. Now suppose that you have a random sample $X_1, X_2, \dots, X_N$, all independent. Form the estimator for $\mu$ as $\bar{X} = \frac{1}{N}\sum_{t=1}^{N} X_t$. Now show that $\mathrm{Var}[\bar{X}] = \sigma^2/N$. Note that an unbiased estimator whose variance goes to zero as the sample size $N \to \infty$ is called a consistent estimator.

#6. Suppose that you have data

| $t$ | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| $x_t$ | 8 | 3 | 2 | 2 | 5 |
| $y_t$ | 1 | 6 | 7 | 8 | |

(a) Calculate the sample means $\bar{x}$ and $\bar{y}$.

(b) Calculate the sample variances $s_x^2$ and $s_y^2$.

(c) Calculate the sample covariance $s_{xy}$.

(d) Calculate the sample correlation coefficient $r_{xy}$.
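The four GRETL results reported for the 20-observation data set (listed before the problems) can be cross-checked outside GRETL. The following is a minimal Python sketch, not the GRETL procedure the text uses. It assumes the $N - 1$ divisor for the sample variance and covariance, and the function names are illustrative.

```python
import math

# The 20 observations on x and y listed in the text.
x = [13.24789, 14.07515, 10.05335, 7.89558, 7.45343,
     15.66481, 13.62395, 14.00609, 10.06575, 12.44744,
     15.62270, 12.56344, 9.580510, 8.015410, 13.35889,
     14.22871, 11.78969, 16.36569, 13.04588, 9.867530]
y = [14.60038, 14.83841, 13.27980, 8.73239, 10.02690,
     17.70391, 16.01292, 14.68675, 12.24594, 12.93126,
     17.02429, 14.24604, 9.76837, 8.57754, 14.76019,
     15.90185, 12.91621, 16.88889, 12.86995, 12.43598]


def sample_mean(v):
    """Add the observations and divide by N."""
    return sum(v) / len(v)


def sample_cov(u, v):
    """Sample covariance. ASSUMPTION: divisor is N - 1, not N."""
    u_bar, v_bar = sample_mean(u), sample_mean(v)
    cross = sum((a - u_bar) * (b - v_bar) for a, b in zip(u, v))
    return cross / (len(u) - 1)


def sample_var(v):
    """Sample variance is the covariance of a series with itself."""
    return sample_cov(v, v)


def sample_corr(u, v):
    """Covariance scaled by the two sample standard deviations."""
    return sample_cov(u, v) / math.sqrt(sample_var(u) * sample_var(v))


print(f"mean x = {sample_mean(x):.3f}, mean y = {sample_mean(y):.3f}")
print(f"var x  = {sample_var(x):.4f}, var y  = {sample_var(y):.4f}")
print(f"cov(x, y)  = {sample_cov(x, y):.4f}")
print(f"corr(x, y) = {sample_corr(x, y):.5f}")
```

The printed values should agree with the GRETL figures to about two or three decimal places. If they differ noticeably, the first thing to check is the divisor ($N - 1$ versus $N$).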