

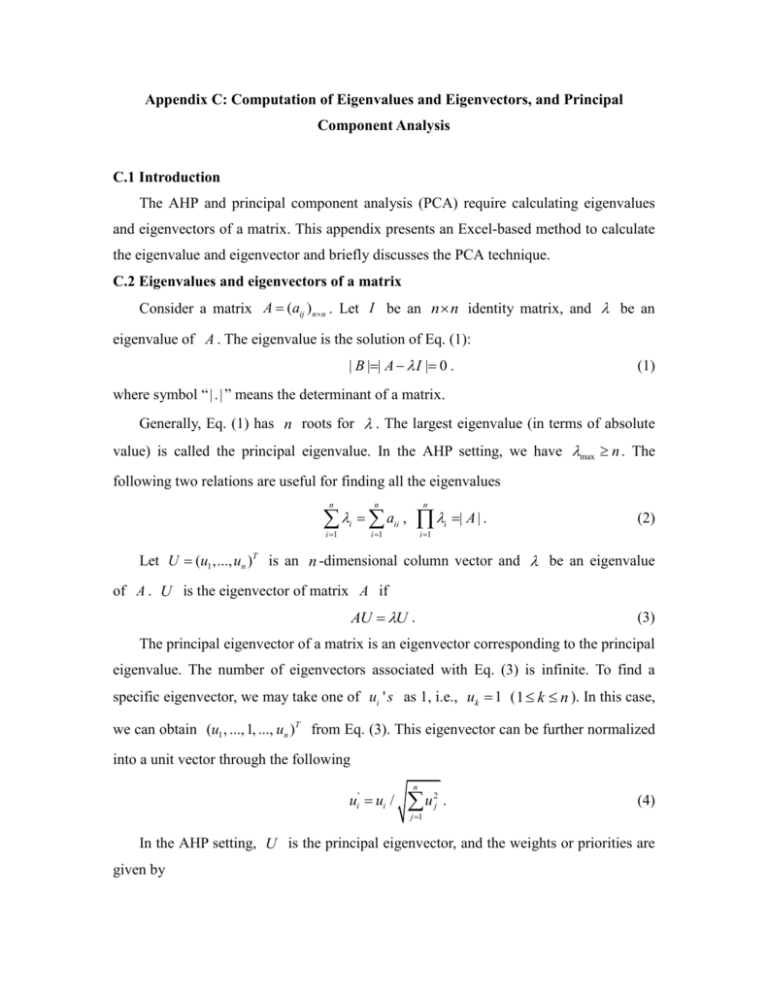

Appendix C: Computation of Eigenvalues and Eigenvectors, and Principal Component Analysis

C.1 Introduction

The AHP and principal component analysis (PCA) both require calculating the eigenvalues and eigenvectors of a matrix. This appendix presents an Excel-based method for calculating eigenvalues and eigenvectors and briefly discusses the PCA technique.

C.2 Eigenvalues and eigenvectors of a matrix

Consider a matrix $A = (a_{ij})_{n \times n}$. Let $I$ be the $n \times n$ identity matrix and $\lambda$ an eigenvalue of $A$. The eigenvalues are the solutions of

$$|B| = |A - \lambda I| = 0, \tag{1}$$

where $|\cdot|$ denotes the determinant of a matrix. In general, Eq. (1) has $n$ roots for $\lambda$. The eigenvalue with the largest absolute value is called the principal eigenvalue, $\lambda_{\max}$. In the AHP setting, $\lambda_{\max} \ge n$. The following two relations are useful for finding all the eigenvalues:

$$\sum_{i=1}^{n} \lambda_i = \sum_{i=1}^{n} a_{ii}, \qquad \prod_{i=1}^{n} \lambda_i = |A|. \tag{2}$$

Let $U = (u_1, \ldots, u_n)^T$ be an $n$-dimensional column vector and $\lambda$ an eigenvalue of $A$. $U$ is an eigenvector of $A$ if

$$AU = \lambda U. \tag{3}$$

The principal eigenvector of a matrix is an eigenvector associated with the principal eigenvalue. Eq. (3) has infinitely many solutions, because any nonzero multiple of an eigenvector is also an eigenvector. To obtain a specific eigenvector, we may set one component to 1, i.e., $u_k = 1$ ($1 \le k \le n$), and solve Eq. (3) for the remaining components to obtain $(u_1, \ldots, 1, \ldots, u_n)^T$. This eigenvector can then be normalized into a unit vector:

$$u_i' = \frac{u_i}{\sqrt{\sum_{j=1}^{n} u_j^2}}. \tag{4}$$

In the AHP setting, $U$ is the principal eigenvector, and the weights (priorities) are given by

$$u_i' = \frac{u_i}{\sum_{j=1}^{n} u_j}. \tag{5}$$

C.3 Using Excel functions to find the eigenvalues and eigenvectors of a matrix

The following Excel functions are useful for finding the eigenvalues and eigenvectors of a matrix. Mdeterm(A) returns the determinant of matrix A. Minverse(A) returns the inverse of matrix A. Mmult(array 1, array 2) returns the matrix product of two arrays.
The result is an array with the same number of rows as array 1 and the same number of columns as array 2; the number of columns in array 1 must equal the number of rows in array 2. When using Minverse(A) or Mmult(array 1, array 2), first select the output range, enter the formula, press F2, and finally press CTRL+SHIFT+ENTER so that the formula is entered as an array formula.

Example C.1: Calculate the principal eigenvalues and principal eigenvectors of the matrices shown in Tables C.1 and C.2.

Table C.1 A 4 × 4 matrix

| i \ j | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | 1 | 1/5 | 1/8 | 1/6 |
| 2 | 5 | 1 | 1/2 | 1 |
| 3 | 8 | 2 | 1 | 1 |
| 4 | 6 | 1 | 1 | 1 |

Table C.2 A 3 × 3 matrix

| i \ j | 1 | 2 | 3 |
|---|---|---|---|
| 1 | 1 | 2 | 1/2 |
| 2 | 1/2 | 1 | 1/3 |
| 3 | 2 | 3 | 1 |

The principal eigenvalue of the matrix in Table C.1 is 4.0407, and its principal eigenvector normalized by Eq. (5) is $(u_i', 1 \le i \le 4) = (0.0494, 0.2476, 0.3944, 0.3086)$. The principal eigenvalue of the matrix in Table C.2 is 3.0092, and its normalized principal eigenvector is $(u_i', 1 \le i \le 3) = (0.2970, 0.1634, 0.5396)$.

C.4 Principal Component Analysis

Consider situations where several variables are observed simultaneously. The variables may be correlated, but the relationships among them are often implicit. To make the analysis clearer and simpler, PCA applies an orthogonal linear transformation that converts the original variables into new, linearly uncorrelated variables called principal components. Because the principal components are mutually uncorrelated, they do not contain overlapping information. By neglecting one or more unimportant principal components, the relationships among the original variables can be approximately represented by a few important principal components without losing too much information. As such, PCA has been widely used for data compression and data interpretation.

Consider a set of $n$ observations of $p$ random variables. The observations can be arranged as a matrix:

$$X = \begin{pmatrix} x_{11} & \cdots & x_{1p} \\ \vdots & \ddots & \vdots \\ x_{n1} & \cdots & x_{np} \end{pmatrix}. \tag{6}$$

$X$ is called the sample matrix.
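
Returning briefly to Example C.1, its reported values can be cross-checked outside Excel. The sketch below is a minimal illustration assuming Python with NumPy, which the Excel-based method of C.3 does not use; the names `principal_eigen`, `TABLE_C1` and `TABLE_C2` are hypothetical. It obtains all roots of Eq. (1) with `numpy.linalg.eig`, checks the two relations in Eq. (2), and rescales the principal eigenvector by Eqs. (4) and (5).

```python
import numpy as np

# Matrices of Tables C.1 and C.2 (Example C.1).
TABLE_C1 = np.array([
    [1, 1/5, 1/8, 1/6],
    [5, 1, 1/2, 1],
    [8, 2, 1, 1],
    [6, 1, 1, 1],
])
TABLE_C2 = np.array([
    [1, 2, 1/2],
    [1/2, 1, 1/3],
    [2, 3, 1],
])


def principal_eigen(a):
    """Hypothetical helper: return (lambda_max, unit vector per Eq. (4), weights per Eq. (5))."""
    a = np.asarray(a, dtype=float)
    eigvals, eigvecs = np.linalg.eig(a)  # all n roots of Eq. (1), possibly complex

    # Eq. (2): sum of eigenvalues = trace; product of eigenvalues = determinant.
    assert np.isclose(eigvals.sum().real, np.trace(a))
    assert np.isclose(np.prod(eigvals).real, np.linalg.det(a))

    k = np.argmax(np.abs(eigvals))   # principal eigenvalue: largest |lambda|
    lam_max = eigvals[k].real
    u = eigvecs[:, k].real           # real for a positive (AHP) matrix
    weights = u / u.sum()            # Eq. (5); dividing by the sum also fixes the arbitrary sign
    unit = weights / np.linalg.norm(weights)  # Eq. (4), applied to the sign-corrected vector
    return lam_max, unit, weights


for name, m in [("Table C.1", TABLE_C1), ("Table C.2", TABLE_C2)]:
    lam, _, w = principal_eigen(m)
    print(name, round(lam, 4), np.round(w, 4))
```

Run as a script, it should print principal eigenvalues of about 4.0407 and 3.0092 with weights close to those reported in Example C.1. The general eigen-solver is a swapped-in technique; for a positive AHP matrix, the power method converges to the same principal eigenvector.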
Since the original variables are usually measured on different scales or in different units (e.g., temperature and pressure), their observations need to be standardized by the transformation

$$y_{ij} = \frac{x_{ij} - \mu_j}{s_j}, \quad 1 \le j \le p, \tag{7}$$

where $\mu_j$ and $s_j^2$ are the sample mean and sample variance of the $j$-th variable:

$$\mu_j = \frac{1}{n} \sum_{i=1}^{n} x_{ij}, \qquad s_j^2 = \frac{1}{n-1} \sum_{i=1}^{n} (x_{ij} - \mu_j)^2. \tag{8}$$

Let $A = [a_{ij}, 1 \le i, j \le p]$ denote the covariance matrix of $Y = [y_{ij}, 1 \le i \le n, 1 \le j \le p]$; $A$ is in fact the correlation matrix of $X$. In general, $A$ has $p$ positive eigenvalues. Let $\lambda_j$, $1 \le j \le p$, denote these eigenvalues, ordered so that

$$\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_p > 0. \tag{9}$$

Let $V_j = (v_{1j}, \ldots, v_{pj})^T$ denote any eigenvector associated with $\lambda_j$. The corresponding unit eigenvector $U_j = (u_{1j}, \ldots, u_{pj})^T$ is given by

$$u_{ij} = \frac{v_{ij}}{\sqrt{\sum_{l=1}^{p} v_{lj}^2}}. \tag{10}$$

Because $A$ is symmetric, we can obtain $p$ mutually orthogonal unit eigenvectors. Define the $j$-th principal component as

$$P_j = \sum_{i=1}^{p} y_i u_{ij}. \tag{11}$$

Clearly, $u_{ij}$ reflects the importance of $Y_i$ for $P_j$ and is called the loading or weight. For a particular data point $(y_1, \ldots, y_p)$, the value of $P_j$ obtained from Eq. (11) is called the component (or factor) score.

The sum of the diagonal elements of a covariance matrix represents the total variance of the variables; for a correlation matrix every diagonal element equals 1, so the total variance equals $p$. The variance of $P_j$ equals $\lambda_j$, and the total variance equals the sum of all the eigenvalues (see Eq. (2)), so each eigenvalue measures the contribution of its principal component to the total variance. The first principal component has the greatest contribution, the second principal component the second greatest, and so on. The contribution of the $j$-th principal component to the total variance is

$$c_j = \frac{\lambda_j}{\sum_{l=1}^{p} \lambda_l}, \tag{12}$$

and the cumulative contribution of the first $j$ principal components is

$$C_j = \sum_{l=1}^{j} c_l. \tag{13}$$

The information that a principal component contains can be represented by its contribution to the total variance. The information represented by the original variables can be approximated by the first $k$ principal components if $C_{k-1} < 0.9$ and $C_k \ge 0.9$.
This is because the remaining principal components together account for no more than 10% of the total variance and can be dropped without losing too much information. Each of the first $k$ principal components can be further interpreted by examining the sign and magnitude of the loadings $u_{ij}$ together with the physical or engineering meaning of $X_i$. For example, if $u_{J1}$ is positive and the largest among $(u_{i1}, 1 \le i \le p)$, and $X_J$ is larger-the-better, then $P_1$ mainly represents the effect of $X_J$ and is also larger-the-better.

Example C.2: The data in Table C.3 are the performance scores of 34 candidates on four criteria, all of which are larger-the-better. The problem is to find the principal components.

Table C.3 Data for Example C.2

| i | x1 | x2 | x3 | x4 | i | x1 | x2 | x3 | x4 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 57 | 60 | 90 | 128 | 18 | 47 | 63 | 76 | 103 |
| 2 | 50 | 64 | 80 | 138 | 19 | 55 | 61 | 62 | 118 |
| 3 | 40 | 55 | 124 | 111 | 20 | 53 | 59 | 72 | 106 |
| 4 | 55 | 64 | 92 | 69 | 21 | 61 | 64 | 59 | 108 |
| 5 | 51 | 60 | 100 | 111 | 22 | 62 | 58 | 62 | 95 |
| 6 | 41 | 61 | 107 | 122 | 23 | 48 | 58 | 74 | 101 |
| 7 | 55 | 66 | 85 | 112 | 24 | 56 | 62 | 61 | 123 |
| 8 | 53 | 64 | 88 | 98 | 25 | 45 | 69 | 59 | 137 |
| 9 | 64 | 67 | 73 | 115 | 26 | 54 | 60 | 57 | 144 |
| 10 | 61 | 56 | 83 | 99 | 27 | 39 | 56 | 68 | 116 |
| 11 | 48 | 60 | 89 | 112 | 28 | 46 | 49 | 71 | 114 |
| 12 | 44 | 62 | 89 | 112 | 29 | 45 | 59 | 61 | 135 |
| 13 | 49 | 70 | 74 | 104 | 30 | 46 | 67 | 71 | 118 |
| 14 | 56 | 62 | 72 | 94 | 31 | 51 | 66 | 64 | 134 |
| 15 | 46 | 65 | 79 | 119 | 32 | 43 | 55 | 70 | 135 |
| 16 | 55 | 64 | 70 | 105 | 33 | 44 | 66 | 98 | 113 |
| 17 | 49 | 60 | 80 | 113 | 34 | 50 | 57 | 66 | 125 |

From Table C.3, we obtain the correlation matrix shown in Table C.4. Its eigenvalues are 1.4178, 1.2831, 0.9148 and 0.3843. The first three principal components have a cumulative contribution of 90.4% (the first two account for only 67.5%), so we take $k = 3$. Table C.5 shows their unit eigenvectors.

Table C.4 Correlation matrix

| | x1 | x2 | x3 | x4 |
|---|---|---|---|---|
| x1 | 1 | 0.1818 | -0.3246 | -0.3052 |
| x2 | 0.1818 | 1 | -0.0840 | -0.0097 |
| x3 | -0.3246 | -0.0840 | 1 | -0.2859 |
| x4 | -0.3052 | -0.0097 | -0.2859 | 1 |

Table C.5 Unit eigenvectors

| | P1 | P2 | P3 |
|---|---|---|---|
| u1 | 0.7194 | 0.2130 | 0.2748 |
| u2 | 0.4222 | -0.0031 | -0.9018 |
| u3 | -0.5221 | 0.5632 | -0.3038 |
| u4 | -0.1779 | -0.7984 | -0.1375 |

Table C.6 shows the correlation coefficients between the principal components and the original variables.
From Table C.6, we observe that $P_1$, $P_2$ and $P_3$ mainly reflect the effects of $X_1$, $X_4$ and $X_2$, respectively (the largest absolute coefficient in each column). The correlation coefficients in Table C.6 are proportional to the eigenvectors in Table C.5: each column $P_j$ equals the corresponding unit eigenvector multiplied by $\sqrt{\lambda_j}$.

Table C.6 Correlation coefficients between principal components and original variables

| | P1 | P2 | P3 |
|---|---|---|---|
| x1 | 0.8566 | 0.2412 | 0.2629 |
| x2 | 0.5027 | -0.0035 | -0.8626 |
| x3 | -0.6217 | 0.6379 | -0.2906 |
| x4 | -0.2118 | -0.9044 | -0.1315 |
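
The eigen-analysis of Example C.2 can be sketched in the same spirit. This is a minimal illustration assuming Python with NumPy, starting from the correlation matrix in Table C.4 rather than recomputing it from Table C.3; the helper name `pca_from_correlation` is hypothetical. It uses `numpy.linalg.eigh`, which is meant for symmetric matrices and returns eigenvalues in ascending order, so they are re-sorted to follow Eq. (9). The 0.9 threshold is the selection rule stated in C.4.

```python
import numpy as np

# Correlation matrix of Table C.4.
R = np.array([
    [1.0, 0.1818, -0.3246, -0.3052],
    [0.1818, 1.0, -0.0840, -0.0097],
    [-0.3246, -0.0840, 1.0, -0.2859],
    [-0.3052, -0.0097, -0.2859, 1.0],
])


def pca_from_correlation(r, threshold=0.9):
    """Hypothetical helper following Eqs. (9)-(13) and the C_k >= threshold rule."""
    eigvals, eigvecs = np.linalg.eigh(r)    # symmetric input: real eigenpairs, ascending order
    order = np.argsort(eigvals)[::-1]       # Eq. (9): lambda_1 >= ... >= lambda_p
    lam = eigvals[order]
    U = eigvecs[:, order]                   # columns are unit eigenvectors, Eq. (10)

    c = lam / lam.sum()                     # Eq. (12): contribution of each component
    C = np.cumsum(c)                        # Eq. (13): cumulative contribution
    k = int(np.argmax(C >= threshold)) + 1  # smallest k with C_k >= threshold

    # corr(P_j, X_i) = u_ij * sqrt(lambda_j): scale column j by sqrt(lambda_j)
    loadings = U * np.sqrt(lam)
    return lam, U, c, C, k, loadings


lam, U, c, C, k, loadings = pca_from_correlation(R)
print("eigenvalues:", np.round(lam, 4))
print("cumulative contribution:", np.round(C, 3))
print("k =", k)
print(np.round(loadings[:, :k], 4))
```

Because the sign of an eigenvector is arbitrary, some columns may come out with signs opposite to Tables C.5 and C.6; flipping a column's sign does not change what the component represents, only the direction in which its scores are read.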