Reviewer Hon Keung Tony Ng


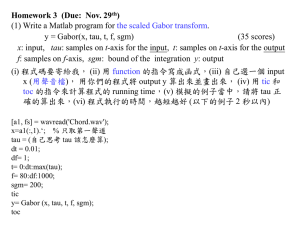

Cover letter:

Dear Editor,

We thank you and the reviewers for the constructive and helpful comments and suggestions, and we have revised our manuscript accordingly. The abstract has been structured into Background, Methods, Results, and Conclusions, and we have added an Authors’ contributions section. The following is a point-by-point response to the issues raised by the reviewers.

Sincerely,
Guimin Gao
University of Alabama at Birmingham

Response to Reviewer Dr. Hon Keung Tony Ng

Issue 1: The method of Wang et al. (2004) can be problematic because the numbers X1 and X2 are not independent when the threshold (tau) is set to be the 90th percentile. The authors may need to justify the use of the test procedure for two independent binomial proportions. Moreover, the tests considered in the manuscript (as well as Wang et al.) are only a few of the existing test procedures for this purpose. One may refer to Newcombe (1998) and some other subsequent work for reference.

To address this issue, we have added text (highlighted in yellow) to the Method section, subsection Development of the Tests (page 5). Our response to the independence issue raised by Dr. Ng is threefold. First, we discuss and evaluate the method of Wang et al. (2004) because we compare our new method with it in order to evaluate our new method. Moreover, the method of Wang et al. has been used by several investigators in lifespan studies in the field of aging, which makes it especially pertinent. Second, the method of Wang et al. uses the test procedures for two independent binomial proportions described by Mehrotra et al. (2003), and these procedures require X1 and X2 to be independent, where Xj (j = 1, 2) is the number of observations exceeding the threshold tau in the j-th group. We acknowledge that in the method of Wang et al., X1 and X2 are not independent when the threshold tau is set to the 90th percentile, and that this creates a theoretical problem.
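A small simulation can make this dependence concrete. The sketch below is only an illustration under assumed settings: two groups of 50 lifespans drawn from the same hypothetical normal distribution (mean 100, SD 20), with hypothetical helper names (`exceed_counts`, `pearson`, `simulate`). When tau is the pooled sample 90th percentile, exactly 10% of the pooled observations exceed it, so X1 + X2 is fixed and X1 and X2 are perfectly negatively correlated; when tau is fixed in advance, X1 and X2 behave as independent binomial counts.

```python
import math
import random
from statistics import NormalDist


def exceed_counts(group1, group2, tau):
    """Return (X1, X2): the number of lifespans above tau in each group."""
    return sum(y > tau for y in group1), sum(y > tau for y in group2)


def pearson(xs, ys):
    """Pearson correlation written out so it runs on any Python 3 version."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate(n_per_group=50, n_sims=2000, mu=100.0, sigma=20.0, seed=1):
    """Compare X1, X2 under a data-based threshold and under a fixed one."""
    rng = random.Random(seed)
    fixed_tau = NormalDist(mu, sigma).inv_cdf(0.9)  # hypothetical a priori threshold
    pct_x1, pct_x2, fix_x1, fix_x2, totals = [], [], [], [], set()
    for _ in range(n_sims):
        g1 = [rng.gauss(mu, sigma) for _ in range(n_per_group)]
        g2 = [rng.gauss(mu, sigma) for _ in range(n_per_group)]
        pooled = sorted(g1 + g2)
        n_top = len(pooled) // 10           # 10% of the pooled sample
        sample_tau = pooled[-n_top - 1]     # pooled sample 90th percentile
        x1, x2 = exceed_counts(g1, g2, sample_tau)
        pct_x1.append(x1)
        pct_x2.append(x2)
        totals.add(x1 + x2)
        f1, f2 = exceed_counts(g1, g2, fixed_tau)
        fix_x1.append(f1)
        fix_x2.append(f2)
    return pearson(pct_x1, pct_x2), pearson(fix_x1, fix_x2), totals


r_sample_tau, r_fixed_tau, totals = simulate()
print(f"corr(X1, X2), sample 90th percentile: {r_sample_tau:.3f}")
print(f"corr(X1, X2), fixed threshold:        {r_fixed_tau:.3f}")
print("values taken by X1 + X2 with sample threshold:", sorted(totals))
```

With continuous data the sample-threshold correlation is -1 by construction, which is the theoretical issue acknowledged here; whether it matters for type I error and power is a separate, empirical question.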
Nevertheless, on an empirical level, our simulations show that for the sample sizes we considered, this is not an apparent problem: the method of Wang et al. has high power and controls type I error quite well in simulation studies using sample sizes that are practical for lifespan studies. We have mentioned this in the manuscript. When an assumption is not strictly met, simulation studies (including estimation of power and type I error) are a reasonable way to evaluate the methods, as we have done in this manuscript. Further, the view that this theoretical concern is not of practical import is bolstered by the fact that this procedure, if one replaces the 90th percentile with the 50th, is just the ‘old-fashioned’ median test (Siegel and Castellan Jr. 1988), which has been advocated and used in this manner without concern (Siegel S, Castellan Jr. NJ: Nonparametric Statistics for the Behavioral Sciences. McGraw-Hill College, 1988, p. 200). Third, although X1 and X2 are not independent if we pick a sample quantile as the threshold, this is only one way to proceed; we have also offered results when the threshold is set in advance according to prior knowledge (in which case X1 and X2 are independent). We have mentioned this in the manuscript.

With respect to Newcombe (1998) and the fact that more test procedures for two independent binomial proportions can be found there, we acknowledge the point and believe that studying the performance of the tests offered by Newcombe will be a valuable endeavor for future research. Yet no single paper can study everything, and we believe that introducing the tests of Newcombe here is beyond the scope of the current manuscript.

Issue 2: In the simulation study, the underlying value of the threshold is assumed to be known and set to be 130. In real-life situations, this threshold is usually unknown, and it plays an important role in the performance of the test procedures studied in the manuscript.
One may consider a simulation study to investigate the effect of different choices of the threshold value.

Dr. Ng’s comment gives us an important opportunity to clarify this point, although we must respectfully disagree with Dr. Ng on one aspect. As people who participate extensively in model-organism longevity research, we can say with confidence that in real-life situations one usually does know the threshold of interest a priori. For mice, rats, dogs, fruit flies, and many other species, enough is known about their lifespans from past research that one could easily choose a threshold of interest a priori. By definition, we most often study the most commonly studied organisms, and for these organisms we have a very good idea of the threshold we would use to define ‘old’. However, we recognize that we will not have such knowledge in all cases. For this reason, when analyzing the simulated data, we also consider a threshold equal to the 90th percentile of the data, which allows an ad hoc, data-based determination of the threshold. We now clarify this on page 9 (section Delineation of Tests to Be Evaluated) of the revised manuscript.

Issue 3. 3.A. When defining the new variable Zi = I(Yi > tau)Yi, if there is a large number of lifespans < tau, it may raise problems for the rank-based (Wilcoxon-Mann-Whitney) test and the permutation test, since a large number of Zi are equal to zero. On the other hand, I suspect that the power of the test may not be good when the number of Yi > tau is small in either the treatment group or the control group.

We acknowledge that if the observed data satisfy H0,A (i.e., the proportions of subjects with Yi > tau in the two groups are equal), the new methods (tests for H0,C) may have lower power than the tests for H0,B if a large number of Zi are equal to zero in either group. We have shown this in our simulation studies (see Table 3 in the manuscript).
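For a single group, the sample quantities behind the three hypotheses can be computed directly from Zi. The sample analogue of E(Z) = P(Y > tau)E(Y | Y > tau) holds exactly: the mean of Z equals the proportion above tau times the mean of the values above tau. The sketch below is illustrative only. It assumes hypothetical lifespans and tau = 130 (the threshold used in the simulation study), and the function name `tail_summaries` is hypothetical. It also reports the share of zeros in Z, which is the zero inflation at issue here.

```python
def tail_summaries(lifespans, tau):
    """Sample quantities behind H0,A, H0,B and H0,C for one group."""
    z = [y if y > tau else 0.0 for y in lifespans]  # Zi = I(Yi > tau) * Yi
    above = [y for y in lifespans if y > tau]
    p_above = len(above) / len(lifespans)  # H0,A quantity: P(Y > tau)
    # H0,B quantity: E(Y | Y > tau); undefined when no lifespan exceeds tau
    mean_above = sum(above) / len(above) if above else float("nan")
    mean_z = sum(z) / len(z)  # H0,C quantity: E(Z)
    zero_share = sum(1 for v in z if v == 0.0) / len(z)  # zero inflation of Z
    return p_above, mean_above, mean_z, zero_share


# Hypothetical lifespans for one group (not data from the manuscript).
group = [90, 100, 110, 120, 125, 128, 131, 135, 140, 150]
p, m_above, m_z, zeros = tail_summaries(group, tau=130)
print(p, m_above, m_z, zeros)
print("mean(Z) equals p * mean(Y | Y > tau):", abs(m_z - p * m_above) < 1e-9)
```

When few lifespans exceed tau, `zero_share` approaches 1 and most of the information in Z sits in a handful of nonzero values. This is why the power of tests for H0,C can drop in that setting.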
If the observed data do not satisfy H0,A, then the group with the longer lifespan (group 1) usually has a higher proportion of subjects with Yi > tau than the other group (group 2). Therefore group 1 has a lower proportion of zeros among its Z values than group 2, which usually increases the power of the new methods (tests for H0,C) relative to the tests for H0,B. This is confirmed by our simulation studies (see Table 4). In brief, no test can have excellent power in all situations, especially in situations where departures from the null hypothesis are very small.

3.B. Here is an example. Let both the treatment group and the control group have 10 subjects, with 6 (9) subjects in the treatment (control) group having lifespan < 130, so that we have the following Z values:
Treatment: 0, 0, 0, 0, 0, 0, 190, 190, 190, 190
Control: 0, 0, 0, 0, 0, 0, 0, 0, 0, 200
It is clear that the treatment group has a longer lifespan, but the (new) test for H0,C does not correctly reject the null hypothesis of the two groups having equal maximum lifespan.

Let us offer an alternative view and reasoning here. Dr. Ng states, “It is clear that the treatment group has a longer lifespan.” This statement is assuredly true for the sample data provided if one defines ‘longer lifespan’ on the basis of a mean, a median, or the proportion above a lifespan of, say, 180. On the other hand, the statement is false if one defines ‘longer lifespan’ on the basis of the proportion above a lifespan of, say, 195. Moreover, one does not perform inferential tests to draw conclusions about samples. One conducts inferential tests to draw conclusions about populations from samples, and it is not at all clear from the data provided that the treatment population has a longer lifespan than the control population. Hence the basic premise of the argument this example is intended to support does not hold up, and the conclusion that “the (new) test for H0,C does not correctly reject the null hypothesis” is not supported.
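As a rough check on this example, the permutation procedure described in the response to Issue 4 can be applied to the Z values above. The sketch is not the code used for the manuscript. It assumes a pooled-variance two-sample t statistic (the response says only ‘T-test’), 1000 random permutations, and a fixed random seed, and the function names are hypothetical. Under these assumptions the observed t statistic is about 1.52 and the permutation p-value comes out near 0.3, well above 0.05.

```python
import math
import random


def pooled_t(x, y):
    """Two-sample Student t statistic with pooled variance (assumed variant)."""
    n1, n2 = len(x), len(y)
    m1, m2 = sum(x) / n1, sum(y) / n2
    ss = sum((v - m1) ** 2 for v in x) + sum((v - m2) ** 2 for v in y)
    sp2 = ss / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(sp2 * (1 / n1 + 1 / n2))


def permutation_t_test(treatment, control, n_rep=1000, seed=2024):
    """Permutation p-value: share of relabelled datasets with |T| >= |T0|."""
    rng = random.Random(seed)
    t0 = pooled_t(treatment, control)
    pooled = list(treatment) + list(control)
    n1 = len(treatment)
    hits = 0
    for _ in range(n_rep):
        rng.shuffle(pooled)  # first n1 after shuffling = random treatment group
        t = pooled_t(pooled[:n1], pooled[n1:])
        # tiny tolerance so floating-point noise does not drop exact ties with |T0|
        if abs(t) >= abs(t0) - 1e-9:
            hits += 1
    return t0, hits / n_rep


# Z values from Dr. Ng's example (tau = 130).
treatment_z = [0] * 6 + [190] * 4
control_z = [0] * 9 + [200]
t0, p_value = permutation_t_test(treatment_z, control_z)
print(f"T0 = {t0:.3f}, permutation p-value = {p_value:.3f}")
```

Enumerating all 184,756 possible splits instead of sampling 1000 of them gives an exact permutation p-value of 56056/184756, about 0.30, for this statistic.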
For the example above, neither the traditional method (Wilcoxon-Mann-Whitney) for testing H0,B nor the method for testing H0,C rejects the null hypothesis. We therefore cannot conclude that the new method for testing H0,C has lower power in this example; that is, we do not know that the null hypothesis should have been rejected.

Issue 4. Please explain clearly how the permutation test is done.

We thank Dr. Ng for pointing out the need for greater clarity here. To address this issue, we have added a paragraph (highlighted in yellow on page 10, Method section, subsection Delineation of Tests to Be Evaluated). Suppose that in the observed dataset the treatment (control) group has n1 (n2) subjects. In the permutation test, we pool all n1 + n2 subjects and generate 1000 replicated datasets. For each replicated dataset, we randomly sample n1 subjects from the n1 + n2 subjects and assign them to the treatment group, and assign the remaining n2 subjects to the control group. We run a t-test on the observed dataset and on each of the 1000 replicated datasets. Letting T0 be the t statistic for the observed dataset, the p-value is calculated as the proportion of replicated datasets whose absolute t statistic is greater than or equal to the absolute value of T0.

Issue 5. In Table 2, the authors mentioned “The bolded values are those simulated type I error rate which are significantly higher than the nominal alpha level.” Please give an explicit definition of “significantly higher”. It is confusing that 0.014 is bolded but 0.017 and 0.016 are not bolded in the same column (for N = 50, alpha = 0.01).

We have added a definition under Table 2: The bolded values are those simulated type I error rates that are significantly higher than the nominal alpha level at the two-tailed 95% confidence level (i.e., the lower bound of the confidence interval is higher than alpha). Note that for the permutation tests we used 1000 replicated datasets and for the other tests we used 5000 replicated datasets.
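The bolding rule can be checked with a short calculation. Table 2 does not state which interval was used, so the sketch below assumes a normal-approximation (Wald) interval for a simulated rejection rate. Following our explanation of the bolding, it also assumes that 0.014 comes from a test evaluated with 5000 replicated datasets and that 0.017 and 0.016 come from permutation tests evaluated with 1000. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def wald_interval(rate, n_rep, level=0.95):
    """Normal-approximation interval for a rejection rate from n_rep datasets."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% level
    half_width = z * sqrt(rate * (1 - rate) / n_rep)
    return rate - half_width, rate + half_width


def significantly_above(rate, n_rep, alpha):
    """Bold if the lower bound of the interval exceeds the nominal alpha."""
    lower, _ = wald_interval(rate, n_rep)
    return lower > alpha


alpha = 0.01
for rate, n_rep in [(0.014, 5000), (0.017, 1000), (0.016, 1000)]:
    lower, upper = wald_interval(rate, n_rep)
    flag = significantly_above(rate, n_rep, alpha)
    print(f"rate={rate}, n_rep={n_rep}: CI=({lower:.4f}, {upper:.4f}), bold={flag}")
```

Under these assumptions, the lower bound for 0.014 with 5000 replicates is about 0.0107, above 0.01. The lower bounds for 0.017 and 0.016 with 1000 replicates are about 0.0090 and 0.0082, below 0.01. With 5000 replicates, a rate of 0.017 would be bolded.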
The reason 0.014 is bolded while 0.017 and 0.016 are not is that we used 1000 replicated datasets for the permutation tests and 5000 for the other tests. The number of replicated datasets affects the width of the confidence intervals: with fewer replicates the interval is wider, so a larger simulated rate can still have a lower bound below the nominal level.

Response to Reviewer Dr. Huixia Wang

Issue 1: Note that E(Z) = P(Y > tau)E(Y | Y > tau). Therefore the new test for H0,C is really testing whether P(Y > tau | X = 1)E(Y | Y > tau, X = 1) = P(Y > tau | X = 0)E(Y | Y > tau, X = 0), while the method for H0,B is testing whether E(Y | Y > tau, X = 1) = E(Y | Y > tau, X = 0), and the method for H0,A is testing whether P(Y > tau | X = 1) = P(Y > tau | X = 0). The difference in E(Z) between groups consists of two parts: the probability of Y exceeding tau, and the expectation of Y for the subpopulation exceeding tau. The difference in the first part is the focus of H0,A, while the difference in the second part is the interest of H0,B. The Z variable as defined resembles zero-inflated data. Dominici and Zeger (2005) studied a similar problem. The authors may consider discussing such connections in the paper.

These comments are very useful in helping us explain our methods theoretically. Following them, we have added text (highlighted in yellow on pages 7-8, subsection Development of the Tests) that describes the properties of the test for H0,C and discusses the connections among the tests for H0,A, H0,B, and H0,C. We have also discussed the connections between the method of Dominici and Zeger (2005) and our methods. The method of Dominici and Zeger (2005) estimates the mean difference of a nonnegative random variable (Y) between two groups. Our methods test the mean difference of random variables (Y) that are greater than the threshold (more details in the manuscript).

Issue 2. Page 8, line 15. It seems surprising to me that the method for testing H0,B has a larger type I error than the new method for testing H0,C in simulation 1.
We checked the results for simulation 1 (in Table 2) and realized that the type I error of the new method for testing H0,C is comparable to that of the method for testing H0,B. We have stated this in the manuscript (highlighted in yellow on page 11, Results section) and deleted the original statement “the new methods for testing H0,C have lower type I error rates than the corresponding methods for tests of H0,B.”

Issue 3. The discussion of real data analysis is too brief. The author may consider providing some graphics, e.g., histograms, to help readers understand the sources of differences between two groups. Quantities such as the proportion of observations exceeding tau and some estimate of E(Y | Y > tau) in each group may also be useful for demonstration.

We have added histograms (Figures 3 and 4) to describe the two real datasets, together with descriptions (highlighted in yellow on page 13, section ILLUSTRATION WITH REAL DATA): from Figure 3, we can see that the upper tails of the histograms of the two groups are different, and similar results can be found in Figure 4. We have also added descriptions of the proportions of observations greater than tau, the sample means of the observations greater than tau, and the sample means of the Z values in the two corresponding groups (see text highlighted in yellow on pages 13-14, section ILLUSTRATION WITH REAL DATA).

Issue 4. Table 2. The confidence intervals for type I errors should be included.

We have added 95% confidence intervals for the type I errors in Table 2.

Issue 5. Table 1. The parameters in simulation 1 are exactly the same as in simulation 3. So are the plots.

We have added a proportion parameter rj (j = 0, 1). In simulation 1, r1 = 0.9, and in simulation 3, r1 = 0.8. The corresponding plots are different (see Table 1).

Issue 6. Page 5, last line. “Sample mean” of Z should be population mean of Z.

We have changed the words “sample mean” to “population mean”.

Issue 7. Page 9, line 18. “90th percentile” should be “the 90th percentile”.

We have added “the” before “90th percentile”.