The computer learner corpus: a versatile new source of data for SLA research

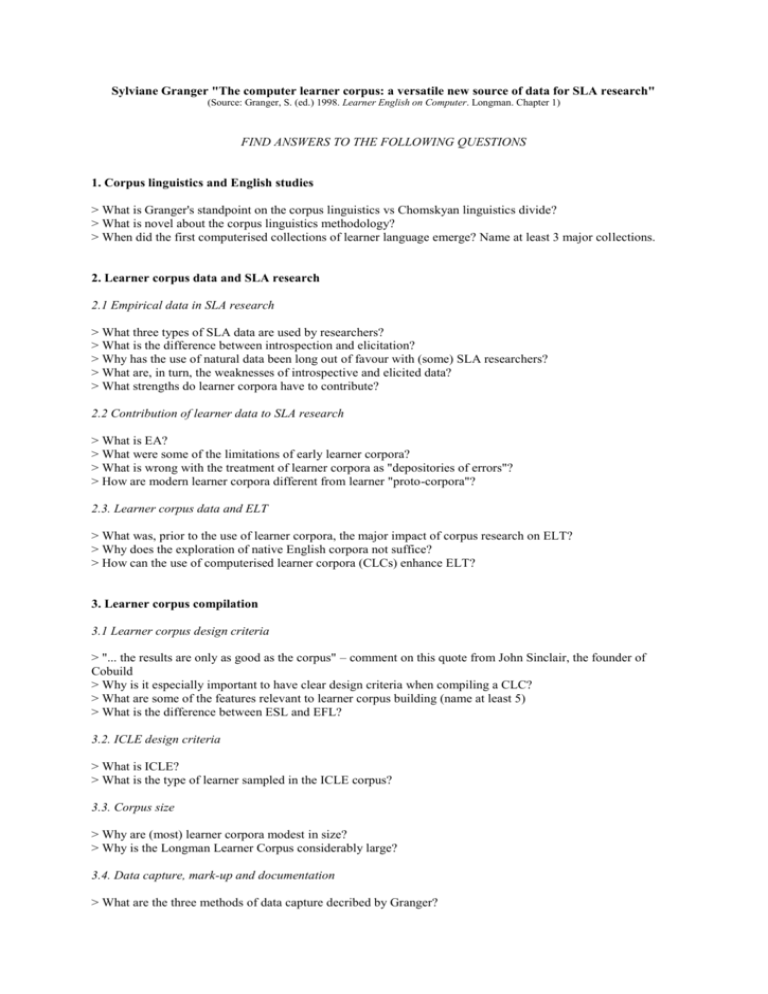

Sylviane Granger, "The computer learner corpus: a versatile new source of data for SLA research"
(Source: Granger, S. (ed.) 1998. Learner English on Computer. Longman. Chapter 1)

FIND ANSWERS TO THE FOLLOWING QUESTIONS

1. Corpus linguistics and English studies
> What is Granger's standpoint on the corpus linguistics vs Chomskyan linguistics divide?
> What is novel about the corpus linguistics methodology?
> When did the first computerised collections of learner language emerge? Name at least 3 major collections.

2. Learner corpus data and SLA research

2.1 Empirical data in SLA research
> What three types of SLA data are used by researchers?
> What is the difference between introspection and elicitation?
> Why has the use of natural data long been out of favour with (some) SLA researchers?
> What are, in turn, the weaknesses of introspective and elicited data?
> What strengths do learner corpora have to contribute?

2.2 Contribution of learner data to SLA research
> What is EA?
> What were some of the limitations of early learner corpora?
> What is wrong with the treatment of learner corpora as "depositories of errors"?
> How are modern learner corpora different from learner "proto-corpora"?

2.3 Learner corpus data and ELT
> What was, prior to the use of learner corpora, the major impact of corpus research on ELT?
> Why does the exploration of native English corpora not suffice?
> How can the use of computerised learner corpora (CLCs) enhance ELT?

3. Learner corpus compilation

3.1 Learner corpus design criteria
> "... the results are only as good as the corpus": comment on this quote from John Sinclair, the founder of Cobuild.
> Why is it especially important to have clear design criteria when compiling a CLC?
> What are some of the features relevant to learner corpus building? (Name at least 5.)
> What is the difference between ESL and EFL?

3.2 ICLE design criteria
> What is ICLE?
> What type of learner is sampled in the ICLE corpus?

3.3 Corpus size
> Why are (most) learner corpora modest in size?
> Why is the Longman Learner Corpus considerably larger?

3.4 Data capture, mark-up and documentation
> What are the three methods of data capture described by Granger?
> Why is proofreading an obstacle when compiling a CLC?
> Do learner corpora need to be extensively "marked up" (= textually annotated)?
> Why is documentation of a learner corpus a necessity?

4. Computer-aided linguistic analysis

4.1 Contrastive Interlanguage Analysis
> What is Contrastive Interlanguage Analysis?
> What can be gained by comparing native and non-native performance data?
> What is a control corpus and why must it be characterised by "comparability of text-type"?
> Within the ICLE Project: what is LOCNESS and why is it sometimes criticised as a control corpus?
> What can be gained from comparing non-native corpora originating from learners with different L1s?
> What is the difference between a "cross-linguistic invariant" and a "transfer-related feature"?
> What is CA? Why is it essential to combine it with CIA? Is this a straightforward task, according to Granger?

4.2 Automated linguistic analysis

4.2.1 Linguistic software tools
> What is the difference between text retrieval tools and annotation tools?
> Is error tagging computerised?

4.2.2 CLC methodology
> What is the difference between hypothesis-finding and hypothesis-testing approaches in CLC research? What are their respective limitations / risks?
> Is "statistical significance" always of pedagogical use?
> What are the linguistic limitations of a computerised (= "hypothesis-finding") approach?

5. Conclusion
> In summary, what is the major promise of CLCs with respect to SLA theory and practice?

Notes
> Are free compositions natural data or elicited data?
> Within ICLE, what would be a good candidate for a control corpus of texts written by professionals?
> What is the difference between 'underuse' (sometimes also called 'underrepresentation', PK) and 'avoidance', in the context of learner language research?