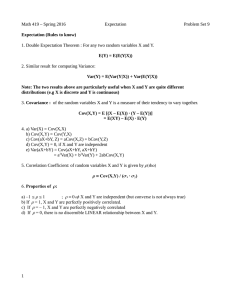

expectation


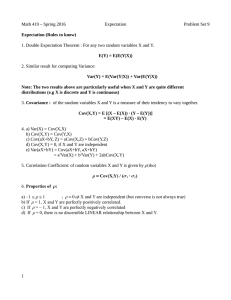

Chapter 3 EXPECTED VALUE OF A DISCRETE RANDOM VARIABLE

3.1 Expectation

One of the most important notions of probability theory is that of the expectation of a random variable. If $X$ is a discrete random variable taking values $x_1, x_2, \ldots$, then the expectation or the expected value of $X$, denoted by $E[X]$, is defined by

$$E[X] = \sum_i x_i \, P(X = x_i).$$

In words, the expected value of a random variable is a weighted sum of the values it takes, weighted by their probabilities of occurrence. For example, if $X$ takes the values 0 and 1, each with probability $1/2$, then

$$E[X] = 0 \cdot \tfrac{1}{2} + 1 \cdot \tfrac{1}{2} = \tfrac{1}{2},$$

which is the average of the values that $X$ can take. Now suppose $X$ takes the value 1 with probability $2/3$ and 0 with probability $1/3$. In this case,

$$E[X] = 0 \cdot \tfrac{1}{3} + 1 \cdot \tfrac{2}{3} = \tfrac{2}{3},$$

which is the weighted average of the possible values, where the value 1 is given twice as much weight as the value 0.

Another motivation for the definition of the expected value is the frequency interpretation. Suppose the random experiment of interest is repeated $N$ times, where $N$ is very large. Then approximately $N \, P(X = x_i)$ of the repetitions will result in the outcome $x_i$, so the average of the observed values over the $N$ repetitions is approximately

$$\frac{\sum_i N \, P(X = x_i) \, x_i}{N} = \sum_i x_i \, P(X = x_i),$$

which coincides with the definition of $E[X]$.

Example: Find $E[X]$ where $X$ is the outcome when we roll a fair die. Since $p(i) = 1/6$ for all $i = 1, 2, \ldots, 6$, we obtain

$$E[X] = 1 \cdot \tfrac{1}{6} + 2 \cdot \tfrac{1}{6} + 3 \cdot \tfrac{1}{6} + 4 \cdot \tfrac{1}{6} + 5 \cdot \tfrac{1}{6} + 6 \cdot \tfrac{1}{6} = \tfrac{7}{2}.$$

Example: Consider the Bernoulli random variable taking the value 0 in case of failure and 1 in case of success. If the probability of success is $p$, then the expected value of the Bernoulli random variable is

$$E[X] = 0 \cdot (1 - p) + 1 \cdot p = p.$$

3.2 Properties of the expected value

1. If $a$ and $b$ are constants and $X$ is a random variable, then $E[aX + b] = a\,E[X] + b$.
2. If $X$ and $Y$ are two random variables, then $E[X + Y] = E[X] + E[Y]$.
3. In general, if $X_1, X_2, \ldots, X_n$ are $n$ random variables, then $E[X_1 + X_2 + \cdots + X_n] = E[X_1] + E[X_2] + \cdots + E[X_n]$.
4. If $g$ is a function, then $E[g(X)] = \sum_i g(x_i) \, P(X = x_i)$.
5. In particular, if $g(x) = x^n$, the $n$-th moment of $X$ is given by $E[X^n] = \sum_i x_i^n \, P(X = x_i)$.

Example: Consider a binomial random variable $X$ consisting of $n$ Bernoulli trials, each with success probability $p$. Then $X = X_1 + X_2 + \cdots + X_n$, where each $X_i$ is a Bernoulli trial taking the value 0 or 1. Using Property 3,

$$E[X] = E[X_1] + E[X_2] + \cdots + E[X_n] = np.$$

3.3 Variance

The expected value $E[X]$ of a random variable $X$ is only a weighted average of the possible values of $X$; $X$ itself takes values spread around $E[X]$. One way of measuring this variation is through the variance, which measures the deviation of the random variable from its expected value (or mean). If $X$ is a random variable with mean $\mu$, then the variance of $X$, denoted by $\mathrm{Var}(X)$, is defined by

$$\mathrm{Var}(X) = E[(X - \mu)^2].$$

Alternatively,

$$\mathrm{Var}(X) = E[(X - \mu)^2] = E[X^2 - 2\mu X + \mu^2] = E[X^2] - 2\mu\,E[X] + E[\mu^2] = E[X^2] - \mu^2,$$

where the last step uses $E[X] = \mu$ and $E[\mu^2] = \mu^2$.

Example: Compute $\mathrm{Var}(X)$ when $X$ represents the outcome of a fair die. Since $P(X = i) = 1/6$,

$$E[X^2] = \sum_{i=1}^{6} i^2 \, P(X = i) = \tfrac{91}{6}.$$

We had already computed $\mu = E[X] = 7/2$. Thus,

$$\mathrm{Var}(X) = \tfrac{91}{6} - \left(\tfrac{7}{2}\right)^2 = \tfrac{35}{12}.$$

3.3.1 Covariance

We showed earlier that the expectation of a sum is the sum of the expectations. The same does not hold in general for the variance of a sum. In order to find the variance of a sum, we first need to introduce the concept of covariance. Given two random variables $X$ and $Y$, the covariance $\mathrm{Cov}(X, Y)$ is defined by

$$\mathrm{Cov}(X, Y) = E[(X - \mu_x)(Y - \mu_y)],$$

where $\mu_x$ and $\mu_y$ are the means of $X$ and $Y$, respectively. Alternatively,

$$\mathrm{Cov}(X, Y) = E[XY] - E[X]\,E[Y].$$

Thus $\mathrm{Cov}(aX, Y) = a\,\mathrm{Cov}(X, Y)$ and $\mathrm{Cov}(X, X) = \mathrm{Var}(X)$. Moreover, $\mathrm{Cov}(X + Z, Y) = \mathrm{Cov}(X, Y) + \mathrm{Cov}(Z, Y)$.

Independence: If $X$ and $Y$ are independent, then $E[XY] = E[X]\,E[Y]$, and hence $\mathrm{Cov}(X, Y) = 0$.

Formula for the variance of a sum:

$$\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y) + 2\,\mathrm{Cov}(X, Y).$$

Example: Let $X$ be a binomial random variable consisting of $n$ independent Bernoulli trials, $X = X_1 + X_2 + \cdots + X_n$. Then

$$\mathrm{Var}(X) = \sum_{i=1}^{n} \mathrm{Var}(X_i) + 2 \sum_{i < j} \mathrm{Cov}(X_i, X_j) = \sum_{i=1}^{n} \mathrm{Var}(X_i),$$

since $X_i$ and $X_j$ are independent when $i \neq j$. Now,

$$\mathrm{Var}(X_i) = E[X_i^2] - (E[X_i])^2 = 1^2 \cdot p - p^2 = p(1 - p).$$

So $\mathrm{Var}(X) = np(1 - p)$.
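The die and binomial results in this chapter can be checked by direct computation. The following is a minimal sketch, assuming a probability mass function is stored as a dictionary mapping each value to its probability. The helper names `expectation`, `variance` and `binomial_pmf` are illustrative and do not come from the text, and the binomial probabilities use the standard formula $P(X = k) = \binom{n}{k} p^k (1 - p)^{n - k}$, which the chapter does not state. Exact fractions are used so the results can be compared with the hand calculations without rounding.

```python
from fractions import Fraction
from math import comb


def expectation(pmf, g=lambda x: x):
    """E[g(X)] = sum_i g(x_i) P(X = x_i), for a pmf given as {value: probability}."""
    return sum(g(x) * p for x, p in pmf.items())


def variance(pmf):
    """Var(X) = E[X^2] - (E[X])^2."""
    mean = expectation(pmf)
    return expectation(pmf, lambda x: x * x) - mean ** 2


def binomial_pmf(n, p):
    """Standard binomial pmf (an assumption, not stated in the text)."""
    return {k: comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)}


# Fair die: each face 1..6 has probability 1/6.
die = {i: Fraction(1, 6) for i in range(1, 7)}
die_mean = expectation(die)
die_var = variance(die)

# Binomial with illustrative parameters n = 10, p = 1/3.
n, p = 10, Fraction(1, 3)
binom = binomial_pmf(n, p)
binom_mean = expectation(binom)
binom_var = variance(binom)

print(die_mean, die_var)      # 7/2 35/12
print(binom_mean, binom_var)  # 10/3 20/9
```

The binomial values agree with $np$ and $np(1 - p)$ for the chosen parameters, here obtained from the pmf directly rather than from the decomposition into Bernoulli trials.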