Chapter 5

advertisement

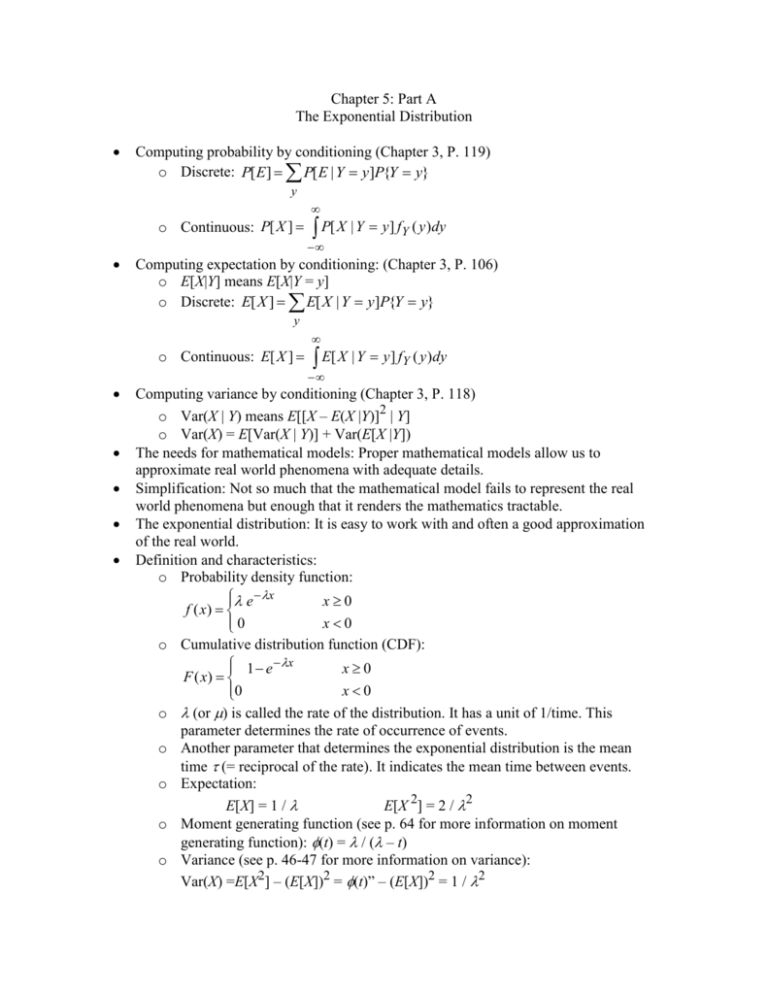

Chapter 5: Part A

The Exponential Distribution

Computing probability by conditioning (Chapter 3, P. 119)

o Discrete: $P[E] = \sum_{y} P[E \mid Y = y]\, P\{Y = y\}$

o Continuous: $P[E] = \int_{-\infty}^{\infty} P[E \mid Y = y]\, f_Y(y)\, dy$

Computing expectation by conditioning: (Chapter 3, P. 106)

o E[X|Y] is the function of Y that takes the value E[X|Y = y] when Y = y

o Discrete: $E[X] = \sum_{y} E[X \mid Y = y]\, P\{Y = y\}$

o Continuous: $E[X] = \int_{-\infty}^{\infty} E[X \mid Y = y]\, f_Y(y)\, dy$

Computing variance by conditioning (Chapter 3, P. 118)

o Var(X | Y) means $E\big[(X - E[X \mid Y])^2 \mid Y\big]$

o Var(X) = E[Var(X | Y)] + Var(E[X |Y])
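The conditioning identities above can be checked on a small discrete example. The sketch below assumes a made-up joint pmf (the `joint` table is purely illustrative and not from the text) and uses exact fractions so the two sides can be compared without rounding.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y), chosen only for illustration.
joint = {
    (0, 0): Fraction(1, 8),
    (1, 0): Fraction(3, 8),
    (0, 1): Fraction(1, 4),
    (2, 1): Fraction(1, 4),
}

def prob_y(y):
    """Marginal P{Y = y}."""
    return sum(p for (_, yy), p in joint.items() if yy == y)

def cond_moment(y, power):
    """E[X**power | Y = y]."""
    return sum(x**power * p for (x, yy), p in joint.items() if yy == y) / prob_y(y)

ys = sorted({y for (_, y) in joint})

# Direct computation from the joint pmf.
mean_direct = sum(x * p for (x, _), p in joint.items())
var_direct = sum(x**2 * p for (x, _), p in joint.items()) - mean_direct**2

# E[X] = sum over y of E[X | Y = y] P{Y = y}
mean_cond = sum(cond_moment(y, 1) * prob_y(y) for y in ys)

# Var(X) = E[Var(X | Y)] + Var(E[X | Y])
mean_of_var = sum((cond_moment(y, 2) - cond_moment(y, 1)**2) * prob_y(y) for y in ys)
var_of_mean = sum(cond_moment(y, 1)**2 * prob_y(y) for y in ys) - mean_cond**2
var_cond = mean_of_var + var_of_mean

print(mean_direct, mean_cond)
print(var_direct, var_cond)
```

Both routes give the same mean and the same variance for this table, which is what the two identities assert.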

The need for mathematical models: Proper mathematical models allow us to approximate real-world phenomena in adequate detail.

Simplification: Simplify enough that the mathematics becomes tractable, but not so much that the model fails to represent the real-world phenomenon.

The exponential distribution: It is easy to work with and often a good approximation

of the real world.

Definition and characteristics:

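o Probability density function:

$$f(x) = \begin{cases} \lambda e^{-\lambda x}, & x \ge 0 \\ 0, & x < 0 \end{cases}$$

o Cumulative distribution function (CDF):

$$F(x) = \begin{cases} 1 - e^{-\lambda x}, & x \ge 0 \\ 0, & x < 0 \end{cases}$$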

o $\lambda$ (or $\mu$) is called the rate of the distribution. It has units of 1/time. This parameter determines the rate of occurrence of events.

o Equivalently, the exponential distribution can be specified by its mean time (= reciprocal of the rate, $1/\lambda$), which is the mean time between events.

o Expectation:

$E[X] = 1/\lambda$

$E[X^2] = 2/\lambda^2$

o Moment generating function (see p. 64 for more information on moment generating functions): $\phi(t) = E[e^{tX}] = \lambda / (\lambda - t)$, for $t < \lambda$

o Variance (see p. 46-47 for more information on variance):

$\mathrm{Var}(X) = E[X^2] - (E[X])^2 = \phi''(0) - (E[X])^2 = 2/\lambda^2 - 1/\lambda^2 = 1/\lambda^2$
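As a rough numerical check of the moment formulas, the first two moments can be read off the moment generating function by differentiating it at t = 0. The sketch below uses central finite differences; the rate value and the step size `h` are arbitrary illustrative choices.

```python
def mgf(t, lam):
    """MGF of an exponential variable with rate lam, valid for t < lam."""
    return lam / (lam - t)

def first_derivative(f, x, h=1e-4):
    """Central-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

def second_derivative(f, x, h=1e-4):
    """Central-difference estimate of f''(x)."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h**2

lam = 2.0  # hypothetical rate, chosen only for illustration
phi = lambda t: mgf(t, lam)

first_moment = first_derivative(phi, 0.0)    # should be close to 1/lam
second_moment = second_derivative(phi, 0.0)  # should be close to 2/lam**2
variance = second_moment - first_moment**2   # should be close to 1/lam**2

print(first_moment, second_moment, variance)
```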

o Properties:

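Memoryless: $P\{X > s + t \mid X > t\} = P\{X > s\}$ for all $s, t \ge 0$

By the definition of conditional probability, this says

$$P\{X > s + t \mid X > t\} = \frac{P\{X > s + t,\ X > t\}}{P\{X > t\}} = P\{X > s\}$$

Since $(X > s + t) \subset (X > t)$, we have $P\{X > s + t,\ X > t\} = P\{X > s + t\}$, so the property is equivalent to

$$P\{X > s + t\} = P\{X > t\}\, P\{X > s\}$$

For the exponential distribution: $e^{-\lambda (s + t)} = e^{-\lambda s} e^{-\lambda t}$, hence

$$P\{X > s + t \mid X > t\} = \frac{e^{-\lambda s} e^{-\lambda t}}{e^{-\lambda t}} = e^{-\lambda s} = P\{X > s\}$$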

Example: Michael Jordan scores an average of 1 basket per 3.5 minutes. a) If he fails to score in the first half, how long on average would it take him to score his first basket in the second half? b) What is the probability that he would not score for an entire game (48 minutes)?

a) 3.5 minutes (memoryless)

b) With $\lambda = 1/3.5$ per minute and $t = 48$ minutes: $P\{X > 48\} = 1 - P\{X \le 48\} = 1 - F(48) = 1 - (1 - e^{-\lambda t}) = e^{-48/3.5} \approx 1.1 \times 10^{-6}$
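The arithmetic in part b), and the memoryless property behind part a), can be reproduced in a few lines. This is a minimal sketch; the helper name `exp_survival` and the values of `s` and `t` are illustrative choices, not from the text.

```python
import math

def exp_survival(rate, t):
    """P{X > t} for an exponential random variable with the given rate."""
    return math.exp(-rate * t)

rate = 1 / 3.5     # baskets per minute
game_length = 48   # minutes

# Part b): probability of no basket in a whole game.
p_no_score = exp_survival(rate, game_length)

# Memoryless check: P{X > s + t | X > t} should equal P{X > s}.
s, t = 5.0, 24.0   # arbitrary illustrative values, in minutes
conditional = exp_survival(rate, s + t) / exp_survival(rate, t)

print(p_no_score)
print(conditional, exp_survival(rate, s))
```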

The exponential distribution is the only continuous distribution possessing the memoryless property.

Remaining lifetime after t years:

In general: $P\{\text{lifetime} > s + t \mid \text{lifetime} > t\} = \dfrac{1 - F(s + t)}{1 - F(t)}$

For exp. distribution: $P\{\text{lifetime} > s + t \mid \text{lifetime} > t\} = 1 - F(s)$

Failure rate r(t): The conditional probability density that a t-year-old item will fail.

In general: $r(t) = \dfrac{f(t)}{1 - F(t)}$

Exponential distribution: $r(t) = \lambda e^{-\lambda t} / e^{-\lambda t} = \lambda$
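A small sketch of the general failure-rate formula, evaluated for an exponential lifetime to show that the result does not depend on the age t. The rate value and the sample ages are hypothetical.

```python
import math

def failure_rate(pdf, cdf, t):
    """r(t) = f(t) / (1 - F(t)) for a lifetime with the given pdf and cdf."""
    return pdf(t) / (1.0 - cdf(t))

lam = 0.25  # hypothetical failure rate per year

def exp_pdf(t):
    return lam * math.exp(-lam * t)

def exp_cdf(t):
    return 1.0 - math.exp(-lam * t)

# The failure rate at several ages; each value should equal lam.
ages = (0.0, 1.0, 5.0, 20.0)
rates_by_age = [failure_rate(exp_pdf, exp_cdf, t) for t in ages]
print(rates_by_age)
```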

Problems: 1, 2, 4, and 5

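For two independent exponential random variables $X_1$ and $X_2$ with rates $\lambda_1$ and $\lambda_2$:

$$P\{X_i < X_j\} = \frac{\lambda_i}{\lambda_i + \lambda_j}, \quad i, j = 1, 2,\ i \ne j$$

For N independent exponential random variables with rates $\lambda_1, \lambda_2, \ldots, \lambda_N$, the minimum is itself exponential with rate $\sum_i \lambda_i$:

$$P\{\min(X_1, X_2, \ldots, X_N) > x\} = \prod_i e^{-\lambda_i x} = e^{-\left(\sum_i \lambda_i\right) x}$$

Its mean is therefore $1 / \sum_i \lambda_i \le 1 / \lambda_{\max}$, where $\lambda_{\max}$ is the largest of the rates.

The probability that $X_i$ is the smallest:

$$P\{X_i = \min_j X_j\} = \frac{\lambda_i}{\sum_j \lambda_j}$$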
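The closed-form results for the minimum of independent exponentials can be compared against a quick simulation. This is only a sketch: the three rates, the seed, and the trial count are illustrative choices.

```python
import random

def prob_is_min(rates, i):
    """P{X_i is the smallest} = rate_i / sum of all rates."""
    return rates[i] / sum(rates)

def mean_of_min(rates):
    """Mean of the minimum, which is exponential with rate sum(rates)."""
    return 1.0 / sum(rates)

rates = [1 / 13, 1 / 15, 1 / 14]  # illustrative rates per minute

# Seeded Monte Carlo estimate of the same two quantities.
rng = random.Random(0)
n_trials = 100_000
wins = 0
total_min = 0.0
for _ in range(n_trials):
    draws = [rng.expovariate(r) for r in rates]
    smallest = min(draws)
    total_min += smallest
    if draws[0] == smallest:
        wins += 1

sim_prob = wins / n_trials
sim_mean = total_min / n_trials

print(prob_is_min(rates, 0), sim_prob)
print(mean_of_min(rates), sim_mean)
```

The simulated values should land close to the exact ones, with the gap shrinking as `n_trials` grows.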

Example 5.3

Example: A facility performs 2 services for each customer. The first service can only be performed by clerk #1, and the second service can then be performed by either clerk #2 or clerk #3. Assume that a customer goes to either clerk with equal probability if both clerks #2 and #3 are free, and that the service times are exponentially distributed with mean service times of 13, 15, and 14 minutes per customer for clerks #1, #2, and #3, respectively. When you arrive at 12 pm there is one customer (Mr. Jones) ahead of you, being served by clerk #1. Determine your expected departure time.

$E[t_{\text{departure}}] = E[t_{\text{wait for service 1}}] + E[t_{\text{service 1}}] + E[t_{\text{wait for service 2}}] + E[t_{\text{service 2}}]$

$= E[t_{w1}] + E[t_{s1}] + E[t_{w2}] + E[t_{s2}]$

$E[t_{w1}] = E[t_{s1}] = 13$, $E[t_{w2}] = 0$ (why?)

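[Tree diagram: Jones goes to clerk #2 or clerk #3 with probability 1/2 each. If Jones is at #2, you finish service 1 before he finishes with probability $\lambda_1/(\lambda_1 + \lambda_2)$ and go to #3; otherwise, with probability $\lambda_2/(\lambda_1 + \lambda_2)$, both clerks are free and you choose #2 or #3 with probability 1/2 each. The branch with Jones at #3 is the same with $\lambda_3$ in place of $\lambda_2$ and the clerks swapped.]

Total Expectation, with $\lambda_1 = 1/13$, $\lambda_2 = 1/15$, $\lambda_3 = 1/14$ per minute:

$$E[t_{s2}] = \frac{1}{2}\left[\frac{\lambda_1}{\lambda_1 + \lambda_2}\cdot\frac{1}{\lambda_3} + \frac{\lambda_2}{\lambda_1 + \lambda_2}\left(\frac{1}{2}\cdot\frac{1}{\lambda_2} + \frac{1}{2}\cdot\frac{1}{\lambda_3}\right)\right] + \frac{1}{2}\left[\frac{\lambda_1}{\lambda_1 + \lambda_3}\cdot\frac{1}{\lambda_2} + \frac{\lambda_3}{\lambda_1 + \lambda_3}\left(\frac{1}{2}\cdot\frac{1}{\lambda_2} + \frac{1}{2}\cdot\frac{1}{\lambda_3}\right)\right] = 14.496$$

$E[t_{\text{departure}}] = 13 + 13 + 0 + 14.496 = 40.496$ minutes after 12 pm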
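The total-expectation calculation for the clerk example can be reproduced numerically. The sketch below follows the same conditioning (which clerk Jones chose, then whether you finish service 1 before Jones finishes service 2); the function and variable names are illustrative.

```python
# Mean service times in minutes for clerks #1, #2, #3, as given in the example.
mean_time = {1: 13.0, 2: 15.0, 3: 14.0}
rate = {clerk: 1.0 / m for clerk, m in mean_time.items()}

def expected_second_service(jones_clerk, free_clerk):
    """E[your service-2 time | Jones went to jones_clerk]."""
    # You start service 1 at the moment Jones starts service 2, so this is
    # the probability that your service 1 ends before his service 2 does.
    p_jones_still_busy = rate[1] / (rate[1] + rate[jones_clerk])
    # If Jones is already gone, you pick clerk #2 or #3 with probability 1/2.
    both_free = 0.5 * mean_time[2] + 0.5 * mean_time[3]
    return (p_jones_still_busy * mean_time[free_clerk]
            + (1.0 - p_jones_still_busy) * both_free)

e_ts2 = 0.5 * expected_second_service(2, 3) + 0.5 * expected_second_service(3, 2)

# Wait for Jones at clerk #1, your own service 1, no wait for service 2.
e_departure = mean_time[1] + mean_time[1] + 0.0 + e_ts2

print(round(e_ts2, 3), round(e_departure, 3))
```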

Problems: 5-6, 15, 16, 20, 21, 22, 29, 30, and 32

Chapter 5: Part B

The Poisson Process

Poisson R.V. (Chapter 2, P. 32-33): It can be used to approximate the binomial R.V. when n is large and p is small, with $\lambda = np$.

$$p(i) = P\{X = i\} = e^{-\lambda}\,\frac{\lambda^i}{i!}, \quad i = 0, 1, 2, \ldots$$

Examples:

o 2.11 (p. 33)

$P\{X = 0\} = e^{-3} = 0.05$

What about the probability that an accident would occur today?

$P\{X \ge 1\} = 1 - e^{-3} = 0.95$ (same as the exponential distribution: the probability that the first accident would occur sometime today)

Or we can do the following:

0.95

o 2.12 (p. 33): Use both binomial and Poisson

n large

n small
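To see how the quality of the Poisson approximation depends on n, one can compare the two pmfs directly. The sketch below is not the textbook's Example 2.12; the two (n, p) pairs are hypothetical and were picked so that np = 3 in both cases.

```python
import math

def binomial_pmf(k, n, p):
    """P{X = k} for a binomial(n, p) random variable."""
    return math.comb(n, k) * p**k * (1 - p)**(n - k)

def poisson_pmf(k, lam):
    """P{X = k} for a Poisson random variable with mean lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)

def max_gap(n, p):
    """Largest pointwise gap between binomial(n, p) and Poisson(n * p)."""
    lam = n * p
    return max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam))
               for k in range(n + 1))

gap_large_n = max_gap(100, 0.03)  # n large, p small
gap_small_n = max_gap(5, 0.6)     # n small, p large

print(gap_large_n, gap_small_n)
```

The gap is far smaller in the large-n, small-p case, which is the regime where the approximation is intended to be used.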

Stochastic processes (Chapter 2, P. 79):

o A stochastic process {X(t), t ∈ T} is a family of random variables; that is, for each t ∈ T, X(t) is a random variable.

o A stochastic process describes the evolution through time of some (physical)

process.

o The index t is often referred to as time and as a result X(t) is referred to as the

state of the process at time t.

o T can be discrete or continuous.

Counting processes

o Definitions:

A stochastic process {N(t), t ≥ 0} is a counting process if N(t) represents the total number of events that have occurred from time zero up to time t. A counting process has the following properties:

- N(t) ≥ 0

- N(t) is integer valued

- N(s) ≤ N(t) if s < t

- For s < t, N(t) – N(s) = total number of events in (s, t]

Independent increments: The numbers of events occurring in disjoint time intervals are independent.

Stationary increments: The distribution of the number of events occurring in any time interval depends only on the length of the interval.

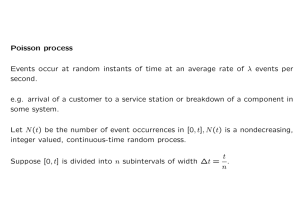

Poisson process

o Definitions:

N(0) = 0

Independent increments

Stationary increments (For homogeneous Poisson processes only).

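The number of events in any interval of length t follows the Poisson distribution with mean $\lambda t$:

$$P\{N(t + s) - N(s) = n\} = e^{-\lambda t}\,\frac{(\lambda t)^n}{n!}, \quad n = 0, 1, 2, \ldots$$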

o Properties:

$\lambda$ is called the rate of the process.

Expectation:

$E[N(t)] = \lambda t$

Variance:

$\mathrm{Var}(N(t)) = \lambda t$

Example: The 1999 European soccer champion Manchester United is one of the best offensive teams in history, with an average of 2.5 goals per game (90 minutes). a) What is the probability that it would be shut out for an entire game? b) What is the probability that it would score more than 2 goals in a game? c) What is the probability that it would score 2 goals in 90 seconds?

a) $t = 1$ game, $\lambda t = 2.5$, $P\{n = 0\} = e^{-2.5} = 0.082$

b) $1 - P\{n = 0\} - P\{n = 1\} - P\{n = 2\}$

$= 1 - e^{-2.5} - e^{-2.5}(2.5) - e^{-2.5}(2.5)^2/2 = 0.46$

c) $t = 1.5$ minutes × (1 game / 90 minutes) = 1/60 game

$P\{n = 2\} = e^{-2.5/60}\,(2.5/60)^2/2 = 0.00083$

(Note: In the 1999 European Champions League final, Manchester United was behind by 1 goal only a few minutes into the game. They fought hard but failed to score for the rest of regulation. After the official 90 minutes expired, they scored 2 goals during the 90 seconds of injury time added at the end of the game and won the European Championship that year.)
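The three answers in the soccer example follow from one Poisson pmf evaluated at different means. A minimal sketch, with illustrative variable names:

```python
import math

def poisson_pmf(n, mean):
    """P{N = n} when N is Poisson with the given mean (rate times time)."""
    return math.exp(-mean) * mean**n / math.factorial(n)

goals_per_game = 2.5  # rate, in goals per 90-minute game

# a) Shut out for a full game (t = 1 game).
p_shutout = poisson_pmf(0, goals_per_game * 1.0)

# b) More than 2 goals in a game.
p_more_than_two = 1.0 - sum(poisson_pmf(n, goals_per_game) for n in range(3))

# c) Exactly 2 goals in 90 seconds (t = 1.5 / 90 = 1/60 game).
p_two_in_90s = poisson_pmf(2, goals_per_game / 60.0)

print(p_shutout, p_more_than_two, p_two_in_90s)
```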

Interarrival time:

$T_n$ is the elapsed time between the (n – 1)th and the nth event. $T_n$, n = 1, 2, …, are iid (independent and identically distributed) exponential random variables with mean $1/\lambda$. This is a direct consequence of the independent and stationary increments properties of a Poisson process.

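Waiting time: $S_n = \sum_{i=1}^{n} T_i$, $n \ge 1$

Probability that the nth event occurs before or after t:

Before t: $P\{S_n \le t\} = P\{N(t) \ge n\} = \sum_{j=n}^{\infty} e^{-\lambda t}\,\dfrac{(\lambda t)^j}{j!}$

After t: $P\{S_n > t\} = P\{N(t) < n\} = \sum_{j=0}^{n-1} e^{-\lambda t}\,\dfrac{(\lambda t)^j}{j!}$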
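The link between waiting times and counts can be turned into a short calculation: the finite sum gives the "after t" probability, and its complement gives the "before t" probability without summing an infinite series. The function names and the sample values below are illustrative.

```python
import math

def poisson_pmf(j, mean):
    """P{N = j} when N is Poisson with the given mean."""
    return math.exp(-mean) * mean**j / math.factorial(j)

def prob_nth_event_after(n, lam, t):
    """P{S_n > t} = P{N(t) < n}: fewer than n events by time t."""
    return sum(poisson_pmf(j, lam * t) for j in range(n))

def prob_nth_event_by(n, lam, t):
    """P{S_n <= t} = P{N(t) >= n}, computed as a complement."""
    return 1.0 - prob_nth_event_after(n, lam, t)

# Illustrative values: rate 3 per minute, second event, half a minute.
lam, n, t = 3.0, 2, 0.5
after = prob_nth_event_after(n, lam, t)
by = prob_nth_event_by(n, lam, t)

# For n = 2 the sum reduces to exp(-lam * t) * (1 + lam * t).
print(after, math.exp(-lam * t) * (1 + lam * t), by)
```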

Example:

P.336, 5-37 and 38 (s in minutes)

$\lambda$ = 3 / minute, $P\{N(s) = 0\} = e^{-\lambda s} = e^{-3s}$

$P\{S_2 > s\} = P\{N(s) < 2\} = P\{N(s) = 0\} + P\{N(s) = 1\} = e^{-3s}(3s + 1)$

Example:

P.337, 5-42

$E[S_4] = 4/\lambda$

$E[S_4 \mid N(1) = 2] = 1 + E[\text{time for 2 more events}] = 1 + 2/\lambda$

$E[N(4) - N(2) \mid N(1) = 3] = E[N(4) - N(2)] = 2\lambda$

Problems: p. 340, 5-57 and 58

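Given that n events have occurred in (0, t), the arrival times $S_1, S_2, \ldots, S_n$, considered as an unordered set, are distributed as n independent random variables uniformly distributed on (0, t).

Given that $S_n = t$, the set $S_1, S_2, \ldots, S_{n-1}$ is distributed as n – 1 independent random variables uniformly distributed on (0, t).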

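Example: Parts arrive at a warehouse at a Poisson rate of $\lambda$ per day and all parts are shipped out weekly on Monday. If the storage cost is C cents/part/day, the average weekly storage cost is:

Average weekly cost = $C \times 7\lambda \times 7/2$ (an expected $7\lambda$ parts arrive per week, and each is stored for 7/2 days on average because its arrival time is uniformly distributed over the week)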

Problem: p. 340, 5-60, 64 (part a only)

o Type I and type II events:

If each event of a Poisson process {N(t), t ≥ 0} with rate $\lambda$ is classified, independently of all other events, as a type I event with probability p or a type II event with probability (1 – p), then the process contains two types of events.

Let $N_1(t)$ and $N_2(t)$ denote respectively the number of type I and type II events occurring in [0, t].

$N_1(t) + N_2(t) = N(t)$

{$N_1(t)$, t ≥ 0} and {$N_2(t)$, t ≥ 0} are independent Poisson processes with rates $\lambda p$ and $\lambda(1 - p)$, respectively.

Example: Vehicles crossing a bridge are assumed to form a Poisson process with a rate of 1 per minute, and 5% of the vehicles are trucks.

a) Find the probability that 1 or more trucks will cross the bridge in 1 hour.

$\lambda$ = 1/min, p = 0.05, $\lambda p$ = 0.05/min = 3/hour

$P\{N_1 \ge 1\} = 1 - P\{N_1 = 0\} = 1 - e^{-3} = 0.95$

b) If 10 trucks crossed the bridge during the last hour, find the expected number of vehicles that crossed the bridge in that hour.

$\lambda(1 - p)$ = 0.95/min = 57 non-trucks/hour. Because the non-truck process is independent of the truck process, the expected total is 10 + 57 = 67 vehicles.
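The bridge example uses only the two thinned rates, so it can be sketched in a few lines. Variable names are illustrative; the numbers are the ones given in the example.

```python
import math

rate_per_min = 1.0     # all vehicles, per minute
p_truck = 0.05         # fraction of vehicles that are trucks
minutes = 60.0         # one hour

# Thinned processes: trucks and non-trucks are independent Poisson processes.
truck_mean = rate_per_min * p_truck * minutes            # 3 per hour
non_truck_mean = rate_per_min * (1 - p_truck) * minutes  # 57 per hour

# a) At least one truck in the hour.
p_at_least_one_truck = 1.0 - math.exp(-truck_mean)

# b) Expected total given 10 trucks: the non-truck count is unaffected.
observed_trucks = 10
expected_total = observed_trucks + non_truck_mean

print(p_at_least_one_truck, expected_total)
```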

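o $P\{S_n^1 < S_m^2\}$ - The probability that n events occur in one Poisson process (rate $\lambda_1$) before m events occur in a second and independent Poisson process (rate $\lambda_2$).

$$P\{S_1^1 < S_1^2\} = \frac{\lambda_1}{\lambda_1 + \lambda_2}$$

$$P\{S_2^1 < S_1^2\} = \left(\frac{\lambda_1}{\lambda_1 + \lambda_2}\right)^2$$

$$P\{S_n^1 < S_m^2\} = \sum_{k=n}^{n+m-1} \binom{n+m-1}{k} \left(\frac{\lambda_1}{\lambda_1 + \lambda_2}\right)^{k} \left(\frac{\lambda_2}{\lambda_1 + \lambda_2}\right)^{n+m-1-k}$$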
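The general formula is a binomial tail: each event belongs to process 1 with probability $\lambda_1/(\lambda_1+\lambda_2)$, and process 1 wins if at least n of the first n + m - 1 events are its own. A minimal sketch with hypothetical rates:

```python
import math

def prob_n_before_m(n, m, lam1, lam2):
    """P{n events of process 1 occur before m events of process 2}."""
    p = lam1 / (lam1 + lam2)  # chance that any given event is from process 1
    total = n + m - 1         # number of events that settles the race
    return sum(math.comb(total, k) * p**k * (1 - p)**(total - k)
               for k in range(n, total + 1))

lam1, lam2 = 2.0, 3.0  # hypothetical rates, chosen only for illustration

print(prob_n_before_m(1, 1, lam1, lam2))  # reduces to lam1 / (lam1 + lam2)
print(prob_n_before_m(2, 1, lam1, lam2))  # reduces to (lam1 / (lam1 + lam2))**2
print(prob_n_before_m(3, 4, lam1, lam2))
```

Swapping the roles of the two processes gives the complementary probability, which is a convenient sanity check.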

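o Nonhomogeneous Poisson process: The rate is a function of time.

$\lambda = \lambda(t)$; let $m(t) = \int_0^t \lambda(\tau)\, d\tau$, so that $m(t + s) - m(t)$ is the mean number of events in $(t, t + s]$ and plays the role of $\lambda t$.

We have:

$$P\{N(t + s) - N(t) = n\} = e^{-[m(t+s) - m(t)]}\,\frac{[m(t+s) - m(t)]^n}{n!}, \quad n = 0, 1, 2, \ldots$$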

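Example: Text P. 344, 5-78, Problem 5-77 (answer: $e^{-11}\, 11^n / n!$)

[Figure: arrival rate $\lambda(t)$ per hour versus time of day, with rate levels 4, 8, and 10 and time ticks at 8, 10, 12, 2, and 5.]

Expected number of arrivals over the whole day:

$4 \times 2 + 8 \times 2 + (8 \times 2 + 2 \times 2/2) + (3 \times 4 + 3 \times 6/2) = 8 + 16 + 18 + 21 = 63$

$P\{N(10{:}10) - N(9{:}50) = 1\} = ?$

The rate is 4 per hour before 10:00 and 8 per hour after, so

$$\lambda t = 4 \times \frac{10}{60} + 8 \times \frac{10}{60} = \frac{12}{6} = 2$$

$$e^{-\lambda t}\,\frac{(\lambda t)^n}{n!} = e^{-2}\,\frac{2^1}{1!} = 0.271$$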
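The same two numbers can be obtained by integrating the rate function. The sketch below assumes a piecewise-linear rate profile inferred from the worked arithmetic (4 per hour from 8 to 10, 8 per hour from 10 to 12, rising linearly to 10 by 2 pm, then falling linearly to 4 by 5 pm); that profile and all names in the code are assumptions, not something the text states explicitly.

```python
import math

# Assumed rate profile: (start_hour, end_hour, rate_at_start, rate_at_end),
# hours on a 24-hour clock, rates in arrivals per hour.
SEGMENTS = [
    (8.0, 10.0, 4.0, 4.0),
    (10.0, 12.0, 8.0, 8.0),
    (12.0, 14.0, 8.0, 10.0),
    (14.0, 17.0, 10.0, 4.0),
]

def rate_in_segment(seg, t):
    """Linearly interpolated rate at time t inside one segment."""
    start, end, r0, r1 = seg
    return r0 + (r1 - r0) * (t - start) / (end - start)

def mean_events(a, b):
    """m(b) - m(a): integral of the rate over (a, b]."""
    total = 0.0
    for seg in SEGMENTS:
        lo, hi = max(a, seg[0]), min(b, seg[1])
        if lo < hi:
            # Exact for a linear rate: average endpoint rate times width.
            avg = 0.5 * (rate_in_segment(seg, lo) + rate_in_segment(seg, hi))
            total += avg * (hi - lo)
    return total

def prob_count(n, a, b):
    """P{N(b) - N(a) = n} for the nonhomogeneous process."""
    mean = mean_events(a, b)
    return math.exp(-mean) * mean**n / math.factorial(n)

daily_mean = mean_events(8.0, 17.0)
p_one = prob_count(1, 9 + 50 / 60, 10 + 10 / 60)

print(daily_mean, p_one)
```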