
Stat 521 Notes 20

Maximum Likelihood: Examples and Properties

Reading: Wooldridge, Chapter 13.

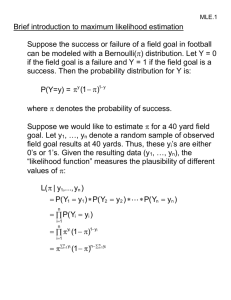

I. Maximum Likelihood Examples

The likelihood function is the joint density of the data viewed as a function of the parameters. Suppose we have independent and identically distributed random variables $Z_1, Z_2, \ldots, Z_N$ with common density $f(z \mid \theta)$. Then the likelihood function given a sample $z_1, z_2, \ldots, z_N$ is

$$L(\theta) = \prod_{i=1}^{N} f(z_i \mid \theta).$$

Its logarithm is referred to as the log likelihood function

$$\mathcal{L}(\theta) = \log L(\theta) = \sum_{i=1}^{N} \log f(z_i \mid \theta).$$

The maximum likelihood estimator (MLE) $\hat\theta_{MLE}$ is the value of $\theta$ that maximizes the likelihood, or equivalently the log likelihood:

$$\hat\theta_{MLE} = \arg\max_{\theta} \mathcal{L}(\theta).$$
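Working with the log likelihood is also numerically safer than working with the likelihood itself. The following is a minimal R sketch, assuming a hypothetical sample of 1000 Poisson counts built with `rep()` purely for illustration. The product of 1000 densities underflows to zero in double precision, while the sum of log densities stays finite and can be handed to an optimizer.

```r
# Hypothetical sample of N = 1000 counts (illustrative only, not from the notes)
x <- rep(c(1, 2, 3, 4, 5), times = 200)
lambda <- 3   # assumed parameter value at which to evaluate the likelihood

# Likelihood as a product of N densities: underflows to 0 in double precision
lik <- prod(dpois(x, lambda))

# Log likelihood as a sum of log densities: stays finite
loglik <- sum(dpois(x, lambda, log = TRUE))

lik     # 0
loglik  # large negative, but finite
```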

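Example 1: Poisson Distribution. Suppose $X_1, \ldots, X_N$ are iid Poisson($\lambda$), $\lambda \in (0, \infty)$,

$$P(X = x \mid \lambda) = \frac{e^{-\lambda} \lambda^{x}}{x!}, \quad x = 0, 1, 2, \ldots$$

$$L(\lambda) = \prod_{i=1}^{N} f(x_i \mid \lambda) = \frac{e^{-N\lambda} \lambda^{\sum_{i=1}^{N} x_i}}{\prod_{i=1}^{N} x_i!}$$

$$\mathcal{L}(\lambda) = -N\lambda + \left(\sum_{i=1}^{N} x_i\right) \log \lambda - \sum_{i=1}^{N} \log x_i!$$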

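To maximize the log likelihood, we set the first derivative of the log likelihood equal to zero,

$$\mathcal{L}'(\lambda) = -N + \frac{1}{\lambda} \sum_{i=1}^{N} X_i = 0.$$

$\bar{X}$ is the unique solution to this equation. To confirm that $\bar{X}$ in fact maximizes $\mathcal{L}(\lambda)$, we can use the second derivative test,

$$\mathcal{L}''(\lambda) = -\frac{1}{\lambda^{2}} \sum_{i=1}^{N} X_i .$$

$\mathcal{L}''(\bar{X}) < 0$ as long as $\sum_{i=1}^{N} X_i > 0$, so that $\bar{X}$ in fact maximizes $\mathcal{L}(\lambda)$.

When $\sum_{i=1}^{N} X_i = 0$, it can be seen by inspection that $\mathcal{L}(\lambda) = -N\lambda$ is a strictly decreasing function of $\lambda$, and therefore there is no maximum of $\mathcal{L}(\lambda)$ over the parameter space $(0, \infty)$; the MLE does not exist when $\sum_{i=1}^{N} X_i = 0$.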
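As a numerical check of this derivation, one can maximize the Poisson log likelihood with R's one-dimensional optimizer `optimize()` and compare the result with $\bar{X}$. This is a sketch on a small hypothetical sample; the search interval (0.01, 20) is an assumption chosen to bracket the maximizer.

```r
# Hypothetical Poisson counts (illustrative only); sum(x) > 0 so the MLE exists
x <- c(2, 0, 3, 1, 4)

# Poisson log likelihood as a function of lambda
pois.loglik <- function(lambda, x) sum(dpois(x, lambda, log = TRUE))

# Maximize over an interval assumed to contain the maximizer
fit <- optimize(pois.loglik, interval = c(0.01, 20), x = x, maximum = TRUE)

fit$maximum  # numerically close to mean(x)
mean(x)      # 2
```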

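Example 2: Suppose $X_1, \ldots, X_N$ are iid Uniform$(0, \theta]$.

$$f(x \mid \theta) = \begin{cases} \dfrac{1}{\theta}, & 0 < x \le \theta \\ 0, & \text{otherwise} \end{cases}$$

$$L(\theta) = \prod_{i=1}^{N} f(x_i \mid \theta) = \frac{1}{\theta^{N}} \prod_{i=1}^{N} I(0 < x_i \le \theta) = \begin{cases} 0, & \text{if } \max x_i > \theta \text{ or } \min x_i \le 0 \\ \dfrac{1}{\theta^{N}}, & \text{if } \max x_i \le \theta \text{ and } \min x_i > 0 \end{cases}$$

By graphing the likelihood function, we see that, assuming that $\min x_i > 0$, we have

$$\hat\theta_{MLE} = \max_i X_i .$$

The likelihood is zero for $\theta < \max x_i$ and equals the decreasing function $1/\theta^{N}$ for $\theta \ge \max x_i$, so it is maximized at the smallest admissible value, $\theta = \max x_i$. Note that this MLE is not found by setting a derivative to zero: the likelihood jumps at $\max x_i$ and is not differentiable there.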
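A grid evaluation mirrors the "graph the likelihood" argument. This is a minimal R sketch assuming a hypothetical positive sample; the grid range is arbitrary, and `max(x)` is added to the grid explicitly so the argmax can land on it exactly.

```r
# Hypothetical positive observations (illustrative only)
x <- c(0.3, 1.2, 0.8, 2.5)

# Uniform(0, theta] likelihood: theta^(-N) if all 0 < x_i <= theta, else 0
unif.lik <- function(theta, x) {
  if (min(x) > 0 && max(x) <= theta) theta^(-length(x)) else 0
}

# Evaluate on a grid (assumed range) that contains max(x), then take the argmax
grid <- sort(c(seq(0.1, 5, by = 0.01), max(x)))
lik.values <- sapply(grid, unif.lik, x = x)
theta.hat <- grid[which.max(lik.values)]

theta.hat  # equals max(x) = 2.5
```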

Example 3: For most models, it is not possible to find a closed form expression for the MLE. In the last class, we looked at the exponential regression model for duration times. Let $Y_i$ be the $i$th man's unemployment spell and $X_i$ be the $i$th man's education. Let $Z_i = (Y_i, X_i)$. The exponential regression model is that the conditional density of $Y_i$ given $X_i$ is exponential with arrival or hazard rate $\exp(\beta_0 + \beta_1 X_i)$, implying that the conditional mean of $Y_i$ given $X_i$ is $\exp(-(\beta_0 + \beta_1 X_i))$ and

$$f(y \mid x, \beta_0, \beta_1) = \exp(\beta_0 + \beta_1 x) \exp\left(-y \exp(\beta_0 + \beta_1 x)\right)$$

for positive $y$ and zero elsewhere. This is an extension of the exponential distribution allowing the arrival rate to depend on covariates.

If we are only interested in the conditional distribution of $Y$ given $X$ and not the marginal distribution of $X$, then we can just maximize the log likelihood conditional on $x$,

$$\mathcal{L}(\beta_0, \beta_1) = \sum_{i=1}^{N} \log f(y_i \mid x_i, \beta_0, \beta_1) = \sum_{i=1}^{N} \left[\beta_0 + \beta_1 x_i - y_i \exp(\beta_0 + \beta_1 x_i)\right]$$

(if we maximized the likelihood without conditioning on $x$, i.e., $\sum_{i=1}^{N} \left[\log f(y_i \mid x_i, \beta_0, \beta_1) + \log f(x_i)\right]$, the MLE of $\beta_0, \beta_1$ would not be changed because $\log f(x_i)$ does not depend on $\beta_0, \beta_1$).

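The first derivatives of the log likelihood function are

$$\frac{\partial \mathcal{L}}{\partial \beta_0} = \sum_{i=1}^{N} \left(1 - y_i \exp(\beta_0 + \beta_1 x_i)\right)$$

$$\frac{\partial \mathcal{L}}{\partial \beta_1} = \sum_{i=1}^{N} x_i \left(1 - y_i \exp(\beta_0 + \beta_1 x_i)\right)$$

Setting the first derivatives to zero does not lead to an analytic solution for $(\beta_0, \beta_1)$.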
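Because the R code below passes an analytic gradient to optim, it is worth checking those derivative formulas against a finite-difference approximation before trusting them. This is a minimal sketch on simulated data; the true values $\beta_0 = 0.5$, $\beta_1 = -0.1$, the sample size, and the 0 to 10 covariate range are assumptions for illustration, not the notes' duration data, and `num.grad` is a hypothetical helper written here.

```r
set.seed(1)
# Simulated data (assumption): Y | X exponential with rate exp(0.5 - 0.1 x)
n <- 500
x <- sample(0:10, n, replace = TRUE)
y <- rexp(n, rate = exp(0.5 - 0.1 * x))

# Conditional log likelihood of the exponential regression model
loglik <- function(theta, y, x) {
  eta <- theta[1] + theta[2] * x
  sum(eta - y * exp(eta))
}

# Analytic gradient: the two first derivatives given in the text
grad <- function(theta, y, x) {
  r <- 1 - y * exp(theta[1] + theta[2] * x)
  c(sum(r), sum(x * r))
}

# Hypothetical helper: central finite-difference gradient of f at theta
num.grad <- function(f, theta, h = 1e-6, ...) {
  sapply(seq_along(theta), function(j) {
    e <- replace(numeric(length(theta)), j, h)
    (f(theta + e, ...) - f(theta - e, ...)) / (2 * h)
  })
}

theta0 <- c(0.2, -0.05)  # arbitrary evaluation point
cbind(analytic = grad(theta0, y, x),
      numeric  = num.grad(loglik, theta0, y = y, x = x))
```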

We can use the optim function in R to numerically maximize the likelihood. When using optim, we should investigate multiple starting points, since for a general likelihood optim may converge to different local maxima depending on the starting point.

```r
# Duration data
durdata=read.table("durdata",header=TRUE);
dur=durdata$dur;
educ=durdata$educ;
y=dur;
x=educ;

# Log likelihood function
loglikfunc=function(theta,y,x){
  beta0=theta[1];
  beta1=theta[2];
  loglik=sum(beta0+beta1*x-y*exp(beta0+beta1*x));
  loglik;
}

# First derivative of log likelihood function
first.deriv.llfunc=function(theta,y,x){
  beta0=theta[1];
  beta1=theta[2];
  fd.beta0=sum(1-y*exp(beta0+beta1*x));
  fd.beta1=sum(x*(1-y*exp(beta0+beta1*x)));
  first.deriv=c(fd.beta0,fd.beta1);
  first.deriv;  # return the gradient explicitly
}

# Maximize likelihood
# control=list(fnscale=-1) makes it a maximization problem
thetahat.opt=optim(c(0,0),loglikfunc,gr=first.deriv.llfunc,method="BFGS",
  control=list(fnscale=-1),y=dur,x=educ);
beta0hat=thetahat.opt$par[1];
beta1hat=thetahat.opt$par[2];
```

```
> thetahat.opt
$par
[1] -3.9742421 -0.1142210

$value
[1] -21116.75

$counts
function gradient 
     110       25 

$convergence
[1] 0

$message
NULL
```

Note: it is important to check that `$convergence` is 0 when using optim; a nonzero value means that optim has not converged (for example, 1 means the iteration limit `maxit` was reached).
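Following the advice to try several starting points, here is a hedged sketch that refits the model from a few arbitrary starting values and confirms that every run reports a convergence code of 0 and lands on the same estimate. It uses simulated data (assumed true values $\beta_0 = 0.5$, $\beta_1 = -0.1$) because the `durdata` file is not reproduced here, and it tightens `reltol` below its default of 1e-8 so the runs agree closely. As an extra cross-check that is not part of the notes: a Gamma GLM with log link has the same score equations as this exponential model, so the negatives of its coefficients should match the MLE.

```r
set.seed(2)
# Simulated data (assumption); the notes use durdata instead
n <- 1000
x <- sample(0:10, n, replace = TRUE)
y <- rexp(n, rate = exp(0.5 - 0.1 * x))

loglik <- function(theta, y, x) {
  eta <- theta[1] + theta[2] * x
  sum(eta - y * exp(eta))
}
grad <- function(theta, y, x) {
  r <- 1 - y * exp(theta[1] + theta[2] * x)
  c(sum(r), sum(x * r))
}

# Fit from several starting points (chosen arbitrarily for illustration)
starts <- list(c(0, 0), c(1, -0.5), c(-1, 0.2))
fits <- lapply(starts, function(s)
  optim(s, loglik, gr = grad, method = "BFGS",
        control = list(fnscale = -1, reltol = 1e-10), y = y, x = x))

conv <- sapply(fits, `[[`, "convergence")  # all should be 0
est <- t(sapply(fits, `[[`, "par"))        # one row (beta0, beta1) per start
conv
est

# Cross-check: Gamma GLM with log link models log E(Y | X) = -(beta0 + beta1 x)
gfit <- glm(y ~ x, family = Gamma(link = "log"))
-coef(gfit)
```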

We have $\hat\beta_1 \approx -0.114$. This means that we estimate that a one year increase in education multiplies the mean by

$$\frac{\exp\left(-(\hat\beta_0 + \hat\beta_1 (X_i + 1))\right)}{\exp\left(-(\hat\beta_0 + \hat\beta_1 X_i)\right)} = \exp(-\hat\beta_1) = \exp(0.114) \approx 1.12 .$$

In other words, we estimate that a one year increase in education increases the mean unemployment spell by about 12%; more educated people tend to be unemployed for longer spells.
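The multiplicative effect is simple arithmetic on the estimate; a short R check using the value of $\hat\beta_1$ copied from the optim output above:

```r
# beta1 estimate copied from the optim output above
beta1hat <- -0.1142210

# The mean spell is multiplied by exp(-beta1) per extra year of education
mult <- exp(-beta1hat)
mult              # about 1.121
100 * (mult - 1)  # percent increase, about 12.1
```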

We will now study the properties of MLE estimates and in

particular their standard errors.

II. Maximum Likelihood Properties

For $Z_1, \ldots, Z_N$ iid $f(z \mid \theta)$, $\theta \in \Theta$, under "regularity conditions" on $f(z \mid \theta)$ [these are essentially smoothness conditions on $f(z \mid \theta)$]:

1. The MLE is consistent.

2. The MLE is asymptotically normal: for a one-dimensional parameter $\theta$, $\dfrac{\hat\theta_{MLE} - \theta}{SE(\hat\theta_{MLE})}$ converges in distribution to a standard normal distribution.

3. The MLE is asymptotically optimal: roughly, this means that among all well-behaved estimators, the MLE has the smallest variance for large samples.

Consistency of maximum likelihood estimates:

A basic desirable property of estimators is that they are consistent, i.e., converge to the true parameter when there is a "large" amount of data. Let $\hat\theta_{MLE,N}$ be the MLE based on $Z_1, \ldots, Z_N$. Consistency of the MLE means that for any $\epsilon > 0$ and $\delta > 0$, there exists $N'$ such that

$$P\left(|\hat\theta_{MLE,N} - \theta| > \epsilon\right) < \delta \quad \text{for all } N > N'.$$
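Consistency can be seen in a quick simulation. This is a sketch assuming the Poisson model of Example 1 with a true mean of 3 (an arbitrary choice); the MLE there is $\bar{X}$, and its error shrinks as $N$ grows.

```r
set.seed(3)
lambda <- 3  # assumed true Poisson mean (illustration only)

# The Poisson MLE is the sample mean; its error shrinks as N grows
for (N in c(10, 100, 1000, 1e5)) {
  x <- rpois(N, lambda)
  cat(sprintf("N = %6.0f   MLE = %.4f   |error| = %.4f\n",
              N, mean(x), abs(mean(x) - lambda)))
}
```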

Score function and MLE as special case of GMM

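To discuss the asymptotic normality of the MLE, we first define the score of the log likelihood for observation $i$,

$$s_i(\theta) = \nabla_\theta \mathcal{L}_i(\theta) = \left(\frac{\partial \mathcal{L}_i}{\partial \theta_1}(\theta), \frac{\partial \mathcal{L}_i}{\partial \theta_2}(\theta), \ldots, \frac{\partial \mathcal{L}_i}{\partial \theta_p}(\theta)\right)^{T},$$

where $\mathcal{L}_i(\theta) = \log f(z_i \mid \theta)$ is the log likelihood for observation $i$.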

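One key fact about the score function is that it has expected value zero at the true $\theta$ (under smoothness conditions on $f(z \mid \theta)$ that allow us to interchange integration and differentiation). Note that $\int f(z \mid \theta)\,dz = 1$ for all $\theta$, so

$$0 = \nabla_\theta \int f(z \mid \theta)\,dz = \int \nabla_\theta f(z \mid \theta)\,dz = \int \left[\nabla_\theta \log f(z \mid \theta)\right] f(z \mid \theta)\,dz .$$

Hence,

$$E_\theta[s_i(\theta)] = \int s_i(\theta) f(z \mid \theta)\,dz = \int \left[\nabla_\theta \log f(z \mid \theta)\right] f(z \mid \theta)\,dz = 0 .$$

Thus, $E[s_i(\theta)] = 0$ are moment conditions. The MLE is the method of moments estimator based on the sample moment conditions $\frac{1}{N} \sum_{i=1}^{N} s_i(\theta) = 0$ and can be viewed as a special case of GMM.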
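For the Poisson model of Example 1, the score of one observation is $s_i(\lambda) = X_i/\lambda - 1$. The sketch below, assuming a true $\lambda_0 = 3$ chosen for illustration, checks by simulation that the score averages to about zero at the true value but not at a wrong value, and that solving the sample moment condition with `uniroot()` returns the MLE $\bar{X}$.

```r
set.seed(4)
lambda0 <- 3                 # assumed true value (illustration only)
x <- rpois(1e5, lambda0)

# Score of one Poisson observation: d/dlambda log f(x | lambda) = x / lambda - 1
score <- function(lambda, x) x / lambda - 1

mean(score(lambda0, x))      # close to 0 at the true value
mean(score(2, x))            # clearly nonzero at a wrong value (about 0.5)

# Solving the sample moment condition (1/N) sum s_i(lambda) = 0 gives the MLE
root <- uniroot(function(l) mean(score(l, x)), interval = c(0.5, 10))$root
c(root = root, xbar = mean(x))
```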

Asymptotic Normality of MLE

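Consider the Hessian of the log likelihood for observation $i$:

$$H_i(\theta) = \nabla_\theta s_i(\theta) = \nabla_\theta^{2} \mathcal{L}_i(\theta).$$

Let $A_0$ be the negative of the expected value of the Hessian at the true $\theta$; denote the true $\theta$ by $\theta_0$:

$$A_0 = -E[H_i(\theta_0)].$$

$A_0$ is called the Fisher information matrix (for a single observation).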

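Asymptotic Normality of the MLE (Theorem 13.2 in Wooldridge): Under smoothness conditions on $f(z \mid \theta)$ (see Wooldridge for the exact conditions; these are satisfied in most standard applications),

$$\sqrt{N}\left(\hat\theta - \theta_0\right) \xrightarrow{D} N\left(0, A_0^{-1}\right).$$

We can estimate $A_0$ by

$$\hat{A}_0 = -\frac{1}{N} \sum_{i=1}^{N} H_i(\hat\theta_{MLE})$$

and we have that for large samples, $\hat\theta_{MLE} - \theta_0$ is approximately distributed as

$$N\left(0, \frac{1}{N} \hat{A}_0^{-1}\right).$$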
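For the Poisson model of Example 1, $H_i(\lambda) = -X_i/\lambda^2$, so $\hat{A}_0 = \bar{X}/\hat\lambda^2 = 1/\bar{X}$ and the estimated standard error of $\hat\lambda$ is $\sqrt{\bar{X}/N}$. A short R sketch on hypothetical counts computes it both from the general recipe and from the closed form:

```r
# Hypothetical Poisson counts (illustrative only)
x <- c(2, 0, 3, 1, 4, 5, 2, 3)
N <- length(x)
lambdahat <- mean(x)                 # MLE from Example 1

# Per-observation Hessian of the Poisson log likelihood: -x_i / lambda^2
H <- -x / lambdahat^2
A0hat <- -mean(H)                    # estimate of A_0
se.general <- sqrt((1 / N) / A0hat)  # square root of (1/N) * A0hat^{-1}

se.closed <- sqrt(lambdahat / N)     # closed form sqrt(Xbar / N)
c(se.general, se.closed)             # the two agree
```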

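Example 3 continued: We have

$$\frac{\partial^{2} \mathcal{L}_i}{\partial \beta_0^{2}} = -y_i \exp(\beta_0 + \beta_1 x_i)$$

$$\frac{\partial^{2} \mathcal{L}_i}{\partial \beta_1^{2}} = -x_i^{2} y_i \exp(\beta_0 + \beta_1 x_i)$$

$$\frac{\partial^{2} \mathcal{L}_i}{\partial \beta_0 \partial \beta_1} = -x_i y_i \exp(\beta_0 + \beta_1 x_i)$$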

```r
# Estimated variance of MLE
# A0hat = -(1/N) * sum of per-observation Hessians evaluated at the MLE
A0hat=matrix(rep(0,4),ncol=2);
A0hat[1,1]=-mean(-y*exp(beta0hat+beta1hat*x));
A0hat[2,2]=-mean(-x^2*y*exp(beta0hat+beta1hat*x));
A0hat[1,2]=-mean(-x*y*exp(beta0hat+beta1hat*x));
A0hat[2,1]=A0hat[1,2];
A0hatinv=solve(A0hat);
varbetahat=(1/length(y))*A0hatinv;
se.beta1hat=sqrt(varbetahat[2,2]);
```

```
> se.beta1hat
[1] 0.008311749
```

If the log likelihood is difficult to differentiate twice analytically, we can use optim with hessian=TRUE to numerically estimate the Hessian of the log likelihood.

```r
# Use optim to numerically estimate the Hessian of the log
# likelihood
thetahat.opt=optim(c(0,0),loglikfunc,gr=first.deriv.llfunc,method="BFGS",
  control=list(fnscale=-1),y=dur,x=educ,hessian=TRUE);
# thetahat.opt$hessian is the Hessian of the total log likelihood at the MLE,
# i.e., an estimate of -N times A0
A0hat.numerical=-(1/length(y))*thetahat.opt$hessian;
varbetahat.numerical=(1/length(y))*solve(A0hat.numerical);
se.beta1hat.numerical=sqrt(varbetahat.numerical[2,2]);
```

```
> se.beta1hat.numerical
[1] 0.0083114
```
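If a model has already been fitted without hessian=TRUE, the stats function `optimHess()` computes the same finite-difference Hessian at a given parameter value without re-running the optimizer. The sketch below uses simulated data (assumed true values $\beta_0 = 0.5$, $\beta_1 = -0.1$, not the duration data) and compares `optimHess()` with the analytic Hessian built from the second derivatives above.

```r
set.seed(5)
n <- 1000
x <- sample(0:10, n, replace = TRUE)
y <- rexp(n, rate = exp(0.5 - 0.1 * x))  # simulated (assumption)

loglik <- function(theta, y, x) {
  eta <- theta[1] + theta[2] * x
  sum(eta - y * exp(eta))
}
grad <- function(theta, y, x) {
  r <- 1 - y * exp(theta[1] + theta[2] * x)
  c(sum(r), sum(x * r))
}

fit <- optim(c(0, 0), loglik, gr = grad, method = "BFGS",
             control = list(fnscale = -1), y = y, x = x)

# Numerical Hessian of the total log likelihood at the MLE
H.num <- optimHess(fit$par, loglik, gr = grad, y = y, x = x)

# Analytic Hessian: sum over i of the second derivatives in the text
w <- y * exp(fit$par[1] + fit$par[2] * x)
H.ana <- -matrix(c(sum(w), sum(x * w), sum(x * w), sum(x^2 * w)), 2, 2)

# Standard errors from either Hessian: sqrt(diag(solve(-H)))
sqrt(diag(solve(-H.num)))
sqrt(diag(solve(-H.ana)))
```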

Efficiency of the MLE

An important result in mathematical statistics is the Cramér-Rao lower bound (Reference: Casella and Berger, Statistical Inference, Chapter 7.3). For $Z_1, Z_2, \ldots, Z_N$ iid $f(z \mid \theta)$, any unbiased estimator $\tilde\theta$ of $\theta$ satisfies

$$\mathrm{Var}(\tilde\theta) \ge \frac{1}{N} A_0^{-1}$$

(for vector $\theta$, in the sense that the difference is positive semidefinite). Since in large samples the MLE has a variance approximately equal to $\frac{1}{N} A_0^{-1}$, the MLE is at least as efficient (i.e., has as small a variance) as any unbiased estimator in large samples.
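The Poisson model of Example 1 gives a concrete case: $A_0 = 1/\lambda$, so the bound is $\lambda/N$, and the unbiased MLE $\bar{X}$ has variance exactly $\lambda/N$. The sample variance $S^2$ is another unbiased estimator of $\lambda$ in this model. A small simulation, with $\lambda = 3$, $N = 50$, and 5000 replications as assumed settings, shows that its variance is well above the bound.

```r
set.seed(6)
lambda <- 3; N <- 50; reps <- 5000  # assumed settings (illustration only)

sims <- replicate(reps, {
  x <- rpois(N, lambda)
  c(xbar = mean(x), s2 = var(x))    # two unbiased estimators of lambda
})

crlb <- lambda / N                  # (1/N) * A0^{-1} with A0 = 1/lambda
c(crlb     = crlb,
  var.xbar = var(sims["xbar", ]),   # close to the bound
  var.s2   = var(sims["s2", ]))     # noticeably larger
```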

Example 3 continued: For the exponential regression model, i.e., $Y_i \mid X_i$ is exponential with mean $\exp(-(\beta_0 + \beta_1 X_i))$, we have that

$$E(\log Y_i \mid X_i) = (-\beta_0 - 0.5772) + (-\beta_1) X_i$$

[see attached derivation].

Thus, we could estimate $\beta_1$ as minus the slope from the least squares regression of $\log Y_i$ on $X_i$.

```
> logy=log(y);
> lsmodel=lm(logy~x);
> beta1hat.ls=-coef(lsmodel)[2];
> se.beta1hat.ls=sqrt(vcov(lsmodel)[2,2]);
> beta1hat.ls
         x 
-0.1020073 
> se.beta1hat.ls
[1] 0.009964888
```

The standard error of this least squares estimator of $\beta_1$ is higher than that of the MLE, 0.00996 compared to 0.00831, consistent with the efficiency of the MLE.
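The identity for $E(\log Y_i \mid X_i)$ rests on the fact that $E(\log E) = -\gamma \approx -0.5772$ for a standard exponential $E$, where $\gamma$ is Euler's constant (equal to `-digamma(1)` in R). The following sketch uses simulated data with assumed true values $\beta_0 = 0.5$, $\beta_1 = -0.1$, not the notes' data, and checks that the least squares fit of $\log Y$ on $X$ recovers the implied intercept $-\beta_0 - 0.5772$ and slope $-\beta_1$.

```r
set.seed(7)
b0 <- 0.5; b1 <- -0.1                  # assumed true values (illustration only)
n <- 1e5
x <- sample(0:10, n, replace = TRUE)
y <- rexp(n, rate = exp(b0 + b1 * x))  # mean exp(-(b0 + b1 x))

gamma.euler <- -digamma(1)             # Euler's constant, 0.5772157...
ls.fit <- lm(log(y) ~ x)

cbind(estimate = coef(ls.fit),
      implied  = c(-b0 - gamma.euler, -b1))
```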
