Band Selection using Sparse Nonnegative Matrix Factorization with

advertisement

Band Selection using Sparse Nonnegative Matrix Factorization

with the Thresholded Earth’s Mover Distance for Hyperspectral

Imagery Classification

Weiwei Sun, a Weiyue Li,b Jialin Li, a Yenming Mark Lai c

a

Ningbo University, College of Architectural Engineering, Civil Engineering and Environment, 818

Fenghua Rd, Ningbo, Zhejiang, China, 315211

b

Shanghai Normal University, Institute of Urban Development, 100 Guilin Rd, Shanghai, China,

200234

c

University of Texas at Austin, The Institute for Computational Engineering and Sciences (ICES),

Austin, USA, 78712

Abstract. A sparse nonnegative matrix factorization method with the thresholded ground distance

(SNMF-TEMD) is proposed to solve the band selection problem in hyperspectral imagery (HSI)

classification. The SNMF-TEMD assumes that band vectors are sampled from a union of

low-dimensional subspaces and approximates a HSI data matrix with the product of a basis matrix

constructed from subspaces and a sparse coefficient matrix. The SNMF-TEMD utilizes the TEMD

metric to better measures approximation errors during the optimization of HSI data factorization. The

TEMD metric makes up the theoretical drawbacks in the Euclidean distance (ED) and

Kullback-Leibler divergence (KLD) metrics when measuring the approximation errors in HSI

datasets. The SNMF-TEMD is solved by the combination of min-cost-flow algorithm and

multiplicative update rules. The band cluster assignments are found according to positions of

largest entries in columns of the coefficient matrix and the desired band subset constitutes with the

bands closest to their cluster centers. Three groups of experiments on two HSI datasets are performed

to explore the performance of SNMF-TEMD. Four popular band selection methods are used to make

comparisons: affinity propagation (AP), maximum-variance principal component analysis (MVPCA),

SNMF with ED metric (SNMF-ED) and SNMF with KLD metric (SNMF-KLD). Experimental results

show that SNMF-TEMD outperforms all four methods in classification accuracy and its computational

speed is slower than SNMF-ED and SNMF-KLD. SNMF-TEMD is a better choice for band selection

among all five methods because of its overwhelming advantage in classification and the popular speed

remedy scheme from parallel computing and high-performance computers.

Keywords: band selection, sparse nonnegative matrix factorization, thresholded earth’s mover

distance, hyperspectral imagery, classification.

Corresponding Author: Weiwei Sun, Ningbo University, College of Architectural Engineering, Civil

Engineering and Environment, 50# Postbox, 818 Fenghua Rd, Ningbo, Zhejiang, 315211;

Tel:+0086-18258796120; Email: nbsww@outlook.com

1. Introduction

From the birth of image spectrometer, Hyperspectral imagery (HSI) has attracted much interest

from researchers in the remote sensing field. Hyperspectral imaging collects tens to hundreds

bands that reflect the radiant reflectance of ground objects from visible to near-infrared spectrum,

and could be used to recognize subtle differences in spectral responses among different ground

objects using the classification implementation (Du and Zhang 2014a). The classification result

greatly benefit many realistic applications, including land cover mapping (Tong et al. 2013), target

detection (Wang et al. 2013; Du and Zhang 2014b), ocean monitoring (Keith et al. 2014) and mine

exploration (Murphy and Monteiro 2013) and so on. Unfortunately, numerous bands and strong

intra-band correlations render that the classification process is trapped into the “curse of

dimensionality” (Melgani and Bruzzone 2004). Accordingly, making dimensionality reduction is

an effective scheme to solve the above problem.

Dimensionality reduction can be grouped into two main classes, feature extraction and band

selection (also called feature selection) (Sun et al. 2014). Band selection selects an optimal band

subset from the original band set of the HSI dataset while feature extraction preserves important

spectral features through mathematical transformations. In our paper, we focus our study of

dimensionality reduction on band selection.

Pervious schemes in band selection can be roughly divided into two categories: the maximum

information or minimum correlation (MIMC) scheme and the maximum inter-class separability

(MIS) scheme. MIMC selects an optimal band subset in which each single-band image has the

maximum information or minimum correlation with other bands. The MIMC scheme typically

implements three main criteria including the entropy criterion, the intra-band correlation criterion,

and the cluster criterion. The entropy criterion algorithm collects an optimal band subset by

maximizing the overall amount of information using entropy-like measurements (Arzuaga-Cruz et

al. 2003). The intra-band correlation criterion algorithms select an optimal band subset having

minimum intra-band correlations, such as the mutual information based algorithm and the

constrained band selection algorithm based on constrained energy minimization (CBS-CEM)

(Chang and Wang 2006). The cluster criterion algorithm selects a representative band from each

band cluster using certain clustering algorithms, including the hierarchical clustering algorithm

(Guo et al. 2006) and the affinity propagation (AP) algorithm (Qian et al. 2009).

In contrast, the MIS scheme maximizes the separability of different ground objects in the image

scene to select an optimal band subset. The MIS scheme is typically implemented using one of the

following criteria: the distance measurement criterion, the feature transformation criterion, and the

realistic application criterion. The distance measurement criterion algorithm maximizes the

inter-class differences using a distance-like measurement such as the spectral information

divergence (SID) (Ball et al. 2007) and Mahalanobis distance (Keshava 2004). The feature

transformation criterion algorithm selects an optimal band subset by analyzing the inter-class

separability of ground objects in a low-dimensional feature space constructed from feature

transformations, such as the independent component analysis algorithm (Du et al. 2003) and the

complex network algorithm (Xia et al. 2013). The realistic application criterion algorithm chooses

an optimal band subset through optimizing the defined objective function suitable for realistic

applications, such as the high-order movement algorithm (Du 2003) and the supervised algorithm

using the known class spectral signatures algorithm (Yang et al. 2011a).

In recent years, sparse nonnegative matrix factorization (SNMF) has drawn much attention

from researchers in the remote sensing community. SNMF approximates a two-dimensional HSI

data matrix using the product of a low-rank basis matrix and a sparse coefficient matrix with the

non-negativity constraint in both matrices. Current study of SNMF in the HSI dataset mainly

concentrates on hyperspectral unmixing (Jia and Qian 2009; Yang et al. 2011b; Zhu et al. 2014)

and feature extraction (Wen et al. 2013; Wen et al. 2014; Xiao and Bourennane 2014). Different

from the above, Li considered the clustering structures of SNFM and utilized SNMF to select an

optimal band subset from an HSI dataset through band clustering (Li and Qian 2011). After that,

Shi replaced the Euclidean distance (ED) in the object function of SNMF with the

Kullback-Leibler divergence (KLD) measure to ameliorate the band selection result (Shi et al.

2014). The above achievements greatly promote the applications of SNMF into band selection on

HSI datasets.

However, one significant problem was not carefully solved when implementing SNMF into

band selection of HSI datasets. The problem is that neither the ED nor KLD metric is an

appropriate choice to measure the dissimilarity between the original HSI data matrix and its

approximation. Both ED and KLD metrics have good mathematical properties such as the

bounded reconstruction error for the Frobenius norm (Hazan and Shashua 2007). However, they

always bring about inaccurate factorization results because of their contradictions with the nature

of noises in realistic HSI datasets (Donoho and Stodden 2003). For example, the ED metric

optimizes the object function in SNMF under the assumption that noises in the HSI datasets

follows the Gaussian distributions (e.g., independent identically distributed, i.i.d.). However, the

realistic HSI data points in high-dimensional feature space did not support the Gaussian

distribution assumption. Therefore, an alternative type of distance measurement is preferred to

solve the problem.

In the paper, we propose the SNMF algorithm with the thresholded earth’s mover distance

(TEMD), called SNMF-TEMD, for the purpose of solving the band selection problem in HSI

classification. Our goal is to alleviate the approximate error using the TEMD metric and promote

the performance of SNMF in selecting an optimal band subset from the HSI dataset. Although

Sandler tried to combine EMD with NMF and made some progress in image segmentation

(Sandler and Lindenbaum 2011), our study differs from that in two aspects. The first is that our

study stands on the SNMF rather than NMF and the aimed application of our study is band

selection of the HSI dataset. The second is that we implement the TEMD metric to measure the

approximation error and greatly reduce the computational speeds of EMD. Our SNMF-TEMD

method favors two main innovations. The first is that we utilize TEMD metric to improve the

approximation in the object function of SNMF. The TEMD regards the approximation error as a

complex local deformation of the original HSI dataset and could better quantify the error than both

ED and KLD metrics. The second is that our SNMF-TEMD method outperforms better in HSI

classification and takes lower computational time.

The rest of our paper is in the following. Section 2 briefly reviews the classical sparse

nonnegative matrix factorization. Section 3 presents our SNMF-TEMD method for selecting an

optimal band subset. Section 4 analyzes the performance of SNMF-TEMD in band selection using

two widely used HSI datasets. Section 5 states conclusions and outlines our future work.

2. A Brief Review of SNMF

In this section, we give a brief description of the classical SNMF method. SNMF decomposes the

original data matrix into the product of a set of bases (i.e., the basis matrix) and encodings (i.e.,

the coefficient matrix) where the basis is nonnegative and the encodings are both negative and

sparse. The nonnegative constraints in bases and encodings brings about the parts-based feature of

SNMF because they allows only additive combinations among different bases. Consider a real

data set X [ x1 , x 2 , , x N ] R

represents

a

feature,

D N

where each column represents a data sample and each row

SNMF

W [w1 , , w l , w k ] R Dk and

original matrix as

attempts

to

find

two

H [h1 , , hi , h N ] R k N

nonnegative

matrices

to approximate the

X WH , where W 0 , H 0 and k is the rank of W and H

with k min{m, n} . The placement of sparse constraint in columns of W , rows or columns

of H is to enforce the sparseness of the founded decompositions. A distance metric is always used

to measure the approximation error between the data matrices X and the product matrix WH , and

the SNM is solved by optimizing the following problem:

arg min Fk ( W, H ) dist ( X WH ),

W ,H

s.t . W 0, H 0, one of the following sparseness conditions :

1) : hi

0

s1 , s1 0, 1 i N ;

2) : H ( j ,:) 0 s2 , s2 0, 1 j k ;

3) : w l

0

(1)

s3 , s3 0, 1 l k ;

and one of the following scale conditions in W or H :

1) : H

2) : W

c1 , c1 0, f [0,1, 2];

f

f

c2 , c2 0, f [0,1, 2].

where min Fk ( W, H) is the approximate error term, and it quantifies the quality of approximation.

W,H

Two common choices in the function dist using the ED and KLD metrics constitute the Frobenius

norm term and the KLD term respectively. The sparseness conditions 1) and 2) are the L0 norm

constraints in columns and rows of the coefficient matrix H respectively; the sparseness condition

3) is the L0 norm constraint in columns of the basis matrix W ; and the norm operation

f

in the

scale conditions can be the L0, L1 or L2 norm constraints in the basis matrix W and the coefficient

matrix H respectively. The scale constraint in W or H is to avoid the unsteady solution in problem

(1) since over-large values in W or H renders the optimization result is unstable even unreasonable.

Different choices in sparseness conditions has their separate practical explanations. The sparseness

constraint in hi explains that each data point can be approximated by a linear combination of a

limited number of basis vectors in W . The sparseness constraint in the row vector H ( j ,:) of

H means that a limited number of data points in X is used to infer each basis vector. The

explanation of sparseness constraint in w l is that each basis affects only a small part of each

feature in X .

Different combinations of the sparseness and scale conditions result in divergent object

functions of problem (1). Hoyer (2002) constructed the object function with the Frobenius norm

term and the L1 norm of H in the scale condition 1). The proposed object function of nonnegative

sparse coding (NSC) was regarded as the paragon work of SNMF. After that, he relaxed the

sparseness conditions by using means of nonlinear projection at each iteration based on the

sparseness measures from the relationship between the L1 and L2 norm. The sparseness measure

quantifies how much energy of a feature vector is packed into only a few components. Hoyer then

presented an object function named NMF with sparseness constraints (NMFSC) by combing the

Frobenius norm term with the sparseness measures (Hoyer 2004). Some other objective functions

are also suggested, such as the object function using the combination of the Frobenius norm term

and the L2 norm of H (Gao and Church 2005) and the combination of the Frobenius norm term

and the L0 norm of W or H (Peharz and Pernkopf 2012).

Meanwhile, optimization algorithms were also proposed to solve the optimization problem (1)

to obtain the desired basis matrix W and the coefficient matrix H . The nonlinear least angle

regression and selection (NLARS) method (Morup et al. 2008) and the stable and efficient NSC

algorithm (Li and Zhang 2009) were proposed to optimize the NSC object function by Hoyer. The

alternating non-negativity constrained least squares (ANLS) method (Kim and Park 2007) by Kim

was used to optimize its object function derived from problem (1). The projected gradient method,

the Nesterov's optimal gradient method (Guan et al. 2012) and the fast gradient descent method

(Guan et al. 2011) were also introduced to resolve the optimization problem of SNMF. More

detailed analysis and discussions in the objective functions and solvers of SNMF can be found in

literatures by Wang (2013), Peharz (2012) and Cai (2011) and so on.

3. Band Selection using SNMF-TEMD

In this section, the band selection method using SNMF-TEMD is described. Section 3.1 presents

sparse representations of HSI band vectors from the aspect of SNMF. Section 3.2 introduces the

principle of EMD metric and utilizes the TEMD metric to improve the SNMF. Section 3.3

describes the band selection method with the optimization result of SNMF-TEMD. And section

3.4 summarizes the process of band selection using SNMF-TEMD.

3.1 Sparse Representations of HSI Band Vectors

Assume that a collection of HSI band vectors X xi i 1 R

N

D N

is lying in a union of linear

subspaces Cl l 1 with dimensions d l l 1 , where D is the dimensionality of high-dimensional

k

k

space that is equal to the number of pixels in the image scene, and N is the number of bands (i.e.,

the number of band vectors) with N

D . We assume each band yi R D1 lies in exactly one of

the k linear spaces Cl , and each band can be sparsely represented by a basis matrix constructed

from all k linear subspace, weighted by a sparse coefficient vector. Moreover, the position and

largest value of nonzero entries in the sparse coefficient vector coincides with its underlying

subspace that the band was sampled from.

Considering the corruption from noises in the realistic HSI dataset, each band vector xi X

could be sparsely represented with the basis matrix W as follows:

k

xi j w j Whi ei

(2)

j 1

where hi [1 , , j , , k ]

T

is the coefficient vector, j xi , w j w j xi 0 ,

T

W [w1 , w 2 , , w k ] R Dk is the basis matrix with W 0 , and e i is the error term. The

nonnegative constraint in W results from the nonnegativity of the spectral reflectance values in X .

The nonnegative constraint in j is to avoid negative reconstruction weights from the basis

matrix W . The coefficient vector hi [1 , , j , , k ] is s sparse with s k , that is, the

T

number of nonzero entries in hi is far smaller than k. The positions and values of nonzero entries

in hi reflect the weights from the basis vector from W in reconstructing the band x i . Furthermore,

when we stacking all the bands in columns, equation (2) becomes the matrix form in (3)

X WH E s. t. W 0, H 0, and hi 0 k ,1 i N

H [h1 , h 2 , , hi , h N ] R k N is the coefficient matrix, and the constraint

where

hi

0

(3)

k means that each column vector hi is sparse and the number of its nonzero entries is far

smaller than the dimension k. Considering the nonconvex property of L0 norm in hi , the L1 norm

constraint is adopted to make constraint on columns of the H factor since the L1 norm is proven to

obtain the same solution with the L0 norm under certain conditions (Candes et al. 2006; Ramirez

et al. 2013). Too large values in w i would render that the equation (3) has unstable and even trivial

results and the L2 norm (i.e., the Frobenius norm) constraint is accordingly imposed in the basis

matrix W . Therefore, equation (3) can be rewritten as the following optimization problem (4)

(Kim and Park 2007):

min f ( W, H) X WH

W,H

Where

2

F

+ W

F

2

2

F

N

hi

i 1

2

1

s.t . W 0, H 0

(4)

is the Frobenius norm, the parameter controls the size of entries in W with 0 ,

and the parameter controls the sparseness in columns of H with 0 . The basis matrix W and

the sparse coefficient matrix H can be obtained through optimizing the problem (4). However, the

ED metric in the Frobenius error term of (4) cannot perfectly represent the approximate error

between the data matrix X and its approximation WH because the Gaussian distribution

assumption behind the Euclidean measure contradicts with the reality of the HSI dataset.

Therefore, we utilize the EMD metric (shown in section 3.2) to take place the ED metric, aiming

to improve the objection function in (4) to achieve better optimization results.

3.2 The EMD Metric using Thresholded Ground Distance

The EMD metric is a cross-bin distance that mainly resolves the histogram or image matching

problems (Rubner and Tomasi 2000). The metric was widely used in many applications of

computer vision including image retrieval (Kundu et al. 2012), visual tracking (Karavasilis et al.

2011), and hand gesture recognition (Ren et al. 2011) and so on. The EMD metric, also called the

Monge-Kantarovich problem, aims to minimize the cost that must be performed when

transforming from one feature distribution (i.e., the histogram) into the other. Assume a

high-dimensional real dataset as X [x1x 2 , , x N ] R

D N

, where each column xm represents

the source histogram that corresponds to spectral responses of pixels in the m-th band, and let the

column vector xm X be the target normalized histogram. We utilize the normalized histogram

rather than the unnormalized to guarantee that the total reconstruction weights on the same band

from all the basis vectors sums to 1. The EMD distance between two histograms xm and xm is

formulated as a linear programming problem whose goal is to minimize the total cost in

transforming the source xm to the target xm as follows:

EMD(x m , x m ) f m (i, j )d (i, j ), s.t . f m (i, j ) 0,

i, j

i

where

(5)

f m (i, j ) = X(i,m), f m (i, j ) = X( j,m), f m (i, j ) 1

j

i, j

f m (i, j ) and d (i, j ) are the flow amount and flow cost between the i-th pixel of the

source histogram xˆ m and the j-th pixel of the target histogram xm respectively, and the flow cost

measures with the L1 ground distance. The constraint

f

m

(i, j ) 1 is because of the

i, j

normalized histograms of

xm and xm . Furthermore, the EMD distance between two

matrices X and X is the summation of EMD distances between each column in X and the

corresponding column in X and can be illustrated as follows (Sandler and Lindenbaum 2011):

N

EMD( X, X) ( x m , x m )

(6)

m 1

3

The computational complexity of EMD distance between X and X scales up to O( ND log D) ,

where N and D are the dimensionality and the number of band vectors in the HSI data matrix

respectively. The high computational complexity of EMD render it unfeasible in realistic

applications. Therefore, we utilize the thresholded earth’s mover distance (TEMD) metric (Pele

and Werman 2009) to reduce the computational complexity of the EMD in (5). The TEMD metric

considers noise distributions of spectral responses of HSI data points, and assigns different outliers

with the same large ground distance. The thresholded ground distance in TEMD metric is

represented as dT (i, j ) min(d (i, j ), T ) with the threshold T

0 . The TEMD metric replaces

the flow cost d (i, j ) in (5) with the thresholded distance dT (i, j ) . It reduces the computational

complexity of the original EMD metric between matrices by an order of magnitude. The TEMD

can be easily solved with the min-cost-flow algorithm (Ahuja et al. 1993; Goldberg 1997) and the

approximation error between the original HSI data matrix and its approximation can be measured

using the TEMD metric.

3.3 Band Selection using the Sparse Coefficient Matrix

The objective function in (4) is not convex with respect to both variables W and H , and

therefore multiplicative update rules are usually implemented to achieve a local optima. The

problem (4) can be iteratively optimized by consecutively fixing either W or H , and the

approximate error monotonically decreases as iterations increase. The problem can be

decomposed as the following two optimization subproblems:

H arg min W H X 2 ,s.t . H 0

t 1

1 F

t

H

2

Wt arg min Ht WT X2 ,s.t . W 0

F

W

(7)

Ht T

Wt 1

XT

X

,X

;H =

,X

where Wt 1

, Ht and Wt are the

e 1 01 N t I 2 0

k D

1k

k

optimization result in the t-th iteration .We then replace the Frobenius norm with the TEMD

metric, and problems (7) is transformed into (8):

H t arg min TEMD( X1 , Wt 1H ),s.t . H 0

H

TEMD( X 2 , H t WT ),s.t . W 0

Wt arg min

W

N

D

where TEMD(, ) f m (i, j ) dT (i, j ), s.t . f m (i, j ) 0,

(8)

m 1 i , j 1

dT (i, j ) min( d (i, j ), T ), f m (i, j ) 1

i, j

where the variables without notations are the same with the aforementioned. The basis

matrix W0 and the coefficient matrix H 0 are initialized with random matrices. At the t-th iteration,

the coefficient matrix Ht is optimized using the TEMD metric with the basis matrix Wt 1 fixed.

Analogously, the basis matrix Wt is updated using the TEMD metric and the optimized

coefficient matrix Ht . The two matrices in (8) are iteratively updated using the min-cost-flow

algorithm

and

the

update

terminates

until

the

absolute

error

EMD( X1 , Wt 1H )-EMD( X 2 , H t WT ) is smaller than a certain positive threshold . The

optimized basis matrix W and the optimized sparse coefficient matrix H is finally found.

With respect to each band x i , nonzero entries in the column vector hi show the weights from all

basis vectors of W in reconstructing the band x i . Specifically, the position of the largest entry

in hi explains that the band x i belongs to the subspace whose corresponding basis has the largest

weights in hi . All band vectors in X are accordingly segmented into k clusters and each band

vector x i has the cluster number Ci arg max(hi ) . Two schemes including random sampling and

the distance metrics can be implemented for choosing a representative band from each cluster. We

select the bands that are closest to its cluster centers as the selected band and the ED metric is

utilized to measure the distance between each band vector and its cluster centering. The selected k

bands constitutes the desired band subset using our SNMF-TEMD algorithm.

3.4 The Implementation of SNMF-TEMD

Considering the drawbacks of the ED metric, our SNMF-TEMD method improves SNMF with

the TEMD metric and ameliorates the approximate error during the decomposition process of

SNMF. Our SNMF-TEMD regards that each band is sparsely represented with the basis

matrix W constructed from k linear subspaces and factorizes the HSI data matrix with a

nonnegative basis matrix and a sparse nonnegative coefficient matrix. All the bands are then

segmented into k clusters through the rule that the largest nonzero entries in columns of the sparse

coefficient matrix coincides with the clusters that the corresponding band should belong to. The

bands closest to their clustering centers are selected as elements of the band subset using the

SNMF-TEMD. The process of band selection using SNMF-TEMD is as follows:

1) The HSI data cube is normalized and transformed into two dimensional real data matrix X .

2) The band selection is modelled as the decomposition of X into the basis matrix W and the

sparse coefficient matrix H by solving the optimization problem in (4).

3) The optimization problem is rewritten as the alternative problem (8) using the TEMD metric.

4) The optimization problem (8) is solved using the multiplicative update rules and the

min-cost-flow algorithm. The desired basis matrix W and the desired sparse coefficient

matrix H are then achieved.

5) The positions of the largest entry in columns of H determine the cluster alignments for each

band and the band vectors are segmented into k clusters.

6) The bands nearest to their clustering centers constitute the SNMF-TEMD band subset.

4. Experimental results and Analysis

In this section, three groups of experiments on two widely used HSI datasets including Indian

Pines and Urban datasets are designed to testify our SNMF-TEMD algorithm when selecting an

optimal band subset. Section 4.1 describes the information of Indian Pines and Urban HSI datasets.

Section 4.2 lists and analyzes experimental results from the three groups of experiments.

4.1 Descriptions of Two HSI datasets

The Indian Pines dataset was taken from Multispectral Image Data Analysis System group at

Purdue University (https://engineering.purdue.edu/~biehl/MultiSpec/aviris_documentation.html).

The dataset was acquired by NASA on June 12, 1992 using the AVIRIS sensor from JPL. The

dataset has 20 m spatial resolutions and 10 nm spectral resolutions within a spectrum range of

200-2400 nm. A subset of the image scene of size 145×145 pixels depicted in Figure 1 is used in

our experiment and covers an area of 6 miles west of West Lafayette, Indiana. The dataset was

preprocessed with radiometric corrections and bad band removal, and the final 200 bands left with

calibrated data values proportional to radiances. Sixteen classes of ground objects exist in the

image scene, and the ground truth for both training and testing samples for each class is listed in

Table 1.

The Urban dataset was downloaded from the website of US Army Geospatial Center

(www.tec.army.mil/hypercube). The dataset was acquired by a HYDICE sensor with 10 nm

spectral resolution and 2 m spatial resolutions. The low SNR band sets [1-4, 76, 87, 101-111,

136-153, 198-210] were eliminated from the initial 210 bands, leaving the final 162 bands. Figure

2 shows a small image subset of size 307×307 pixels selected from the larger image. The small

dataset covers an area at Copperas Cove near Fort Hood, TX and has twenty-two classes of

ground objects. Table 2 shows the ground truth information for training and testing samples in

each class.

Figure 1. The image of Indian Pines dataset

Figure 2. The image of Urban dataset

Table 1 The ground truth of training and testing samples in each class for the Indian Pines dataset

Class

Sample

Class

Sample

Label

Name

Train

Test

Label

Name

Train

Test

1

Alfalfa

9

37

9

Oats

4

16

2

Corn-notill

286

1142

10

Soybeans-notill

194

778

3

Corn-min

166

664

11

Soybeans-min

491

1964

4

Corn

47

190

12

Soybeans-clean

119

474

5

Grass/Pasture

97

386

13

Wheat

41

164

6

Grass/Trees

146

584

14

Woods

253

1012

7

Grass/pasture-mowed

6

22

15

Bldg-Grass-Tree Drives

77

309

8

Hay-windowed

96

382

16

Stone-Steel towers

19

74

2051

8198

Total

Table 2 The ground truth of training and testing samples in each class for the Urban dataset

Class

Sample

Class

Sample

Label

Name

Train

Test

Label

Name

Train

Test

1

AsphaltDrk

45

40

12

Roof02BGvl

17

22

2

AsphaltLgt

26

32

13

Roof03LgtGray

12

23

3

Concrete01

64

60

14

Roof04DrkBrn

39

45

4

VegPasture

116

120

15

Roof05AChurch

38

47

5

VegGrass

65

62

16

Roof06School

28

36

6

VegTrees01

123

140

17

Roof07Bright

35

37

7

Soil01

52

61

18

Roof08BlueGrn

21

24

8

Soil02

24

29

19

TennisCrt

47

49

9

Soil03Drk

27

32

20

ShadedVeg

17

23

10

Roof01Wal

57

61

21

ShadedPav

30

34

11

Roof02A

44

47

22

VegTrees01

126

135

1053

1159

Total

4.2 Experimental Results

Three groups of experiments are carried to test the performance of our SNMF-TEMD method in

band selection for the purpose of classification. Four widely used methods are used to make

holistic comparisons including affinity propagation (AP) (Qian et al. 2009), maximum-variance

principal component analysis (MVPCA) (Chang et al. 1999), SNMF with ED metric (SNMF-ED)

(Li and Qian 2011) and SNMF with KLD metric (SNMF-KLD) (Shi et al. 2014). First, we

quantify the band selection performance of SNMF-TEMD and compare the results with those of

the other four methods. The experiment assesses the performance of the SNMF-EMD in band

selection before classification. Second, we compare the classification accuracies of SNMF-TEMD

against those of the other four methods. Two widely used classifiers are used in the experiment,

K-nearest neighbor (KNN) (Cover and Hart 1967) and Support Vector Machine (SVM) (Steinwart

and Christmann 2008) classifiers. The overall classification accuracy (OCA) and average

classification accuracy (ACA) is adopted to measure the classification accuracy of all five

methods. The KNN classifier uses the Euclidean distance, and the SVM classifier uses the radial

basis function (RBF) kernel function with the variance parameter and the penalization factor

obtained via cross-validation. Third, we compare the computational time of SNMF-TEMD against

other four band selection methods when varying the size of band subset k. The experiment

investigates the computational performance of SNMF-TEMD. The following experimental results

without specific notations are the average results of ten different and independent experiments.

1) Quantitative evaluation of SNMF-TEMD. The experiment evaluates the band selection

results obtained from SNMF-TEMD and other four methods using three quantitative measures

before classification. The average information entropy (AIE) measures the information amount in

the band subset. The average correlation coefficient (ACC) estimates the intra-band correlations

within the band subset. The average relative entropy (ARE) (also called average Kullback-Leibler

divergence, AKLD) evaluates the inter-separabilities of selected bands for classification. The

reason for using three above quantitative measures is because they measure the three essential

performance characteristic of optimal band subset that is to have high information amount, low

intra-band correlations and high inter-separabilities. The band number k in SNMF-TEMD is

manually estimated after cross-validation and is set as the dimensionality of band subset for all

five methods. The parameters k in Indian Pines and Urban datasets are 15 and 19 respectively. In

the SNMF-ED and SNFM-EMD methods, the parameter β that controls the entry size of

dictionary matrix and the parameter η that determines the sparseness of coefficient matrix are

estimated using cross-validation and the optimal β and η having the best result are selected. In the

SNMF-KLD method, the parameter λ controls the balance between the sparsity of coefficient

matrix and the approximation error. For the Indian Pines dataset, the β and η in SNMF-ED and

SNMF-TEMD in Indian Pines dataset are chosen as 3.5 and 0.04 respectively, and the λ in

SNMF-KLD is 0.03. For the Urban dataset, the β and η in SNMF-ED and SNMF-TEMD in Indian

Pines dataset are chosen as 4.0 and 0.02 respectively, and the λ in SNMF-KLD is 0.02. The

thresholds T in TEMD of Indian Pines and Urban datasets are set as 20 and 15 via cross-validation.

The convergence thresholds in SNMF-TEMD of Indian Pines and Urban datasets are manually set

as 0.0003 and 0.0001 respectively.

Table 3 Contrast in quantitative measures of band subsets from all five methods on both datasets

Datasets

Quantitative Measures

AP

MVPCA

SNMF-ED

SNMF-KLD

SNMF-TEMD

Indian

AIE

9.5214

9.4289

10.0592

12.4762

14.6853

Pines

ACC

0.3079

0.7883

0.2908

0.2392

0.2047

(k=15)

ARE

32.2805

17.2572

31.0735

34.5842

36.7526

AIE

7.4287

7.0782

8.0031

8.7674

9.0258

ACC

0.6682

0.8976

0.6458

0.5946

0.5532

ARE

9.7286

0.6259

9.7873

10.6253

11.0248

Urban

(k=19)

Table 3 lists quantitative evaluation results of all five methods on Indian Pines and Urban

datasets. For the Indian Pines dataset, the SNMF-TEMD performs best among all the methods for

all three quantitative measures. The MVPCA performs worst in all three quantitative measures.

The SNMF-ED behaves better than MVPCA and AP whereas it is worse than SNMF-KLD in three

quantitative measures. The similar observations exist in the Urban dataset. The MVPCA

performance is the worst of all in the three quantitative measures. The SNMF-ED again behaves

worse than SNMF-KLD and SNMF-TEMD whereas it overpass AP and MVPCA in the three

quantitative measures. The advantage of SNMF-KLD over SNMF-ED explains the superiority of

KLD metric over ED metric in measuring the approximation error of the HSI datasets. The

SNMF-TEMD in the Urban dataset has the highest AIE and ARE and lowest ACC, and performs

better than SNMF-KLD and SNMF-ED. That means the EMD metric improves the approximation

measure of the HSI dataset than KLD and ED metrics and ameliorates the band selection result.

From above observations, the conclusions are in the following. The SNMF-TEMD has the best

performance in three quantative measures and is the best choice among all five methods in

selecting an optimal band subset. In contrast, the MVPCA perform worst in the quantitative

evaluations and is a bad choice for optimal band selection.

2) Classification performance of SNFM-EMD. This experiment tests the classification

performance of the SNMF-EMD by varying the number of bands k. We compare classification

accuracies using the OCA and ACA and compares with those of other four methods. For each

dataset, we repeatedly sub-sample the training samples and testing samples ten times to achieve

accurate classification accuracies. In the experiment, the size of band subset k in Indian Pines

dataset varies from 2 to 45 with a step interval of 2, and the k in Urban dataset varies between 2

and 50 with a step interval of 2. The neighbor size k1 in the KNN classifier is set as 3, and the

threshold of total distortion in the SVM classifier is set as 0.01. Using cross-validation, the β and η

in SNMF-ED and SNMF-TEMD in Indian Pines dataset are chosen as 3.2 and 0.05 respectively,

the β and η in Urban dataset are chosen as 3.8 and 0.02 respectively. The parameters λ of

SNMF-KLD method in Indian Pines and Urban datasets are 0.02 and 0.01 respectively. Other

parameters without notations in all the five methods are the same as their counterparts in the

previous experiment.

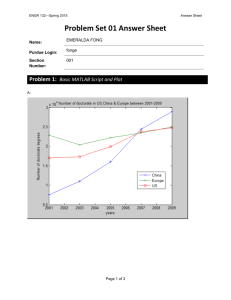

Figure 3 The OCA results of all the five methods on Indian Pines and Urban datasets

Figure 3 shows the OCA results of all the five methods on both HSI datasets, using the SVM

and KNN classifiers. We did not plot the ACA curves because of the similarity between the ACA

curves and the OCA curves. For each dataset and each classifier, the OCA curve is rising with the

increasing band number k. The curves changes slowly after a certain threshold of the band number

k and most curves become flat with slight fluctuations. The MVPCA curves have the lowest values

among all the methods, regardless of each classifier and each dataset. The SNMF-TEMD

outperforms best among all the methods, especially with a moderate or larger band number k. The

SNMF-KLD curves behave worse than SNMF-TEMD whereas the curves performs better than the

regular SNMF-ED curves. Moreover, we compare the OCA and ACA results of all the five

methods on both datasets, using a selected band number k. The selected band number k in Indian

Pines dataset is 15 and the k in Urban dataset is 19. Table 4 shows that the SNMF-TEMD has the

best classification accuracies for both datasets using different classifiers. The SNMF-KLD result

behaves better than SNMF-ED, AP and MVPCA in classification. The MVPCA has the worst

ACAs and OCAs among all the methods. We make the conclusions in the following. The

SNMF-TEMD preforms best among all five methods for HSI classification. SNMF-KLD behaves

better than SNMF-ED in band selection for classification and that coincides with the conclusion

by Shi (Shi et al. 2014). The MVPCA is the worst of all and that again support the conclusions in

the experiment 1). The SNMF-ED achieves similar or comparable classification accuracies to

those of AP.

Table 4 Classification accuracies of all methods using a selected band number on both datasets

Datasets

Indian

Classification Accuracy

AP

MVPCA

SNMF-ED

SNMF-KLD

SNMF-TEMD

ACA

0.6745

0.4978

0.7124

0.7328

0.7657

OCA

0.7566

0.6158

0.7492

0.7628

0.8216

ACA

0.6245

0.4492

0.6262

0.6521

0.6904

OCA

0.6570

0.5148

0.6660

0.6894

0.7219

ACA

0.8721

0.8481

0.8663

0.9280

0.9345

OCA

0.9387

0.9302

0.9451

0.9516

0.9697

ACA

0.8649

0.8276

0.8684

0.9148

0.9306

OCA

0.9342

0.9125

0.9276

0.9402

0.9495

SVM

Pines

(k=15)

KNN

SVM

Urban

(k=19)

KNN

3) Computational performance of SNFM-EMD. The experiment tests the computational speed

of SNMF-TEMD and the other four methods when varying the sizes of band subset k. For the

Indian pines dataset, k is set between 6 and 30 with a step interval of 6, and k in Urban dataset is

set between 10 and 50 with a step interval of 10. For the Indian Pines dataset, the β and η of

SNMF-ED and SNMF-TEMD are chosen as 3.5 and 0.01 respectively after cross-validation. The

β and η in Urban dataset are 3.0 and 0.03 respectively. The parameters λ of SNMF-KLD method in

Indian Pines and Urban datasets are 0.05 and 0.01 respectively. Other parameters in all the seven

methods are the same as their counterparts in the previous experiments.

We run the experiment on a Windows 7 computer with Inter i7-4700 Quad Core Processor and

16GB of RAM. Both SNMF-TEMD and other four algorithms are implemented in Matlab 2013b.

Table 5 shows the comparisons in the computations times of all the five methods on the Indian

Pines and Urban datasets. For each HSI dataset, computational times of all the methods increase

with the increasing k. The AP takes the longest computational times among all the methods. The

SNMF-TEMD has shorter computational times than AP but the computational speed is slower

than the other three methods. The SNMF-KLD takes longer computational times than SNMF-ED

and MVPCA, and the MVPCA has the shortest computational times among all the methods. The

longest computational times in AP is because it computes the entire similarity matrix of HSI band

vectors. The advantage in computational times of MVPCA results from the low computational

complexity in principal component analysis transformation of the HSI dataset. The longer

computational times in SNMF-TEMD than both SNMF-ED and SNMF-KLD is because of the

high computational complexity in TEMD metric although it has clear lower computational

complexity than the regular EMD. From above observations, the computational times in

increasing order of all the seven methods is as follows: MVPCA, SNMF-ED, SNMF-ED,

SNMF-KLD, SNMF-TEMD and AP.

Table 5 Computational times of five band selection methods using different k on two HSI datasets

Computational times (Seconds)

Size of band

Datasets

subset k

AP

MVPCA

SNMF-ED

SNMF-KLD

SNMF-TEMD

k=6

23.003

2.593

3.321

3.942

14.583

k=12

29.487

3.481

4.118

4.781

20.391

k=18

33.569

4.286

4.686

5.059

29.487

k=24

37.831

4.884

5.023

5.532

33.241

k=30

40.213

5.490

5.782

6.265

38.322

k=10

47.397

14.964

15.732

16.114

43.392

k=20

55.585

16.185

17.124

19.259

52.094

k=30

64.627

19.687

23.587

24.501

58.593

k=40

69.968

26.203

28.918

31.387

65.476

k=50

77.048

33.598

34.901

36.249

72.425

Indian

Pines

Urban

5. Conclusions and future work

In this paper, we propose a SNMF-TEMD method to solve the band selection problem in HSI data

classification. The SNMF-TEMD method models the band selection problem from the respective

of matrix factorization. Considering the theoretical drawbacks of ED and KLD metrics, the

SNMF-TEMD introduces the EMD metric to better measure the approximation errors during the

optimization process. Three groups of experiments are carried in order to testify the classification

performance of our method and the results are compared with those of four popular band selection

methods including AP, MVPCA, SNMF-ED and SNMF-KLD. The quantative evaluation results

show that SNMF-TEMD is the best choice in selecting an optimal band subset against other four

methods. The classification results shows that the SNMF-TEMD has the best performance in

classification accuracies, including both ACAs and OCAs. The computation results shows that the

SNMF-TEMD costs longer computational times than SNMF-KLD and SNMF-ED whereas it has

shorter computational times than the AP method. Therefore, comparing with SNMF-KLD and

SNMF-ED, the SNMF-TEMD is a better choice in band selection although the method fairly

compromises its computational speeds. The computational problem can be solved by using

parallel computing schemes and high-performance computers.

However, some problems have not been perfectly solved. First, too many parameters involved

in our SNMF-EMD method including the convergence threshold, the distance threshold T, the

balance parameters β and η. They all make effect on the performance of SNMF-TEMD in band

selection. Unfortunately, we have not found an automatic or semi-automatic estimation method

until now. The above parameters can only be manually determined via cross-validation or

experiences because of the complexity property of HSI data. Second, considering the paragraph

limits in the paper, we only make comparisons with four popular band selection methods on two

HSI datasets. The future work will comprises the comparisons between SNMF-TEMD with other

methods such as the linear constrained minimum variance-based band correlation constraint

(LCMV-BCC) (Chang and Wang 2006) method and the collaborative sparse model (CSM) method

(Du et al. 2012). Meanwhile, we will apply our SNMF-TEMD method into more realistic HSI

datasets to further testify and improve our proposed method.

Acknowledgement

This work was funded by National Nature Science Foundation of China (41401389, 41171073,

41471004), by Research Project of Zhejiang Educational Committee (Y201430436), by Ningbo

Natural Science Foundation (2014A610173), by Natural Science Foundation of Zhejiang

Province(Y5110321), by the Discipline Construction Project of Ningbo University

(ZX2014000400) and by the K. C. Wong Magna Fund in Ningbo University. The authors would

like to thank the editor and referees for their suggestions which improved the manuscript.

References

Ahuja RK, Magnanti TL, Orlin JB (1993) Network flows: theory, algorithms, and applications. Englwood Cliffs, New Jersey

Arzuaga-Cruz E, Jimenez-Rodriguez LO, Velez-Reyes M (2003) Unsupervised feature extraction and band subset selection

techniques based on relative entropy criteria for hyperspectral data analysis AeroSense 2003: 462-473

Ball JE, West T, Prasad S, Bruce LM (2007) Level set hyperspectral image segmentation using spectral information

divergence-based best band selection. Proceedings of 2007 IEEE International Geoscience and Remote Sensing

Symposium (IGARSS 2007) 4053-4056

Cai D, He X, Han J, Huang TS (2011) Graph regularized nonnegative matrix factorization for data representation IEEE

Transactions on Pattern Analysis and Machine Intelligence 33(8):1548-1560

Candes EJ, Romberg JK, Tao T (2006) Stable signal recovery from incomplete and inaccurate measurements Communications on

pure and applied mathematics 59(8):1207-1223

Chang C-I, Du Q, Sun T-L, Althouse ML (1999) A joint band prioritization and band-decorrelation approach to band selection for

hyperspectral image classification IEEE Transactions on Geoscience and Remote Sensing 37(6):2631-2641

Chang C-I, Wang S (2006) Constrained band selection for hyperspectral imagery IEEE Transactions on Geoscience and Remote

Sensing 44(6):1575-1585

Cover T, Hart P (1967) Nearest neighbor pattern classification IEEE Transactions on Information Theory 13(1):21-27

Donoho D, Stodden V (2004) When does non-negative matrix factorization give a correct decomposition into parts? Advances in

neural information processing systems 2004:1141-1148

Du B, Zhang L (2014a) Target detection based on a dynamic subspace Pattern Recognition 47:344-358

Du B, Zhang L (2014b) A Discriminative Metric Learning Based Anomaly Detection Method IEEE Transactions on Geoscience

and Remote Sensing 52(11):6844-6857

Du H, Qi H, Wang X, Ramanath R, Snyder WE (2003) Band selection using independent component analysis for hyperspectral

image processing. Proceedings of 32nd IEEE Applied Imagery Pattern Recognition Workshop 93-98

Du Q (2003) Band selection and its impact on target detection and classification in hyperspectral image analysis. Proceedings of

2003 IEEE Workshop on Advances in Techniques for Analysis of Remotely Sensed Data 374-377

Du Q, Bioucas-Dias JM, Plaza A (2012) Hyperspectral band selection using a collaborative sparse model. Proceedings of 2012

IEEE International Geoscience and Remote Sensing Symposium (IGARSS) 3054-3057

Gao Y, Church G (2005) Improving molecular cancer class discovery through sparse non-negative matrix factorization

Bioinformatics 21(21):3970-3975

Goldberg AV (1997) An efficient implementation of a scaling minimum-cost flow algorithm Journal of algorithms 22(1):1-29

Guan N, Tao D, Luo Z, Yuan B (2011) Manifold regularized discriminative nonnegative matrix factorization with fast gradient

descent IEEE Transactions on Image Processing 20(7):2030-2048

Guan N, Tao D, Luo Z, Yuan B (2012) NeNMF: an optimal gradient method for nonnegative matrix factorization IEEE

Transactions on Signal Processing 60(6):2882-2898

Guo B, Gunn SR, Damper R, Nelson J (2006) Band selection for hyperspectral image classification using mutual information

IEEE Geoscience and Remote Sensing Letters 3(4):522-526

Hazan T, Shashua A (2007) Analysis of L2-loss for probabilistically valid factorizations under general additive noise. Technical

Report 2007-13, The Hebrew University

Hoyer PO Non-negative sparse coding (2002). Proceedings of the 12th IEEE Workshop on Neural Networks for Signal

Processing 557-565

Hoyer PO (2004) Non-negative matrix factorization with sparseness constraints The Journal of Machine Learning Research

5:1457-1469

Jia S, Qian Y (2009) Constrained nonnegative matrix factorization for hyperspectral unmixing IEEE Transactions on Geoscience

and Remote Sensing 47(1):161-173

Karavasilis V, Nikou C, Likas A (2011) Visual tracking using the Earth Mover's Distance between Gaussian mixtures and Kalman

filtering Image and Vision Computing 29(5):295-305

Keith DJ, Schaeffer BA, Lunetta RS, Gould Jr RW, Rocha K, Cobb DJ (2014) Remote sensing of selected water-quality

indicators with the hyperspectral imager for the coastal ocean (HICO) sensor International Journal of Remote Sensing

35(9):2927-2962

Keshava N (2004) Distance metrics and band selection in hyperspectral processing with applications to material identification

and spectral libraries IEEE Transactions on Geoscience and Remote Sensing 42(7):1552-1565

Kim H, Park H (2007) Sparse non-negative matrix factorizations via alternating non-negativity-constrained least squares for

microarray data analysis Bioinformatics 23(12):1495-1502

Kundu MK, Chowdhury M, Banerjee M (2012) Interactive image retrieval using M-band wavelet, earth mover’s distance and

fuzzy relevance feedback International Journal of Machine Learning and Cybernetics 3(4):285-296

Li J-m, Qian Y-t (2011) Clustering-based hyperspectral band selection using sparse nonnegative matrix factorization Journal of

Zhejiang University SCIENCE C 12(7):542-549

Li L, Zhang Y (2009) SENSC: A stable efficient algorithm for nonnegative sparse coding Acta Automatica Sinica

35(10):1257-1271

Melgani F, Bruzzone L (2004) Classification of hyperspectral remote sensing images with support vector machines IEEE

Transactions on Geoscience and Remote Sensing 42:1778-1790

Morup M, Madsen KH, Hansen LK Approximate L0 constrained non-negative matrix and tensor factorization. Proceedings of

IEEE International Symposium on Circuits and Systems (ISCAS 2008) 1328-1331

Murphy RJ, Monteiro ST (2013) Mapping the distribution of ferric iron minerals on a vertical mine face using derivative analysis

of hyperspectral imagery (430–970nm) ISPRS Journal of Photogrammetry and Remote Sensing 75:29-39

Peharz R, Pernkopf F (2012) Sparse nonnegative matrix factorization with L0-constraints Neurocomputing 80:38-46

Pele O, Werman M Fast and robust earth mover's distances (2009). Proceddings of 2009 IEEE 12th international conference on

Computer vision 460-467

Qian Y, Yao F, Jia S (2009) Band selection for hyperspectral imagery using affinity propagation IET Computer Vision

3(4):213-222

Ramirez C, Kreinovich V, Argaez M (2013) Why L1 Is a Good Approximation to L0: A Geometric Explanation Journal of

Uncertain Systems 7(3): 203-207

Ren Z, Yuan J, Zhang Z (2011) Robust hand gesture recognition based on finger-earth mover's distance with a commodity depth

camera. Proceedings of the 19th ACM international conference on Multimedia 1093-1096

Rubner Y, Tomasi C (2000) Perceptual metrics for image database navigation vol 1. Springer

Sandler R, Lindenbaum M (2011) Nonnegative matrix factorization with Earth mover's distance metric for image analysis IEEE

Transactions on Pattern Analysis and Machine Intelligence 33(8):1590-1602

Shi B, Chen N, Sun W (2014) Sparse Nonnegative Matrix Factorization for Hyperspectral Optimal Band Selection Acta

Geodaetica et Cartographica Sinica 42(3): 351-358 [in Chinese]

Steinwart I, Christmann A (2008) Support vector machines. Springer Verlag

Sun W, Halevy A, Benedetto J,Czaja W, Liu C,Wu H, Shi B, Li W (2014) UL-Isomap based nonlinear dimensionality reduction

for hyperspectral imagery classification ISPRS Journal of Photogrammetry and Remote Sensing 89:25-36

Tong X, Xie H, Weng Q (2013) Urban Land Cover Classification With Airborne Hyperspectral Data: What Features to Use?

IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 99: 1-12

Wang T, Du B, Zhang L (2013) A kernel-based target-constrained interference-minimized filter for hyperspectral sub-pixel target

detection IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 6:626-637

Wang Y-X, Zhang Y-J (2013) Nonnegative matrix factorization: A comprehensive review IEEE Transactions on Knowledge and

Data Engineering 25(6):1336-1353

Wen J, Tian Z, Liu X, Lin W (2013) Neighborhood preserving orthogonal PNMF feature extraction for hyperspectral image

classification IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 6(2):759-768

Wen J, Zhao Y, Zhang X, Yan W, Lin W (2014) Local discriminant non-negative matrix factorization feature extraction for

hyperspectral image classification International Journal of Remote Sensing 35(13):5073-5093

Xia W, Wang B, Zhang L (2013) Band selection for hyperspectral imagery: a new approach based on complex networks IEEE

Geoscience and Remote Sensing Letters 10(5):1229-1233

Xiao Z, Bourennane S (2014) Constrained nonnegative matrix factorization and hyperspectral image dimensionality reduction

Remote Sensing Letters 5(1):46-54

Yang H, Du Q, Su H, Sheng Y (2011a) An efficient method for supervised hyperspectral band selection IEEE Geoscience and

Remote Sensing Letters 8(1):138-142

Yang Z, Zhou G, Xie S, Ding S, Yang J-M, Zhang J (2011b) Blind spectral unmixing based on sparse nonnegative matrix

factorization IEEE Transactions on Image Processing 20(4):1112-1125

Zhu F, Wang Y, Xiang S, Fan B, Pan C (2014) Structured Sparse Method for Hyperspectral Unmixing ISPRS Journal of

Photogrammetry and Remote Sensing 88:101-118