
Explaining How Submarines Perceive their Surroundings: A Museum Installation

Emmanuel Frécon, Olov Ståhl, Jonas Söderberg, Anders Wallberg

Swedish Institute of Computer Science

Box 1263

SE-164 29 Kista

Sweden

{emmanuel, olovs, jas, andersw}@sics.se

Abstract

Under water, submarines are blind and rely heavily

on their sonar system to detect vessels and animals in

their surroundings. We describe a museum installation

that places the public into a cramped space to explain

the technical and psychological process that sonar

operators undergo. The installation is composed of

several coupled interactive stations offering different

perspectives onto a virtual environment representing a

part of the Baltic Sea. The virtual environment and its

presentation within the installation are implemented as

an application of the DIVE research toolkit. The

installation has been on display in several museums on

a daily basis for almost a year. We describe the

technical solutions that we have employed to ensure

stability of the installation and some of the lessons we learned.

1. Introduction

This paper describes the design and implementation

of a museum installation. This installation illustrates

how a submerged submarine uses sound to gather

information about its surroundings. A submarine under

water is more or less blind and relies heavily on its

sonar system to detect other vessels. Although the

periscope can be used to spot surface vessels, its range

is limited (a couple of miles) and its use also increases

the risk of the submarine being detected. A sonar on

the other hand can pick up sounds from objects

hundreds of miles away. To make this contrast

clear to visitors, the installation relies heavily on sound,

using a soundscape consisting of authentic underwater

recordings of a range of different types of vessels.

The installation is a collaboration between SICS and

the Swedish national maritime museums and is part of a

long-term touring exhibition to celebrate the centennial

of the Swedish submarine force. The installation

described here has been on display for about a year,

touring between a number of museums across Sweden.

Technically, the installation is an application based on

the Distributed Interactive Virtual Environment (DIVE),

a research prototype for the development of virtual

environments, user interfaces and applications based on

shared 3D synthetic environments (see [2] [3] [4] and

[5]).

Logically, the installation is based on a shared

virtual environment that represents the southern part of

the Baltic Sea. Within this environment navigate a

number of different vessels: the submarine used for the

purpose of the explanation and a number of boats and

other objects. The installation shows this virtual

environment from within five very different

perspectives at a number of publicly accessible and

interactive stations. One station takes the form of an

interactive nautical chart. A second station is a digital

periscope. The third station alternately visualises the

direction of incoming sonar waves onto the submarine

and an identification process of audible nautical

objects. These three stations are complemented by a

surround sound system that renders the sound of all

objects virtually in the vicinity of the submarine.

2. Motivation

The submarine installation has been on constant

display to the public for long periods of time. It is

routinely started during the mornings and switched off

at museum closing time. The challenge of taking a

research prototype such as DIVE into such a stringent

environment was the major motivation for our involvement

in the project. Later on we describe a number of more

detailed requirements that the installation has had. We

also present the technical solutions that we have

employed to minimise risks and maximise user

experience at each station while still utilising the

different perspectives on a single shared environment

and providing cues to cross-reference these.

In practice, there were two different actors involved

in ordering the installation. Once we had recognised

this, we looked into using participatory design

strategies when designing the installation and the

components that it would include. This resulted in a

more concerted exhibit where the requirements of all

parties were taken into account. We have previously

used such strategies in a number of projects and these

have shown the importance of the dialogue that occurs.

Typically, collaboration in shared virtual

environments assumes that each participant sees the

same content, albeit from a different perspective.

However, earlier experiences in 2D interfaces have

shown that this is not totally adequate and this has led

to the introduction of “subjective views” ([6] and [7]).

These are usually put in the context of fully navigable

environments and result in applications where the view

onto the environment is tweaked depending on the

participants' roles. Previous experience with an

installation called the Pond [1] had shown us the

importance of shoulder-to-shoulder collaboration.

When designing the submarine installation, we wished

to explore whether these perspectives could meet.

Consequently, we opted for a strategy that would be

based on a shared virtual environment, without any

representation of participants, without any navigation

and with perspectives so different that the notion of

“sharing” almost disappears. The technical challenge

has been for us to see whether our system, which is

highly tuned for navigable shared environments, could

successfully be used in such a different context.

3. Background and Related Work

The ICE laboratory has a long tradition in building VR-based applications that use 3D virtual worlds for

information presentation and exploration. The Web

Planetarium, a three-dimensional interpretation of

HTML structures in the form of a graph [13], the

Library Demonstrator, an information landscape

constructed from the contents of an online library

database [12], and the Pond, an aquatic environment

where database query results are presented as shoals of

water creatures, are three examples of such

applications. All of these applications have been built

using distributed VR-systems, where the application

process shares a virtual world with visualiser processes

that present the world, including the application

objects, to users.

Periscope-like devices for information presentation have

been used in several projects. In [15], an application is

described where users are able to experience a

historical world (including sounds) using a periscope.

Another project [14] uses a periscope in a woodland

setting to present QuickTime movies to children that

illustrate various aspects of how the wood changes

character over a season and how this affects some of its

inhabitants.

4. Requirements

The strongest requirement for the technological

implementation was the high degree of reliability that

the system would have to achieve. Bringing in a

research prototype into the picture to solve the task is

not a straightforward solution. However, years of

development have made us confident in the increasing

stability of the DIVE system and its viability as a more

industrial and robust platform. Actually, the successful

realisation of this very installation has given us even

more confidence in the readiness of the system for

more demanding applications.

This requirement on reliability was compounded by the

nature of the project as a public installation.

Consequently, the different stations have to explain

enough without any need to actually be explained. We

attempted to design the stations, the interaction and the

application behind them as robustly as possible. While

the different stations are designed for a few individuals

gathering around them, we also wanted passers-by

to be able to understand what was

going on and to learn from this experience.

Also, during the design process we had the

requirement to provide an installation that would

explain what it is like to be in a submarine and how

a submarine perceives its environment. Therefore

the experience should be as real as possible, while

still being explanatory enough. An example of

this fine balance between realism and didacticism

is the sea map. It is used very seldom for the purpose

of detection and recognition in real situations.

However, it acts as a central gluing and explaining

artefact in the installation.

5. The Installation

The installation room is intended to represent a submarine, submerged somewhere in the Baltic Sea. A nautical chart displayed on a plasma screen at the centre of the room shows the current position of the virtual submarine. The physical setup of the installation has been slightly altered for each of the museums visited so far to make best use of the available space. However, the content and user experience have remained more or less similar. The basic idea has been to create a somewhat cramped space reminding visitors of the restricted space aboard a submarine. In the open hall of “Teknikens och Sjöfartens hus” in Malmö, this meant having custom made walls and roof (see Figure 1). In Gothenburg at the Göteborg Maritima Centre the exhibition was built on the lower deck of a lighthouse boat, which added a lot to the atmosphere but also created a few problems for the setup (see Figure 3).

Figure 1: A view of the installation in Malmö. There the space also allowed for two back projection screens, one on each side of the room, showing a looped recording of the forward and aft view of the control room of a Swedish submarine.

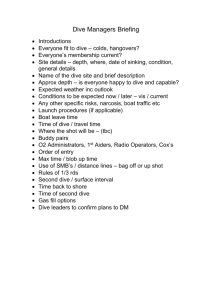

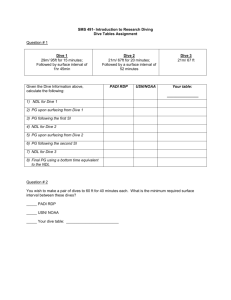

5.1. The Public Stations

The large-scale visual experience of the exhibition is driven by four wall-mounted 40-inch plasma displays, one on each wall. The room also contains two “hands-on” interactive stations. The first is an almost horizontal 21-inch touch screen display containing an interactive chart covering a part of the Baltic Sea south of Stockholm, including the northern tip of Gotland. The second interactive station is a digital periscope. These can be seen to the right and the left of Figure 3, respectively. Screen dumps of the applications running at these stations can be found in Figure 2 and Figure 4, respectively. The periscope presents a graphical view of the ocean surface at the position of the submarine, and can be rotated to look for ships in all directions. The periscope also provides zoom and tilt-angle controllers much like on an actual submarine. Ships that are fairly close to the submarine (within a couple of miles) will be visible as 3D objects moving slowly through the ocean waves.

Figure 2: An example screen shot of the interactive chart.

Figure 3: In Gothenburg, the installation was built in a more confined space.

Two of the plasma displays mirror what is seen in the periscope and in the nautical chart podium to allow groups of people to experience what is on display. The remaining two plasma displays show an underwater scene of the submarine with its sonar sensors and the sound environment it is in. Incoming sound waves are displayed as colour-coded circles approaching the submarine, as shown in Figure 5. During a user-triggered sound analysis these displays will also show the frequency spectrum, a sonar operator video and eventually the identified vessel, as shown in Figure 6.

Figure 4: An example screen shot of the view seen from the digital periscope.

Figure 5: A screen shot from the sonic wave visualisation application.

Figure 6: Alternatively to the view of the submarine and the sound waves, the display also shows additional information in connection with object identification.

An important part of the installation is the computer

controlled 8-channel sound system responsible for

rendering the soundscape. Eight loudspeakers are

evenly spread out around the room making it possible

to simulate sounds coming from all directions.
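To illustrate how a ring of eight evenly spaced loudspeakers can place a sound in any direction, the following minimal sketch distributes a source between the two speakers adjacent to its bearing using a constant-power panning law. The paper does not state which panning law the installation uses, so the law, the function name `speaker_gains` and the convention that speaker 0 sits at bearing 0 with speakers numbered clockwise are all assumptions made for illustration.

```python
import math


def speaker_gains(bearing_deg, n_speakers=8):
    """Return one gain per speaker for a source at the given bearing.

    Assumption: speakers are evenly spaced on a circle, speaker 0 is at
    bearing 0 degrees and indices increase clockwise. The source is
    shared between its two neighbouring speakers with constant power.
    """
    spacing = 360.0 / n_speakers
    pos = (bearing_deg % 360.0) / spacing   # position in "speaker units"
    lower = int(pos) % n_speakers           # speaker just before the source
    upper = (lower + 1) % n_speakers        # speaker just after the source
    frac = pos - int(pos)                   # 0.0 at lower, 1.0 at upper

    gains = [0.0] * n_speakers
    gains[lower] = math.cos(frac * math.pi / 2)
    gains[upper] = math.sin(frac * math.pi / 2)
    return gains


# A source halfway between speakers 0 and 1 drives both equally.
halfway = speaker_gains(22.5)
```

With this law the squared gains always sum to one, so a vessel moving around the submarine would keep roughly constant loudness while its apparent direction changes.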

5.2. Intended User Experience

The installation is intended to give the visitors an understanding of the importance of sound for a submarine to detect vessels in its vicinity but also far away. Via the eight-channel sound system, the visitors are presented with a cacophony of sounds coming from all directions, representing a multitude of objects (vessels, animals, etc.) surrounding the submarine. Via the touch-sensitive nautical chart, visitors are able to select symbols representing these sound-emitting objects. When such a symbol is selected, all other sounds will fade away and the selected sound will be played in isolation. During this time the visitors are able to experience the individual characteristics of this sound, be it a seal, a small boat with an outboard motor, or a large tanker. By selecting different symbols (and thus sounds) the visitors are able to discover that different types of vessels (and animals) produce quite different sounds. When a selected sound has been played for a short while, all object sounds will start again, making the previously selected sound indistinguishable. The selection of individual sounds is intended to illustrate the way that a submarine sonar operator works. By isolating and analysing the sounds one by one, indistinguishable sounds become distinguishable and thus hopefully identifiable.

Although the installation focuses on why sounds are so important to submarines, we also wish to point out that the most interesting sounds are the ones that are caused by vessels of various kinds. To make this point clear the installation includes a digital periscope where the visitors are able to see the ships that are the sources of the sounds heard in the speaker system. By looking at a particular ship in the periscope and selecting the corresponding symbol on the nautical chart, a visitor is thus able to see the ship and at the same time hear the sound that it emits under water.

Visitors will also encounter tools like BTR (Bearing Time Recorder) and LOFARgram (“waterfall” frequency spectrum diagram). These are used aboard modern submarines.

5.3. Design Process and History

In 2000, our lab designed an experimental

collaboration tool called the Pond as part of the EU

project eSCAPE. The Pond is composed of a 50-inch

plasma screen with a touch surface, surrounded by four

speakers and framed in an eye-catching wooden case.

The screen shows the interior of a pond where

information from databases or the Internet is

represented as animated flocks of swimming creatures.

Users gather around the table-like surface to surf,

gather or exchange information. The design concept of

the Pond was based on eye-to-eye contact and

shoulder-to-shoulder collaboration, coupled to

unencumbered physical interaction. User studies have

shown that the Pond stimulates an alternative group

behaviour where entertainment plays a crucial role and

leadership is less prominent.

The Pond rapidly became a media attraction and

was shown at several trade fairs. The first curator of the

Swedish national maritime museums contacted us during

one of these fairs. Inspired by the artefact, he wanted to

discuss ideas for a coming exhibition around

submarines. This first contact developed into a series of

meetings with his team to discuss what an installation

would have to convey.

The global understanding that emerged from these

meetings was that visualisation would form the

essential part of the installation. Under the surface, a

submarine is essentially blind. Short distances can be

overcome with the periscope, but this is useless for

navigation or search missions. Furthermore, for secrecy

reasons a submarine shall not be seen during missions.

However, a submarine is able to listen to its

surroundings in detail and with great precision. The

acoustic information from the sonar is shown aboard in

two different ways: the bearing of the acoustical object

and graphical frequency curves. Using this information

the operator builds an internal representation of what the

surroundings and the moving acoustic objects look like.

We were commissioned to visualise this technological

and psychological process to the public.

Once our assignment had been clarified, we

started to design what the installation would look like

and show. We envisioned a dark room with projection

surfaces covering two of its sides. In the middle of the

room would stand a touch-sensitive console. From this

console, visitors would be able to manipulate an

articulated arm with a projector on its end able to reach

any point of the projection surfaces. An interaction

with the touch surface of the console would send an

active sonar pulse and the projector would diffusely

show its respective place on the projection surface.

Several interactions at the same location would clarify

the picture and pressing somewhere else would

“illuminate” a new area. We imagined that visitors

would thus be able to visualise a virtual underwater

landscape. The console would also show simple

navigation controls so as to be able to visit new virgin

territories. We also worked with the idea of painting

the projection surfaces with phosphorescent paint so

that the parts of the landscape that would thus be

discovered would not disappear at once but remain for

a while and slowly attenuate. The whole installation

would be complemented by an acoustic landscape that

would work in a similar manner. Every geographical

area would correspond to a musical theme, which could

be discovered in pace with the sonar pulses and

developed through the orchestration of more and more

parts and tunes.

We presented our ideas to our contact group and

they were enthusiastic about the concept. We produced

a video with simulations and animations that would

illustrate the ideas. Finally, together with the curator we

presented all the material and ideas at a meeting with a

reference group from the navy, composed of retired

navy officers. This group reacted very negatively to the

concept: they questioned our participation, our

presence, the competence of the museum, the project

leadership, etc. It is during that meeting that we

understood that the exhibition was commissioned by two

different entities and that we would not be able to

implement our concept.

The objective part of the criticism was geared

towards two problems. Submarines have no windows.

Therefore, showing something outside of the submarine

is inadequate and can be misleading. Also, submarines

almost never use active sonar pulses. All the film

sequences showing torpedoes approaching a submarine

are incorrect. Using an active sonar means exposing the

submarine and thus submarines simply stand still and

listen, being almost impossible to detect.

We had to start again from scratch. We decided to

use, in part, the methods for participatory design that

we had inherited from the i3/ESE project KidStory.

Together with the first curator, we created our own

reference group. The first members were a group that

records underwater sounds for the Swedish navy. They

later gave us the authentic sonar sounds and frequency

analysis that we used in the final installation. The

second members were a group that works with

underwater acoustics with a focus on submarine hunting.

They explained to us the heterogeneous environment

formed by the Baltic Sea and the different salt and

temperature layers that compose it. The last member of

our reference group was an active submarine captain

who actually knew how modern submarines are

operated nowadays.

We held a series of meetings with these three

different groups of people separately. We alternated

requests for information that we needed for the

installation and sessions where they could freely tell us

how they would design the installation. Slowly we

aggregated all information and designed a new concept

with the first curator. We had finally understood that

the real challenge of this project consisted in finding

the right balance between, on one side, the requirements

for realism and authenticity that the navy had and, on

the other side, the requirements for experience and

pedagogical clarity that the museum had.

Once we had developed our new concept we contacted

the active captain again and listened to

his comments. Like the initial reference group, he at first

wanted visitors to enter an installation

that looked as much like a submarine as possible.

However, he progressively understood the

requirements that the museum had. The captain was

also present during a new meeting with the initial

reference group. His presence was key to our success

and to the decision to start producing the installation

that is described in this paper.


6. DIVE

DIVE is a long-established system for CVE research

prototyping (see [2] [3] [4] and [5]). An overview of

the structure of the system is given in Figure 7. At the

conceptual and programming level, DIVE is based on a

hierarchical database of objects, termed entities.

Applications operate solely on the scene-graph

abstraction and do not communicate directly with one

another. This technique allows a clean separation

between application and network interfaces. Thus,

programming will not differ when writing single-user

applications or multi-user applications running over the

Internet. This model has proved to be successful; DIVE

has changed its inter-process communication package

three times since the first version in 1991, and existing

applications did not require any redesign.

While the hierarchical model is inherited from

traditional scene graphs, as used in the computer

graphics community, the DIVE database is semantically

richer. For example, it contains structures for storing

information about other users, or non-geometric data

specific to a particular application. In DIVE, the

database is partially replicated at all participating nodes

using a top-down approach, i.e. mechanisms are

offered to control the replication of sub-branches of a

given entity.

In DIVE, an event system realises the operations and

modifications that occur within the database.

Consequently, all operations on entities such as

material modifications or transformations will generate

events to which applications can react. Additionally,

there are spontaneous and user-driven events such as

collision between objects or user interaction with input

devices. An interesting feature of the event system is its

support of high-level application-specific events,

enabling applications to define their content and

utilisation. This enables several processes that are part

of the same application (or a set of applications) to

exchange any kind of information using their own

protocol.
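The event-driven pattern described above can be sketched as follows. This is a minimal Python illustration, not DIVE's Tcl or C interface: the class `EventBus`, its methods and the event names `emitter_selected` and `property_changed` are hypothetical. It shows the two ideas in the text, namely that every database modification generates an event, and that applications can define their own high-level events whose handlers in turn modify the database.

```python
from collections import defaultdict


class EventBus:
    """Toy stand-in for an entity database with an event system."""

    def __init__(self):
        self._handlers = defaultdict(list)
        self.database = {}  # entity name -> dict of properties

    def subscribe(self, event_type, handler):
        """Register interest in one type of event."""
        self._handlers[event_type].append(handler)

    def emit(self, event_type, **payload):
        """Deliver an event to every handler registered for its type."""
        for handler in list(self._handlers[event_type]):
            handler(self, **payload)

    def set_property(self, entity, key, value):
        """Modify the database; the modification itself raises an event."""
        self.database.setdefault(entity, {})[key] = value
        self.emit("property_changed", entity=entity, key=key, value=value)


def on_emitter_selected(bus, entity):
    # Application-specific event: react by changing shared state.
    bus.set_property(entity, "selected", True)


def record_change(bus, entity, key, value):
    # Generic reaction to any database modification.
    changes.append((entity, key, value))


changes = []
bus = EventBus()
bus.subscribe("emitter_selected", on_emitter_selected)
bus.subscribe("property_changed", record_change)
bus.emit("emitter_selected", entity="tanker")
```

After the last line, the application-level event has caused a database modification, which has in turn triggered the second handler. This is the kind of cascade the text describes.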

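Figure 7: The different modules that compose the DIVE system, together with their interfaces. (Module labels recovered from the figure: User Interface, Input Devices, Output Devices, Rendering, Audio and Video i/o, 3D i/o, MIME, Tcl Scripting, Event System, Database, Distribution, SRM Mechanisms, Network.)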

Most events occurring within the system will

generate network updates that completely describe

them. Other connected peers that hold a replica of the

concerned entities will be able to apply the described

modification unambiguously. Network messages are

propagated using the multicast mechanisms that are

built in the system. DIVE uses a variation of SRM

(scalable reliable multicast [8]) to control the

transmission of updates and ensure the consistency of

the database at all connected peers. The SRM approach

requires the transport layer to be able to ask the

application (in this case DIVE as a whole) to regenerate

updates if necessary. Update regeneration is necessary

when gaps are discovered in the sequence numbers that

are associated with every entity in the database. Gaps

imply that network messages must have been lost along

the path from a sender to one of its receivers. In

addition it is possible to access any document using

more common network protocols (HTTP and FTP),

and to integrate these documents within the

environment by recognizing their media types (such as

VRML, HTML, etc.).
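The gap detection on per-entity sequence numbers described above can be illustrated with a small sketch. It is a simplification under stated assumptions: the names `ReplicaEntity`, `receive`, `regenerate` and `apply_repair` are hypothetical, the regenerated update is assumed to carry the complete current state of the entity, and the timers that SRM uses to suppress duplicate repair requests are left out.

```python
class ReplicaEntity:
    """Receiver-side copy of one replicated entity."""

    def __init__(self):
        self.last_seq = 0   # sequence number of the last applied update
        self.state = {}


def receive(replica, seq, changes):
    """Handle one incoming update and report what was done with it."""
    if seq <= replica.last_seq:
        return "ignored"            # duplicate or stale message
    if seq > replica.last_seq + 1:
        return "repair_needed"      # gap: at least one message was lost
    replica.state.update(changes)
    replica.last_seq = seq
    return "applied"


def regenerate(source_state, source_seq):
    """Sender side: rebuild an update describing the current state."""
    return source_seq, dict(source_state)


def apply_repair(replica, seq, full_state):
    """Receiver side: adopt the regenerated state and catch up."""
    replica.state = dict(full_state)
    replica.last_seq = seq


# Update 2 is lost on the way, so update 3 reveals a gap.
replica = ReplicaEntity()
first = receive(replica, 1, {"x": 0.0})
third = receive(replica, 3, {"heading": 90.0})
seq, state = regenerate({"x": 5.0, "heading": 90.0}, 3)
apply_repair(replica, seq, state)
```

Because the repair describes the entity as it is now, the receiver does not need the lost message itself, only a consistent current state.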

In any application, the content of the database must

be initialised. DIVE uses a module that manages several

three-dimensional formats and translates them into the

internal data structures that best represent their content.

Usually only one peer will load, and parse, a particular

file and the resulting entity hierarchy will be distributed

to other connected peers through a series of (multicast)

updates that describe the resulting entities.

DIVE has an embedded scripting language, Tcl,

which provides an interface to most of the services of

the system [9]. Scripts register an interest in, and are

triggered by, events that occur within the system. They

will usually react by modifying the state of the shared

database. Moreover, these modifications can lead to

other events, which will possibly trigger additional

scripts. A series of commands allow the logic of the

scripts to gather information from the database and

decide on the correct sequence of actions.

For animations, DIVE integrates the notion of key

frames that has been made popular by VRML. The idea

is to provide a number of animation keys in the form of

transformations at key points in time, together with the

time that should elapse between key frames. As time

goes by, intermediate positions and orientations are

interpolated between key frames. Time objects can be

associated to the hierarchy in various places to

simultaneously control the animation of a number of

objects.

Animation information is part of the distributed

database. Since animations described in such a way are

uniquely dependent on time, their execution can be

performed locally to each process and will not lead to

any network traffic. Operations for starting, stopping or

modifying animations will be propagated following the

same principles as any other distributed data. These

operations are also interfaced at the Tcl level, allowing

for scripts to control animations.
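As an illustration of why key-frame animations need no network traffic once distributed, the sketch below computes a position purely from the key frames and the current time, so every process can evaluate it locally and arrive at the same result. It is a minimal example under stated assumptions: key frames are given with absolute times rather than elapsed intervals, only positions are interpolated (orientations would need a rotation-aware interpolation), and the function name `interpolate` is hypothetical.

```python
from bisect import bisect_right


def interpolate(keyframes, t):
    """Linearly interpolate a position at time t.

    keyframes is a list of (time, (x, y, z)) pairs sorted by time.
    Before the first key the first position is held, and after the
    last key the last position is held.
    """
    times = [time for time, _ in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)          # index of the next key frame
    (t0, p0), (t1, p1) = keyframes[i - 1], keyframes[i]
    a = (t - t0) / (t1 - t0)            # 0.0 at p0, 1.0 at p1
    return tuple(u + a * (v - u) for u, v in zip(p0, p1))


# A vessel that sails east for 10 s, then north for 10 s.
course = [(0, (0, 0, 0)), (10, (100, 0, 0)), (20, (100, 50, 0))]
midway = interpolate(course, 5)
```

Only the list of key frames and the start time have to be shared. The intermediate positions never cross the network.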

The primary display module is the graphical

renderer. Traditionally, the rendering module traverses

the database hierarchy and draws the scene from the

viewpoint of the user. DIVE also has integrated audio

and video facilities. Audio and video streams between

participants are distributed using unreliable multicast

communication. Audio streams are spatialised so as to

build a soundscape, where the perceived output of an

audio source is a function of the distance to the source,

the inter-aural distance and the direction of the source.

The audio module supports mono, stereo or quadraphonic audio rendering through speakers or headphones

connected to the workstation. Input can be taken from

microphones or from audio sample files referenced by

a URL. Similarly, the video module takes its input from

cameras connected to the workstations or video files

referenced by URLs. Video streams can either be

presented to remote users in separate windows or onto

textures within the rendered environment.
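The dependence of the perceived sound on distance, direction and inter-aural distance can be made concrete with a small sketch. The formulas below are common textbook approximations and are not taken from DIVE: gain falls off inversely with distance beyond a reference distance, and the inter-aural time difference is approximated by the path difference `ear_distance * sin(azimuth)` divided by the speed of sound. The function name, the default values and the convention that the listener faces the positive y axis are assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air, at the listener


def spatial_cues(listener, source, ear_distance=0.18, ref_distance=1.0):
    """Return (gain, azimuth, itd) for a source in the horizontal plane.

    listener and source are (x, y) positions in metres. The listener is
    assumed to face the +y direction. Azimuth is in radians, positive to
    the right, and itd is the inter-aural time difference in seconds.
    """
    dx = source[0] - listener[0]
    dy = source[1] - listener[1]
    distance = math.hypot(dx, dy)
    azimuth = math.atan2(dx, dy)
    # Inverse-distance attenuation, clamped so nearby sources do not blow up.
    gain = ref_distance / max(distance, ref_distance)
    # Simple path-difference approximation of the arrival-time difference.
    itd = ear_distance * math.sin(azimuth) / SPEED_OF_SOUND
    return gain, azimuth, itd


# A source ten metres straight ahead: quieter, but no left/right difference.
ahead = spatial_cues((0.0, 0.0), (0.0, 10.0))
```

A source directly to the right at two metres would instead give half the reference gain and the largest time difference that this approximation allows.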

The services described previously are independent

of any DIVE application. Many different DIVE

applications exist that use these services directly. The

DIVE run-time environment consists of a set of

communicating processes, running on nodes distributed

within both local and wide-area networks. The

processes, representing either human users or

autonomous applications, have access to a number of

databases, which they update concurrently. As

described earlier, each database contains a number of

abstract descriptions of graphical objects that, together,

constitute a virtual world. A typical DIVE application

will, upon connection to a virtual world, introduce a set

of objects to the environment that will serve as its user interface and start listening to events and react

accordingly. One essential application of the system is

the 3-D browser, called Vishnu. Vishnu is the

application that gives its user a presence within the

environment. It introduces a new entity called an actor

to the shared environment, which is the virtual

representation of the real user. Vishnu renders a visual

and aural space and provides the users with an interface

that allows them to explore and interact with this space.

Vishnu is a default high-level user client.

Users may also be presented with a two-dimensional

interface that offers access to rendering, collaboration

and editing facilities. The interface itself is written

using the same scripting language as offered by the

world database. Consequently, CVE applications can

dynamically query and modify the appearance of the

2D interface. For example, the London Traveller

application [10] exploits this feature by adding an

application-specific menu to the regular interface of the

DIVE browser.

Finally, a MIME (Multipurpose Internet Mail

Extensions) module is provided to better integrate with

external resources. It automatically interprets external

URLs. For example, an audio stream will be forwarded

onto the audio module where it will be mixed into the

final soundscape.

DIVE thus specifies a range of different services at

different levels. Programmers can use the core libraries

that provide core services and extended modules that

provide user services. However, what tends to

distinguish DIVE from other systems is that there is a

default application, Vishnu, which integrates many of

these services. Vishnu can load a world description file

that, because it allows embedded Tcl scripts, is

powerful enough to describe a very wide range of

environments. The Vishnu application itself rarely

changes, so there is a high degree of interoperability

between instantiations at different users’ sites.

7. Implementing the Installation in DIVE

The submarine installation is implemented using the

DIVE toolkit running on three Windows XP 2.4GHz

P4 computers equipped with GeForce4 class graphics

cards. These computers are networked together

without any connection to the outside world. The

following sections describe the software

environment and some technical issues regarding

the design and implementation, as well as lessons

learned as the work progressed.

7.1. The DIVE toolkit

[Editorial note: Do we really need this section?]

7.2. Software Design and Implementation

7.2.1. Overview

[Editorial note: Perhaps something general about the implementation.]

As described earlier, the installation includes three

networked PCs that each run a DIVE process. The

processes are responsible for generating the chart,

underwater and periscope scenes and communicate by

sharing a DIVE virtual world.

7.2.2. Subjective views

Since each scene is quite different graphically, the

world mainly serves as a common coordinate system in

which DIVE objects representing the submarine and a

number of sound emitters (surface and underwater

vessels as well as some animals) are placed. The world

itself has no common graphical representation since

each process needs to present it in a different way. For

instance, the chart station will display a sound emitter

as a 2D icon while the periscope will present the same

object using a 3D ship model. This is achieved by

using DIVE's holder mechanism. A holder is a DIVE

scene graph object that may contain the URL of a file

which, once loaded, creates a sub-hierarchy of objects

that are placed under the holder in the graph. When

the holder is made a local object, DIVE will distribute

the holder, but not the objects that are placed beneath it

in the scene graph. The result is that each process will

load the file contained in the holder locally, but the

objects that are created as a result will be local to that

process. By placing files with different content but with

the same name on the three PCs, and by setting the

holder URL to the name of these files, each process

will load and create a different sub-hierarchy of objects

under the holder. In the chart process, a sound emitter

object will have a sub-hierarchy of objects that define a

2D icon while in the periscope the same object will

have a sub-hierarchy that looks like a 3D ship.

Thus, all three processes share the non-graphical

objects that define the position and orientation of the

sound emitters, while each process decides individually

how these objects look graphically (via the holder

URL). If a shared object is moved or rotated, all

processes will see the same change in the position or

orientation attribute, but visitors will experience such a

change differently depending on which graphical

station they are using.
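
The effect of the holder mechanism can be illustrated with a small sketch. This is not DIVE code: the `SharedEmitter` class, the `STATION_FILES` table, the file name `emitter.vr` and the placeholder contents are all hypothetical, and only show how one shared URL resolves to different local content on each station.

```python
from dataclasses import dataclass

# Hypothetical stand-in for the three PCs' file systems: the same file
# name resolves to different content on each station.
STATION_FILES = {
    "chart": {"emitter.vr": "2D icon"},
    "periscope": {"emitter.vr": "3D ship model"},
    "underwater": {"emitter.vr": "underwater representation"},
}


@dataclass
class SharedEmitter:
    """Shared (distributed) state: identical in all three processes."""
    name: str
    position: tuple
    holder_url: str  # distributed together with the object


def load_local_representation(station, emitter):
    """Resolve the shared holder URL against one station's local files.

    The returned content is local to the calling process and is never
    distributed to the others.
    """
    return STATION_FILES[station][emitter.holder_url]


ferry = SharedEmitter("ferry", (1200.0, 0.0, -300.0), "emitter.vr")
views = {s: load_local_representation(s, ferry) for s in STATION_FILES}
```

All three stations read the same `position` and `holder_url`, yet `views` holds a different representation for each of them.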

7.2.3. Application logic

The DIVE code that implements and drives the

submarine installation can be divided into two

categories, Tcl scripts and C++ plugins. Scripts are

mainly used to handle initialisation and application

logic (i.e., handle user interactions, animations, the

starting and stopping of sounds, etc.) while plugins

implement more “heavy-weight services” that are used

and controlled by the scripts. For instance, there is a

Midi plugin that exports script commands for opening a

Midi device and for starting and stopping Midi notes.

This plugin is loaded by one of the Tcl scripts that are

executed by the chart process and is then used to

control the playback of authentic underwater

recordings for various vessels and animals as the

visitors interact with the GUI. Other plugins are used

for rendering of the sea surface in the periscope, for

communicating with the periscope hardware and

updating the view as the periscope is turned, as well as

the generation and display of the BTR tool in the chart

GUI. It is our experience that the use of scripts in

combination with plugins is an efficient way to develop

applications. By using plugins, new features and

services can be introduced into DIVE in a flexible way,

without the need to modify the core system. Each such

plugin can be given an application neutral interface

(including a script interface) which can then be used by

scripts according to the specific application needs. By

using script interfaces we don't need to include

application specific code into the plugins which means

that they can be used for other purposes as well. For

instance, the Midi plugin mentioned above knows

nothing about sound emitter objects and what notes to

play as a result of visitors interacting with the chart

GUI. Had this information been compiled into the

plugin, we would have had to modify the code if we

wanted to use the plugin in some other application.

7.2.4. Process synchronization

The scripts that are executed by the chart, underwater

and periscope processes are mainly devoted to tasks

concerning the local station, e.g., updating the GUI,

starting sounds, etc. However, the scripts also contain

code that is intended to synchronise the three processes

at various points of time. When starting the installation

each day, the museum staff needs to start each process

individually, which is done in a certain order.

However, once started, each process waits for the

others to start up and initialise before it continues to

execute the main run-time logic, i.e., moving ships,

reacting to interactions, etc. This is achieved by each

process creating a property (a DIVE object attribute

that may be created during run-time) in the world

object (a shared object representing the world) and

setting the value of this property to indicate its startup

status. By examining these properties, it's possible for a

process to determine if others have initialised correctly

and if it is ok to continue to execute the main

application logic. Whenever a property is created,

updated or deleted, DIVE generates distributed events

(events which are triggered on all processes connected

to the world in which the property exists) which may be

caught and examined by any of the three processes.

The scripts will therefore set up event subscriptions for

the properties created and updated by the “other

processes”, which allows them to know exactly when

these processes are ready to start. A similar procedure

is used when the installation is shut down each night.
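
The startup procedure amounts to a barrier built on shared properties. The sketch below models it in Python rather than in the Tcl used by the installation; `SharedWorld`, `StationProcess` and the property names `startup.<station>` are hypothetical, and event delivery is simulated by direct callbacks inside a single program.

```python
class SharedWorld:
    """Toy model of the shared world object: its properties are visible
    to every process and each change is delivered as an event."""

    def __init__(self):
        self.properties = {}
        self.subscribers = []

    def subscribe(self, callback):
        self.subscribers.append(callback)

    def set_property(self, name, value):
        self.properties[name] = value
        # Every subscriber sees the change, like a distributed event.
        for callback in list(self.subscribers):
            callback(name, value)


class StationProcess:
    STATIONS = ("chart", "underwater", "periscope")

    def __init__(self, name, world):
        self.name = name
        self.world = world
        self.running = False
        world.subscribe(self.on_property_event)

    def startup(self):
        # Announce our own status. Properties set earlier by other
        # processes are still in the world, so a late starter sees them.
        self.world.set_property(f"startup.{self.name}", "ready")

    def on_property_event(self, name, value):
        if self.running or not name.startswith("startup."):
            return
        if all(self.world.properties.get(f"startup.{s}") == "ready"
               for s in self.STATIONS):
            self.running = True  # safe to enter the main run-time logic


world = SharedWorld()
chart = StationProcess("chart", world)
chart.startup()
underwater = StationProcess("underwater", world)
underwater.startup()
started_early = chart.running or underwater.running
periscope = StationProcess("periscope", world)
periscope.startup()
```

No process enters its main logic until the third status property appears, regardless of the order in which the processes are started.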

Properties are also used to synchronise the vessel

identification procedure. Whenever a visitor selects an

unidentified sound emitter for identification in the

chart GUI, the chart script sets the value of a specific

property in the emitter object to the value ‘identify’.

This property change will generate a distributed event

which is caught by the underwater script. If no other

identification is in progress, the underwater script then

starts the video sequence that illustrates the work of a

sonar operator. Once the video sequence is finished and

the sound emitter is identified, the underwater script

changes the property value to ‘identified’. This change

will then cause the chart script to change the 2D icon

representing the sound emitter to an icon that

represents the type of vessel or animal that was

identified.
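
The identification handshake can be summarised as a small state machine driven by one property. The following Python sketch is illustrative only: the class and function names are hypothetical, the property is modelled as a plain attribute, and the event handlers are called by hand instead of by DIVE.

```python
class Emitter:
    def __init__(self, vessel_type):
        self.vessel_type = vessel_type  # what the emitter really is
        self.state = "unidentified"     # the shared property value
        self.icon = "unknown"           # chart-local representation


class UnderwaterScript:
    def __init__(self):
        self.current = None  # emitter whose video sequence is playing

    def on_state_change(self, emitter):
        # React to 'identify' only if no other identification is running.
        if emitter.state == "identify" and self.current is None:
            self.current = emitter
            return True  # video sequence started
        return False

    def on_video_finished(self):
        emitter, self.current = self.current, None
        emitter.state = "identified"
        return emitter


def chart_on_state_change(emitter):
    # The chart swaps the generic icon for one showing the identified type.
    if emitter.state == "identified":
        emitter.icon = emitter.vessel_type


ferry, seal = Emitter("ferry"), Emitter("seal")
underwater = UnderwaterScript()

ferry.state = "identify"                             # visitor selects the ferry
started_first = underwater.on_state_change(ferry)
seal.state = "identify"                              # second request while busy
started_second = underwater.on_state_change(seal)
chart_on_state_change(underwater.on_video_finished())
```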

We have found that using properties in this way makes

it fairly easy to program various process

synchronisation tasks. Although DIVE supports a

message passing API which could have been used to

send synchronisation messages between the processes,

using properties is much simpler since the script

doesn’t need to handle message construction, error

situations (e.g., sending a message to a process that

hasn’t started yet), remembering that a message with a

certain content has been received, etc. Properties are

persistent and distributed automatically by DIVE,

which means that if process A creates a property before

process B is started, process B will still see the

property and its value once started since the property is

part of the shared state. Also, since properties may be

added to objects during run-time, it’s easy to add

application specific object attributes since no C or C++

code in the DIVE system needs to be changed and thus

recompiled.

7.2.5. Sound rendering

The sounds that are used in the installation are

underwater recordings of various vessels and animals,

provided by the Swedish navy. Although DIVE

includes an audio module that supports 3D sound, it

wasn’t able to produce sound for the eight different

channels that were necessary to drive the speakers in the

installation. Instead, we chose to use an external audio

application called Cubase coupled with a software

sampler plugin. Cubase associates the different sounds

with particular Midi notes, which allows DIVE to start

and stop sounds by producing a stream of Midi events.

These events are caught by Cubase by looping Midi

output to Midi input via a cable on the chart station PC.

Cubase is started automatically by the chart

initialization scripts and terminated when the chart

process is shut down in the evenings.

By using a different Midi channel for each speaker, the

chart process is able to select the direction from where

each sound emitter will be heard in the installation

room. By determining the bearing of the sound emitter

relative to the submarine in the virtual world, the chart

process selects the most appropriate speaker and thus

Midi channel for that particular sound. The Midi note

corresponding to a particular sound is determined via a

lookup in a hard coded table in the chart script that

maps vessel types to Midi notes.
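
A minimal sketch of this selection follows. It assumes a coordinate system with x pointing east and y pointing north, compass-style angles, eight equal sectors with sector 0 centred on the bow, and an invented `NOTE_FOR_VESSEL` table; none of these details are taken from the actual chart script.

```python
import math

NUM_SPEAKERS = 8
SECTOR_DEG = 360.0 / NUM_SPEAKERS

# Hypothetical table; the real mapping is hard coded in the chart script.
NOTE_FOR_VESSEL = {"ferry": 60, "submarine": 62, "seal": 64}


def bearing_deg(sub_xy, sub_heading_deg, emitter_xy):
    """Bearing of the emitter relative to the submarine's heading,
    in degrees within [0, 360)."""
    dx = emitter_xy[0] - sub_xy[0]
    dy = emitter_xy[1] - sub_xy[1]
    absolute = math.degrees(math.atan2(dx, dy))  # 0 = north, 90 = east
    return (absolute - sub_heading_deg) % 360.0


def channel_for_bearing(bearing):
    """Index (0-7) of the speaker whose sector contains the bearing.

    Sector 0 is centred on the bow, so it spans -22.5 to +22.5 degrees.
    """
    return int(((bearing + SECTOR_DEG / 2) % 360.0) // SECTOR_DEG)


bearing = bearing_deg((0.0, 0.0), 0.0, (100.0, 0.0))  # emitter due east
channel = channel_for_bearing(bearing)
note = NOTE_FOR_VESSEL["ferry"]
```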

Even though the eight Midi channels allow us to

produce sounds in different directions, we also wanted

to change the sound volume to match the distance from

the submarine to the sound emitter. Although the Midi

standard includes a volume controller, it was of no use

to us since it changes the volume on all sounds for a

particular channel. That is, if several sounds were

mapped to the same speaker, they would all be given

the same volume, which was unacceptable. Instead, we

used the velocity parameter which is part of the Midi

noteOn and noteOff events, and mapped velocity to

volume in the software sampler used by Cubase. This

allowed us to set different volumes for different sounds

even though they may be placed in the same speaker.
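
The sketch below shows why velocity works per sound: it travels inside each note-on message, whereas the volume controller applies to a whole channel. The message layout is standard Midi; the linear fall-off and the 20 km maximum range in `velocity_for_distance` are assumptions made for illustration.

```python
def velocity_for_distance(distance_m, max_range_m=20000.0):
    """Map a distance to a Midi velocity in 1..127 (closer is louder).

    The linear fall-off and the default range are assumptions. The result
    never reaches 0, because a note-on with velocity 0 is conventionally
    treated as a note-off.
    """
    closeness = max(0.0, 1.0 - distance_m / max_range_m)
    return max(1, round(127 * closeness))


def note_on(channel, note, velocity):
    """Raw three-byte Midi note-on message for a 0-based channel."""
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])


def note_off(channel, note):
    """Raw three-byte Midi note-off message for a 0-based channel."""
    return bytes([0x80 | (channel & 0x0F), note & 0x7F, 0])


# Two sounds in the same speaker (channel 2) at different volumes.
near = note_on(2, 60, velocity_for_distance(0.0))
far = note_on(2, 62, velocity_for_distance(30000.0))
```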

Since most of the sound emitter objects move within

the virtual world, the sounds will change speakers as

the bearing relative to the submarine changes. This caused

us some problems at first since the transition of a sound

from one speaker to another was rather noticeable. It

was obvious that eight speakers do not provide enough

resolution to produce a convincing sound spatialisation.

Each speaker corresponds to a particular sector with its

origin in the submarine position in the virtual world.

When a sound emitter object passes from one sector to

another, the sound will be switched to another speaker.

To avoid the discrete “jump” of a sound from one

speaker to another, the sound was played in both

speakers if an emitter object was close to the border

between two such sectors. The volume used in each

speaker corresponds to how close the object is to the

border line. If the line is crossed, the sound will be

louder in the “new” speaker and it will fade and finally

be turned off in the “old” speaker as the object

continues its journey. The effect is a much smoother

transition of sounds between speakers.
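
One way to compute such a cross-fade is sketched below. The 10 degree blending zone on each side of a border and the linear gain ramp are assumptions; the installation's actual parameters are not given here. Sector 0 is taken to be centred on the bow.

```python
NUM_SPEAKERS = 8
SECTOR_DEG = 360.0 / NUM_SPEAKERS
BLEND_DEG = 10.0  # assumed width of the blending zone on each side of a border


def speaker_gains(bearing):
    """Return {speaker_index: gain} for a relative bearing in degrees.

    Away from sector borders one speaker plays at full gain. Within
    BLEND_DEG of a border the neighbouring speaker is mixed in, with
    equal gains exactly on the border. The gains always sum to 1.
    """
    shifted = (bearing + SECTOR_DEG / 2) % 360.0
    current = int(shifted // SECTOR_DEG)
    offset = shifted - current * SECTOR_DEG      # 0..45 inside the sector
    if offset < BLEND_DEG:                       # near the lower border
        neighbour = (current - 1) % NUM_SPEAKERS
        share = 0.5 * (1.0 - offset / BLEND_DEG)
    elif offset > SECTOR_DEG - BLEND_DEG:        # near the upper border
        neighbour = (current + 1) % NUM_SPEAKERS
        share = 0.5 * (1.0 - (SECTOR_DEG - offset) / BLEND_DEG)
    else:
        return {current: 1.0}
    return {current: 1.0 - share, neighbour: share}


centre = speaker_gains(0.0)      # middle of sector 0
border = speaker_gains(22.5)     # exactly between speakers 0 and 1
crossed = speaker_gains(25.0)    # just inside sector 1
```

Multiplying each gain by the distance-based volume gives the level to use for that sound in each of the two speakers.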

7.2.6. Video rendering

Whenever a visitor initiates an identification process to

determine the type of an unknown sound emitter, three

video sequences are started in the underwater scene to

illustrate the work of a sonar operator. We chose not

to use DIVE's built-in video support since it is mainly

focused on distribution of video images, which wasn’t

necessary in this case as the video should only be

presented in the underwater station. Also, since the

display of video in DIVE is based on 3D texturing, the

performance is rather limited, especially if the images

are large. The solution is therefore to use an external

video player which is started by DIVE whenever

needed. The identification process is represented by

three different video sequences, one showing a sonar

operator at work, another a sound frequency spectrum

and a third that shows an animation of the identified

vessel or animal (see figure 6). Whenever the process is

initiated, DIVE starts three different instances of the

video player, each responsible for displaying one of the

sequences. Knowing the length of each video sequence

and using a timer, DIVE will terminate the video player

processes causing the video windows to disappear. This

marks the end of the identification process and the

underwater process will signal back to the chart process

that the identification was successful, as described in

7.2.4.
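
The timer-based handling of external player processes can be sketched as follows. This is a Python illustration, not the installation's script code: `play_for` and `run_identification` are hypothetical names, and a sleeping Python child process stands in for the video player so that the example does not depend on any particular player being installed.

```python
import subprocess
import sys
import threading


def play_for(command, seconds):
    """Start an external player and terminate it after `seconds`."""
    process = subprocess.Popen(command)
    timer = threading.Timer(seconds, process.terminate)
    timer.start()
    return process


def run_identification(sequences, on_done):
    """Start one player per (command, length) pair and call `on_done`
    once every player process has ended."""
    players = [play_for(command, seconds) for command, seconds in sequences]

    def wait_for_players():
        for player in players:
            player.wait()
        on_done()

    waiter = threading.Thread(target=wait_for_players)
    waiter.start()
    return waiter


# Stand-in for a video player: a child process that would run for 30 s
# if it were not terminated by its timer.
FAKE_PLAYER = [sys.executable, "-c", "import time; time.sleep(30)"]
finished = []
waiter = run_identification(
    [(FAKE_PLAYER, 0.3), (FAKE_PLAYER, 0.2), (FAKE_PLAYER, 0.1)],
    lambda: finished.append("identified"),
)
waiter.join(timeout=10)
```

In the installation, the `on_done` step corresponds to the underwater script setting the emitter property to ‘identified’.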

7.2.7. Periscope

The custom-made periscope (see figures 1 and 3)

showing the ocean around the submarine is rigged with

two Leine & Linde 10-bit optical angle encoders for

measuring bearing and angle of elevation. Along with a

number of additional switches these encoders are

connected to a USB-based data acquisition system

called Minilab 1008, providing for a robust, low cost

way of getting the periscope state into the managing

DIVE plugin. The ocean graphics are displayed on an

off-the-shelf 12-inch LCD display located in the back of

the periscope. The algorithm for generating the wave

simulation is similar to [11]. A patch of waves is

generated and updated through the use of a Phillips

spectrum and inverse FFT. This patch is then tiled to

create the water surface within the frustum. This

algorithm is implemented in a DIVE plugin that uses a

number of hooks into the DIVE renderer for setup,

adjustments, and rendering of the ocean surface. This

plugin is also responsible for simulating curvature of

the ocean surface. DIVE uses Cartesian coordinates

and the curvature is simulated visually by adding an

offset to the position of surface vessels during

rendering based on the distance to the vessel and the

earth’s radius. A third plugin is responsible for

managing the graphics on the periscope HUD, e.g.,

current bearing, zoom, and elevation information.
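
Two of the calculations mentioned above are simple enough to sketch. The first converts a raw 10-bit encoder count to an angle, assuming that the 1024 counts span one full revolution. The second gives the drop of the sea surface below the observer's tangent plane, which is one plausible form for the curvature offset; the exact formula used in the plugin is not stated here, and the function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean radius of the earth
ENCODER_STEPS = 1 << 10       # 10-bit encoder: 1024 counts per revolution


def encoder_to_degrees(count):
    """Convert a raw 10-bit encoder count to an angle in [0, 360)."""
    return (count % ENCODER_STEPS) * 360.0 / ENCODER_STEPS


def curvature_drop(distance_m, radius_m=EARTH_RADIUS_M):
    """Drop of the sea surface below the observer's tangent plane at a
    given ground distance: R * (1 - cos(d / R)), roughly d^2 / (2R)."""
    return radius_m * (1.0 - math.cos(distance_m / radius_m))


quarter_turn = encoder_to_degrees(256)
resolution = encoder_to_degrees(1)
drop_at_10_km = curvature_drop(10_000.0)
```

With these assumptions one encoder step corresponds to about 0.35 degrees, and a vessel 10 km away is rendered just under 8 m lower than it would be on a flat sea.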

7.3. Maintenance

The fact that the installation was going to be on

display in museums for almost two years without any

possibility for us to take part in its daily handling made

it necessary to also focus on maintainability and

smooth operation. We decided early in the

implementation phase that the system would need to be

restarted each day as opposed to running continuously

for long periods of time. This relieved us from having

to consider nasty issues like power failures but also

meant that someone other than ourselves would have to

be able to start and stop the system without too much

trouble. Assuming that the museum staff were no

computer experts, we came up with a solution that

allows the whole system to be started by clicking on

three desktop shortcuts, and to be stopped by pressing

the Escape key on one of the PC keyboards. Not having

the computers on the Internet ruled out the possibility

of remote maintenance. The main reason for this design

choice was the fact that some of the museums visited

have rules against connecting publicly available PCs to

their network.

Even though the stability of DIVE has increased

over the years, we still had some doubts whether the

system would be able to run without problems week

after week. Even though the tests that we did prior to

the museum “release” indicated no major problems, we

assumed that problems could still occur and therefore

focused on logging. All three processes create log files

where the application scripts and plugins continuously

write log messages concerning various issues (user

interactions, the state of various plugins and DIVE

modules, etc.). The idea is that if something does indeed

go wrong, the log files will give us an understanding of

the problems and hopefully the history of events that

caused the problem to occur. Each time the installation

is started, log files with unique names are created. This

prevents new log files from overwriting old ones (in

case the museum staff restarts the system after a crash,

causing the “crash log file” to be lost). This also keeps

the size of the log files small which has proven to be

important since the museum staff can use a floppy disk

to retrieve and send us the latest files. Had we used one

log file that would have been appended with each run,

the size would eventually have been on the order of tens of

megabytes.
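
A minimal sketch of per-run log naming is given below, assuming a time stamp in the file name and a numeric suffix as tie-breaker; the naming scheme actually used in the installation is not described here, and `new_log_path` is a hypothetical helper.

```python
import os
import tempfile
import time


def new_log_path(directory, station):
    """Create an empty, uniquely named log file for this run and return
    its path. An existing log file is never reused or overwritten."""
    stamp = time.strftime("%Y%m%d-%H%M%S")
    attempt = 0
    while True:
        suffix = f"-{attempt}" if attempt else ""
        path = os.path.join(directory, f"{station}-{stamp}{suffix}.log")
        try:
            # Mode 'x' fails if the file already exists.
            with open(path, "x"):
                return path
        except FileExistsError:
            attempt += 1


log_dir = tempfile.mkdtemp()
first = new_log_path(log_dir, "chart")
second = new_log_path(log_dir, "chart")  # e.g. a restart after a crash
```

Because each run writes to its own file, the log of a crashed session survives the restart and stays small enough to copy individually.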

The maintenance issue is also one reason why we

chose to implement the application logic using scripts

and plugins. If any problems are discovered, we

can easily modify scripts and mail them to the museum

to replace the old ones. With sufficient guidance, the

museum staff can even make minor script changes

themselves.

8. Conclusion and Future Work

This paper...

A user evaluation of the installation is currently

being conducted. Informal results, gathered during a

series of demonstrations before the installation was put

together in the first museum have been very positive...

XXX.. In particular, the periscope....XXXX.

The naturalness of the periscope as an interface

within this scenario has led us to conducting

experiments in using a periscope as a 3D input and

output device in another application in order to

evaluate its usage as a more generic device. In this

application, which is intended to be used as an

attraction within large and geographically spread public

events such as trade fairs, the periscope is used in two

different ways. The application couples a 3D model of

the event together with networked web cameras that

can be remotely controlled and whose images can be

sent back to the computer driving the periscope. Firstly,

the periscope is used as an exploration device within

the 3D environment of the event. The handles are then

used for tilting and zooming. The web cameras are

represented within the 3D environment. Users can

select one of these cameras by aiming at its

representation and pressing a button. As a result, users

can take full control of the remote camera with the

periscope and receive the stream of images, which

allows them to see the event as if from that location.

We have already demonstrated this application twice

and have received very positive reactions.

9. References

[1] Ståhl O. and Wallberg A., “Using a Pond Metaphor for

Information Visualisation and Exploration”, In Churchill, E.,

Snowdon, D. and Frécon, E. (Eds), Inhabited Information

Spaces, Springer, pp. XXXX, January 2004.

[2] Carlsson C. and Hagsand O., “DIVE – A Multi-User

Virtual Reality System”, Proceedings of IEEE VRAIS’93,

Seattle, Washington, USA, pp.394-400, 1993.

[3] Frécon E. and Stenius M., “DIVE: A Scalable Network

Architecture for Distributed Virtual Environments”,

Distributed Systems Engineering Journal, pp. 91-100,

February 1998.

[4] Frécon E., Smith G., Steed A., Stenius M., and Ståhl O.,

“An Overview of the COVEN Platform”, Presence:

Teleoperators and Virtual Environments, Vol. 10, No. 1, pp.

109-127, February 2001.

[5] Frécon E., “DIVE: A generic tool for the deployment of

shared virtual environments”, Proceedings of IEEE

ConTel’03, Zagreb, Croatia, June 2003.

[6] Snowdon D., Greenhalgh C., and Benford S., “What You

See is Not What I See: Subjectivity in Virtual

Environments”, Proceedings of Framework for Immersive

Virtual Environments (FIVE'95), QMW University of London,

UK, pp. 53-69, December 1995.

[7] Smith G., “Cooperative Virtual Environments: lessons

from 2D multi user interfaces”, Proceedings of the

Conference on Computer Supported Collaborative Work'96,

Boston, MA, USA, pp. 390-398, November 1996.

[8] Floyd, S., Jacobson, V., Liu, C., McCanne, S., and

Zhang, L., “A Reliable Multicast Framework for

Lightweight Sessions and Application Level Framing”,

IEEE/ACM Transactions on Networking, Vol. 5, No. 6,

December 1997, pp. 784-803.

[9] Frécon E. and Smith G., “Semantic Behaviours in

Collaborative Virtual Environments”, Proceedings of Virtual

Environments'99 (EGVE'99), Vienna, Austria, pp. 95-104,

1999.

[10] Steed A., Frécon E., Avatare A., Pemberton D., and

Smith G., "The London Travel Demonstrator", Proceedings

of the ACM Symposium on Virtual Reality Software and

Technology, London, UK, pp. 50-57, December 1999.

[11] Tessendorf, J., “Simulating Ocean Water”, In

Simulating Nature: Realistic and Interactive Techniques.

SIGGRAPH 2001, Course Notes 47.

[12] eSCAPE Deliverable 4.1., “The Library Abstract

eSCAPE Demonstrator” (eds. Mariani, J. & Rodden, T.),

Esprit Long Term Research Project 25377, ISBN 1 86220

079 3. 1999

[13] Schwabe D., and Stenius M., “The Web Planetarium

and Other Applications in the Extended Virtual Environment

EVE”, Spring Conference on Computer Graphics,

Budmerice, May 3rd-6th 2000

[14] Wilde, D., Harris, E., Rogers, Y. and Randell, C., “The

Periscope: supporting a computer enhanced field trip for

children”, Personal and Ubiquitous Computing, Volume 7,

Issue 3-4, pp. 227-233, 2003

[15] Benford, S., Bowers, J., Chandler, P., Ciolfi, L., Fraser,

M., Greenhalgh, C., Hall, T., Hellström, S.O., Izadi, S.,

Rodden, T., Schnädelbach, H. and I. Taylor (2001).

"Unearthing virtual history: using diverse interfaces to reveal

hidden virtual worlds", in G.D. Abowd, B. Brumitt, S. Shafer

(Eds.): Ubicomp 2001: Ubiquitous Computing Third

International Conference Atlanta, Georgia, USA, September

30 - October 2, 2001. Lecture Notes in Computer Science

2201. Springer-Verlag.