2.5. How does the structure of a neural network affect its capabilities?



(translation by Łukasz Brodziak; lukasz.brodziak@gmail.com)

Let us now consider the relationship between the structure of a neural network and the tasks it is able to perform. As you already know, the network is built from the neurons described in the previous chapters. Its structure is created by connecting the outputs of neurons to the inputs of the following ones (according to a chosen scheme), which yields a system capable of parallel and fully concurrent processing of various pieces of information. For the reasons mentioned earlier, we usually choose a layered network, with the connections between layers made according to the "each to each" rule. Obviously, the specific topology of the network, meaning mainly the number of neurons in the individual layers, should be derived from the kind of task we want the network to perform. In theory the rule is quite simple: the more complicated the task, the more neurons the network needs to solve it, since a network with more neurons is simply more intelligent. In practice, however, it is not as unequivocal as it would seem.
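The "each to each" layered structure can be sketched in a few lines of Python. This is a minimal illustration, not code from the book: the function names, the sigmoid activation and the random initial weights drawn from [-1, 1] are assumptions made for the example. The list `layer_sizes` plays the role of the network's topology, that is, the number of neurons in each layer.

```python
import math
import random

# Hypothetical sketch: names, activation and initialization are illustrative assumptions.

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def build_layered_network(layer_sizes, seed=0):
    """Create weights for a layered network in which every neuron of one layer
    feeds every neuron of the next layer ("each to each" connections).
    layer_sizes such as [3, 4, 2] means 3 inputs, 4 hidden neurons, 2 outputs.
    Returns one (weights, biases) pair per layer of connections."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # weights[j][i] is the connection from input i to neuron j of this layer
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        biases = [rng.uniform(-1.0, 1.0) for _ in range(n_out)]
        layers.append((weights, biases))
    return layers

def forward(layers, inputs):
    """Propagate the signal layer by layer: each neuron forms a weighted sum of
    all outputs of the previous layer and passes it through the sigmoid."""
    signal = list(inputs)
    for weights, biases in layers:
        signal = [sigmoid(sum(w * s for w, s in zip(row, signal)) + b)
                  for row, b in zip(weights, biases)]
    return signal

def count_connections(layer_sizes):
    """Number of weighted connections (biases excluded) in a fully connected layered net."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))

net = build_layered_network([3, 4, 2])
print(forward(net, [0.5, -1.0, 2.0]))  # two output values, each between 0 and 1
print(count_connections([3, 4, 2]))    # 3*4 + 4*2 = 20
```

Changing the topology only means changing `layer_sizes`; for instance `[3, 10, 2]` gives 3·10 + 10·2 = 50 connections. What values those connection weights finally take is a matter of teaching, not of the structure itself.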

In vast literature considering neural network one can find numerous works which prove that,

as a matter of fact, the decisions regarding network's structure affect its behavior considerably

weaker than expected. This paradoxical statement derives from the fact that behavior of the network

is determined in fundamental way by the process of network teaching and not by the structure or

number of elements used in its construction. This means that the network which has decidedly

worse structure is able to solve tasks more efficiently (if it was well taught) than the network with

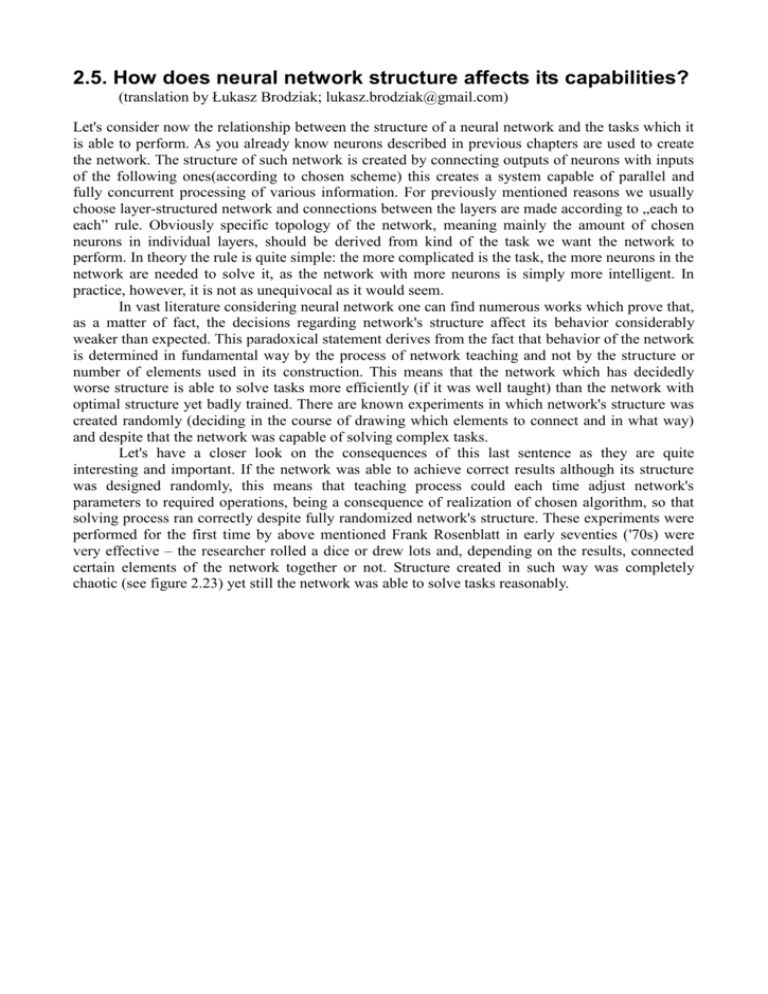

optimal structure yet badly trained. There are known experiments in which network's structure was

created randomly (deciding in the course of drawing which elements to connect and in what way)

and despite that the network was capable of solving complex tasks.

Let us take a closer look at the consequences of this last sentence, as they are quite interesting and important. If the network was able to achieve correct results although its structure was designed randomly, this means that the teaching process could, each time, adjust the network's parameters to the operations required to carry out the chosen algorithm, so that the solving process ran correctly despite the fully randomized structure. These experiments were first performed by the previously mentioned Frank Rosenblatt at the turn of the 1950s and 1960s, and they were very effective: the researcher rolled a die or drew lots and, depending on the result, connected certain elements of the network together or not. A structure created in this way was completely chaotic (see figure 2.23), yet the network was still able to solve tasks reasonably well.

Fig. 2.23. Connection structure of a network whose elements were connected to each other according to random rules. Surprisingly, after the teaching process such a network is capable of performing tasks purposefully and efficiently.
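The dice-rolling experiment can be imitated with a small program loosely modeled on Rosenblatt's perceptron: the connections from the inputs to a layer of hidden threshold units are drawn at random and never changed afterwards, and only the output neuron is taught, using the perceptron learning rule. Everything here is an illustrative assumption (the hypothetical function names, 50 hidden units, connection probability 0.5, the threshold values, the arbitrary seed and the XOR task), not a reconstruction of Rosenblatt's or Glushkov's actual machines.

```python
import random

# Hypothetical sketch: names, sizes, thresholds and seed are illustrative assumptions.

def random_structure(n_inputs, n_hidden, rng, p_connect=0.5):
    """'Roll the dice' for every possible input-to-hidden link: the link exists
    with probability p_connect and, if it does, gets a random sign (+1 or -1)."""
    return [[rng.choice((-1, 1)) if rng.random() < p_connect else 0
             for _ in range(n_inputs)]
            for _ in range(n_hidden)]

def hidden_response(structure, thresholds, x):
    """Outputs (0 or 1) of the fixed, never-trained threshold units behind the random wiring."""
    return [1 if sum(w * xi for w, xi in zip(row, x)) > t else 0
            for row, t in zip(structure, thresholds)]

def train_output(features, targets, epochs=200):
    """Perceptron rule applied only to the output neuron; targets are +1 or -1.
    Returns the weights, the bias and whether an error-free epoch was reached."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        errors = 0
        for h, y in zip(features, targets):
            pred = 1 if sum(wi * hi for wi, hi in zip(w, h)) + b > 0 else -1
            if pred != y:
                w = [wi + y * hi for wi, hi in zip(w, h)]
                b += y
                errors += 1
        if errors == 0:
            return w, b, True
    return w, b, False

def accuracy(w, b, features, targets):
    """Fraction of patterns the output neuron classifies correctly."""
    hits = sum((1 if sum(wi * hi for wi, hi in zip(w, h)) + b > 0 else -1) == y
               for h, y in zip(features, targets))
    return hits / len(targets)

rng = random.Random(7)                       # arbitrary seed: a different "roll of the dice"
patterns = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [-1, 1, 1, -1]                     # XOR coded as -1/+1
structure = random_structure(2, 50, rng)
thresholds = [rng.choice((-0.5, 0.5, 1.5)) for _ in structure]
features = [hidden_response(structure, thresholds, x) for x in patterns]
w, b, converged = train_output(features, targets)
print("error-free epoch reached:", converged)
print("accuracy:", accuracy(w, b, features, targets))
```

Whether a given random wiring lets the output neuron succeed depends on the draw. A larger hidden layer makes it more likely that some of the randomly wired units supply features from which the output neuron can learn the task.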

The results of these experiments, reported by Rosenblatt in his publications, were so astonishing that at first scientists did not believe such a thing was possible at all. However, the experiments were later repeated (among others, the Soviet academician Glushkov built a neural network specifically for this experiment and called it Alpha), and they proved that a network with random connections can learn to solve tasks correctly, although, of course, teaching such a network is harder and takes more time than teaching a network whose structure reasonably matches the task to be solved.

Interestingly, philosophers were also interested in the results reported by Rosenblatt; they claimed that these were proof of a theory proclaimed by Aristotle and later extended by Locke. This is the philosophical concept called tabula rasa: the idea that the mind is born as a blank page, which is filled in the process of learning and gathering experience. Rosenblatt proved that this concept is technically possible, at least in the form of a neural network. A separate issue is whether it also holds for the mind of a particular human being. Are, as Locke claimed, inborn abilities indeed nothing, and acquired knowledge everything?

We do not know this for certain, and it may well not be so. But we certainly know that neural networks can acquire all their knowledge during learning alone and do not need a structure created in advance and adjusted to the task. Of course, the network's structure has to be complex enough to enable the "crystallization" of the needed connections and structures. A network that is too small will never learn anything, as its "intellectual potential" does not allow it; yet the issue is not the structure itself but the number of elements. For example, nobody teaches a rat the theory of relativity, although it can be trained to find its way through a complicated labyrinth. Similarly, no one is born already "programmed" to be a genius surgeon, or even a bridge builder (this is decided by the choice of studies), although some people's intellect can handle only loading sand onto a truck with a shovel, and even then only under supervision. That is just the way the world is, and no wise words about equality will change it. Some individuals have enough intellectual resources and others do not, just as some people are slender and well built while others look as though they spend too much time working at a computer.

The situation with networks is similar: one cannot build a network with inborn abilities, yet it is very easy to create a cybernetic moron that will never learn anything because its abilities are too small. The network's structure can therefore be of any kind, as long as it is big enough. Soon we will learn that it also cannot be too large, since that is unfortunate as well, but we will discuss this in a while.
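A classic way to see that a network which is too small will never learn certain tasks is a single neuron (a one-element perceptron) trained on the logical AND and XOR functions. The sketch below is an illustration added here, not taken from the text; the function name and epoch counts are hypothetical. AND is linearly separable, so the perceptron learning rule is guaranteed to find correct weights, whereas no choice of weights lets a single threshold neuron reproduce XOR, so no amount of teaching helps.

```python
# Hypothetical sketch: function name and epoch counts are illustrative assumptions.

def train_single_neuron(samples, epochs=100):
    """Teach one threshold neuron (two inputs plus a bias) with the perceptron rule.
    samples: list of ((x1, x2), y) pairs with y in {-1, +1}.
    Returns the fraction of samples classified correctly after training."""
    w1 = w2 = b = 0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), y in samples:
            pred = 1 if w1 * x1 + w2 * x2 + b > 0 else -1
            if pred != y:
                # move the decision boundary toward the misclassified sample
                w1, w2, b = w1 + y * x1, w2 + y * x2, b + y
                errors += 1
        if errors == 0:
            break  # an error-free pass: the neuron has learned the task
    correct = sum((1 if w1 * x1 + w2 * x2 + b > 0 else -1) == y
                  for (x1, x2), y in samples)
    return correct / len(samples)

AND = [((0, 0), -1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]
XOR = [((0, 0), -1), ((0, 1), 1), ((1, 0), 1), ((1, 1), -1)]
print(train_single_neuron(AND))                # 1.0: the task fits a single neuron
print(train_single_neuron(XOR, epochs=10000))  # stays below 1.0 however long we train
```

Adding a hidden layer with as few as two neurons gives the network enough capacity to represent XOR, which matches the point made above: the limitation lies in the number of elements, not in the teaching.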