
advertisement

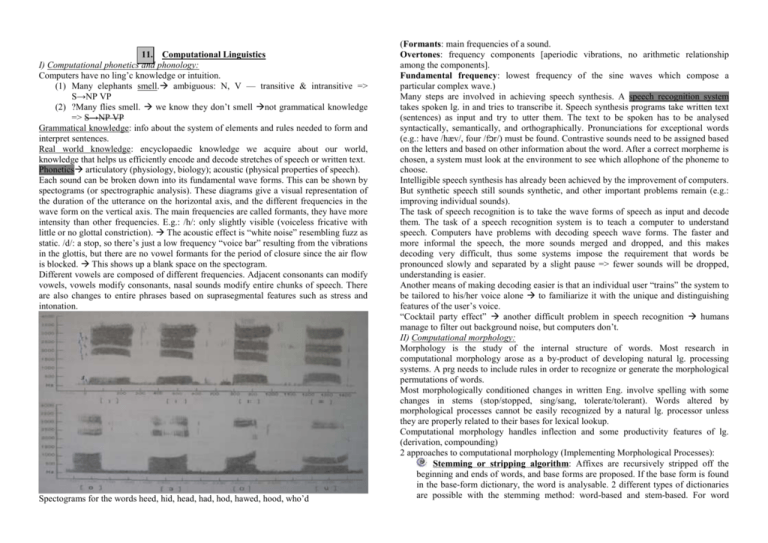

11. Computational Linguistics

I) Computational phonetics and phonology:

Computers have no linguistic knowledge or intuition.

(1) Many elephants smell. Ambiguous: N, V (the V can be transitive or intransitive) => S→NP VP
(2) ?Many flies smell. We know they don’t smell, but that is not grammatical knowledge => S→NP VP

Grammatical knowledge: info about the system of elements and rules needed to form and interpret sentences. Real-world knowledge: encyclopaedic knowledge we acquire about our world, knowledge that helps us efficiently encode and decode stretches of speech or written text.

Phonetics: articulatory (physiology, biology); acoustic (physical properties of speech). Each sound can be broken down into its fundamental wave forms. This can be shown by spectrograms (or spectrographic analysis). These diagrams give a visual representation of the duration of the utterance on the horizontal axis, and the different frequencies in the wave form on the vertical axis. The main frequencies are called formants; they have more intensity than other frequencies. E.g.: /h/: only slightly visible (voiceless fricative with little or no glottal constriction). The acoustic effect is “white noise” resembling fuzz or static. /d/: a stop, so there’s just a low-frequency “voice bar” resulting from the vibrations in the glottis, but there are no vowel formants for the period of closure since the air flow is blocked. This shows up as a blank space on the spectrogram.

Different vowels are composed of different frequencies. Adjacent consonants can modify vowels, vowels modify consonants, and nasal sounds modify entire chunks of speech. There are also changes to entire phrases based on suprasegmental features such as stress and intonation.

(Figure: spectrograms for the words heed, hid, head, had, hod, hawed, hood, who’d.)

(Formants: main frequencies of a sound. Overtones: frequency components [aperiodic vibrations, no arithmetic relationship among the components]. Fundamental frequency: lowest frequency of the sine waves which compose a particular complex wave.)

Many steps are involved in achieving speech synthesis. A speech recognition system takes spoken language as input and tries to transcribe it. Speech synthesis programs take written text (sentences) as input and try to utter it. The text to be spoken has to be analysed syntactically, semantically, and orthographically. Pronunciations for exceptional words (e.g.: have /hæv/, four /fɔr/) must be found. Contrastive sounds need to be assigned based on the letters and on other information about the word. After a correct morpheme is chosen, a system must look at the environment to see which allophone of the phoneme to choose. Intelligible speech synthesis has already been achieved thanks to improvements in computers. But synthetic speech still sounds synthetic, and other important problems remain (e.g.: improving individual sounds).

The task of speech recognition is to take the wave forms of speech as input and decode them. The aim of a speech recognition system is to teach a computer to understand speech. Computers have problems with decoding speech wave forms. The faster and more informal the speech, the more sounds are merged and dropped, and this makes decoding very difficult. Some systems therefore impose the requirement that words be pronounced slowly and separated by a slight pause => fewer sounds will be dropped, understanding is easier. Another means of making decoding easier is for an individual user to “train” the system so that it is tailored to his/her voice alone and becomes familiar with the unique and distinguishing features of that voice. The “cocktail party effect” is another difficult problem in speech recognition: humans manage to filter out background noise, but computers don’t.

II) Computational morphology:

Morphology is the study of the internal structure of words. Most research in computational morphology arose as a by-product of developing natural language processing systems.
A program needs to include rules in order to recognize or generate the morphological permutations of words. Most morphologically conditioned changes in written English involve spelling, with some changes in stems (stop/stopped, sing/sang, tolerate/tolerant). Words altered by morphological processes cannot be easily recognized by a natural language processor unless they are properly related to their bases for lexical lookup. Computational morphology handles inflection and some productivity features of language (derivation, compounding).

2 approaches to computational morphology (Implementing Morphological Processes):

Stemming or stripping algorithm: Affixes are recursively stripped off the beginnings and ends of words, and base forms are proposed. If the base form is found in the base-form dictionary, the word is analysable. 2 different types of dictionaries are possible with the stemming method: word-based and stem-based. For word generation, all input to morphological rules must be well-formed words, and all output will be well-formed words. For word analysis, all proposed stems will be words. (Machine-readable dictionary: a dictionary that appears in computer form => it has definitions, pronunciations, etymologies, and other info, not just spelling or synonyms.)

Problem with more complex forms. E.g.: conceptualisation. -ation attaches to infinitival Vs, conceptualise + -ation => there is a spelling change => without spelling rules, *conceptualiseation would be allowed by the system. Stem-based system => -cept might be listed in a stem dictionary, so the prefix con- attaches to -cept. A word-based morphology system can use a regular dictionary as its lexicon, but no such convenience exists for a stem-based system.

2-level approach: it requires a stem-based lexicon. Here the rules define correspondences between surface and lexical representations; they specify whether a correspondence is restricted to, required by, or prohibited by a particular environment.
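To make the stemming or stripping approach described above more concrete, here is a minimal sketch in Python. It assumes a tiny word-based dictionary, a hypothetical suffix list, and two simple spelling rules (restoring a dropped e, undoing consonant doubling). None of these come from a real system, and prefix stripping is left out for brevity.

```python
# Toy word-based dictionary of base forms (illustrative assumption).
BASE_FORMS = {"stop", "conceptualise", "concept", "try"}

# Hypothetical list of suffixes the stripper knows about.
SUFFIXES = ["ation", "ed", "s"]


def candidate_bases(stem):
    """Propose base forms for a stripped stem using simple spelling rules."""
    yield stem                      # no spelling change
    yield stem + "e"                # restore a dropped e: conceptualis -> conceptualise
    if len(stem) >= 2 and stem[-1] == stem[-2]:
        yield stem[:-1]             # undo consonant doubling: stopp -> stop


def analyse(word):
    """Return (base, suffixes) if the word is analysable, otherwise None."""
    if word in BASE_FORMS:
        return word, []
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix):
            stem = word[:-len(suffix)]
            for base in candidate_bases(stem):
                result = analyse(base)          # strip recursively
                if result is not None:
                    root, suffixes = result
                    return root, suffixes + [suffix]
    return None


print(analyse("stopped"))
print(analyse("conceptualisation"))
print(analyse("sang"))
```

In this sketch the spelling rules are what relate conceptualisation to conceptualise rather than to *conceptualiseation. An irregular form such as sang is not analysable by stripping alone and would need its own dictionary entry.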
Lexical and surface representations are compared using a special kind of rule system called a finite-state transducer. In the example try + -s → tries, the rules would decide whether the lexical y could correspond to the surface i based on info the rules have already seen. The rules that compare lexical and surface forms move from left to right, so when a successful correspondence is made, the rule moves along.

Compounding: one of the problems is that compounds are often not listed in a dictionary. (E.g.: bookworm => book N, sing., worm N, sing. BUT: what about “accordion”? It is not a compound analogous to bookworm!) A related problem is due to overenthusiastic rule application; e.g.: really: [re-[allyV]V], meaning “to ally oneself with someone again.” OR: [re-[sentV]V]: He didn’t get my letter, so I resent it. vs. Did he resent the nasty comment? The spelling of this word is truly ambiguous.

III) Computational syntax:

A syntactic analysis of a sentence permits a system to identify words that might go together for phrasing. This is particularly important for noun compounds in English. As many as 6 nouns can be strung together, and the pronunciation of the compound can change the listener’s interpretation of the meaning. E.g.: the N N N sequence Mississippi mud pie can be bracketed as [[Mississippi mud] pie] or as [Mississippi [mud pie]]. Syntactic analysis can also determine the part of speech for N/V pairs that are spelled the same but pronounced differently, such as the V record /rəkɔ́rd/ and the N record /rɛ́kərd/.

Research in computational syntax arose from 2 sources: 1.) a practical motivation resulting from attempts to build working systems to analyse and generate language; 2.) a desire on the part of theoretical linguists to use the computer as a tool to demonstrate that a particular theory is internally consistent.

Parsers and grammars: Given a system of rules, an analyser will be able to break up and organize a sentence into its substructures. A parser is the machine or engine that is responsible for applying the rules.
A parser can have different strategies for applying the rules. It tries to analyse each and every phrase or word in terms of grammatical rules. (To parse = to give a grammatical description of words, phrases and sentences.)

(a) I (pronoun, S) see you (pronoun, O) in the mirror. Simple sentence.
(b) I see | you have finished. Complex sentence (contains an embedded clause).
you: ambiguous

2 parsing approaches:

Determinism vs. nondeterminism: Any time a syntactic parser can produce more than 1 analysis of the input sentence, the problem of bracketing is raised. E.g.: more than 1 possible ending for I see you:
(a) ... in the mirror: S V O(you) A
(b) ... have finished: S V, S(you) V

The term “nondeterminism” may refer to going back, or backtracking, if the 1st analysis turns out to be impossible. It may also mean following multiple paths in parallel, meaning that both analyses are built at the same time but on separate channels. Non-deterministic parsing is thus a type of automatic parsing by a computer in which either the computer is permitted to backtrack when an analysis fails, or more than 1 analysis may be built in parallel.

The term “deterministic” means that a parser has to stick to the path it has chosen. Deterministic parsing is a type of automatic computer parsing of language in which no backtracking is permitted. Problem: as an analyser improves, it also becomes more and more cumbersome, because each time it is presented with more and more options.

The final goal of a parser is to find a label for each actual word in the sentence (though in some cases it stops at the phrase level or peeks inside words and identifies morphemes).

Top-down vs. bottom-up parsing: Phrase structure rules: S→NP VP; NP→(Det.) (A) N (PP); VP→V (NP) (PP); PP→P NP. => There are 2 ways to build an analysis of a sentence using these rules.
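As an illustration of how such phrase structure rules might be applied nondeterministically, here is a minimal Python sketch of a recognizer that starts from S and tries every way of using the optional constituents. The function names and the small lexicon (Curly, sat, on, the, grass) are assumptions made for this sketch. It only reports whether a sentence fits the rules and does not build a tree.

```python
# Toy lexicon mapping words to categories (illustrative assumption).
LEXICON = {"Curly": "N", "sat": "V", "on": "P", "the": "Det", "grass": "N"}


def word(cat, tags, i):
    """Yield i + 1 if the word at position i has category cat."""
    if i < len(tags) and tags[i] == cat:
        yield i + 1


def optional(parser, tags, i):
    """Follow both paths: skip the optional constituent, or consume it."""
    yield i
    yield from parser(tags, i)


def parse_np(tags, i):
    # NP -> (Det) (A) N (PP)
    for j in optional(lambda t, p: word("Det", t, p), tags, i):
        for k in optional(lambda t, p: word("A", t, p), tags, j):
            for m in word("N", tags, k):
                yield from optional(parse_pp, tags, m)


def parse_pp(tags, i):
    # PP -> P NP
    for j in word("P", tags, i):
        yield from parse_np(tags, j)


def parse_vp(tags, i):
    # VP -> V (NP) (PP)
    for j in word("V", tags, i):
        for k in optional(parse_np, tags, j):
            yield from optional(parse_pp, tags, k)


def parse_s(tags, i=0):
    # S -> NP VP
    for j in parse_np(tags, i):
        yield from parse_vp(tags, j)


def recognise(sentence):
    """True if some analysis of the sentence consumes every word."""
    tags = [LEXICON.get(w) for w in sentence.split()]
    return any(end == len(tags) for end in parse_s(tags))


print(recognise("Curly sat on the grass"))
print(recognise("Curly sat the"))
```

Each generator yields every position at which its constituent could end, so a failed path is simply abandoned and the next alternative is tried. This corresponds to the backtracking sense of nondeterminism. A deterministic parser would have to commit to one choice at each optional element.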
These rules also need lexical items (= terminal nodes, e.g.: lexical items or prefixes, suffixes, stems) for each category (= nonterminal parts of a structure, which are not lexical items, for example VP, NP, Det, N): N→Curly, V→sat, P→on, Det.→the, N→grass. Nonterminal: a category or phrase, such as N or NP. Terminal: a word; sometimes it is part of a word or several words.

In TD parsing, the analyser starts with the topmost node and finds a way to expand it. E.g.: start with S; the next rule expands S into NP VP; further expansion rules then expand NP (e.g. into N) and VP (e.g. into V NP). This process continues until no more expansions can apply, and until lexical items or words occur in the correct positions to match the input sentence. TD parsers work on the hypothesis that a proposed structure is correct until proven otherwise.

BU parsers take the terminals (words) of a sentence one by one, replace them with proposed nonterminal or category labels, and then reduce the strings of categories to permissible structures. E.g.: Curly sat on the grass is first labelled N V P Det N. The NP rule then combines Det + N to build up a structure, giving N V P NP, with the NP dominating the grass. This continues until the structure of the sentence is built.

Role of syntax and semantics: Some systems might claim that the selection of prepositions by verbs, often considered a syntactic property, is actually dependent on the semantic category of the V. For example, not all Vs can take the instrumental, as in He hit the dog with a stick. vs. ?He told his story with a stick. Some systems assume that a syntactic analysis precedes a semantic one, and that the semantics should be applied to the output of syntactic analyses. Some systems perform syntactic and semantic analyses hand in hand. Other systems ignore the syntax, viewing it as a second-step derivative of semantic analyses.

Generation: Language generation has been the underling of computational linguistics. A language generator must be able to make decisions about the content of the text, about issues of discourse structure, and about the cohesion of the sentences and paragraphs. For a language generator, only concepts and ideas are part of the decisions to be made in building a text. There are 2 approaches to generation as well: top-down and bottom-up. TD: First a very high-level structure of the output is determined, along with very abstract expressions of meaning and goal. Then lower levels are filled in progressively. Subsections are determined, and examples of the verbs with their subjects and objects, if any, are proposed. This is refined until the final stage, when lexical items are chosen from the dictionary. The BU process builds sentences from complex lexical items. First, words to achieve the goals are hypothesized. Then sentences are composed, and finally high-level paragraph and text coherence principles are applied.

The generation lexicon: the lexicon is just one link in the many difficult steps involved in generating natural and cohesive text from an underlying set of goals and plans. A system must be capable of deciding what the meaning to be conveyed is, and then it must be capable of picking very similar words to express that meaning. The lexicon or dictionary must supply items to instantiate the link between meaning and words.

IV) Computational lexicology and computational semantics:

Computer systems need to contain detailed info about words. The individual words in the lexicon are called lexical items. In order for a structure to be “filled out,” e.g.: NP→Det N, a program would need to have a match between a word marked Det in the lexicon and the slot in the tree requiring a Det. The same goes for any part of speech. (E.g.: [[the]Det [grass]N]) A computer program would need to know more than just the part of speech to analyse or generate a sentence correctly. Subcategorization and knowledge of thematic roles (agent, theme, goal, etc.) are also needed.
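As a rough illustration of what a lexical item might carry beyond its part of speech, the following Python sketch stores subcategorization frames alongside each entry. The class name, the frame labels (NP, INF) and the helper functions are hypothetical. The complement patterns for decide and persuade follow the judgments given in these notes.

```python
from dataclasses import dataclass, field


@dataclass
class LexicalItem:
    form: str
    pos: str
    # Each frame lists the complements that may follow a verb (hypothetical labels).
    frames: list = field(default_factory=list)


# Toy lexicon (illustrative assumption).
LEXICON = {
    "the": LexicalItem("the", "Det"),
    "grass": LexicalItem("grass", "N"),
    "decide": LexicalItem("decide", "V", frames=[("INF",)]),
    "persuade": LexicalItem("persuade", "V", frames=[("NP", "INF")]),
}


def fills_slot(word, category):
    """True if the word's part of speech matches the slot in the tree."""
    item = LEXICON.get(word)
    return item is not None and item.pos == category


def licenses(verb, complements):
    """True if the verb has a frame matching this sequence of complements."""
    item = LEXICON.get(verb)
    return item is not None and tuple(complements) in item.frames


print(fills_slot("the", "Det"))              # the can fill the Det slot of NP -> Det N
print(licenses("decide", ["INF"]))           # I decided to go.
print(licenses("decide", ["NP", "INF"]))     # *I decided him to go.
print(licenses("persuade", ["NP", "INF"]))   # I persuaded him to go.
```

A fuller entry would also need the other kinds of information a lexicon records, such as senses, semantic restrictions and pronunciation. They are omitted here to keep the sketch short.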
A syntactic analyser would also need to know what kinds of complements a V can take. E.g.: the V “decide” can take an infinitive (I decided to go.) but cannot take an NP object followed by the infinitive (*I decided him to go.). The V “persuade” is the opposite (*I persuaded to go. ↔ I persuaded him to go.). The lexicon needs to know about the kinds of structures in which words can appear, about the semantics of surrounding words, and about the style of the text.

Computational lexicon => Lexical item: 1. Part of speech, 2. Sense, 3. Subcategorization, 4. Semantic restrictions, 5. Pronunciation, 6. Context & style, 7. Etymology, 8. Usage, 9. Spelling (incl. abbreviations).

There are several approaches to building a computational lexicon:

i) To hand-build a lexicon, specifying only those features that a given system needs and using the lexical items that are most likely to occur. E.g.: His interest is high this month. Most words have many different senses, and sometimes the different senses have very different grammatical behaviour. A major problem in building computational dictionaries is extensibility. The problem is how to add new info and modify old info without starting over each time.

ii) To use 2 resources: the power of the computer and the data of machine-readable dictionaries (MRDs). An MRD is a conventional dictionary, but it is in machine-readable form (i.e., on the computer) rather than on the bookshelf. MRDs are useful in building large lexicons, because the computer can be used to examine and analyse automatically info that has been organized by lexicographers, the writers of dictionaries.

iii) Corpus analysis: the larger the corpus, or text, the more useful it is. A good corpus should include a wide variety of types of writing, such as newspapers, textbooks, popular writing, fiction, and technical material.

In order to understand what a word, sentence, or text means, a computer program has to know the semantics of words, sentences and texts.
Although the semantics of words is an important component of any language system, there is a still broader issue: the semantics of sentences and paragraphs. 2 approaches to semantics and language analysis: 1.) syntactically based systems, 2.) semantically based systems.

In the 1st approach, the sentence is assigned a syntactic analysis. A semantic representation is built after the syntactic analysis is performed:
Input sentence → Syntactic representation → Semantic representation
The problem arises in getting from one representation to the other. This is sometimes called a mapping problem.

In the semantically based system, a semantic representation is built first. Sometimes there is no syntactic analysis at all:
Input sentence → Semantic representation

WordNet is a large semantic network of English that can also be used as an electronic dictionary (more than 100,000 English words; the main organizing principle is synonymy; it uses glosses [text enclosed in parentheses, the meaning of the word], hypernyms, holonyms, meronyms, and frequent coordinate terms). English inflection is incorporated into WordNet; it uses a processor program called Morphy. FrameNet (Baker, Fillmore & Lowe, 1998) is a project based on Frame Semantics. Words are grouped according to conceptual structures (frames).

V) Practical applications of computational linguistics:

A speech recognition system takes spoken language as input and tries to transcribe it. Speech synthesis programs take written text (sentences) as input and try to utter it. Text retrieval systems store a huge amount of written information. When you are interested in a particular piece of information, they help you find relevant passages even if no word-by-word match is found.

VI) Indexing and concordancing, machine translation:

Concordances are alphabetical listings, in book form, of the words contained in a text, a group of related texts, or the works of an author or group of authors, with, under each word (the headword or lemma), citations of the passages in which it is to be found.
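The definition above can be illustrated with a minimal keyword-in-context sketch in Python. It assumes a very simple notion of "word" (runs of letters and apostrophes) and groups occurrences by lower-cased form only, which is a stand-in for true headwords or lemmas. The function name and the `width` parameter are choices made for this sketch.

```python
import re
from collections import defaultdict


def concordance(text, width=20):
    """Map each lower-cased word to its citations, in alphabetical order."""
    entries = defaultdict(list)
    for match in re.finditer(r"[A-Za-z']+", text):
        start, end = match.span()
        left = text[max(0, start - width):start]    # context before the word
        right = text[end:end + width]               # context after the word
        citation = f"{left}[{match.group()}]{right}"
        entries[match.group().lower()].append(citation)
    return dict(sorted(entries.items()))


sample = "Curly sat on the grass. The grass was wet."
for headword, citations in concordance(sample, width=8).items():
    for citation in citations:
        print(f"{headword:>6}  {citation}")
```

Each occurrence is located, listed with its context, and sorted under its headword. Counting occurrences is then just a matter of taking the length of each citation list.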
Biblical concordances: from 1230 AD. Computers have changed the way concordances are produced and used. Provided a text is stored in electronic form, a suitably programmed computer can perform all the tasks involved in compiling a concordance (locating all the occurrences of a particular word and listing the contexts) very rapidly and absolutely reliably. Concordancer programs generate concordance listings. Traditional, hand-made concordances employed so-called index cards (on which entries were written by volunteers) that were collated by the compiler and then formed the printer’s copy.

Machine translation (MT) has a strong tradition in computing. Large funds were available in the 50s and 60s for machine translation (military purposes, Cold War), but these efforts failed to achieve high-quality general translation. Main components of a typical MT system: dictionaries, the parser, SL-TL conversion, interlingual approach, modular design (the main analysis, transfer and synthesis components are separated), representations and linguistic models, representational tools (e.g.: charts, tree transducers, Q-systems), etc.

VII) Computational lexicography:

Lexicography is concerned with the meaning and use of words. Lexicographic research uses corpus-based techniques to study the ways that words are used. Advances in computer technology have given corpus-based lexicographic research several advantages: greater size of corpora, a more representative nature, and more complex, thorough and reliable analyses. The term “corpus” is almost synonymous with the term machine-readable corpus.

“Early corpus linguistics” is a term we use to describe linguistics before the advent of Chomsky. The study of child language acquisition (diary studies of LA research, roughly 1876-1926) was the first branch of linguistics to use corpus data; it was based on carefully composed parental diaries recording the child’s locutions.
Chomsky’s initial criticism: a corpus is by its very nature a collection of externalised utterances; it is performance data and is therefore a poor guide to modelling linguistic competence. It is a common belief that corpus linguistics was abandoned entirely in the 1950s and then adopted once more, almost as suddenly, in the early 1980s; this is simply untrue. Quirk (1960): planned and executed the construction of the Survey of English Usage (SEU), which he began in 1961. 1961: Francis & Kucera began working on the famous Brown corpus. Jan Svartvik: computerised the SEU and constructed the London-Lund corpus.

Processes in computational corpus linguistics: searching for a particular word, sequence of words, or part of speech in a text; retrieving all examples of the word, usually in context; finding and displaying relevant text; calculating the number of occurrences of the word; sorting the data in some way. (Concordancing the data: count, locate, search.)

Corpus: any collection of more than 1 text (Lat. corpus = “body”, i.e. a body of text); it is the basis for a form of empirical linguistics. Characteristics of a modern corpus: sampling and representativeness, finite size, machine-readable form, a standard reference.

Corpus annotation: assigning POS labels (POS tagging), i.e. assigning each lexical unit in the text a POS code (N, V, Adj., etc.), and syntactic annotation. Types: POS annotation, lemmatisation, parsing, semantics, discoursal and text-linguistic annotation, phonetic transcription, prosody, problem-oriented tagging. E.g. lemmatisation involves the reduction of the words in a corpus to their representative lexemes (a head word form that one would look up in the dictionary