DIP_hw3_Report

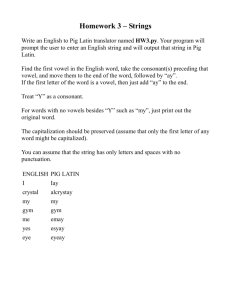

DIP Assignment #3 Report

Name: Han-Ping Cheng
Department/Grade: CSIE 3rd
ID: B93902027

How to run my program:

1. My program is written in C.
2. Compile the program with the README file (it is a makefile):
   command: make -f README
3. Executable files:
   The executable file of problem1: “prob1”
   Usage: ./prob1 <inputImageName>
   The executable files of problem2: “prob2_A” for part (A), “prob2_B” for part (B) and “prob2_C” for part (C)
   Usage: ./prob2_A <inputImageName>
          ./prob2_B <inputImageName>
          ./prob2_C <inputImageName>
   The executable files of problem3: “prob3_A” for part (A), “prob3_B” for part (B)
   Usage: ./prob3_A
          ./prob3_B <inputImageName>
4. The output filename of problem1 is “shrink.raw”, “thin.raw” or “skeleton.raw” for shrinking, thinning and skeletonizing respectively, depending on your choice.
5. The output filename of problem2 part (A) is “bin_T127.raw” (without uniform noise) or “bin_uniform_T127.raw” (with uniform noise), depending on your choice.
6. The output filename of problem2 part (B) is “dither2x2.raw”, “dither4x4.raw” or “dither8x8.raw” for the 2x2, 4x4 and 8x8 dithering matrix respectively, depending on your choice.
7. The output filename of problem2 part (C) is “err_diff.raw”.
8. The output of problem3 part (A) is printed to standard output.
9. The output of problem3 part (B) is “segment.raw”.
10. Delete object files:
    command: make clean -f README
11. Delete all output files, including object files and executable files:
    command: make cleanAll -f README

Following is the content of my README file:

    # DIP Homework Assignment #3
    # May 31, 2007
    # Name: Han-Ping Cheng
    # ID #: b93902027
    # email: b93902027@ntu.edu.tw
    # compiled on linux with gcc

    CC=gcc
    LN=gcc

    All: prob1 prob2 prob3

    prob1:
        @echo "Problem1"
        @echo "compiling and linking the code"
        $(CC) -c hw3_prob1.c
        $(LN) -o prob1 hw3_prob1.o
        @echo "usage: prob1 <InputImageName>"

    prob2:
        @echo "Problem2 PartA:"
        @echo "compiling and linking the code"
        $(CC) -c hw3_prob2_A.c
        $(LN) -o prob2_A hw3_prob2_A.o
        @echo "usage: prob2_A <InputImageName>"
        @echo "Problem2 PartB:"
        @echo "compiling and linking the code"
        $(CC) -c hw3_prob2_B.c
        $(LN) -o prob2_B hw3_prob2_B.o
        @echo "usage: prob2_B <InputImageName>"
        @echo "Problem2 PartC:"
        @echo "compiling and linking the code"
        $(CC) -c hw3_prob2_C.c
        $(LN) -o prob2_C hw3_prob2_C.o
        @echo "usage: prob2_C <InputImageName>"

    prob3:
        @echo "Problem3 PartA:"
        @echo "compiling and linking the code"
        $(CC) -c hw3_prob3_A.c
        $(LN) -o prob3_A hw3_prob3_A.o -lm
        @echo "usage: prob3_A"
        @echo "Problem3 PartB:"
        @echo "compiling and linking the code"
        $(CC) -c hw3_prob3_B.c
        $(LN) -o prob3_B hw3_prob3_B.o -lm
        @echo "usage: prob3_B <InputImageName>"

    clean:
        rm -fr hw3_prob1.o hw3_prob2_A.o hw3_prob2_B.o hw3_prob2_C.o hw3_prob3_A.o hw3_prob3_B.o

    cleanAll:
        rm -fr hw3_prob1.o hw3_prob2_A.o hw3_prob2_B.o hw3_prob2_C.o hw3_prob3_A.o hw3_prob3_B.o prob* shrink.raw thin.raw skeleton.raw bin_* dither* err_diff.raw segment.raw

About my program:

PROBLEM1: MORPHOLOGICAL PROCESSING

Input image: PCB_board256.raw

Approach:
Shrinking, thinning and skeletonizing all use the same procedure; only their pattern tables differ.

Step1. Convert each conditional and unconditional pattern to a number (its binary encoding).
Ex: [figure: example of encoding a pattern as a binary number]

Step2. For each pixel of the input image, treat F( j, k )=0 as 1 and F( j, k )=255 as 0, and use the pixel and its eight neighbors to compute a number in the same way as Step1. Then compare this number with the numerical conditional pattern table. If there is a pattern hit, mark the pixel (T( j, k )=1).

Step3. For each marked pixel (T( j, k )=1), use the mark table T to compute a number in the same way as Step2, and compare it with the unconditional pattern table. If there is no pattern hit, erase the pixel (F( j, k )=0 becomes 255).

Step4. Repeat Step2 and Step3 until no pixel is erased.

Result of shrinking: shrink.raw
Result of thinning: thin.raw
Result of skeletonizing: skeleton.raw

Discussion:
The results of thinning and skeletonizing preserve more of the connectivity, while the results of shrinking and thinning look neater. In addition, compared to the original binary image, shrinking loses more detail but concentrates on the more complicated portions of the original image; thinning looks like a minimal connected graph of the original image, which may reveal the structure of the image; skeletonizing reveals information about not only the structure but also the shape of the original image.

PROBLEM2: DIGITAL HALFTONING

Input image: pirate256.raw

Part (a) Binary image

Approach:
For plain thresholding at 127, pixel values greater than 127 are set to 255 and pixel values less than or equal to 127 are set to 0. For thresholding with uniform noise, a random number is first added to each pixel of the input image, and then the same threshold of 127 is applied.

Result of thresholding at 127: bin_T127.raw
Result of adding uniform noise and thresholding at 127: bin_uniform_T127.raw

Discussion:
The result of plain thresholding at 127 does not reproduce the shading of the original image, but the result of adding uniform noise to the original image first is also not very good. Adding noise may reduce some dependency, but the noise itself is clearly visible and interferes with viewing the image.
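The two cases of Part (a) can be sketched with a single routine, as below. This is only a minimal illustration and not the code of hw3_prob2_A.c: the function name threshold_image, the parameter noise_amp, and the choice of drawing the noise from the integer range [-noise_amp, +noise_amp] are all assumptions, since the report does not state the range of the random numbers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Binarize an 8-bit grayscale image stored as a flat array.
 * Values greater than `threshold` become 255, all others become 0.
 * If `noise_amp` > 0, an integer drawn roughly uniformly from
 * [-noise_amp, +noise_amp] is added to each pixel before the comparison.
 * The noise range is an assumption; the report does not specify it. */
void threshold_image(const unsigned char *in, unsigned char *out,
                     size_t n_pixels, int threshold, int noise_amp)
{
    assert(in != NULL && out != NULL && noise_amp >= 0);
    for (size_t i = 0; i < n_pixels; i++) {
        int v = in[i];
        if (noise_amp > 0) {
            /* rand() % m has a slight modulo bias; acceptable for a sketch. */
            v += rand() % (2 * noise_amp + 1) - noise_amp;
        }
        out[i] = (v > threshold) ? 255 : 0;
    }
}
```

Calling threshold_image(in, out, 256 * 256, 127, 0) would correspond to the plain threshold case, and a positive noise_amp to the uniform noise case; seeding with srand() beforehand makes the noisy result repeatable.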
Part (b) Dithering

Approach:
Step1. Use the initial index matrix to obtain the Bayer index matrix by the formula:
[equation: initial index matrix and Bayer index matrix recursion]

Step2. Transform the Bayer index matrix obtained above into a threshold matrix by using the following relationship:
[equation: index-to-threshold relationship]

Step3. Tile the threshold matrix periodically across the full image. For each F( j, k ) (pixel value of the input image), convert it to B( j, k ) (pixel value of the output binary image) by using the following formula:
[equation: B( j, k ) from F( j, k ) and the threshold matrix]

Result of using 2x2 dithering matrix: dither2x2.raw
Result of using 4x4 dithering matrix: dither4x4.raw
Result of using 8x8 dithering matrix: dither8x8.raw

Discussion:
The result of dithering looks better than adding uniform noise as in part (a), and dithering matrices of different sizes perform differently. The result of the 2x2 dithering matrix has less detail than those of 4x4 and 8x8. On the other hand, the results of the 4x4 and 8x8 dithering matrices are similar, but 8x8 looks more blurred than 4x4. Of these three results, I like the 4x4 result most, because it looks most like the original image.

Part (c) Error Diffusion

Approach:
Step1. Normalize F( j, k ) to lie in [0,1] ( F’( j, k ) = F( j, k ) / 255 ).

Step2. Set the threshold to 0.5 and do Floyd-Steinberg error diffusion with serpentine scanning (B( j, k ) is the pixel value of the output binary image):
If F’( j, k ) >= 0.5, B( j, k ) = 255
If F’( j, k ) < 0.5, B( j, k ) = 0
E( j, k ) = F’( j, k ) - B( j, k ) / 255 (the error is computed on the normalized scale, so B is rescaled to 0 or 1)
Example of left-to-right error diffusion:
[figure: error diffusion weights for left-to-right scanning]

Result of Floyd-Steinberg error diffusion with serpentine scanning: err_diff.raw

Discussion:
Among all the methods of problem2, I think Floyd-Steinberg error diffusion with serpentine scanning is the best one, because it not only reproduces the shading but also does not look blurred; in other words, it keeps the contrast of the original image and degrades it less.

PROBLEM3: TEXTURE ANALYSIS

Part (a) Texture Classification

Approach:
Step1. For each sample image F (size: 64x64), convolve it with the nine 3x3 Laws’ masks to obtain nine microstructure arrays M (size: 64x64).

Step2. Compute the variance of each of the nine microstructure arrays M to obtain 9 features T for each sample image.
Ex: [equation: variance of one microstructure array]

Step3. For each sample image, use the 9 features of Step2 as a feature vector, and apply the K-means algorithm to these feature vectors to obtain the grouping (12 sample images into 3 groups).

My K-means algorithm:
Step1. Initialization: compute the mean of the feature vectors of all sample images, and then split the mean into three vectors V1, V2, V3 which are very close to the mean.
Step2. Go through each feature vector and compute its distance to V1, V2 and V3 to see which one it is closest to. For example, if the feature vector of sample2 is closest to V1, sample2 is classified into group1. (The distance is computed as | feature vector - Vi |^2, 1 <= i <= 3.)
Step3. For each group (group1, group2, group3), recompute the mean of its members as the new value of V1, V2 and V3.
Step4. Repeat Step2 and Step3 until the difference between the sum of distances of the last iteration and that of the present iteration is less than a threshold.

Result:
group1: sample9.raw
group2: sample10.raw sample11.raw sample12.raw sample5.raw
group3: sample6.raw sample7.raw sample8.raw sample1.raw sample2.raw sample3.raw sample4.raw

Discussion:
In this part, each microstructure array obtained from a Laws’ mask can be turned into a feature of the image by using one of many statistical properties, such as energy, mean or variance. I chose to compute the variance of each microstructure array to discriminate between the different image patterns, because the variance measures the dispersion of the data, and I think the microstructure arrays of images of the same type usually have similar dispersion. According to the result, the classification is very successful, which also illustrates that the variance has strong discriminating power for each group of images.

Part (b) Texture Segmentation

Approach:
Step1. For the input image F (size: 256x256), convolve it with the nine 3x3 Laws’ masks to obtain nine microstructure arrays M (size: 256x256).

Step2. For each pixel of the input image, set the window size to 53 and compute the variance of each of the nine microstructure arrays M inside the window to obtain 9 features.
Ex: [equation: windowed variance of one microstructure array]

Step3. For each pixel of the input image, use the 9 features of Step2 as a feature vector, and apply the K-means algorithm to these feature vectors to obtain the grouping (256x256 pixels of the input image into 4 groups).

Step4. Set the gray value of pixels in group1 to 0, in group2 to 85, in group3 to 170 and in group4 to 255 to obtain the output image.

Result:
Input image: textureMosaic.raw
Output image: segment.raw

Discussion:
The method of this part is similar to part (a). In part (a), I compute the variance over all values of one microstructure array obtained from a Laws’ mask, which gives one feature representing the whole image. In this part, I set a window and compute the variance of one microstructure array inside the window as the feature of the pixel at its center. The reason is that in part (a) I have to group images of the same type, whereas here I have to group the pixels of the input image that have similar features in order to segment the image. Generally, a larger window contains more information and gives the variance more discriminating power, but it requires much more computation. In addition, too large a window is not good for pixels near the boundary between different textures, because more of those pixels will have windows that mix several types of texture. Ideally, when we compute a pixel's feature, the part of the microstructure array inside the window should all belong to the same texture. Hence, I determined the window size for this case by trial and error, and finally set it to 53, which I consider the best for segmentation.
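
The per-pixel feature of Part (b), Step1 and Step2, can be sketched as below. This is only an illustration and not the code of hw3_prob3_B.c. The names LAWS3, laws_filter and window_variance are hypothetical; the masks are built as unnormalized outer products of the 1-D vectors L3, E3 and S3; image borders are handled by replicating edge pixels; and the window is clipped at the image border. All of these are assumptions, because the report does not state them.

```c
#include <assert.h>
#include <stddef.h>

/* 1-D Laws vectors: L3 (level), E3 (edge), S3 (spot), unnormalized. */
static const int LAWS3[3][3] = { {1, 2, 1}, {-1, 0, 1}, {-1, 2, -1} };

static int clampi(int v, int lo, int hi)
{
    return v < lo ? lo : (v > hi ? hi : v);
}

/* Filter `img` (w x h, row-major) with the 3x3 mask formed by the outer
 * product of LAWS3[a] (vertical) and LAWS3[b] (horizontal).
 * Border pixels are replicated (an assumption). The mask is applied
 * without flipping; flipping only changes the sign of the response for
 * some masks, which leaves the variance unchanged. */
void laws_filter(const unsigned char *img, int w, int h,
                 int a, int b, double *out)
{
    assert(img != NULL && out != NULL && w > 0 && h > 0);
    assert(a >= 0 && a < 3 && b >= 0 && b < 3);
    for (int j = 0; j < h; j++) {
        for (int k = 0; k < w; k++) {
            double acc = 0.0;
            for (int dj = -1; dj <= 1; dj++) {
                for (int dk = -1; dk <= 1; dk++) {
                    int jj = clampi(j + dj, 0, h - 1);
                    int kk = clampi(k + dk, 0, w - 1);
                    acc += LAWS3[a][dj + 1] * LAWS3[b][dk + 1]
                           * img[(size_t)jj * w + kk];
                }
            }
            out[(size_t)j * w + k] = acc;
        }
    }
}

/* Variance of microstructure array `m` over the win x win window centered
 * at row j, column k. The window is clipped at the image border, and
 * `win` must be odd. Uses a two-pass mean / squared-deviation computation. */
double window_variance(const double *m, int w, int h, int j, int k, int win)
{
    assert(m != NULL && win > 0 && win % 2 == 1);
    int r = win / 2;
    int j0 = clampi(j - r, 0, h - 1), j1 = clampi(j + r, 0, h - 1);
    int k0 = clampi(k - r, 0, w - 1), k1 = clampi(k + r, 0, w - 1);
    double n = (double)(j1 - j0 + 1) * (double)(k1 - k0 + 1);
    double mean = 0.0, var = 0.0;
    for (int jj = j0; jj <= j1; jj++)
        for (int kk = k0; kk <= k1; kk++)
            mean += m[(size_t)jj * w + kk];
    mean /= n;
    for (int jj = j0; jj <= j1; jj++) {
        for (int kk = k0; kk <= k1; kk++) {
            double d = m[(size_t)jj * w + kk] - mean;
            var += d * d;
        }
    }
    return var / n;
}
```

For a 256x256 input, calling laws_filter for all nine (a, b) pairs and then window_variance(..., 53) at every pixel would give the 9-element feature vector that is passed to K-means. Evaluated directly like this, each pixel costs 53x53 operations per mask, which matches the remark above that larger windows require much more computation.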