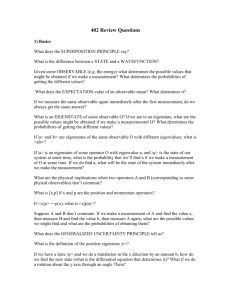

NOTES 4

advertisement

NOTES 5

Approximation Techniques

Most problems in quantum chemistry cannot be solved exactly; that is, there is no analytical solution, so some sort of approximation is necessary.

There are four main approaches:

1) Use classical mechanics as a guide to the quantum mechanical solution, exploiting the small magnitude of Planck's constant. This is the Semiclassical Approximation.

2) Guess the form of the wavefunction of the system and use the variation principle to find the best values of the adjustable parameters in the wavefunction. The Variation Principle states that the average energy calculated from a wavefunction that is not the exact ground-state solution of Schrödinger's equation is always higher than the true ground-state energy. Thus the parameter values that minimize the energy give the best approximate wavefunction of the chosen form.

3) When the exact solutions are known for a model system that resembles the true system, the difference between the Hamiltonians of the two systems can be used as a guide to the best approximation to the true solution. This is the main tactic of Perturbation Theory. Perturbation Theory is particularly useful for describing the response of atoms and molecules to electric and magnetic fields. If the fields change with time, Time-Dependent Perturbation Theory is necessary.

4) Self-Consistent Field Theory is an iterative method, based on the Variation Principle, for solving Schrödinger's equation for systems of many particles.

1. Semiclassical Approximation

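How might classical mechanics suggest the form of a wavefunction and the corresponding energy?

Note that the one-dimensional Schrödinger equation, $-(\hbar^2/2m)\,d^2\psi/dx^2 + V\psi = E\psi$, can be written:

$$\hbar^2\frac{d^2\psi}{dx^2} + p^2\psi = 0 \qquad \text{(A)}$$

where $p = \{2m(E-V)\}^{1/2}$.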

This is the classical expression for the linear momentum of a particle of mass m.

If the potential energy is uniform, the solutions of equation (A) are $e^{\pm ipx/\hbar}$; these serve as a first approximation to $\psi$ when the potential varies.

The WKB approximation takes the solution to be of the form

$$\psi = c_+\psi_+ + c_-\psi_-$$

If the potential were uniform,

$$\psi_\pm = e^{\pm ipx/\hbar}$$

But if the potential varies slowly, replace the product $px$ by a function $S_\pm(x)$, so that

$$\psi_\pm = e^{\pm iS_\pm/\hbar}$$

The WKB method then seeks an expression for $S_\pm$.

Putting this solution into equation (A) gives

$$-\left(\frac{dS_\pm}{dx}\right)^2 \pm i\hbar\frac{d^2S_\pm}{dx^2} + p^2 = 0 \qquad \text{(B)}$$

No approximations have been made so far. The WKB approximation finds $S_\pm$ by expanding it in powers of $\hbar$:

$$S_\pm = S_\pm^{(0)} + \hbar S_\pm^{(1)} + \hbar^2 S_\pm^{(2)} + \cdots$$

The transition from quantum mechanics to classical mechanics can be thought of as setting $\hbar$ to zero, so retaining the low-order terms in $\hbar$ bridges classical and quantum mechanics.

Substituting the expansion in powers of $\hbar$ into (B) and collecting terms in the same power of $\hbar$ gives

$$\left\{-\left(\frac{dS_\pm^{(0)}}{dx}\right)^2 + p^2\right\} + \hbar\left\{-2\frac{dS_\pm^{(0)}}{dx}\frac{dS_\pm^{(1)}}{dx} \pm i\frac{d^2S_\pm^{(0)}}{dx^2}\right\} + \cdots = 0$$

The coefficient of each power of ħ is set to zero.

1st Order WKB Approximation

Keeping only the terms up to first order in $\hbar$:

$\hbar^0$ term: $-(dS_\pm^{(0)}/dx)^2 + p^2 = 0$, which implies

$$S_\pm^{(0)}(x) = S_\pm^{(0)}(0) + \int_0^x p\,dx' \qquad \text{(C)}$$

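$\hbar^1$ term: $-2(dS_\pm^{(0)}/dx)(dS_\pm^{(1)}/dx) \pm i\,d^2S_\pm^{(0)}/dx^2 = 0$. Using $dS_\pm^{(0)}/dx = p$ from (C), this implies

$$\frac{dS_\pm^{(1)}}{dx} = \pm\frac{i}{2p}\frac{dp}{dx}, \qquad S_\pm^{(1)} = \pm\frac{i}{2}\ln p + \text{constant}$$

If the constants of integration are absorbed into the coefficients $c_\pm$, the first-order WKB wavefunction becomes

$$\psi = \frac{1}{p^{1/2}}\left\{c_+e^{(i/\hbar)\int_0^x p\,dx'} + c_-e^{-(i/\hbar)\int_0^x p\,dx'}\right\}$$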

The probability density given by this expression is proportional to $1/p$ and is thus inversely proportional to the speed of the particle. This is consistent with the classical picture of particle motion: the faster a particle travels, the less time it spends in a given region.

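In classically allowed regions (where $E > V$), $p$ is real and the solution may in some cases be written as

$$\psi = \frac{C}{p^{1/2}}\sin\left(\frac{1}{\hbar}\int_0^x p\,dx' + \delta\right)$$

The constants $C$ and $\delta$ are related to $c_+$ and $c_-$ through Euler's relation, $e^{\pm ix} = \cos x \pm i\sin x$:

$$C\cos\delta = i(c_+ - c_-), \qquad C\sin\delta = c_+ + c_-$$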

In classically forbidden regions (E<V), p is imaginary, and the solution is a linear

combination of exponentially decaying and increasing functions.

The approximation is also not applicable at the classical turning points, because $p = 0$ there and the expression diverges. Nor is it applicable when the potential energy varies very sharply.

Analysis shows that the semiclassical approximation is valid where

$$\left|\frac{dV}{dx}\right| \ll \frac{\{2m|E-V|\}^{3/2}}{m\hbar}$$
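The validity criterion $|dV/dx| \ll \{2m|E-V|\}^{3/2}/(m\hbar)$ can be checked numerically as the dimensionless ratio $m\hbar|dV/dx|/p^3$, which must be much less than 1. The sketch below assumes a harmonic oscillator in units $m = \hbar = \omega = 1$ at the energy of the $n = 10$ level; the function name `wkb_validity_ratio` is purely illustrative.

```python
import math


def wkb_validity_ratio(x, E, V, dVdx, m=1.0, hbar=1.0):
    """Return m*hbar*|dV/dx| / p**3 at x, with p = {2m|E - V|}^(1/2).

    The semiclassical approximation requires this ratio to be << 1.
    """
    p = math.sqrt(2.0 * m * abs(E - V(x)))
    if p == 0.0:
        return math.inf  # exactly at a turning point the criterion fails
    return m * hbar * abs(dVdx(x)) / p**3


def V(x):
    return 0.5 * x * x  # harmonic oscillator, m = hbar = omega = 1


def dVdx(x):
    return x


E = 10.5  # n = 10 level; classical turning points at x = +/- sqrt(21)
for x in (0.0, 2.0, 4.5):
    print(f"x = {x:3.1f}  ratio = {wkb_validity_ratio(x, E, V, dVdx):.3g}")
```

The ratio is small near the centre of the well and exceeds 1 close to the turning points, which is exactly where a separate treatment is needed to connect the WKB solutions.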

To link the wavefunctions across a turning point, where the WKB expressions are not valid, assume that close to the turning point $x_{tp}$ the potential energy can be written

$$V \approx E + (x - x_{tp})\left(\frac{dV}{dx}\right)_{x_{tp}} = E + (x - x_{tp})F$$

For this type of potential energy, Schrödinger's equation is

$$\hbar^2\frac{d^2\psi}{dx^2} - 2m(x - x_{tp})F\psi = 0$$

The solutions of this equation are Airy functions. To cross a turning point, one matches the oscillatory WKB solution on one side of $x_{tp}$ to an Airy function, extends the Airy function to the other side, and matches it there to the WKB solution on that side (the exponentially decaying one if that side is classically forbidden). This must be done at every turning point.

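It turns out that for a confining potential with turning points $x_1$ and $x_2$ the wavefunction is well behaved only if

$$\int_{x_1}^{x_2} p\,dx = \left(n + \tfrac{1}{2}\right)\pi\hbar, \qquad n = 0, 1, 2, \ldots$$

Because $p$ depends on $E$, this equation provides a semiclassical formula for the energy levels associated with the stationary states $n$ of the particle. It is known as the Bohr-Sommerfeld quantization condition.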
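The quantization condition $\int_{x_1}^{x_2} p\,dx = (n + \tfrac{1}{2})\pi\hbar$ can be solved numerically for $E$. The sketch below is a minimal illustration under stated assumptions: a single well with its minimum at `x0`, turning points located by bisection, and the phase integral evaluated by the trapezoid rule after a cosine substitution that smooths the square-root behaviour of $p$ at the turning points. All function names are hypothetical. For the harmonic oscillator the condition reproduces $E_n = (n + \tfrac{1}{2})\hbar\omega$ exactly, which gives a convenient check.

```python
import math


def bisect_root(f, a, b, tol=1e-12, max_iter=200):
    """Root of f on [a, b], assuming f(a) and f(b) have opposite signs."""
    fa = f(a)
    for _ in range(max_iter):
        mid = 0.5 * (a + b)
        fm = f(mid)
        if (fm < 0.0) == (fa < 0.0):
            a, fa = mid, fm  # root lies in [mid, b]
        else:
            b = mid  # root lies in [a, mid]
        if abs(b - a) < tol:
            break
    return 0.5 * (a + b)


def phase_integral(V, E, m, x1, x2, n=2000):
    """Integral of p dx from x1 to x2 by the trapezoid rule.

    Substituting x = x1 + (x2 - x1)(1 - cos s)/2 smooths the square-root
    behaviour of p near the turning points.
    """
    half = 0.5 * (x2 - x1)
    total = 0.0
    for k in range(n + 1):
        s = math.pi * k / n
        x = x1 + half * (1.0 - math.cos(s))
        p = math.sqrt(max(2.0 * m * (E - V(x)), 0.0))
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * p * half * math.sin(s)
    return total * math.pi / n


def bohr_sommerfeld_energy(V, n, x0, left, right, e_max, m=1.0, hbar=1.0):
    """Energy satisfying integral p dx = (n + 1/2) pi hbar.

    Assumes a single well with its minimum at x0 and V(left), V(right) > e_max.
    """
    target = (n + 0.5) * math.pi * hbar

    def mismatch(E):
        x1 = bisect_root(lambda x: V(x) - E, left, x0)
        x2 = bisect_root(lambda x: V(x) - E, x0, right)
        return phase_integral(V, E, m, x1, x2) - target

    return bisect_root(mismatch, V(x0) + 1e-12, e_max)


def V(x):
    return 0.5 * x * x  # harmonic oscillator, m = hbar = omega = 1


for n in range(4):
    E = bohr_sommerfeld_energy(V, n, x0=0.0, left=-10.0, right=10.0, e_max=10.0)
    print(n, E)
```

Bisection is used for both the turning points and the energy because the phase integral increases monotonically with $E$; any other bracketing root finder would do.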

As you might expect, the WKB approximation is useful in scattering problems.

2. Time Independent Perturbation Theory

In perturbation theory, we suppose that the Hamiltonian for the problem we are solving can be expressed as

$$H = H^{(0)} + \lambda H^{(1)} + \lambda^2H^{(2)} + \cdots$$

where $H^{(0)}$ is a Hamiltonian that has an exact solution with known eigenfunctions and eigenvalues:

$$H^{(0)}\psi_m^{(0)} = E_m^{(0)}\psi_m^{(0)}$$

$H^{(1)}$ is the perturbation, a small change to the exactly solvable Hamiltonian $H^{(0)}$.

Suppose that the true energy is the sum

$$E = E^{(0)} + \lambda E^{(1)} + \lambda^2E^{(2)} + \cdots$$

$E^{(0)}$ is the eigenvalue of $H^{(0)}$ in $H^{(0)}\psi^{(0)} = E^{(0)}\psi^{(0)}$, the exact analytical solution. $E^{(1)}$ is the first-order correction, $E^{(2)}$ is the second-order correction, and so on.

The true wavefunction can also be written as

$$\psi = \psi^{(0)} + \lambda\psi^{(1)} + \lambda^2\psi^{(2)} + \cdots$$

where $\psi^{(0)}$ is the eigenfunction shown above, $\psi^{(1)}$ is the first-order correction, and so on. We need to solve $H\psi = E\psi$.

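Putting the above expansions into this equation and collecting terms with the same power of $\lambda$ gives a series of equations. Because $\lambda$ is an arbitrary parameter, the coefficient of each power of $\lambda$ must equal zero separately, giving (for the state of interest, labelled 0):

$$\lambda^0:\quad H^{(0)}\psi_0^{(0)} = E_0^{(0)}\psi_0^{(0)}$$

$$\lambda^1:\quad \left(H^{(0)} - E_0^{(0)}\right)\psi_0^{(1)} = \left(E_0^{(1)} - H^{(1)}\right)\psi_0^{(0)}$$

$$\lambda^2:\quad \left(H^{(0)} - E_0^{(0)}\right)\psi_0^{(2)} = \left(E_0^{(2)} - H^{(2)}\right)\psi_0^{(0)} + \left(E_0^{(1)} - H^{(1)}\right)\psi_0^{(1)}$$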

Often only the first-order correction to the wavefunction is needed; it is sufficient to give the second-order (and, in fact, the third-order) correction to the energy.

The first-order correction to the wavefunction is written as a linear combination of the unperturbed wavefunctions of the system, because they form a complete basis set:

$$\psi_0^{(1)} = \sum_n c_n\psi_n^{(0)}$$

The sum is over all the states of the model (unperturbed) system.

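Substituting this into the second equation above (the coefficient of $\lambda$) and multiplying from the left by $\langle 0|$, the left-hand side vanishes, because $\langle 0|H^{(0)} - E_0^{(0)}|n\rangle = (E_n^{(0)} - E_0^{(0)})\delta_{0n} = 0$ for every $n$. This gives

$$E_0^{(1)} = \langle 0|H^{(1)}|0\rangle = H_{00}^{(1)}$$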

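The first-order correction to the wavefunction is found by multiplying the same equation from the left by $\langle k|$ with $k \neq 0$:

$$c_k\left(E_k^{(0)} - E_0^{(0)}\right) = -H_{k0}^{(1)}, \qquad c_k = \frac{H_{k0}^{(1)}}{E_0^{(0)} - E_k^{(0)}}$$

So

$$\psi_0 \approx \psi_0^{(0)} + \sum_{k\neq 0}\frac{H_{k0}^{(1)}}{E_0^{(0)} - E_k^{(0)}}\psi_k^{(0)}$$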

Here the ideal model is distorted by mixing into it the other states of the system. This mixing is often expressed by saying that the perturbation induces virtual transitions to these other states of the model system; in fact, the distorted state is simply being simulated as a linear superposition of the unperturbed states of the system.

For a particular state k:

a) it makes no contribution to the superposition if $H_{k0}^{(1)} = 0$;

b) for a given magnitude of the matrix element, it makes a larger contribution the smaller the energy difference $|E_0^{(0)} - E_k^{(0)}|$.
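The expressions $E_0^{(1)} = H_{00}^{(1)}$ and $c_k = H_{k0}^{(1)}/(E_0^{(0)} - E_k^{(0)})$ translate directly into code when $H^{(0)}$ is diagonal in the chosen basis. This is a minimal sketch with hypothetical matrix elements: states 1 and 2 have the same coupling to state 0 but different energy gaps, and state 3 has no coupling at all, illustrating points a) and b).

```python
def first_order_corrections(energies, H1, state=0):
    """First-order energy and wavefunction coefficients for a non-degenerate level.

    energies: unperturbed energies E_n^(0) (H^(0) is diagonal in this basis)
    H1: matrix elements H1[k][n] = <k|H^(1)|n>
    Returns (E^(1), [c_k]) with c_state = 0 (psi^(1) orthogonal to psi^(0)).
    """
    e0 = energies[state]
    e1 = H1[state][state]
    coeffs = [0.0 if k == state else H1[k][state] / (e0 - ek)
              for k, ek in enumerate(energies)]
    return e1, coeffs


energies = [0.0, 1.0, 5.0, 2.0]
H1 = [[0.10, 0.05, 0.05, 0.00],
      [0.05, 0.20, 0.00, 0.00],
      [0.05, 0.00, 0.30, 0.00],
      [0.00, 0.00, 0.00, 0.40]]
e1, c = first_order_corrections(energies, H1)
print(e1, c)
```

State 1 (gap 1) mixes in five times more strongly than state 2 (gap 5), and state 3 does not mix at all.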

Second Order Correction to the Energy

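Use the same technique to extract the second-order correction to the energy. Write the second-order correction to the wavefunction as the linear combination

$$\psi_0^{(2)} = \sum_n d_n\psi_n^{(0)}$$

Substitute this into the third equation above (the coefficient of $\lambda^2$) and multiply from the left by the bra $\langle 0|$. The left-hand side is zero, and if $\psi_0^{(1)}$ is chosen orthogonal to $\psi_0^{(0)}$ (so that $c_0 = 0$),

$$E_0^{(2)} = H_{00}^{(2)} + \sum_n c_nH_{0n}^{(1)}$$

Plugging in the coefficients $c_n$ gives

$$E_0^{(2)} = H_{00}^{(2)} + \sum_{n\neq 0}\frac{H_{0n}^{(1)}H_{n0}^{(1)}}{E_0^{(0)} - E_n^{(0)}} = H_{00}^{(2)} + \sum_{n\neq 0}\frac{|H_{n0}^{(1)}|^2}{E_0^{(0)} - E_n^{(0)}}$$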
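For a two-level model, the second-order formula $E_0^{(2)} = H_{00}^{(2)} + \sum_{n\neq 0}|H_{n0}^{(1)}|^2/(E_0^{(0)} - E_n^{(0)})$ can be compared with the exact lowest eigenvalue of the $2\times 2$ Hamiltonian matrix. The sketch assumes $H^{(2)} = 0$ and a real off-diagonal coupling `v` with hypothetical values; the remaining difference should be of order $v^4$.

```python
import math


def second_order_energy(energies, H1, state=0, H2_diag=0.0):
    """E^(2) = H2_00 + sum over n != state of |H1_n0|^2 / (E_0^(0) - E_n^(0))."""
    e0 = energies[state]
    total = H2_diag
    for n, en in enumerate(energies):
        if n != state:
            total += abs(H1[n][state]) ** 2 / (e0 - en)
    return total


def exact_lowest_2x2(a, b, d):
    """Lowest eigenvalue of the real symmetric matrix [[a, b], [b, d]]."""
    return 0.5 * (a + d) - math.sqrt((0.5 * (a - d)) ** 2 + b * b)


v = 0.05  # hypothetical coupling; H^(2) is taken to be zero
energies = [0.0, 1.0]
H1 = [[0.0, v], [v, 0.0]]
pt_estimate = energies[0] + H1[0][0] + second_order_energy(energies, H1)
exact = exact_lowest_2x2(energies[0] + H1[0][0], v, energies[1] + H1[1][1])
print(pt_estimate, exact)
```

Because every denominator $E_0^{(0)} - E_n^{(0)}$ is negative for a ground state, the second-order correction lowers its energy.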

Example

Comments on Perturbation

Role of Symmetry

Degenerate States

3. Variation Theory

The method is based on the Variation Theorem, which states that the energy calculated from a "trial wavefunction" is never less than the true ground-state energy, and equals it only when the trial function is the exact ground-state solution of $H\psi = E\psi$.

Consequently, if the trial function has adjustable parameters, one can optimize them to make its energy as low as possible; the result is the best wavefunction of that form. The quantity minimized is the average (expectation) value of the energy calculated from the trial wavefunction.

1st Step

Guess the form of the trial wavefunction, $\psi_t$.

2nd Step

Plug it into the expression for the average energy, also called the RAYLEIGH RATIO:

$$\langle E\rangle = \frac{\int\psi_t^*H\psi_t\,d\tau}{\int\psi_t^*\psi_t\,d\tau}$$

Typically the trial function contains one or more parameters that are varied until the Rayleigh ratio is minimized.
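As an illustration of the two steps (a sketch, not an example taken from these notes), consider the hydrogen atom in atomic units with the Gaussian trial function $\psi_t = e^{-\alpha r^2}$. Its Rayleigh ratio has the standard closed form $\langle E\rangle(\alpha) = 3\alpha/2 - 2(2\alpha/\pi)^{1/2}$ hartree, which the code minimizes by golden-section search, a generic one-dimensional minimizer chosen here for simplicity. The analytic optimum is $\alpha = 8/(9\pi)$ with $\langle E\rangle = -4/(3\pi) \approx -0.424$ hartree, above the exact $-0.5$ hartree, as the variation theorem requires.

```python
import math


def rayleigh_ratio(alpha):
    """<E> in hartree for hydrogen with the trial function exp(-alpha r^2).

    Closed form in atomic units: <T> = 3*alpha/2, <V> = -2*sqrt(2*alpha/pi).
    """
    return 1.5 * alpha - 2.0 * math.sqrt(2.0 * alpha / math.pi)


def golden_section_min(f, a, b, tol=1e-10):
    """Minimize a unimodal function f on [a, b] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc < fd:  # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:  # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    x = 0.5 * (a + b)
    return x, f(x)


alpha_best, e_best = golden_section_min(rayleigh_ratio, 0.01, 2.0)
print(alpha_best, e_best)  # compare with 8/(9*pi) and -4/(3*pi)
```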

Example

Rayleigh-Ritz Method

Perhaps the most computer-friendly way to implement this method is to represent the trial function as a linear combination of fixed basis functions with variable coefficients. The coefficients are then treated as the variables to be optimized to find the minimum average energy.

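The trial wavefunction is taken to be

$$\psi_t = \sum_i c_i\psi_i$$

where the $\psi_i$ are the basis functions. For real basis functions and real coefficients, the Rayleigh ratio is

$$\langle E\rangle = \frac{\sum_{ij}c_ic_jH_{ij}}{\sum_{ij}c_ic_jS_{ij}}, \qquad H_{ij} = \int\psi_iH\psi_j\,d\tau, \qquad S_{ij} = \int\psi_i\psi_j\,d\tau$$

Finding the minimum value of this ratio involves setting $\partial\langle E\rangle/\partial c_i = 0$ for every $i$, which leads to the equations

$$\sum_j c_j\left(H_{ij} - ES_{ij}\right) = 0$$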

$S_{ij} = \int\psi_i^*\psi_j\,d\tau$ is the overlap integral. This is a set of simultaneous equations for the coefficients $c_j$, and it has a nontrivial solution only if the secular determinant is zero:

$$\det\left|H_{ij} - ES_{ij}\right| = 0$$

The allowed values of $E$ are the roots of this equation; the lowest root is the best (lowest) upper bound to the ground-state energy obtainable with this basis.
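For a two-function basis the secular determinant is a quadratic in $E$, so its roots can be written down directly. The sketch below assumes real basis functions, a positive-definite overlap matrix, and hypothetical Hückel-like matrix elements ($H_{11} = H_{22} = -1$, $H_{12} = -0.5$, $S_{12} = 0.2$, in arbitrary energy units). For this symmetric case the roots are $(H_{11} \pm H_{12})/(1 \pm S_{12})$, that is $-1.25$ and $-0.625$.

```python
import math


def secular_roots_2x2(H, S):
    """Roots E of det|H_ij - E S_ij| = 0 for a two-function real basis."""
    a = S[0][0] * S[1][1] - S[0][1] ** 2
    b = -(H[0][0] * S[1][1] + H[1][1] * S[0][0] - 2.0 * H[0][1] * S[0][1])
    c = H[0][0] * H[1][1] - H[0][1] ** 2
    disc = math.sqrt(b * b - 4.0 * a * c)
    return sorted(((-b - disc) / (2.0 * a), (-b + disc) / (2.0 * a)))


def coefficients(H, S, E):
    """Coefficients (c1, c2) for root E, normalized so that c^T S c = 1.

    Uses the first secular equation and assumes H12 - E*S12 != 0.
    """
    ratio = -(H[0][0] - E * S[0][0]) / (H[0][1] - E * S[0][1])  # c2 / c1
    norm = S[0][0] + 2.0 * ratio * S[0][1] + ratio * ratio * S[1][1]
    c1 = 1.0 / math.sqrt(norm)
    return c1, ratio * c1


H = [[-1.0, -0.5], [-0.5, -1.0]]
S = [[1.0, 0.2], [0.2, 1.0]]
e_low, e_high = secular_roots_2x2(H, S)
c1, c2 = coefficients(H, S, e_low)
print(e_low, e_high, c1, c2)
```

For larger bases one would solve the generalized eigenvalue problem with a linear-algebra library rather than expand the determinant by hand.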

Example

The variation principle leads to an upper bound for the ground state energy of the system.

One could also use it to determine an upper bound for the first excited state by

formulating a trial function that is orthogonal to the ground state function.

Hellmann-Feynman Theorem

Suppose the Hamiltonian depends on a parameter $P$ (such as an internuclear distance). The exact wavefunction is a solution of Schrödinger's equation, so it and its energy also depend on $P$. How does the energy of the system vary as the parameter is varied? The Hellmann-Feynman theorem states that

$$\frac{dE}{dP} = \left\langle\frac{\partial H}{\partial P}\right\rangle$$
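The theorem can be checked numerically on a model Hamiltonian whose dependence on $P$ is known. The sketch assumes a hypothetical $2\times 2$ real matrix $H(P) = H_0 + PW$, so that $\partial H/\partial P = W$, and compares $\langle\psi|W|\psi\rangle$ for the exact lowest eigenvector with a finite-difference derivative of the lowest eigenvalue; the two should agree to within the finite-difference error.

```python
import math


def lowest_eigenpair_2x2(a, b, d):
    """Lowest eigenvalue and normalized eigenvector of the real matrix [[a, b], [b, d]]."""
    theta = 0.5 * math.atan2(2.0 * b, a - d)
    energy = 0.5 * (a + d) - math.hypot(0.5 * (a - d), b)
    return energy, (-math.sin(theta), math.cos(theta))


# Hypothetical model: H(P) = H0 + P * W, so that dH/dP = W.
H0 = (0.0, 0.3, 1.0)  # (a, b, d) entries of H0
W = (0.5, 0.1, -0.2)  # (a, b, d) entries of dH/dP


def hamiltonian(P):
    return tuple(h0 + P * w for h0, w in zip(H0, W))


P, h = 0.7, 1e-5
energy, (u, v) = lowest_eigenpair_2x2(*hamiltonian(P))

# Hellmann-Feynman: dE/dP = <psi| dH/dP |psi> for the exact eigenvector
dE_hf = W[0] * u * u + 2.0 * W[1] * u * v + W[2] * v * v

# Independent check: central finite difference of the exact eigenvalue
dE_fd = (lowest_eigenpair_2x2(*hamiltonian(P + h))[0]
         - lowest_eigenpair_2x2(*hamiltonian(P - h))[0]) / (2.0 * h)
print(dE_hf, dE_fd)
```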

Example

4. Time-Dependent Perturbation Theory

The interaction of an atom or molecule with electromagnetic radiation exposes the system to an electric field that oscillates in time for as long as the perturbation is imposed. Time-dependent perturbation theory is therefore needed to calculate transition probabilities in spectroscopy and the intensities of spectral lines.

In time-dependent perturbation theory, start from a Hamiltonian whose perturbation depends on time:

$$H = H^{(0)} + H^{(1)}(t)$$

For instance, $H^{(1)}(t)$ may be $2H^{(1)}\cos\omega t$. We must use the time-dependent form of Schrödinger's equation (see Postulates):

$$H\Psi = i\hbar\frac{\partial\Psi}{\partial t}$$

If $E_1^{(0)}$ and $E_2^{(0)}$ are the stationary energies of two of the states and $\psi_1^{(0)}$ and $\psi_2^{(0)}$ are their time-independent wavefunctions, the corresponding time-dependent unperturbed wavefunctions are

$$\Psi_n^{(0)}(t) = \psi_n^{(0)}e^{-iE_n^{(0)}t/\hbar}, \qquad \text{which satisfy} \qquad H^{(0)}\Psi_n^{(0)} = i\hbar\frac{\partial\Psi_n^{(0)}}{\partial t}$$

When the perturbation $H^{(1)}(t)$ is on, the state of the system is expressed as a linear combination of the time-dependent basis functions:

$$\Psi(t) = c_1(t)\Psi_1^{(0)}(t) + c_2(t)\Psi_2^{(0)}(t)$$

The coefficients themselves now evolve with time, and the probability that at time $t$ the system is in state $n$ is $|c_n(t)|^2$.

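Substituting the linear combination into Schrödinger's equation gives

$$c_1H^{(0)}\Psi_1^{(0)} + c_2H^{(0)}\Psi_2^{(0)} + c_1H^{(1)}\Psi_1^{(0)} + c_2H^{(1)}\Psi_2^{(0)} = i\hbar\left(\frac{dc_1}{dt}\Psi_1^{(0)} + c_1\frac{\partial\Psi_1^{(0)}}{\partial t} + \frac{dc_2}{dt}\Psi_2^{(0)} + c_2\frac{\partial\Psi_2^{(0)}}{\partial t}\right)$$

Each basis function satisfies the unperturbed time-dependent equation, so those terms cancel, leaving

$$c_1H^{(1)}\Psi_1^{(0)} + c_2H^{(1)}\Psi_2^{(0)} = i\hbar\left(\frac{dc_1}{dt}\Psi_1^{(0)} + \frac{dc_2}{dt}\Psi_2^{(0)}\right)$$

The time variation of each coefficient is extracted by multiplying by $\psi_1^{(0)*}$ (or $\psi_2^{(0)*}$) and integrating. After simplification, with $\hbar\omega_{21} = E_2^{(0)} - E_1^{(0)}$, one obtains

$$c_1H_{11}^{(1)}(t) + c_2H_{12}^{(1)}(t)e^{-i\omega_{21}t} = i\hbar\frac{dc_1}{dt}, \qquad c_1H_{21}^{(1)}(t)e^{i\omega_{21}t} + c_2H_{22}^{(1)}(t) = i\hbar\frac{dc_2}{dt}$$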

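Often the time-dependent perturbation has no diagonal elements, so $H_{11}^{(1)}(t) = H_{22}^{(1)}(t) = 0$, leaving

$$i\hbar\frac{dc_1}{dt} = c_2H_{12}^{(1)}(t)e^{-i\omega_{21}t}, \qquad i\hbar\frac{dc_2}{dt} = c_1H_{21}^{(1)}(t)e^{i\omega_{21}t}$$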

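When the perturbation is absent, the matrix elements $H_{12}^{(1)}(t) = H_{21}^{(1)}(t) = 0$, so $dc_1/dt = dc_2/dt = 0$. The coefficients then keep their initial values, and the state is

$$\Psi(t) = c_1(0)\psi_1^{(0)}e^{-iE_1^{(0)}t/\hbar} + c_2(0)\psi_2^{(0)}e^{-iE_2^{(0)}t/\hbar}$$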

Even though $\Psi(t)$ oscillates with time, the probability of finding the system in either state is constant: in the absence of the perturbation, the system is frozen at its initial composition.

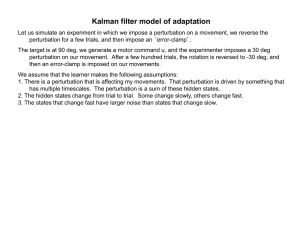

Case of a constant perturbation applied at t =0


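$$H_{12}^{(1)}(t) = \begin{cases}0 & t < 0\\ \hbar V & t \geq 0\end{cases}, \qquad H_{21}^{(1)} = \hbar V^*$$

where $V$ is a constant. Then, for $t \geq 0$,

$$\frac{dc_1}{dt} = -iVc_2e^{-i\omega_{21}t}, \qquad \frac{dc_2}{dt} = -iV^*c_1e^{i\omega_{21}t}$$

Further differentiation gives

$$\frac{d^2c_1}{dt^2} + i\omega_{21}\frac{dc_1}{dt} + |V|^2c_1 = 0, \qquad \frac{d^2c_2}{dt^2} - i\omega_{21}\frac{dc_2}{dt} + |V|^2c_2 = 0$$

The general solutions to these second-order differential equations are

$$c_1 = \left(Ae^{i\Omega t/2} + Be^{-i\Omega t/2}\right)e^{-i\omega_{21}t/2}, \qquad c_2 = \left(A'e^{i\Omega t/2} + B'e^{-i\Omega t/2}\right)e^{i\omega_{21}t/2}, \qquad \Omega = \left(\omega_{21}^2 + 4|V|^2\right)^{1/2}$$

Now suppose that at $t = 0$ the system is definitely in state 1, so $c_1(0) = 1$ and $c_2(0) = 0$. These initial conditions lead to

$$c_1(t) = \left(\cos\tfrac{1}{2}\Omega t + \frac{i\omega_{21}}{\Omega}\sin\tfrac{1}{2}\Omega t\right)e^{-i\omega_{21}t/2}$$

$$c_2(t) = -\frac{2iV^*}{\Omega}\sin\tfrac{1}{2}\Omega t\;e^{i\omega_{21}t/2}$$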

Exact solutions

Rabi Formula

Suppose we are interested in the probability of finding the system in each of the two states as a function of time, given that it was in state 1 at $t = 0$, when the constant perturbation was applied. These probabilities are $P_1(t) = |c_1(t)|^2$ and $P_2(t) = |c_2(t)|^2$. One finds the Rabi formula

$$P_2(t) = \frac{4|V|^2}{\omega_{21}^2 + 4|V|^2}\sin^2\tfrac{1}{2}\left(\omega_{21}^2 + 4|V|^2\right)^{1/2}t$$

and, for a two-state system, $P_1(t) = 1 - P_2(t)$.
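The Rabi formula, $P_2(t) = 4|V|^2/(\omega_{21}^2 + 4|V|^2)\,\sin^2\tfrac{1}{2}\Omega t$ with $\Omega = (\omega_{21}^2 + 4|V|^2)^{1/2}$, can be checked by integrating the coupled equations $dc_1/dt = -iVc_2e^{-i\omega_{21}t}$ and $dc_2/dt = -iV^*c_1e^{i\omega_{21}t}$ directly. The sketch uses a fixed-step fourth-order Runge-Kutta integrator (a generic choice, not part of the notes) with hypothetical values $V = 0.3$ and $\omega_{21} = 1$, in units where the times are dimensionless.

```python
import cmath
import math


def rabi_p2(t, V, w21):
    """Rabi formula: P2(t) for a system in state 1 at t = 0, with hbar*V = H12."""
    omega = math.sqrt(w21**2 + 4.0 * abs(V) ** 2)
    return 4.0 * abs(V) ** 2 / omega**2 * math.sin(0.5 * omega * t) ** 2


def integrate_two_level(t_end, V, w21, steps=5000):
    """RK4 solution of dc1/dt = -iV c2 e^{-i w21 t}, dc2/dt = -iV* c1 e^{i w21 t}."""
    def deriv(t, c1, c2):
        return (-1j * V * c2 * cmath.exp(-1j * w21 * t),
                -1j * V.conjugate() * c1 * cmath.exp(1j * w21 * t))

    dt = t_end / steps
    t, c1, c2 = 0.0, 1.0 + 0j, 0j  # initially definitely in state 1
    for _ in range(steps):
        k1 = deriv(t, c1, c2)
        k2 = deriv(t + dt / 2, c1 + dt / 2 * k1[0], c2 + dt / 2 * k1[1])
        k3 = deriv(t + dt / 2, c1 + dt / 2 * k2[0], c2 + dt / 2 * k2[1])
        k4 = deriv(t + dt, c1 + dt * k3[0], c2 + dt * k3[1])
        c1 += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        c2 += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        t += dt
    return c1, c2


c1, c2 = integrate_two_level(5.0, V=0.3, w21=1.0)
print(abs(c2) ** 2, rabi_p2(5.0, V=0.3, w21=1.0))
```

Setting `w21=0.0` reproduces the degenerate case, where the formula reduces to $\sin^2|V|t$.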

Now consider a couple of special cases:

1. Degenerate pair of states

Here $\omega_{21} = 0$, and the probability of finding the system in state 2, if it was definitely in state 1 at $t = 0$, is

$$P_2(t) = \sin^2|V|t$$

Basically the system oscillates between the two states. The frequency is governed by the

magnitude of V and strong perturbations drive the system between its two states more

rapidly than weak perturbations.

2. Energy levels widely separated

The levels are widely separated in comparison with the strength of the perturbation, $\omega_{21}^2 \gg 4|V|^2$; then

$$P_2(t) \approx \frac{4|V|^2}{\omega_{21}^2}\sin^2\tfrac{1}{2}\omega_{21}t$$

Here the populations of the two states oscillate, but $P_2(t)$ never rises above $4|V|^2/\omega_{21}^2$.

Many-level system

An exact treatment of an $N$-level system requires solving $N$th-order differential equations, so an approximation is used. It is based on the idea that the perturbation is so weak, and applied for so short a time, that all the coefficients remain close to their initial values.

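Then, if the system is in the state $|i\rangle$ at $t = 0$, all the coefficients other than $c_i$ remain close to zero throughout the period for which the perturbation is applied, and the coefficient of any state $|f\rangle$ that is zero initially is given by

$$c_f(t) = \frac{1}{i\hbar}\int_0^t c_i(t')H_{fi}^{(1)}(t')e^{i\omega_{fi}t'}\,dt' \approx \frac{1}{i\hbar}\int_0^t H_{fi}^{(1)}(t')e^{i\omega_{fi}t'}\,dt'$$

where $\hbar\omega_{fi} = E_f^{(0)} - E_i^{(0)}$ and the approximation uses $c_i(t') \approx 1$.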

This ignores the possibility that the perturbation takes the system from its initial state to the final state by an indirect route involving a sequence of several transitions: the perturbation is assumed to act only once. This can be represented by a Feynman diagram.
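The first-order integral $c_f(t) = (1/i\hbar)\int_0^t H_{fi}^{(1)}(t')e^{i\omega_{fi}t'}\,dt'$ can be evaluated by quadrature for any time-dependent matrix element. The sketch below uses composite Simpson's rule (an arbitrary choice) and checks it on a constant perturbation switched on at $t = 0$, for which $|c_f|^2 = 4|H_{fi}|^2\sin^2(\tfrac{1}{2}\omega_{fi}t)/(\hbar\omega_{fi})^2$, the weak-coupling limit of the two-level result. The parameter values are hypothetical, with $\hbar = 1$.

```python
import cmath
import math


def first_order_cf(H_fi, w_fi, t, hbar=1.0, n=2000):
    """c_f(t) = (1/(i hbar)) * integral_0^t H_fi(t') exp(i w_fi t') dt'.

    H_fi is a function of time; composite Simpson's rule with n (even) panels.
    """
    if n % 2:
        raise ValueError("n must be even for Simpson's rule")
    h = t / n
    total = 0j
    for k in range(n + 1):
        tk = k * h
        weight = 1 if k in (0, n) else (4 if k % 2 else 2)
        total += weight * H_fi(tk) * cmath.exp(1j * w_fi * tk)
    return total * h / (3.0 * 1j * hbar)


# Constant perturbation switched on at t = 0 (hypothetical numbers, hbar = 1)
H, w, t = 0.01, 0.8, 12.0
cf = first_order_cf(lambda s: H, w, t)
closed_form = 4 * H**2 * math.sin(0.5 * w * t) ** 2 / w**2
print(abs(cf) ** 2, closed_form)
```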

What about a slowly switched constant perturbation? Such a perturbation is

$$H^{(1)}(t) = \begin{cases}0 & t < 0\\ H^{(1)}\left(1 - e^{-kt}\right) & t \geq 0\end{cases}$$

where $H^{(1)}$ is a time-independent operator and, for slow switching, $k$ is small and positive.

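The result is that for a state that is initially unoccupied

$$c_f(t) = \frac{H_{fi}^{(1)}}{i\hbar}\left\{\frac{e^{i\omega_{fi}t} - 1}{i\omega_{fi}} + \frac{e^{-(k - i\omega_{fi})t} - 1}{k - i\omega_{fi}}\right\}$$

For very long times after the perturbation has reached its final value, $t \gg 1/k$, the exponential $e^{-(k - i\omega_{fi})t}$ vanishes, and for slow switching ($k \ll |\omega_{fi}|$) the denominator in the second term can be replaced by $-i\omega_{fi}$. This leads to

$$c_f(t) = -\frac{H_{fi}^{(1)}}{\hbar\omega_{fi}}e^{i\omega_{fi}t}, \qquad |c_f(t)|^2 = \frac{|H_{fi}^{(1)}|^2}{\left(E_f^{(0)} - E_i^{(0)}\right)^2}$$

which is just the result that would be obtained from first-order time-independent perturbation theory.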
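The slow-switching limit can be checked by evaluating the first-order integral numerically for $H^{(1)}(t) = H_{fi}(1 - e^{-kt})$ and comparing $|c_f|^2$ at long times with the time-independent value $|H_{fi}|^2/(\hbar\omega_{fi})^2$. The sketch assumes hypothetical values ($\hbar = 1$, $H_{fi} = 0.01$, $\omega_{fi} = 1$, $k = 0.002$, $t = 5000$) chosen so that $k \ll \omega_{fi}$ and $kt \gg 1$; the residual difference is of order $k/\omega_{fi}$.

```python
import cmath
import math


def cf_slow_switch(H_fi, w_fi, k, t, hbar=1.0, n=100_000):
    """First-order c_f(t) for H1(t) = H_fi (1 - e^{-k t}), by composite Simpson's rule (n even)."""
    h = t / n
    total = 0j
    for j in range(n + 1):
        s = j * h
        weight = 1 if j in (0, n) else (4 if j % 2 else 2)
        total += weight * H_fi * (1.0 - math.exp(-k * s)) * cmath.exp(1j * w_fi * s)
    return total * h / (3.0 * 1j * hbar)


# Hypothetical parameters (hbar = 1): slow switching k << w_fi and k*t >> 1
H, w, k, t = 0.01, 1.0, 0.002, 5000.0
cf = cf_slow_switch(H, w, k, t)
static_pt = (H / w) ** 2  # |H_fi|^2 / (E_f - E_i)^2 from time-independent theory
print(abs(cf) ** 2, static_pt)
```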

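What about an oscillating perturbation, such as electromagnetic radiation in a spectrometer? A perturbation oscillating with angular frequency $\omega$ and turned on at $t = 0$ has the form

$$H^{(1)}(t) = 2H^{(1)}\cos\omega t = H^{(1)}\left(e^{i\omega t} + e^{-i\omega t}\right) \qquad (t \geq 0)$$

and one obtains

$$c_f(t) = \frac{H_{fi}^{(1)}}{i\hbar}\left\{\frac{e^{i(\omega_{fi} + \omega)t} - 1}{i(\omega_{fi} + \omega)} + \frac{e^{i(\omega_{fi} - \omega)t} - 1}{i(\omega_{fi} - \omega)}\right\}$$

When $\omega$ is close to $\omega_{fi}$ the second term dominates and the first can be neglected. The probability of finding the system in the discrete state $|f\rangle$ after time $t$, if it was initially in state $|i\rangle$ at $t = 0$, is then

$$P_f(t) = \frac{4|H_{fi}^{(1)}|^2}{\hbar^2(\omega_{fi} - \omega)^2}\sin^2\tfrac{1}{2}(\omega_{fi} - \omega)t$$

This is essentially the same as the expression for a static perturbation applied to a two-level system, with $\omega_{21}$ replaced by the frequency offset $\omega_{fi} - \omega$. Note that the probability depends on this frequency offset.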
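The effect of the frequency offset can be seen by evaluating the full first-order coefficient for $H^{(1)}(t) = 2H_{fi}\cos\omega t$ and comparing $|c_f|^2$ with the near-resonance expression that keeps only the $\omega_{fi} - \omega$ term. The sketch uses hypothetical values ($\hbar = 1$, $H_{fi} = 10^{-3}$, $\omega_{fi} = 1$, $t = 100$); the helper `_ramp` is an illustrative name for $(e^{iwt} - 1)/(iw)$, including its limit $t$ at $w = 0$.

```python
import cmath
import math


def _ramp(w, t):
    """(exp(i w t) - 1) / (i w), with its limiting value t at w = 0."""
    if abs(w) < 1e-12:
        return complex(t)
    return (cmath.exp(1j * w * t) - 1.0) / (1j * w)


def cf_oscillating(H_fi, w_fi, w, t, hbar=1.0):
    """First-order c_f(t) for H1(t) = 2 H_fi cos(w t), switched on at t = 0."""
    return H_fi / (1j * hbar) * (_ramp(w_fi + w, t) + _ramp(w_fi - w, t))


def p_near_resonance(H_fi, w_fi, w, t, hbar=1.0):
    """P_f(t) keeping only the resonant (w_fi - w) term."""
    delta = w_fi - w
    if abs(delta) < 1e-12:
        return (abs(H_fi) * t / hbar) ** 2
    return 4 * abs(H_fi) ** 2 * math.sin(0.5 * delta * t) ** 2 / (hbar * delta) ** 2


H, w_fi, t = 1e-3, 1.0, 100.0  # hypothetical values, hbar = 1
for w in (0.5, 0.9, 0.99, 1.0):
    full = abs(cf_oscillating(H, w_fi, w, t)) ** 2
    print(f"w = {w:4.2f}  full = {full:.3e}  near-resonance = {p_near_resonance(H, w_fi, w, t):.3e}")
```

Near resonance the two columns agree to within a few per cent, and far from resonance the transition probability is orders of magnitude smaller.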

Transition to continuum of states

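Now consider a final state that is part of a continuum of states. The molecular density of states is $M(E)$, so that $M(E)\,dE$ is the number of states in the energy range $E$ to $E + dE$. The total transition probability is given by

$$P(t) = \int P_f(t)M(E)\,dE$$

If we express the transition frequency $\omega_{fi}$ in terms of the energy, $\omega_{fi} = E/\hbar$ (setting the energy of the initial state as the zero of energy), one finds

$$P(t) = \int\frac{4|H_{fi}^{(1)}|^2}{\hbar^2(E/\hbar - \omega)^2}\sin^2\tfrac{1}{2}(E/\hbar - \omega)t\;M(E)\,dE$$

At long times the integrand is sharply peaked at $E = \hbar\omega$, so $|H_{fi}^{(1)}|^2$ and $M(E)$ can be treated as constants and taken outside. Using $\int_{-\infty}^{\infty}x^{-2}\sin^2(xt/2)\,dx = \pi t/2$, the integral simplifies to

$$P(t) = \frac{2\pi}{\hbar}|H_{fi}^{(1)}|^2M(E_{fi})\,t, \qquad E_{fi} = \hbar\omega$$

Define the transition rate, $W$, as the rate of change of the probability of being in an initially empty state:

$$W = \frac{dP}{dt}$$

It follows that

$$W = \frac{2\pi}{\hbar}|H_{fi}^{(1)}|^2M(E_{fi})$$

which is called Fermi's Golden Rule.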
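Fermi's Golden Rule, $W = (2\pi/\hbar)|H_{fi}^{(1)}|^2M(E_{fi})$, can be checked numerically by replacing the continuum with a dense ladder of equally spaced final states and summing the near-resonance first-order probabilities. The sketch assumes the same matrix element for every final state, hypothetical parameter values with $\hbar = 1$, and a time $t$ that is long compared with $\hbar$ divided by the width of the ladder but short compared with $2\pi\hbar$ divided by the level spacing (otherwise the discreteness of the ladder would show up).

```python
import math


def total_probability(H_fi, spacing, n_levels, t, hbar=1.0):
    """Sum of near-resonance first-order P_f(t) over a ladder of final states.

    Final state j (j = -n_levels..n_levels) is detuned from resonance by
    delta_j = j * spacing / hbar, so the density of states is M = 1 / spacing.
    """
    total = 0.0
    for j in range(-n_levels, n_levels + 1):
        if j == 0:
            total += (abs(H_fi) * t / hbar) ** 2  # delta -> 0 limit
        else:
            delta = j * spacing / hbar
            total += (4 * abs(H_fi) ** 2 * math.sin(0.5 * delta * t) ** 2
                      / (hbar * delta) ** 2)
    return total


hbar = 1.0
H, spacing, t = 1e-4, 1e-3, 100.0  # hypothetical values
rate_from_sum = total_probability(H, spacing, 10_000, t, hbar) / t
golden_rule = 2 * math.pi / hbar * H**2 * (1.0 / spacing)
print(rate_from_sum, golden_rule)
```

The summed probability grows linearly with $t$, so $P(t)/t$ is a constant rate that matches the Golden Rule value.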

These rates are related to Einstein's transition probabilities.

Lifetime and Energy uncertainty