Marlise's Matrix Model

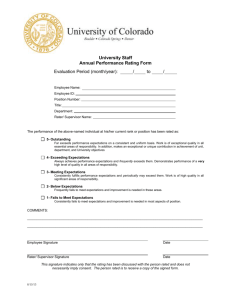

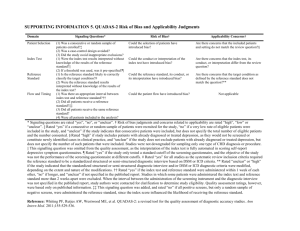

Test of a Model for Predicting Second Language Lexical Growth through Reading
Marlise Horst
Concordia University, Montreal

What’s it going to be then, eh? There was me, that is Alex, and my three droogs, that is Pete, Georgie and Dim, Dim being really dim, and we sat in the Korova Milkbar making up our rassoodocks what to do with the evening, a flip dark chill winter bastard though dry. The Korova Milkbar was a milk-plus mesto, and you may, O my brothers, have forgotten what these mestos were like, things changing so skorry these days and everybody very quick to forget, newspapers not being read much neither. Well, what they sold there was milk plus something else. They had no license for selling liquor, but there was no law yet against prodding some of the new vesches....
(from A Clockwork Orange by Anthony Burgess)

BACKGROUND
1. Has research shown that L2 readers learn new words from reading?
2. Why are word learning gains so small in these read-and-test studies?

[Table: read-and-test studies compared by text, text length, number of items tested and mean number of words learned. Studies and texts: Saragi et al. 1978 (A Clockwork Orange); Ferris 1988 (Animal Farm); Pitts et al. 1989, exp. 2 (A Clockwork Orange); Day et al. 1991 (simplified text); Hulstijn 1992 (simplified text); Dupuy & Krashen 1993 (Trois hommes, video + 15 pages); Horst et al. 1998, exp. 1 (simplified text). *Gain established by comparison to a control group.]

BACKGROUND
3. What do these studies leave unanswered?
• How well are words learned and retained?
• What happens to partially learned words?
• What happens when partially learned words are encountered again?
• How well does reading work for individuals?
What can we expect?

BACKGROUND
4. What might a predictive model offer?
• something to test
• insights into the word learning process
• an eventual explanation?
• a way of quantifying the effectiveness of reading
• a basis for making teaching and learning decisions

RESEARCH QUESTIONS
1. How many new words did the subject learn?
2. How well were the words learned?
3. How well did the matrix model succeed in predicting gains (and losses)?

METHOD
• case study
• eight readings of the same text
• adult learner of Dutch
• comic-book text: Lucky Luke: Tenderfoot
• pre-test on 300 words, each occurring once in the text
• text read weekly (Saturdays)
• tested on the same 300 words weekly (Wednesdays)
• delayed post-test 10 weeks later

TEST
0 = I definitely don't know what this word means
1 = I am not really sure what this word means
2 = I think I know what this word means
3 = I definitely know what this word means

NOTE HOW INCREMENTAL KNOWLEDGE APPEARS IN MATRIX

After 1 reading (rows: rating before the reading; columns: rating after it)

| before | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 75 | 27 | 9 | 3 |
| 1 | 4 | 20 | 20 | 6 |
| 2 | 2 | 4 | 13 | 35 |
| 3 | 0 | 0 | 7 | 75 |

After second reading (rows: rating before the reading; columns: rating after it)

| before | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 53 | 19 | 6 | 2 |
| 1 | 4 | 20 | 20 | 6 |
| 2 | 2 | 4 | 12 | 32 |
| 3 | 0 | 0 | 10 | 109 |

Notice: the diagonal represents words that have not changed position. Words below the diagonal are losing ground; words above it are gaining. With each reading, words move in both directions, but over successive readings more words move up than down.

RESULTS
1. How many new words did the subject learn?
[Figure: number of words (0 to 300) rated "sure I know", "think I know", "not sure" and "don't know" at pre-test, after each of the eight readings, and at the delayed post-test]
Rated 3 on pre-test: 82
Rated 3 after 8th reading: 223
Gain: 141
Gain after 1st reading: 37
Additional gain after 2nd reading: 45

RESULTS
2. How well were the words learned?
[Figure: as above]
Rated 3 after 8th reading: 223
Rated 3 on delayed post-test: 198
Loss: 25
Rated 3 on pre-test: 82
90% of words rated "definitely known" (3) were translated correctly

RESULTS (MATRIX PROCEDURE)
3. How well did the matrix model predict gains?
RAW DATA MATRIX (rows: state at Time 0, before reading; columns: state at Time 1, after 1st reading)

| Time 0 | 0 | 1 | 2 | 3 | Total |
|---|---|---|---|---|---|
| 0 | 75 | 27 | 9 | 3 | 114 |
| 1 | 4 | 20 | 20 | 6 | 50 |
| 2 | 2 | 4 | 13 | 35 | 54 |
| 3 | 0 | 0 | 7 | 75 | 82 |

How to read this data: after one reading, some of the 114 words originally rated 0 (unknown; 75 + 27 + 9 + 3) had moved to other categories, and 75 had remained in category 0.
• The probability that a word rated 0 at pre-test was still rated 0 after one reading was 75/114 = .66.
• The probability that a word rated 0 had moved to 1 (not sure) was 27/114 = .24.
The same calculation for every cell gives the probability matrix.

PROBABILITY MATRIX (rows: state at Time 0, before reading; columns: state at Time 1, after 1st reading)

| Time 0 | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | .66 | .24 | .08 | .02 |
| 1 | .08 | .40 | .40 | .12 |
| 2 | .04 | .07 | .24 | .65 |
| 3 | .00 | .00 | .09 | .91 |

Each probability, multiplied by the original number of words in its row, gives the number in the corresponding cell of the raw data matrix. So 114 original zero ratings × .66 probability of remaining zero ≈ 75 words still at zero after one reading, and so on for all cells.

Now we can use the probabilities to predict how many words will remain at 0 after a second reading (75 × .66 = 49.5) and after a third (49.5 × .66 = 32.67). These figures follow only the words that stay at 0 throughout; the full prediction for state 0 also adds the words that move into it from the other states. Repeating the calculation for all cells, through any number of iterations, produces predictions that can be compared to the human data.

RESULTS
3. How well did the matrix model succeed in predicting gains?

REPORTED WORD KNOWLEDGE (number of words at each state)

| Reading round | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| pre-test | 114 | 50 | 54 | 82 |
| 1 | 81 | 51 | 49 | 119 |
| 2 | 72 | 27 | 37 | 164 |
| 3 | 57 | 33 | 37 | 173 |
| 4 | 48 | 30 | 41 | 181 |
| 5 | 40 | 30 | 39 | 191 |
| 6 | 43 | 26 | 31 | 200 |
| 7 | 39 | 24 | 28 | 209 |
| 8 | 30 | 20 | 27 | 223 |

MATRIX PREDICTIONS (number of words at each state)

| Reading round | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 2 | 60 | 43 | 49 | 147 |
| 3 | 45 | 35 | 47 | 173 |
| 4 | 34 | 28 | 45 | 193 |
| 5 | 27 | 23 | 42 | 209 |
| 6 | 21 | 18 | 40 | 220 |
| 7 | 17 | 15 | 39 | 229 |
| 8 | 14 | 13 | 37 | 236 |
| 16+ (if iterated further) | 6 | 7 | 34 | 253 |

RESULTS
3. How well did the matrix model succeed in predicting gains?
[Figure: Predictions and performance: "definitely known" and "think I know" ratings. Predictions are based on the multipliers (probabilities) from the first reading, then iterated for each additional reading.]
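The matrix procedure can be read as a first-order Markov chain: the four ratings are states, the transition probabilities are estimated once from the pre-test-to-first-reading counts, and the same matrix is then reused for every later reading. The Python sketch below illustrates that reading under this assumption. The helper names (`transition_probabilities`, `one_reading`, `predict`) are hypothetical, and the counts are the ones reported in this talk. It uses unrounded probabilities (75/114 rather than .66), so its predictions for later rounds may differ by a word or two from the MATRIX PREDICTIONS table.

```python
# A minimal sketch, assuming the matrix model behaves like a Markov chain with
# a fixed transition matrix. Helper names are hypothetical; the counts are the
# ones reported in this talk.

# Transition counts: rows = rating at pre-test (0-3),
# columns = rating after the first reading (0-3).
RAW_COUNTS = [
    [75, 27, 9, 3],   # 114 words rated 0 at pre-test
    [4, 20, 20, 6],   # 50 words rated 1
    [2, 4, 13, 35],   # 54 words rated 2
    [0, 0, 7, 75],    # 82 words rated 3
]

# Number of words at each rating (0-3) before any reading.
PRETEST = [114, 50, 54, 82]

# Reported number of words at each rating (0-3) after reading rounds 1-8.
REPORTED = {
    1: [81, 51, 49, 119],
    2: [72, 27, 37, 164],
    3: [57, 33, 37, 173],
    4: [48, 30, 41, 181],
    5: [40, 30, 39, 191],
    6: [43, 26, 31, 200],
    7: [39, 24, 28, 209],
    8: [30, 20, 27, 223],
}


def transition_probabilities(counts):
    """Divide each row by its total, so probs[i][j] estimates the chance that
    a word rated i before a reading is rated j after it."""
    return [[c / sum(row) for c in row] for row in counts]


def one_reading(dist, probs):
    """Apply the matrix once: new[j] = sum over i of dist[i] * probs[i][j]."""
    states = range(len(probs))
    return [sum(dist[i] * probs[i][j] for i in states) for j in states]


def predict(start, probs, rounds):
    """Reuse the same matrix for every reading and return the predicted
    distribution after each round."""
    history, dist = [], list(start)
    for _ in range(rounds):
        dist = one_reading(dist, probs)
        history.append(dist)
    return history


if __name__ == "__main__":
    probs = transition_probabilities(RAW_COUNTS)
    for rnd, pred in enumerate(predict(PRETEST, probs, rounds=8), start=1):
        shown = ", ".join(f"{x:5.1f}" for x in pred)
        print(f"round {rnd}: predicted [{shown}]  reported {REPORTED[rnd]}")
```

Because the probabilities are estimated from the pre-test-to-first-reading transition, the round-1 prediction reproduces the reported 81, 51, 49, 119 by construction. Rounds 2 to 8 are the genuine predictions to set against the REPORTED WORD KNOWLEDGE table. Multiplying the whole distribution by the matrix is what "and so on, for all cells" amounts to; the 75 × .66 illustration follows a single cell of it.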
Figure 8.3 Predictions and performance: "not really sure" and "definitely don't know" ratings

CONCLUSIONS
• An accurate and useful predictor?
• Predicts incidental acquisition?
• A lot of word learning occurred: some complete, some partial.
• Eight reading encounters lead to learning that sticks.
• Reading a text twice makes a big difference.
• Give language learners comic books to read?