HW3Answer


Homework 3

Due date: Friday, October 30 – submit by 11:00 PM

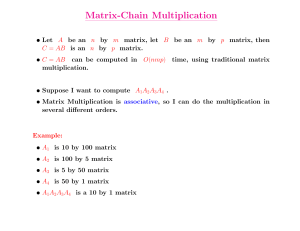

1. Purpose: reinforce your understanding of the matrix-chain multiplication problem, memoization, and recursion.

a) (4 points) Please implement a recursive, a dynamic programming, and a memoized version of the algorithm for solving the matrix-chain multiplication problem described in our textbook (Chapter 15), and design suitable inputs for comparing the run times of your algorithms. Describe the input data, then report, graph, and comment on your results. You don’t have to submit your program.

I implemented the three algorithms and generated random inputs of different sizes to test their performance.
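The submitted program is not part of this answer. As an illustration only, the three variants could look like the minimal sketch below; the function names `recursive_cost`, `dp_cost`, and `memoized_cost` are assumptions, and `p` is the dimension list where matrix A_i is p[i-1] x p[i].

```python
def recursive_cost(p, i, j):
    """Plain recursion: min cost of A_i..A_j, recomputing every subproblem."""
    if i == j:
        return 0
    return min(
        recursive_cost(p, i, k) + recursive_cost(p, k + 1, j) + p[i - 1] * p[k] * p[j]
        for k in range(i, j)
    )


def dp_cost(p):
    """Bottom-up dynamic programming over increasing chain length."""
    n = len(p) - 1
    m = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):
        for i in range(1, n - length + 2):
            j = i + length - 1
            m[i][j] = min(
                m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                for k in range(i, j)
            )
    return m[1][n]


def memoized_cost(p):
    """Top-down recursion that stores each (i, j) result the first time it is computed."""
    memo = {}

    def lookup(i, j):
        if i == j:
            return 0
        if (i, j) not in memo:
            memo[(i, j)] = min(
                lookup(i, k) + lookup(k + 1, j) + p[i - 1] * p[k] * p[j]
                for k in range(i, j)
            )
        return memo[(i, j)]

    return lookup(1, len(p) - 1)
```

Timing each function on the same random dimension list (for example with `time.perf_counter()`) gives a comparison of the kind reported here. The recursive calls of the memoized version go roughly as deep as the chain is long, which is one reason it needs more memory than the bottom-up version.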

The table below shows the running times of the algorithms (0 means the time was too small to measure):

| Number of matrices | Recursive (ms) | Dynamic programming (ms) | Memoization (ms) |
|---|---|---|---|
| 15 | 93 | 0 | 0 |
| 16 | 281 | 0 | 0 |
| 17 | 843 | 0 | 0 |
| 18 | 2515 | 0 | 0 |
| 19 | 7469 | 0 | 0 |
| 20 | 22454 | 0 | 0 |
| 500 | Too long | 437 | 828 |
| 1000 | Too long | 3875 | 7547 |
| 2000 | Too long | 40078 | Out of memory |

The running time of the recursive version clearly grows exponentially. For sizes 15 through 20, the running time roughly triples each time n increases by 1.

For the dynamic programming and memoized versions, the running time grows polynomially rather than exponentially. When the size is 500, dynamic programming takes 437 ms and memoization takes 828 ms. When the size is doubled to 1000, the running times become 3875 ms and 7547 ms respectively, roughly 9 times larger. When the size is doubled again to 2000, dynamic programming takes 40078 ms, roughly 10 times larger again. A factor of 8 to 10 per doubling is close to the factor of 2^3 = 8 expected of a cubic algorithm, and far from the factor of 2 that linear growth would give. We could not collect a result for memoization at size 2000 because the recursive calls ran out of memory.

Therefore, we conclude that the recursive version’s running time is O(a^n), where a is near 3, and that the dynamic programming and memoized versions run in O(n^3) time. The memoized version’s constant factor is larger than that of the dynamic programming version (about twice as large in these measurements), and it requires more memory since it is implemented recursively.
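As a rough check, the growth rates can be estimated directly from the measurements. The sketch below assumes the timings listed in the table and uses two rules of thumb: for exponential growth O(a^n), the ratio between consecutive sizes estimates a; for polynomial growth O(n^k), log2 of the ratio when n doubles estimates k. The variable names are illustrative.

```python
import math

# Recursive timings (ms) for n = 15..20, copied from the table.
recursive_ms = [93, 281, 843, 2515, 7469, 22454]
# Ratio of consecutive timings estimates the base a in O(a^n).
bases = [later / earlier for earlier, later in zip(recursive_ms, recursive_ms[1:])]

# Dynamic programming timings (ms) keyed by number of matrices.
dp_ms = {500: 437, 1000: 3875, 2000: 40078}
# log2 of the ratio when n doubles estimates the exponent k in O(n^k).
exponents = [math.log2(dp_ms[2 * n] / dp_ms[n]) for n in (500, 1000)]

print([round(b, 2) for b in bases])
print([round(e, 2) for e in exponents])
```

With only three data points for the polynomial case, these exponents are indicative rather than precise.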

b) (2 points) Find an optimal parenthesization of a matrix-chain product whose sequence of dimensions is <5, 4, 4, 11, 7, 8, 7, 12, 6, 9, 8, 7, 5, 12>. How many multiplications does this parenthesization require? How many multiplications are required by a naive algorithm that multiplies the matrices in their input order?

The optimal parenthesization:

((A1(A2(((((((((A3A4)A5)A6)A7)A8)A9)A10)A11)A12)))A13)

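The optimal parenthesization requires 2728 multiplications.

The naive algorithm, which multiplies from left to right, always has a left operand with 5 rows, so it requires 5 × (16 + 44 + 77 + 56 + 56 + 84 + 72 + 54 + 72 + 56 + 35 + 60) = 5 × 682 = 3410 multiplications.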
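Both counts can be checked mechanically. The following is a minimal sketch of the textbook bottom-up algorithm with split-point reconstruction, plus a left-to-right cost function; the names `matrix_chain_order`, `parenthesization`, and `left_to_right_cost` are illustrative assumptions, not the program used for this homework.

```python
def matrix_chain_order(p):
    """Return tables m (min costs) and s (best split points), both 1-indexed."""
    n = len(p) - 1
    m = [[0] * (n + 1) for _ in range(n + 1)]
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):
        for i in range(1, n - length + 2):
            j = i + length - 1
            m[i][j] = float("inf")
            for k in range(i, j):
                cost = m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                if cost < m[i][j]:
                    m[i][j] = cost
                    s[i][j] = k
    return m, s


def parenthesization(s, i, j):
    """Rebuild one optimal parenthesization of A_i..A_j from the split table."""
    if i == j:
        return f"A{i}"
    k = s[i][j]
    return f"({parenthesization(s, i, k)}{parenthesization(s, k + 1, j)})"


def left_to_right_cost(p):
    """Cost of ((A1 A2) A3) ...: the left operand always has p[0] rows."""
    return sum(p[0] * p[i] * p[i + 1] for i in range(1, len(p) - 1))


p = [5, 4, 4, 11, 7, 8, 7, 12, 6, 9, 8, 7, 5, 12]
m, s = matrix_chain_order(p)
print(m[1][len(p) - 1], parenthesization(s, 1, len(p) - 1), left_to_right_cost(p))
```

When several parenthesizations tie for the minimum cost, the string printed here may differ from the one written above while having the same cost.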

2. Purpose: reinforce your understanding of chain matrix multiplication and greedy algorithms (when these fail).

(4 points) Recall the matrix chain multiplication problem: minimize the number of scalar multiplications when computing M = M_1 M_2 ... M_n by choosing the optimum parenthesization. For each of the following greedy strategies, prove, by coming up with a counterexample, that it does not work. Recall also that the problem is fully defined by dimensions d_i for i = 0, ..., n, and M_i is a d_{i-1} × d_i matrix. Thus each example you give need only list the d_i values.

(a) First multiply M_i and M_{i+1} whose common dimension d_i is smallest and repeat on the reduced instance that has M_i M_{i+1} as a single matrix, until there is only one matrix.

d_i = {30, 35, 15, 5, 10, 20, 25}. Using the greedy algorithm, the chain would be (A1((A2((A3A4)A5))A6)), which costs 750 + 3000 + 10500 + 17500 + 26250 = 58000 scalar multiplications.

But the optimal is ((A1(A2A3))((A4A5)A6)), which costs only 15125.

(b) First multiply M_i and M_{i+1} whose common dimension d_i is largest and repeat on the reduced instance that has M_i M_{i+1} as a single matrix, until there is only one matrix.

d_i = {30, 35, 15, 5, 10, 20, 25}. Using the greedy algorithm, the chain would be (((A1A2)A3)(A4(A5A6))), which costs 15750 + 5000 + 2250 + 1250 + 3750 = 28000 scalar multiplications.

But the optimal is ((A1(A2A3))((A4A5)A6)), which costs only 15125.

(c) First multiply M_i and M_{i+1} that minimize the product d_{i-1} d_i d_{i+1} and repeat on the reduced instance that has M_i M_{i+1} as a single matrix, until there is only one matrix.

d_i = {30, 35, 15, 5, 10, 20, 25}. Using the greedy algorithm, the chain would be (A1(A2(((A3A4)A5)A6))), which costs 750 + 3000 + 7500 + 13125 + 26250 = 50625 scalar multiplications.

But the optimal is ((A1(A2A3))((A4A5)A6)), which costs only 15125.

(d) First multiply M_i and M_{i+1} that maximize the product d_{i-1} d_i d_{i+1} and repeat on the reduced instance that has M_i M_{i+1} as a single matrix, until there is only one matrix.

d_i = {30, 35, 15, 5, 10, 20, 25}. Using the greedy algorithm, the chain would be ((((A1A2)A3)A4)(A5A6)), which costs 15750 + 5000 + 2250 + 1500 + 7500 = 32000 scalar multiplications.

But the optimal is ((A1(A2A3))((A4A5)A6)), which costs only 15125.
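The four counterexamples can be replayed with a short simulation. This is a sketch under the assumption that each strategy is fully described by a score on the shared index i and a choice of min or max; `greedy_cost`, `common_dim`, `triple_product`, and `optimal_cost` are hypothetical names introduced here.

```python
from functools import lru_cache


def greedy_cost(dims, score, pick):
    """Total cost when the pair chosen by pick(min or max) over score is merged first."""
    d = list(dims)
    total = 0
    while len(d) > 2:
        # i is the index of the dimension shared by the adjacent pair (M_i, M_{i+1}).
        i = pick(range(1, len(d) - 1), key=lambda idx: score(d, idx))
        total += d[i - 1] * d[i] * d[i + 1]
        del d[i]  # the merged matrix has dimensions d[i-1] x d[i+1]
    return total


def common_dim(d, i):
    return d[i]


def triple_product(d, i):
    return d[i - 1] * d[i] * d[i + 1]


def optimal_cost(dims):
    """Reference optimum via memoized recursion over subchains."""
    p = tuple(dims)

    @lru_cache(maxsize=None)
    def best(i, j):
        if i == j:
            return 0
        return min(best(i, k) + best(k + 1, j) + p[i - 1] * p[k] * p[j]
                   for k in range(i, j))

    return best(1, len(p) - 1)


dims = [30, 35, 15, 5, 10, 20, 25]
for name, score, pick in [("(a)", common_dim, min), ("(b)", common_dim, max),
                          ("(c)", triple_product, min), ("(d)", triple_product, max)]:
    print(name, greedy_cost(dims, score, pick), "optimal:", optimal_cost(dims))
```

Ties are broken by taking the leftmost candidate, which is an arbitrary choice; this instance has no ties, so the choice does not affect the result here.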

3. Purpose: reinforce your understanding of dynamic programming.

(3 points) Do problem 15-4 on page 367.

Suppose there are n people in the company, indexed so that a person with a higher title has a lower index. For example, index 1 denotes the top boss of the company, who is the root of the tree.

We define C[i][0] as the optimal conviviality of the tree rooted at i when node i is not selected. Similarly, C[i][1] is the optimal conviviality of the tree rooted at i when node i is selected.

Since the direct children of a selected node i cannot be selected, there are two cases for the root of a tree:

Case 1: If the root i is not selected, the max conviviality is the sum, over its direct children, of the max conviviality of each child's tree with that child either selected or not.

Case 2: If the root i is selected, the max conviviality is the conviviality rating of i itself plus the sum, over its direct children, of the max conviviality of each child's tree with that child not selected.

To compute the max conviviality of the whole tree, which is the tree rooted at node 1, we need the max conviviality of the trees rooted at nodes 2, 3, 4, ..., n. This means that we can divide the problem into subproblems.

Since the tree rooted at node j may be a subtree of the tree rooted at i, where i < j, the subproblems of i and j overlap.

Thus, this problem can be solved using dynamic programming.

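Assume that node i has k children, that Child(i) returns the index of the leftmost child of node i, and that the children of i are indexed consecutively starting from Child(i). Writing conv(i) for the conviviality rating of node i (a symbol introduced here for the root's own rating), we have:

Case 1:

$$C[i][0] = \sum_{j=\mathrm{Child}(i)}^{\mathrm{Child}(i)+k-1} \max\bigl(C[j][0],\ C[j][1]\bigr)$$

Case 2:

$$C[i][1] = \mathrm{conv}(i) + \sum_{j=\mathrm{Child}(i)}^{\mathrm{Child}(i)+k-1} C[j][0]$$

For a leaf (k = 0) both sums are empty, so C[i][0] = 0 and C[i][1] = conv(i).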

The answer to the problem is max(C[1][0], C[1][1]).

For each node i we compute C[i][0] and C[i][1], so there are 2n values to fill. Each node appears as a child in the sums of exactly one parent, so the total work over all the sums is O(n). Thus the running time is Θ(n).
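The recurrence can be sketched in a few lines. The sketch assumes, as stated at the start of this answer, that every child has a larger index than its parent, so filling the table from index n down to 1 handles children before parents. The names `max_conviviality`, `conv`, and `children` are illustrative, and the root's own rating conv[i] is added when node i is selected.

```python
def max_conviviality(conv, children):
    """conv[i]: rating of person i (1-indexed, conv[0] unused).
    children[i]: list of direct subordinates of i, each with index > i."""
    n = len(conv) - 1
    # C[i][0]: best total for subtree of i with i not invited; C[i][1]: with i invited.
    C = [[0, 0] for _ in range(n + 1)]
    for i in range(n, 0, -1):  # children are processed before their parent
        C[i][0] = sum(max(C[j]) for j in children[i])
        C[i][1] = conv[i] + sum(C[j][0] for j in children[i])
    return max(C[1])


# Small made-up hierarchy: 1 supervises 2 and 3; 2 supervises 4 and 5; 3 supervises 6.
conv = [0, 10, 3, 4, 6, 7, 20]
children = {1: [2, 3], 2: [4, 5], 3: [6], 4: [], 5: [], 6: []}
print(max_conviviality(conv, children))
```

Each node is visited once as a parent and once as a child, which matches the linear running time argued above. Recovering the actual guest list would need one extra top-down pass that records which of the two entries was chosen at each node.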

4. Purpose: reinforce your understanding of greedy algorithms.

(3 points) Do problem 16-2(a) on page 402.

Greedy algorithm: First pick the task a_i with the smallest processing time, then repeat on the reduced instance until no task is left.

That is, we first sort the tasks a_i by their processing time.

We then run the tasks one by one in sorted order, and this gives an optimal solution.

Proof:

Suppose we have a set of tasks S = {a1, a2, …, an} with corresponding processing times P = {p1, p2, …, pn}.

Assume that we have scheduled the tasks in some order, that B = {b1, b2, …, bn} lists their processing times in that order, and that C = {c1, c2, …, cn} lists the completion time of each task.

From the definition, we have:

c1 = b1

c2 = b1 + b2

…..

cn = b1 + b2 + … + bn

The average completion time would be:

Avg(c) = (c1+c2+..+cn) / n

= (b1 + (b1 + b2) + .. + (b1+b2+…+bn))/ n

= (n * b1 + (n-1) * b2 + … + bn) / n

The coefficients n, n-1, …, 1 are decreasing, so to minimize the average completion time the largest coefficient must be paired with the smallest processing time: b1 is the smallest processing time, b2 is the second smallest, and so on.
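One way to make this step rigorous is an exchange argument. In the sketch below, S(B) is a name introduced here for the sum of completion times, and B' is the schedule obtained from B by swapping two adjacent tasks at positions i and i+1.

```latex
S(B) = \sum_{i=1}^{n} (n - i + 1)\, b_i

% Swap adjacent tasks with b_i > b_{i+1}; all other terms are unchanged.
S(B') - S(B)
  = \bigl[(n-i+1)\, b_{i+1} + (n-i)\, b_i\bigr]
  - \bigl[(n-i+1)\, b_i + (n-i)\, b_{i+1}\bigr]
  = b_{i+1} - b_i < 0
```

So any schedule in which a longer task immediately precedes a shorter one can be strictly improved by swapping them, and only an order sorted by processing time admits no such improvement.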

Thus, the greedy choice is to always pick the task whose processing time is the smallest among the remaining tasks.
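The greedy rule is short enough to check against brute force on a small instance. This is a sketch with made-up processing times; `average_completion_time` and `greedy_schedule` are illustrative names.

```python
from itertools import permutations


def average_completion_time(order):
    """Average of c_1..c_n when tasks run back to back in the given order."""
    elapsed = 0
    total = 0
    for processing_time in order:
        elapsed += processing_time  # completion time of this task
        total += elapsed
    return total / len(order)


def greedy_schedule(times):
    """Shortest processing time first."""
    return sorted(times)


times = [3, 5, 1, 4]
greedy = average_completion_time(greedy_schedule(times))
brute_force = min(average_completion_time(order) for order in permutations(times))
print(greedy, brute_force)
```

The brute-force search tries all n! orders, so it is only practical for tiny inputs. The greedy schedule costs O(n log n) for the sort.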