What is dynamic programming?

Welcome to our presentation

Presentation topic: Dynamic programming

Presented by:
1. Shahin Pervin (072-20-105)
2. Mostakima Yesmin (092-15-793)
3. Jyotirmoyee Saha (092-15-804)

What is dynamic programming?

Dynamic programming is a technique for solving problems and designing algorithms. It divides a problem into subproblems, solves each subproblem, and combines the subproblem solutions into a solution for the whole problem.

The main difference between dynamic programming and the divide-and-conquer design technique is that dynamic programming stores the solutions of subproblems and reuses them whenever the same subproblem appears again, whereas divide and conquer neither stores nor reuses partial solutions.
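To make the stored-versus-recomputed distinction concrete, here is a minimal Python sketch using Fibonacci numbers, a standard illustration that is not part of the original slides. `fib_plain` recomputes subproblems, as a plain recursive divide and conquer would, while `fib_memo` stores each result with `functools.lru_cache` and reuses it. The call counters are there only to show how much work each version does.

```python
from functools import lru_cache

calls = {"plain": 0, "memo": 0}  # how many times each function body runs

def fib_plain(n: int) -> int:
    """Recompute every subproblem; nothing is stored."""
    calls["plain"] += 1
    if n < 2:
        return n
    return fib_plain(n - 1) + fib_plain(n - 2)

@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    """Store each subproblem's answer the first time it is computed, then reuse it."""
    calls["memo"] += 1
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)

print(fib_plain(20), calls["plain"])  # 6765 21891
print(fib_memo(20), calls["memo"])    # 6765 21
```

Both return the same answer. The plain version makes 21,891 calls for n = 20, while the stored version solves each of the 21 subproblems exactly once.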

Its history

The term dynamic programming was originally used in the 1940s by Richard Bellman to describe the process of solving problems where one needs to find the best decisions one after another.

Where does it apply?

Dynamic programming is typically applied to optimization problems. Such problems can have many possible solutions. Each solution has a value, and we wish to find a solution with the optimal (minimum or maximum) value.

Its steps

The development of a dynamic programming algorithm can be broken into a sequence of four steps:
1. Characterize the structure of an optimal solution.
2. Recursively define the value of an optimal solution.
3. Compute the value of an optimal solution in a bottom-up fashion.
4. Construct an optimal solution from computed information.

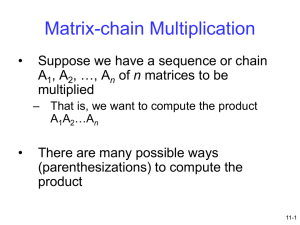

Example

Matrix-chain multiplication: if the chain of matrices is (A1, A2, A3, A4), the product A1A2A3A4 can be fully parenthesized in five distinct ways:

(A1(A2(A3A4)))

(A1((A2A3)A4))

((A1A2)(A3A4))

((A1(A2A3))A4)

(((A1A2)A3)A4)
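The five parenthesizations above can be generated mechanically. A full parenthesization of a chain chooses where the outermost split goes and then parenthesizes each side independently. Here is a short Python sketch; the function name `parenthesizations` is illustrative only.

```python
def parenthesizations(names: list[str]) -> list[str]:
    """Return every full parenthesization of the chain of matrix names."""
    if len(names) == 1:
        return [names[0]]                  # a single matrix needs no parentheses
    results = []
    for k in range(1, len(names)):         # outermost split after position k
        for left in parenthesizations(names[:k]):
            for right in parenthesizations(names[k:]):
                results.append(f"({left}{right})")
    return results

for expr in parenthesizations(["A1", "A2", "A3", "A4"]):
    print(expr)
```

For four matrices this prints the same five expressions in the same order as the list above. Because the number of parenthesizations grows with the Catalan numbers, trying them all quickly becomes impractical for longer chains.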

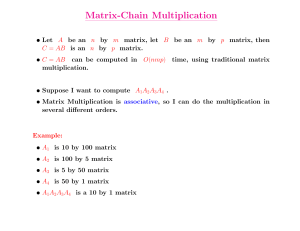

Matrix-chain Multiplication Problem

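Two matrices A and B can be multiplied only if the number of columns of A equals the number of rows of B. If A is a p × q matrix and B is a q × r matrix, the resulting matrix C = AB is a p × r matrix, and computing it with the standard algorithm takes p × q × r scalar multiplications.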
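The p·q·r cost comes from the standard triple-loop algorithm, which performs one scalar multiplication for every combination of row i of A, column j of B, and shared index k. Below is a plain-Python sketch that uses a hypothetical helper, `matmul_count`, to return both the product and the number of multiplications performed.

```python
def matmul_count(A, B):
    """Multiply A (p x q) by B (q x r); return (C, number of scalar multiplications)."""
    p, q = len(A), len(A[0])
    if len(B) != q:
        raise ValueError("columns of A must equal rows of B")
    r = len(B[0])
    C = [[0] * r for _ in range(p)]        # result is p x r
    mults = 0
    for i in range(p):
        for j in range(r):
            for k in range(q):
                C[i][j] += A[i][k] * B[k][j]
                mults += 1                 # one scalar multiplication per (i, j, k)
    return C, mults

A = [[1, 2, 3], [4, 5, 6]]                        # 2 x 3
B = [[1, 0, 0, 1], [0, 1, 0, 1], [0, 0, 1, 1]]    # 3 x 4
C, mults = matmul_count(A, B)
print(mults)  # 24 = 2 * 3 * 4
```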

In the matrix-chain multiplication problem, we are not actually multiplying matrices. The goal is only to determine an order for multiplying the matrices that has the lowest cost.

Matrix-chain Multiplication: Why the Order Matters

We have many options because matrix multiplication is associative. In other words, no matter how we parenthesize the product, the result will be the same. For example, if we had three matrices A, B and C, we would have:

(A(BC)) = ((AB)C)

Only the parenthesization may change, not the order of the matrices, because matrix multiplication is not commutative.

However, the order in which we parenthesize the product affects the number of simple arithmetic operations needed to compute it, and therefore its efficiency. For example, suppose A is a 10 × 100 matrix, B is a 100 × 5 matrix, and C is a 5 × 50 matrix. Then:

((AB)C) = (10 × 100 × 5) + (10 × 5 × 50) = 5000 + 2500 = 7500 scalar multiplications

(A(BC)) = (100 × 5 × 50) + (10 × 100 × 50) = 25000 + 50000 = 75000 scalar multiplications

The first order therefore needs only one tenth as many multiplications as the second.
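You can check the two totals by evaluating a parenthesization recursively, where each multiplication of a p × q result by a q × r result adds p·q·r to the cost. In this sketch an expression is written as a nested pair of matrix names, for example `(("A", "B"), "C")` for ((AB)C). That encoding and the name `chain_cost` are assumptions made for illustration.

```python
def chain_cost(expr, dims):
    """Return (rows, cols, scalar multiplications) for a nested-pair expression."""
    if isinstance(expr, str):              # a single matrix costs nothing
        rows, cols = dims[expr]
        return rows, cols, 0
    left, right = expr
    p, q, cost_left = chain_cost(left, dims)
    q2, r, cost_right = chain_cost(right, dims)
    if q != q2:
        raise ValueError("incompatible dimensions")
    return p, r, cost_left + cost_right + p * q * r

dims = {"A": (10, 100), "B": (100, 5), "C": (5, 50)}
print(chain_cost((("A", "B"), "C"), dims))  # (10, 50, 7500)
print(chain_cost(("A", ("B", "C")), dims))  # (10, 50, 75000)
```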

Structure of an Optimal parenthesization


Notation: $A_{i..j}$ = result of evaluating $A_i A_{i+1} \cdots A_j$ (where $i \le j$)

Any parenthesization of $A_i A_{i+1} \cdots A_j$ with $i < j$ must split the product between $A_k$ and $A_{k+1}$ for some integer $k$ in the range $i \le k < j$.

Cost = cost of computing $A_{i..k}$ + cost of computing $A_{k+1..j}$ + cost of multiplying $A_{i..k}$ and $A_{k+1..j}$ together.

A recursive solution

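Let $m[i, j]$ be the minimum number of scalar multiplications needed to compute $A_{i..j}$, where matrix $A_i$ has dimensions $p_{i-1} \times p_i$. If the optimal parenthesization splits the product between $A_k$ and $A_{k+1}$, then

$$
m[i, j] = m[i, k] + m[k+1, j] + p_{i-1} p_k p_j \quad \text{for some } i \le k < j
$$

and $m[i, i] = 0$ for $i = 1, 2, \ldots, n$.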


But the optimal parenthesization occurs at one value of $k$ among all possible $i \le k < j$, and we do not know in advance which one. So we check all of them and select the best:

$$
m[i, j] =
\begin{cases}
0 & \text{if } i = j \\
\min\limits_{i \le k < j} \{\, m[i, k] + m[k+1, j] + p_{i-1} p_k p_j \,\} & \text{if } i < j
\end{cases}
$$

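The recurrence can be evaluated top-down directly, as long as each m[i, j] is stored the first time it is computed. This minimal Python sketch assumes `p` is a plain list in which matrix A_i has size p[i-1] × p[i]. The names `matrix_chain_cost` and `m` are illustrative and do not come from the slides.

```python
from functools import lru_cache

def matrix_chain_cost(p: list[int]) -> int:
    """Minimum scalar multiplications for A1...An, where Ai is p[i-1] x p[i]."""
    n = len(p) - 1

    @lru_cache(maxsize=None)               # store m(i, j) once it is computed
    def m(i: int, j: int) -> int:
        if i == j:                         # a single matrix needs no multiplication
            return 0
        # try every split point k with i <= k < j and keep the cheapest
        return min(m(i, k) + m(k + 1, j) + p[i - 1] * p[k] * p[j]
                   for k in range(i, j))

    return m(1, n)

print(matrix_chain_cost([10, 100, 5, 50]))  # 7500
```

Because `lru_cache` keeps every (i, j) result, only O(n²) distinct subproblems are solved. Without it, the same recursion would revisit subchains exponentially many times.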

Algorithm to Compute Optimal Cost

The algorithm computes the optimal cost bottom-up. It first fills in the costs for chains of length l = 1 (all 0), then for chains of length l = 2, 3, …, n, so every shorter subchain's cost is already known when it is needed.

Input: array p[0…n] containing the matrix dimensions, and n

Result: minimum-cost table m and split table s

Takes O(n³) time and requires O(n²) space

    MATRIX-CHAIN-ORDER(p[ ], n)
        for i ← 1 to n
            m[i, i] ← 0
        for l ← 2 to n                      // l is the chain length
            for i ← 1 to n-l+1
                j ← i+l-1
                m[i, j] ← ∞
                for k ← i to j-1
                    q ← m[i, k] + m[k+1, j] + p[i-1] p[k] p[j]
                    if q < m[i, j]
                        m[i, j] ← q
                        s[i, j] ← k
        return m and s
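Below is a direct Python translation of MATRIX-CHAIN-ORDER. It stays 1-indexed so the loops mirror the pseudocode, which leaves row 0 and column 0 of the tables unused. The dimension list in the usage example, p = (30, 35, 15, 5, 10, 20, 25), is an assumption. It is the common textbook instance, and it agrees with the values 2625, 5000 and m[3, 5] = 2500 in this presentation's worked example.

```python
import math

def matrix_chain_order(p: list[int]):
    """Bottom-up cost table m and split table s for matrices Ai of size p[i-1] x p[i]."""
    n = len(p) - 1
    m = [[0] * (n + 1) for _ in range(n + 1)]  # m[i][i] = 0 already
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):             # chain length l
        for i in range(1, n - length + 2):     # i = 1 .. n-l+1
            j = i + length - 1
            m[i][j] = math.inf
            for k in range(i, j):              # k = i .. j-1
                q = m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                if q < m[i][j]:
                    m[i][j] = q
                    s[i][j] = k
    return m, s

p = [30, 35, 15, 5, 10, 20, 25]
m, s = matrix_chain_order(p)
print(m[1][6], s[1][6])  # 15125 3
```

The strict `<` keeps the first split point that reaches the minimum, just as the pseudocode does.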


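Worked example (chain lengths l = 2 and l = 3):

For l = 2, each cost is a single product, for example 35 × 15 × 5 = 2625 and 10 × 20 × 25 = 5000.

For l = 3, for example:

$$
m[3,5] = \min
\begin{cases}
m[3,4] + m[5,5] + 15 \cdot 10 \cdot 20 = 750 + 0 + 3000 = 3750 \\
m[3,3] + m[4,5] + 15 \cdot 5 \cdot 20 = 0 + 1000 + 1500 = 2500
\end{cases}
= 2500
$$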

Constructing an optimal solution

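Each entry $s[i, j]$ records the value of $k$ such that the optimal parenthesization of $A_i A_{i+1} \cdots A_j$ splits the product between $A_k$ and $A_{k+1}$. The final multiplication in an optimal order is therefore

$$
A_{1..n} = A_{1..s[1,n]} \; A_{s[1,n]+1..n}
$$

and the left factor is itself split at $s[1, s[1,n]]$:

$$
A_{1..s[1,n]} = A_{1..s[1,s[1,n]]} \; A_{s[1,s[1,n]]+1..s[1,n]}
$$

The same step is applied recursively until every subchain is a single matrix.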

Optimal parenthesization: ((A1(A2A3))((A4A5)A6))
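The recursive construction can be sketched in Python as follows. The bottom-up table builder is repeated so that the block runs on its own. `optimal_parens` (a hypothetical name) follows s[i, j] as described: it splits A_{i..j} at k = s[i, j] and recurses on both halves. The dimension list p is assumed to be the standard six-matrix textbook instance, and with it the sketch reproduces the parenthesization above.

```python
import math

def matrix_chain_order(p):
    """Bottom-up tables m (costs) and s (split points), 1-indexed."""
    n = len(p) - 1
    m = [[0] * (n + 1) for _ in range(n + 1)]
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):
        for i in range(1, n - length + 2):
            j = i + length - 1
            m[i][j] = math.inf
            for k in range(i, j):
                q = m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                if q < m[i][j]:
                    m[i][j], s[i][j] = q, k
    return m, s

def optimal_parens(s, i, j):
    """Split A_{i..j} at k = s[i][j] and parenthesize both halves recursively."""
    if i == j:
        return f"A{i}"
    k = s[i][j]
    return "(" + optimal_parens(s, i, k) + optimal_parens(s, k + 1, j) + ")"

p = [30, 35, 15, 5, 10, 20, 25]   # assumed dimensions: Ai is p[i-1] x p[i]
m, s = matrix_chain_order(p)
print(m[1][6], optimal_parens(s, 1, 6))  # 15125 ((A1(A2A3))((A4A5)A6))
```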

The end

Thank You