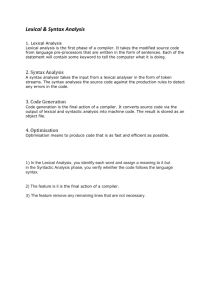

1.2. Human speech recognition
