The relationship between Linguistic Semantics and Controlled

advertisement

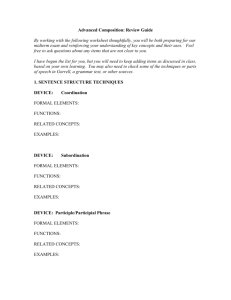

The relationship between Linguistic Semantics and Controlled English to support improved information extraction This technical report is the Quarter 3 deliverable for research carried out on International Technology Alliance (ITA) programme, specifically in Technical Area 6, Project 4, Task 2. The report outlines the various approaches that have been investigated in support of providing mechanisms to link linguistic semantic information to the various conceptual structures within a domain model. This is on-going research and some of the material in the report may be advanced further or superseded, and additional areas of investigation may arise. Some of the material in this report is likely to be used in the planned ICCRTS1 paper titled “Information Extraction using Controlled English to support Knowledge-Sharing and Decision-Making” and therefore the technical report should not be published until the material in that paper has been presented (June 21st 2012) The remainder of this document is the detailed content of the technical report. Language facts When used in support of information extraction processing Controlled English2 (CE) [CE1] is used for two purposes: as the target of the linguistic processing, where the CE is acting as the semantic representation language; as the means by which the language processing tools are configured to perform the processing. For the first purpose it is necessary to have a conceptual model of the domain (as has been described above) and to know the mapping between the words in a typical sentence and concepts in the domain conceptual model; for the second it is necessary to have another conceptual model, that of linguistic concepts and linguistic processing concepts, which is described in this section. However both of these models must be based upon common components in order that the semantics of words can be expressed and mapped onto the semantics of the domain. There is, in effect, a single conceptual model with multiple layers, each layer being based upon the concepts in a higher layer. The current layers (from top to bottom) are as follows: 1 17th ICCRTS 2012: Operationalizing C2 Agility, June 19th-21st 2012, Fairfax Virginia 2 For an introduction to, and definition of, the CE language and associated information please refer to the various [CEx] references, and for information on pre-cursor work on Common Logic Controlled English (CLCE) refer to reference [CE3]. 1. The Meta Model, allowing the description of the concepts themselves (such as ‘relation concept’) and the relations between them. 2. The General Domain Model, containing fundamental concepts such as ‘agent’, ‘spatial entity’, ‘situation’, ‘container’, together with basic relations between them, such as ‘contained in’ and “the situation s1 has the agent a1 as agent role”. 3. The Semiotic Triangle, based on that of Ogden and Richards [SEMTRI], providing fundamental concepts relating meanings, symbols and things in the domain world. Our particular version of the semiotic triangle is given in Figure 1. The high level concept of ‘meaning’ is the parent of the meta model concepts (such as ‘relation concept’) and also of other resource representations of meaning, such as ‘wordnet synset’. Two key relations in the triangle used extensively in the syntax-semantic interface are: o the symbol S stands for the thing T. o the symbol S expresses the meaning M 4. The General Linguistic Model, containing our theory of linguistics, including such concepts as ‘word’, ‘phrase’, ‘noun phrase’ (all subconcepts of ‘symbol’), ‘wordnet synset’ (subconcept of ‘meaning’), and structures such as “linguistic frame’ which holds relationships between CE statements about syntax and semantics (as described below). The general linguistic model also contains syntactic relations between parts of the parse tree, such as “the verb phrase np1 has the noun |dog| as head”. 5. The Domain Model, containing specific concepts (for example this might include ‘place’ or ‘village’ or ‘is located in’). These are based upon the more generic concepts (such as ‘container’ and ‘is contained in’). meaning expresses symbol conceptualises thing stands for Figure 1 – Semiotic Triangle As described below, the parser agent turns a syntactic parse tree into a set of CE sentences that is easier to process via linguistic rules. These sentences use the concepts defined in the general linguistic model. Given the sentence “the patrol in East Dulwich discovers the factory”, this might be turned into sentences including: the noun phrase np1 has the noun |patrol| has head and has the prepositional phrase pp1 as dependent and stands for the thing [001]. the prepositional phrase pp1 has the word |in| as head and has the noun phrase np2 as object. the noun phrase np2 has the proper noun |East Dulwich| as head and stands for the thing [002]. Here the syntax tree is represented in attributes such as ‘dependent’ and ‘head’, and the (minimal) semantics as ‘stands for’ (based on the idea that each noun phrase stands for some object in the domain). Mapping between language facts and domain facts In order to map between the syntax of the sentence and the semantics of the domain, we are assuming in our current research that there is a parser that will provide a basic syntactic parse tree (specifically the Stanford Parser [SP1, SP2]) allowing use to focus on the mapping of this parse tree into the specific semantics as represented in the analyst’s conceptual model. Our understanding of how syntax maps specifically to the semantics of the conceptual model is captured in the concepts and rules of our General Linguistic Model; at this stage we are still developing our understanding although it is based upon exiting linguistic and semantic principles; so in some areas the linguistic model is oversimplistic, and we plan to enhance it as more of our sentence corpus is analysed. Nevertheless we believe that expression of the linguistic model in Controlled English is of benefit in sharing and understanding linguistic processing information with the analyst. The construction of the semantics may be considered at two levels: mapping to general semantics (that which is independent of a specific domain) and mapping to specific semantics (that which is defined in the domain model). We undertake this mapping in an incremental fashion, in the spirit of least commitment, with rules that match general patterns inferring the general semantics followed by rules that match more specific domain-based patterns adding inferences about the more specific semantics. Since the domain model is itself derived from general concepts, this incremental mapping allows the more specific information to be consistent with the general information, but adding more detailed constraints. More specifically we undertake the mapping using the following functions (which may not necessarily follow this sequence): Words in the parse tree are matched to concepts in the domain conceptual model General structures in the parse tree are matched to generic semantic concepts Specific structures in the parse tree are matched to specific concepts Further inferences are made about the specific entities using domain specific rules Matching words to concepts is undertaken via CE sentences such as: the noun |patrol| expresses the entity concept ‘patrol unit’. based on the semantics that nouns represent concepts which are realised (or instantiated) by entities in the domain. Such linking sentences must be derived from the analyst’s understanding of the meaning of the concepts (s)he defined, and a tool called the Analyst’s Helper is being developed for this purpose (see below). Such mapping sentences are provided to cover nouns (linked to entity concepts) adjectives (also linked to entity concepts) and verbs (linked to relation concepts). The mapping is done by rules such as: if ( the noun phrase NP has the noun N as head and stands for the thing T ) and ( the noun N expresses the entity concept C ) then ( the thing T realises the entity concept EC ). where ‘the thing T realises the entity concept EC” states that T may be conceptualised by the concept EC. This maps between the meta level (‘the entity concept EC’) and the domain level (‘the thing T’); it seems that such mapping is required at some point in the syntax-semantic interface and therefore a linguistic model must also include a capability of representing meta models. This one-to-one mapping is simplistic, and we are augmenting the “expresses” CE sentences (and associated rules) with further information indicating pre-conditions that are required before the specific link can be inferred. The most generic mapping of parse tree structures to general semantics contained in the linguistic model (and based in the semiotic triangle) is that noun phrases ‘stand for’ entities in the domain. An example has already been given above where “the noun phrase np2 stands for the thing [002]”; here ‘[002]’ is a constructed unique identity of an entity presumed to exist in the real world (and is written to look like a “reference”). We do not at this stage know what entity [002] is, but later processing may add information about it. A similar CE sentence is used to state that verb phrases ‘stand for’ situations in the domain, where is a situation is a general concept covering event, activity, possession, family relation etc, where multiple entities are involved in different roles, and where additional information such as time and location may be associated. More detailed (but still generic) mapping between syntax and semantics may be represented as logical rules in the linguistic model. The concept of ‘container’ captures the idea that if something is “in” something else (for example expressed as a prepositional phrase headed by “in”), then the second in some sense “contains” the first. The current rule to infer this is: if ( the noun phrase NP1 stands for the thing T1 and has the prepositional phrase PP as dependent ) and ( the prepositional phrase PP has the word '|in|' as head and has the noun phrase NP2 as object ) and ( the noun phrase NP2 stands for the thing T2) then ( the thing T1 is contained in the container T2 ). Here the rule preconditions will match on earlier parse tree CE sentences to infer: the thing [001] is contained in the container [002]. Such a rule will be applicable irrespective of the domain model, but will not infer very specific information; it is left open as to whether a container is a place or an organisation or a time period, etc. However if other more specific inferences about the nature of the container [002] are available, for example that [002] is a place, then a more domainspecific rule might infer that the relationship between [001] and [002] may be specialised into ‘is located in’: if ( the thing T is contained in the container P ) and ( the container P is a place ) then ( the thing T is located in the place P ) inferring the CE sentence: the thing T is located in the place [002]. Further processing is necessary to determine the location of place [002], and the nature of thing [001]. Similar such rules may be used to turn the more generic sentence “the discovery situation s1 has the agent a1 as agent role and has the agent a2 as patient role” into the more specific sentence “the agent a1 finds the agent a2”. System/Architectural description The key user for a Controlled English based system is the non-technical “business” user, and the purpose of the CE language is provide a more human –friendly information representation language to lower the technical barrier between such users and the capabilities of the information processing system. Within the linguistic processing environment described in this paper we believe that there will be a number of natural specialisations either in terms of different individuals involved in the processing, or for smaller implementations perhaps the same individuals but with different operational contexts. Such specialisations might include: domain specialists (such as an intelligence analyst), linguists (to provide system knowledge to help processing of natural language documents), knowledge engineers (to help the domain user to better understanding their world-view, and techniques for modelling this effectively), IT specialists and systems integrators (concerned with the implementation of applications and databases or other middleware to enable an operational environment to be developed). In addition to these specialisations there are also likely to be different user roles, layers of management and work-flow/approval cycles that will be found in any such operational environment. SYNCOIN Reports Message PreProcessor Proper Nouns (places, units) Stanford Parser Entity Extractor Situation Extractor CEStore Names CE Aggregator "Stylistic" CE Conceptual Model (concepts, logical rules, linguistic expression) For Analysis Figure 2 Processing Architecture The aim of CE is to provide a common information representation format that can be used by all parties, with different (but overlapping) domain models supporting each specialisation in support of the whole endeavour. In addition to this there are some research grade tooling capabilities, such as the “CE Store” that can also be used to directly support some of the requirements of the IT specialist staff. CE is designed to be most useful in situations that have the following characteristics: A high degree of human interaction, usually involving specialist users with complex needs in non-trivial environments. A likelihood of rapidly evolving or uncertain tasks, queries or other knowledgebased activities. The need for collaboration, either between different people or teams, and/or across different disciplines. CE is of little value if there is no human-involvement, little complexity, or very firm and stable requirements, and in such circumstances traditional application development processes are a much more straightforward and low risk solution. In cases where there is a high degree of customisation, development, uncertain requirements or short lead times, especially in areas where human-led planning, thinking or decision making are required then CE (or similar human-friendly information processing environments) could be a very useful capability. Ontology-based information extraction, normalization and mapping The ability to define an ontology for a domain and then use this knowledge to enhance information extraction capabilities is a current research topic in the Natural Language Processing and Semantic Information communities. The approach outlined in this paper is very much aligned with this approach since the CE conceptual model(s) are synonymous with Semantic Web ontologies [CE4], but the specific augmentation of the underlying semantic “domain models” with explicit lexical information linkages enables the domain models to be much more strongly linked to the typical natural language terms used when discussing the underlying concepts. We have not undertaken any formal comparisons to specific ontology based information extraction techniques at this stage. Agent / Blackboard architecture Within the general CE-based information-processing environment there is the concept that agents (machine or human) will consume and produce information in the form of CE sentences. From a human perspective this can take the form of any valid CE sentence being contributed by any user at any point in time. This open-ended and unconstrained approach does allow for the unpredictability of human processing and “flashes of insight” that might arise during human thinking, and the assertion of any such new information can be made immediately available (if appropriate) to other machine or human agents within the system for further processing. From a machine-agent perspective there are two distinct types of processing that typically occur: the execution of logical inference rules, which are firmly based on the underlying logic, and which automatically generate rationale [CE2] to explain the reasoning steps for any new “facts” that are inferred; and the execution of agent code which may carry out any set of simple or complex processing against the input information in order to assert new information as a result (for example complex entity analytics, or estimation of current location based on historical information etc). In all cases the agent receives all information from CE sentences and asserts any new information in the form of CE sentences. Such new information may also be extensions to the underlying conceptual model, new logical inference rules, or simply new information to be added to the underlying CE corpus. All such new information is then immediately available for processing by the other agents should that be required, and the rationale is available for interrogation/inspection by the machine or human analyst for decision support for forensic processing [CE5]. Modules The Analyst’s Helper module. Our approach to linguistic processing relies upon the linking of words to concepts, specifically via the “expresses” sentences. Whereas the meaning of natural language words is generally understood by the community of speakers, the authoritative meaning of the concepts is only known to the analyst who developed the conceptual model. Only the analyst can determine the linking of words to the concepts, although (s)he may be assisted by tooling to perform this task. To this end we are developing an “Analyst’s Helper” (AH) to assist the analyst in constructing the linguistic mappings between words and each concept in the conceptual model, that is the “expresses” sentences. To reduce the burden on the analyst, the Analyst’s Helper uses WordNet ® [WN1, WN2, WN3] to suggest possible words for each concept. Each concept in the domain model is matched to all possible WordNet synsets (via a simple analysis of the words in the word senses) and the analyst is invited to choose the best matching synset from those found. When the choice is made, the Analyst’s Helper constructs suitable CE sentences describing the match between the synset and concept, and constructs ‘expresses’ CE sentences linking the words in the synset and the concept. Rationale for these sentences is also specified, to allow future explanation of the NL processing steps. At present this matching process is simplistic, and it is planned to extend the Analyst’s Helper to allow more complex matching of verbs and adjectives, to offer more “remotely” matching synsets and to feedback the sets of unrecognised words from the parser for consideration by the analyst. It may also be possible to build a set of predefined concepts and word/concept mappings which may be used as the basis for the building of a conceptual model by the analyst. conceptual model MetaModel generator meta information Analyst Helper semantic rules "expresses" the word |www| expresses the concept yyy NL parser Proper Names Analyst the word |xxx| is an unrecognised word wordnet/etc ITAnet translate wordnet/etc gazetteers etc translate gazetteers etc Figure 3 Analysts Helper CE Store The CE Store is a research-grade Controlled English processing environment that will be available shortly (during 2012) for evaluation and experimentation purposes with the CE language. The CE Store provides a basic CE processing environment that includes the following high-level capabilities: Basic CE sentence parsing Define/extend any concept model Assert any CE sentence conforming to the appropriate conceptual model(s) Define and execute any CE query Including an example “visual query composition” element Define and execute any CE rule Again, including a visual composition element Define and execute any “CE agent” In the form of Java code which conforms to a simple “CE Store” interface Operate entirely in memory, or persist information to a relational database format Example web-based client to allow rapid development and browsing of CE-based information Example agents to carry out basic information processing tasks Some capability to convert to/from OWL and RDF formats The purpose of the CE Store is to demonstrate a “pure” CE-based implementation of an information-processing environment within which human and machine agents can contribute and interact with complex information based on common conceptual models of a domain. Information Extraction Module Figure 2 shows the structure of the module to extract information from the sentences and to convert them into CE facts, using the formats defined in previous sections. This is based upon a sequence of agents running under the CE Store. Each agent reads the relevant CE sentences from the CE store, performs some processing and places the resulting CE sentences back into the CE store. The following agents are executed: 1. The reports are converted into sentences via the Message Preprocessor agent (as described elsewhere) 2. The Stanford parser agent is called on a sentence. This calls the Stanford parser Java API code [SP1, SP2] to produce a raw parse tree, and then turns the raw parse tree into a CE representation (defining phrases with heads and dependents, as described above). The use of this intermediate CE representation allows for minor deviations in the parse tree representation, and permits the insertion of other parsers in the future. 3. The entity extractor agent analyses the CE head/dependent representation and uses entity extraction rules to generate information about the ‘things’ stood for by the noun phrases, adjective phrases and prepositional phrases as outlined above. The result is a set of entities, their characterisations as domain concepts, and relations between them, as a set of CE sentences. As part of this processing, reference information is used, including: a. the ‘expresses’ links between words and entity concepts b. fact bases of proper nouns and their categorisations, and the domain-level attributes (e.g. the coordinates of places) 4. The situation extractor agent further analyses the CE head/dependent representation of the parse tree together with information about the entities extracted in the previous step. This uses further rules to extract the thematic roles for the verbs and to add further relations between the situation (representing the verb) and the participants in the situation. ‘expresses’ links are also used at this stage. The result is a set of CE sentences about the situation. 5. A “naming” agent is run to provide more readable names for the entities; this agent is in initial development. 6. As a result of the previous steps, there are a number of CE sentences describing the entities and situations. Due to the incremental nature of the architecture, these sentences are small and atomic in form, and are best presented to the user in an more expressive aggregated form. Thus a final “CE aggregation” processor is run to turn the atomic CE into a more “stylistic” CE, using techniques such as: aggregating all information about an entity into a single sentence; not duplicating information; not displaying supertypes that may be inferred; and not displaying relationships that are inferrable from other relationships. This process is also in initial development. The final output, the set of CE sentences representing the entities and relations is now available for further processing and analysis, via machine or human. In some of these steps (specifically 3 and 4) the rationale for the inferred CE sentences is also generated and stored, and is available for presentation to the user if a better understanding of where the information occurred from is required. CE Parser module Our experience of using CE in real applications indicates that it is of benefit but that it is desirable to extend the expressivity of the CE language [CE6], for example by adding prepositional phrases. The extension of the language may eventually add ambiguities, but we suggest that the careful and incremental addition of new expressiveness will allow control of such ambiguity. Our approach is to extend the CE language and associated parsing system to more closely match the syntactic and semantic structure of real Natural language, by following the same linguistic theories, and using the same linguistic model (including rules) in both CE and NL processing. Thus our CE becomes a more closely constrained version of a real NL. We believe this has several advantages: 1. We can use the CE language as a controlled example of a realistic NL, allowing the exploration of linguistic processing techniques including the representation of linguistic models in CE itself. This may help to define configuration capabilities for NL processing tools. 2. We can use our understanding of a real NL to guide the selection of new syntax and associated semantics in order to extend CE without introducing uncontrolled ambiguity 3. We can reuse models, rules and processing technologies in both CE and NL processing As part of this parallelisation of CE and NL processing we have developed the notion of a ‘linguistic frame’, as part of the CE linguistic model. A linguistic frame defines a phrase structure both as a syntactic component and a semantic component, together with the ways of mapping between the interface. A linguistic frame is really a specialised type of logical relationship, and we have integrated an interpreter of these logical relationships as part of a chart parser to provide the basis for a CE parser. There is a close correspondence between the logic in a linguistic frame and the rules used in the NL parsing, and it is our intention to further parallelise the processing of NL and CE. ACKNOWLEDGMENT This research was sponsored by the U.S. Army Research Laboratory and the U.K. Ministry of Defence and was accomplished under Agreement Number W911NF-06-3-0001. The views and conclusions contained in this document are those of the author(s) and should not be interpreted as representing the official policies, either expressed or implied, of the U.S. Army Research Laboratory, the U.S. Government, the U.K. Ministry of Defence or the U.K. Government. The U.S. and U.K. Governments are authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation hereon. References [CE1] Mott, D., Summary of Controlled English, ITACS, https://www.usukitacs.com/?q=node/5424, May 2010 [CE2] Mott, D. Status on Work on Rationale and CNLs https://www.usukitacs.com/?q=node/4420 [CE3] Common Logic Controlled English, Sowa, J., March 2007, http://www.jfsowa.com/clce/clce07.htm [CE4] Mott, D., The representation of logic within semantic web languages, ITACS https://www.usukitacs.com/?q=node/4986 August 2009 [CE5] Mott, D., and Dorneich, M. C., “Visualising rationale in the CPM”, 3rd Annual Conference of the International Technology Alliance (ACITA), Maryland, USA, 2009 [CE6] Mott, D. and Hendler, J., Layered Controlled Natural Languages, 3rd Annual Conference of the International Technology Alliance (ACITA), Maryland, USA, 2009 [WN1] Wordnet, a lexical database for English, http://wordnet.princeton.edu/ [WN2] George A. Miller (1995). WordNet: A Lexical Database for English. Communications of the ACM Vol. 38, No. 11: 39-41. [WN3] Christiane Fellbaum (1998, ed.) WordNet: An Electronic Lexical Database. Cambridge, MA: MIT Press. [SEMTRI] Ogden, C. K. and Richards, I. A. The Meaning of Meaning (1923) [SP1] The Stanford Parser, A statistical parser, http://nlp.stanford.edu/software/lexparser.shtml [SP2] Dan Klein and Christopher D. Manning. 2003. Accurate Unlexicalized Parsing. Proceedings of the 41st Meeting of the Association for Computational Linguistics, pp. 423-430.