This is a statistical thermodynamics text focused on forces that


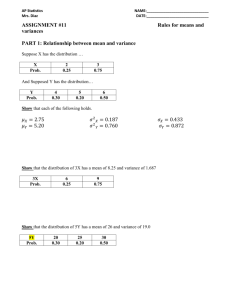

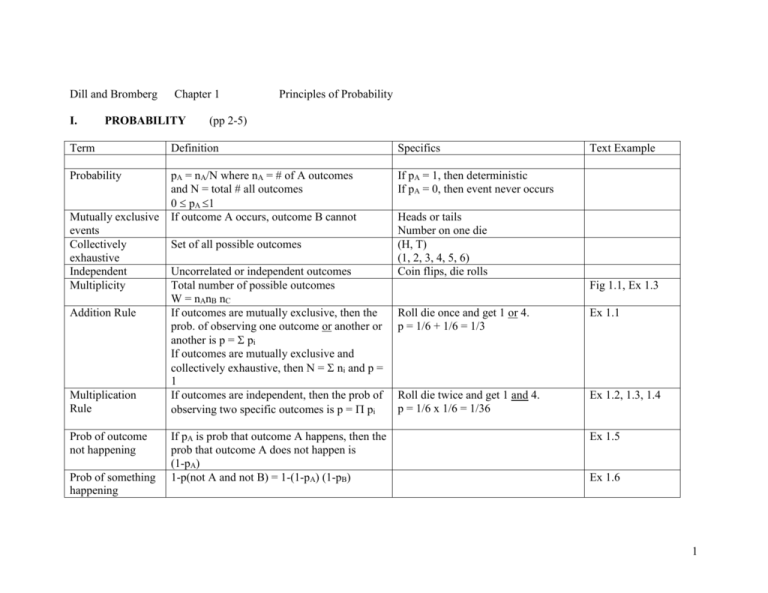

Dill and Bromberg

I. Chapter 1 PROBABILITY: Principles of Probability (pp 2-5)

| Term | Definition | Specifics | Text Example |
|---|---|---|---|
| Probability | $p_A = n_A/N$, where $n_A$ = number of A outcomes and $N$ = total number of all outcomes | $0 \le p_A \le 1$. If $p_A = 1$, the outcome is deterministic. If $p_A = 0$, the event never occurs. | |
| Mutually exclusive events | If outcome A occurs, outcome B cannot | | Heads or tails; number on one die |
| Collectively exhaustive | Set of all possible outcomes | | (H, T); (1, 2, 3, 4, 5, 6) |
| Independent | Uncorrelated or independent outcomes | | Coin flips, die rolls |
| Multiplicity | Total number of possible outcomes | $W = n_A n_B n_C$ | Fig 1.1, Ex 1.3 |
| Addition Rule | If outcomes are mutually exclusive, the probability of observing one outcome or another or another is $p = \sum_i p_i$ | If outcomes are mutually exclusive and collectively exhaustive, then $N = \sum_i n_i$ and $\sum_i p_i = 1$ | Roll a die once and get 1 or 4: $p = 1/6 + 1/6 = 1/3$. Ex 1.1 |
| Multiplication Rule | If outcomes are independent, the probability of observing two specific outcomes is $p = \prod_i p_i$ | | Roll a die twice and get 1 on the first roll and 4 on the second: $p = 1/6 \times 1/6 = 1/36$. Ex 1.2, 1.3, 1.4 |
| Prob of outcome not happening | If $p_A$ is the probability that outcome A happens, the probability that A does not happen is $1 - p_A$ | | Ex 1.5 |
| Prob of something happening | $1 - p(\text{not A and not B}) = 1 - (1 - p_A)(1 - p_B)$ | | Ex 1.6 |

II. EVENTS (pp 5-13)

The probability of some situations cannot be found from the addition or multiplication rules alone, but reformulating the situation in terms of composite events allows these rules to answer it.

| Term | Definition |
|---|---|
| Elementary | A single event |
| Composite | Set of elementary events |
| Correlated or Conditional | An event is conditional on the outcome of an earlier event. If events are independent, then $p(B \mid A) = p(B)$ and $p(B \mid A)\,p(A) = p(B)\,p(A)$, which is the multiplication rule above; this is an a priori probability. If events are conditional, the probability is a posteriori. |
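The die-roll examples of Section I, and the statement that $p(B \mid A) = p(B)$ for independent events, can be checked by direct enumeration. The sketch below is illustrative only: it assumes a fair six-sided die, and the helper name `prob` is hypothetical, not taken from the text.

```python
from fractions import Fraction
from itertools import product

FACES = range(1, 7)  # assumption: one fair six-sided die


def prob(event, outcomes):
    """Probability as n_A / N over equally likely outcomes."""
    outcomes = list(outcomes)
    favourable = sum(1 for o in outcomes if event(o))
    return Fraction(favourable, len(outcomes))


# Addition rule: a single roll shows 1 or 4 (mutually exclusive outcomes).
p_1_or_4 = prob(lambda r: r in (1, 4), FACES)

# Multiplication rule: first roll is 1 and second roll is 4 (independent rolls).
two_rolls = list(product(FACES, repeat=2))
p_1_then_4 = prob(lambda r: r == (1, 4), two_rolls)

# Conditional probability p(B|A) = p(AB) / p(A),
# with A = "first roll is 1" and B = "second roll is 4".
p_A = prob(lambda r: r[0] == 1, two_rolls)
p_B = prob(lambda r: r[1] == 4, two_rolls)
p_B_given_A = p_1_then_4 / p_A

# Something happening: at least one of the two rolls shows a 1.
p_at_least_one_1 = prob(lambda r: 1 in r, two_rolls)

print(p_1_or_4, p_1_then_4, p_B_given_A, p_at_least_one_1)
```

Exact fractions are used so that the enumerated values can be compared directly with $1/3$, $1/36$, and $1 - (1 - p_A)(1 - p_B)$ without rounding.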
| Term | Definition | Specifics | Text Examples |
|---|---|---|---|
| Elementary and composite events | | The addition and multiplication rules apply when events are independent AND composite events are mutually exclusive | Ex 1.7, Ex 1.8 |
| Correlated or Conditional | Conditional prob: $p(B \mid A)$ = prob of B (second event) given A (first event). Joint prob: $p(AB)$ = prob that both events, A and B, will occur. | General Multiplication Rule (Bayes Rule): $p(AB) = p(B \mid A)\,p(A) = p(A \mid B)\,p(B)$. Holds for independent events and also conditional events; the rule on p. 4 is a special case. | Ex 1.9, T 1.1 |
| Correlated or Conditional | If events are mutually exclusive, then $p(AB) = 0$ and the probability follows the addition rule above | General Addition Rule: $p(A \vee B) = p(A) + p(B) - p(A \wedge B)$, with $p(A \wedge B) = p(AB)$; the rule on p. 3 is a special case. If events are independent, then $p(A \wedge B) = p(A)\,p(B)$. | Ex 1.10 |

| Term | Definition | Specifics | Text Examples |
|---|---|---|---|
| Correlated or Conditional | Degree of correlation: $g = \dfrac{p(AB)}{p(A)\,p(B)} = \dfrac{p(B \mid A)}{p(B)}$ = conditional prob of B divided by unconditional prob of B alone | If $g = 1$, events A and B are independent and not correlated. If $g > 1$, A and B are positively correlated. If $g < 1$, A and B are negatively correlated. If $g = 0$ and A occurs, B will not. | |
| Combinatorics | Counting events; concerned with the composition of events, not the sequence | Central to thermodynamics: entropy is the tendency of matter to disorder | |
| Combinatorics (distinguishable objects) | $W = N!$ = number of permutations, or different sequences, of N distinguishable objects | Eqn 1.2 | Ex 1.11 |
| Combinatorics (indistinguishable objects) | $W = N!/\prod_i n_i!$, where $n_i$ is the number of mutually indistinguishable objects in category $i$ | Eqn 1.18 | Ex 1.12, Ex 1.13, Ex 1.14, Ex 1.15, Ex 1.16, Ex 1.17 |

Ex. 1.17: Consider n particles placed in M boxes, or equivalently n particles with M-1 movable box walls (Fig 1.3). How many permutations are there for arranging n particles and M-1 walls?

$$W(n, M) = \frac{(M+n-1)!}{(M-1)!\,n!} \qquad \text{(Eqn 1.120)}$$

III. DISTRIBUTION FUNCTIONS (pp 13-21)

| Term | Definition |
|---|---|
| Distribution Function (DF) | A DF describes a collection of probabilities $p(i)$, with $\sum_i p(i) = 1$ |
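Before going further into distribution functions, the counting formulas of Section II ($W = N!$, $W = N!/\prod_i n_i!$, and $W(n, M)$ for particles in boxes) can be checked against brute-force enumeration. This is a minimal sketch under stated assumptions: the function names and the small test cases ("ABCD", "AABBB", 3 particles in 4 boxes) are chosen here for illustration and are not from the text, and the particles are treated as indistinguishable.

```python
from itertools import permutations, product
from math import factorial, prod


def multiplicity(counts):
    """W = N! / prod(n_i!) for N objects in categories of sizes n_i."""
    n_total = sum(counts)
    return factorial(n_total) // prod(factorial(n) for n in counts)


def boxes_multiplicity(n, m):
    """W(n, M) = (M + n - 1)! / ((M - 1)! n!) for n identical particles in M boxes."""
    return factorial(m + n - 1) // (factorial(m - 1) * factorial(n))


# Distinguishable objects: every ordering of "ABCD" is a different sequence.
distinguishable_sequences = len(set(permutations("ABCD")))

# Partly indistinguishable objects: orderings of "AABBB" (n_A = 2, n_B = 3).
distinct_sequences = len(set(permutations("AABBB")))

# Particles in boxes: assign each of n particles to one of M boxes, then
# forget which particle is which by sorting the box labels.
n, m = 3, 4
occupancies = {tuple(sorted(a)) for a in product(range(m), repeat=n)}

print(distinguishable_sequences, multiplicity([1, 1, 1, 1]))
print(distinct_sequences, multiplicity([2, 3]))
print(len(occupancies), boxes_multiplicity(n, m))
```

Each printed pair compares an enumerated count with the closed-form value; enumeration grows factorially, so the check is only practical for small cases.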
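| Term | Definition | Specifics | Text Examples |
|---|---|---|---|
| Probability Density | $p(x)$, where $x$ refers to a continuous property; $\int p(x)\,dx = 1$ if normalized | | |
| Binomial Distribution Function (t = 2) | $P(n, N)$ = probability that a series of N trials has n A outcomes and (N-n) B outcomes in any order: $P(n, N) = p^n (1-p)^{N-n}\,\dfrac{N!}{n!\,(N-n)!}$ (Eqn 1.28), with $\sum_{n=0}^{N} P(n, N) = 1$ | Each independent elementary event has 2 mutually exclusive outcomes | |
| Multinomial DF (general t) | $P(n_1, n_2, \ldots, n_t, N) = p_1^{n_1} p_2^{n_2} p_3^{n_3} \cdots p_t^{n_t} \times \dfrac{N!}{\prod_i n_i!}$, with $\sum_{i=1}^{t} n_i = N$ | Eqn 1.31 | |
| DF average value (expectation value) | Consider a property $f(x)$ that depends on $x$. The DF $p(x)$ describes the distribution of $x$ values (assumed continuous), so the average of $f(x)$ depends on the distribution of $x$ according to Eqn 1.36: $\langle f(x) \rangle = \int f(x)\,p(x)\,dx = \dfrac{\int f(x)\,\psi(x)\,dx}{\int \psi(x)\,dx}$ | $\langle a f(x) \rangle = a \langle f(x) \rangle$; $\langle f(x) + g(x) \rangle = \langle f(x) \rangle + \langle g(x) \rangle$. In this formula $\psi(x)$ acts as an unnormalized distribution, $p(x) = \psi(x)/\int \psi(x)\,dx$; for a quantum mechanical particle (atom, electron, etc.) described by a wavefunction $\psi$, the corresponding weight is $\lvert \psi(x) \rvert^2$. | Ex 1.20, 1.21, 1.22, 1.23 |
| DF std deviation (variance) | $\sigma^2$ = measure of distribution width $= \langle (x - \langle x \rangle)^2 \rangle = \langle x^2 \rangle - \langle x \rangle^2$ | Let the mean value $\langle x \rangle = a$ = constant; then $\langle (x - a)^2 \rangle = \langle x^2 \rangle - 2a \langle x \rangle + a^2 = \langle x^2 \rangle - a^2$ | Same as above |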
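The binomial distribution and the averaging rules above can be checked numerically. The sketch below uses the discrete sum $\langle f(n) \rangle = \sum_n f(n)\,P(n, N)$ in place of the integral in Eqn 1.36; the values $N = 10$ and $p = 0.3$ and the function names are arbitrary choices made here for illustration, not taken from the text.

```python
from math import comb, isclose


def binomial(n, N, p):
    """P(n, N) = p^n (1 - p)^(N - n) N! / (n! (N - n)!)."""
    return comb(N, n) * p**n * (1 - p) ** (N - n)


def average(f, N, p):
    """<f(n)> = sum over n = 0..N of f(n) P(n, N)."""
    return sum(f(n) * binomial(n, N, p) for n in range(N + 1))


N, p = 10, 0.3  # illustrative values only

total = average(lambda n: 1, N, p)          # normalization, should be 1
mean = average(lambda n: n, N, p)           # <n>
mean_sq = average(lambda n: n * n, N, p)    # <n^2>

# Two routes to the variance: <(n - <n>)^2> and <n^2> - <n>^2.
variance_direct = average(lambda n: (n - mean) ** 2, N, p)
variance_moments = mean_sq - mean**2

# Linearity of the average: <a f> = a <f>.
a = 2.5
scaled_mean = average(lambda n: a * n, N, p)

print(total, mean, variance_direct, variance_moments)
print(isclose(scaled_mean, a * mean))
```

The two variance expressions agree to floating-point precision, which is the identity $\langle (x - \langle x \rangle)^2 \rangle = \langle x^2 \rangle - \langle x \rangle^2$ applied to a discrete distribution.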