Additional introductory texts

advertisement

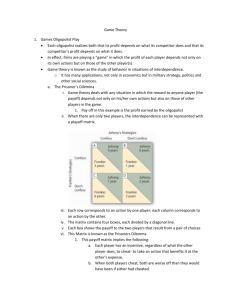

The LSE Summer School 2004 Management Programme
MG106 - Organisation and Strategic Management
Seminar 05: Game Theory
Additional Introductory Readings

Texts 1+2: The Prisoners’ Dilemma

The "Prisoners' Dilemma" is the most basic model of Game Theory. The story goes that two criminals are arrested after a crime, and are immediately separated into two police cells. The police know that they committed the crime but have no evidence. Each prisoner is approached individually and told that if they confess and implicate the other person, they will get a significantly reduced sentence.

The dilemma for each prisoner is as follows: If I confess, I get a reduced sentence and my colleague gets a full sentence. If I trust him not to confess and keep quiet, but he confesses, I get the full sentence. If I trust him not to confess and keep quiet, and he does the same, we can both walk free. Of course, if we both confess, we both get the full sentence! Unfortunately, we cannot communicate now that we are in the cells, and I do not know whether he will honour any agreement we made before we were arrested.

*****

Prisoner's Dilemma game: A two-person game in which each player chooses between a cooperative C strategy and a defecting D strategy, each receiving a higher payoff from D than C irrespective of whether the other player chooses C or D, but each receiving a higher payoff if both choose C than if both choose D.

Its name derives from an interpretation first applied to it by the US mathematician Albert W(illiam) Tucker (1905–95) in a seminar at the Psychology Department of Stanford University in 1950: Two people, charged with involvement in a serious crime, are arrested and prevented from communicating with each other. There is insufficient evidence to obtain a conviction unless at least one of them discloses incriminating information, and each prisoner has to choose between concealing the information (C) and disclosing it (D).
If both conceal, both will be acquitted, the second-best payoff for each; if both disclose, both will be convicted, the third-best payoff for each; and if only one discloses and one conceals, then according to a deal offered to them, the one who discloses will be acquitted and will receive a reward for helping the police, the best possible payoff, and the other will receive an especially heavy sentence from the court, the worst possible payoff.

The game is paradoxical, because D is a dominant strategy for both players, so that it is rational for each player to choose it whatever the other player does, but each player receives a higher payoff if they both choose their dominated C strategies than if they both choose D.

Source: Dictionary of Psychology. Andrew M. Colman. Oxford University Press, 2001.

© Niels-Erik Wergin 2003-2004

Text 3: Game Theory

While game theory has applications to "games" such as poker and chess, it is the social situations that are the core of modern research in game theory. Game theory has two main branches: Noncooperative game theory models a social situation by specifying the options, incentives and information of the "players" and attempts to determine how they will play. Cooperative game theory focuses on the formation of coalitions and studies social situations axiomatically. This article will focus on noncooperative game theory.

Game theory starts from a description of the game. There are two distinct but related ways of describing a game mathematically. The extensive form is the most detailed way of describing a game. It describes play by means of a game tree that explicitly indicates when players move, which moves are available, and what they know about the moves of other players and nature when they move. Most important, it specifies the payoffs that players receive at the end of the game.

Strategies

Fundamental to game theory is the notion of a strategy.
A strategy is a set of instructions that a player could give to a friend or program on a computer so that the friend or computer could play the game on her behalf. Generally, strategies are contingent responses: in the game of chess, for example, a strategy should specify how to play for every possible arrangement of pieces on the board.

An alternative to the extensive form is the normal or strategic form. This is less detailed than the extensive form, specifying only the list of strategies available to each player. Since the strategies specify how each player is to play in each circumstance, we can work out from the strategy profile specifying each player's strategy what payoff is received by each player. This map from strategy profiles to payoffs is called the normal or strategic form. It is perhaps the most familiar form of a game, and is frequently given in the form of a game matrix:

| Player 1 \ Player 2 | not confess | confess |
|---|---|---|
| not confess | 5,5 | 0,9 |
| confess | 9,0 | 1,1 |

This matrix is the celebrated Prisoner's Dilemma game. In this game the two players are partners in a crime who have been captured by the police. Each suspect is placed in a separate cell, and offered the opportunity to confess to the crime. The rows of the matrix correspond to strategies of the first player. The columns are strategies of the second player. The numbers in the matrix are the payoffs: the first number is the payoff to the first player, the second the payoff to the second player.

Notice that the total payoff to both players is highest if neither confesses so each receives 5. However, game theory predicts that this will not be the outcome of the game (hence the dilemma). Each player reasons as follows: if the other player does not confess, it is best for me to confess (9 instead of 5). If the other player does confess, it is also best for me to confess (1 instead of 0). So no matter what I think the other player will do, it is best to confess.
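The reasoning above can be checked mechanically. The following is a minimal Python sketch, not part of the original reading: the names `PAYOFFS`, `STRATEGIES` and `dominant_strategy` are illustrative assumptions, and the numbers are copied from the matrix above. It looks for a strategy that gives a strictly higher payoff than every alternative, whatever the other player does.

```python
# Payoffs copied from the matrix above: PAYOFFS[row][col] is
# (payoff to Player 1, payoff to Player 2).
STRATEGIES = ["not confess", "confess"]
PAYOFFS = [
    [(5, 5), (0, 9)],
    [(9, 0), (1, 1)],
]


def dominant_strategy(payoffs, player):
    """Return the index of a strictly dominant strategy, or None.

    player 0 chooses the row, player 1 chooses the column.
    """
    n_rows, n_cols = len(payoffs), len(payoffs[0])
    own = range(n_rows) if player == 0 else range(n_cols)
    other = range(n_cols) if player == 0 else range(n_rows)

    def payoff(mine, theirs):
        cell = payoffs[mine][theirs] if player == 0 else payoffs[theirs][mine]
        return cell[player]

    for s in own:
        # s is dominant if it beats every alternative against every opposing choice.
        if all(payoff(s, t) > payoff(alt, t)
               for t in other for alt in own if alt != s):
            return s
    return None


row_dom = dominant_strategy(PAYOFFS, 0)
col_dom = dominant_strategy(PAYOFFS, 1)
print("Player 1:", STRATEGIES[row_dom])
print("Player 2:", STRATEGIES[col_dom])
```

Under these payoffs the check reports "confess" for both players, matching the argument in the text.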
The theory predicts, therefore, that each player following her own self-interest will result in confessions by both players.

Equilibrium

The previous example illustrates the central concept in game theory, that of an equilibrium. This is an example of a dominant strategy equilibrium: the incentive of each player to confess does not depend on how the other player plays. Dominant strategy equilibrium is the most persuasive notion of equilibrium known to game theorists. In the experimental laboratory, however, players who play the prisoner's dilemma sometimes cooperate. The view of game theorists is that this does not contradict the theory, so much as reflect the fact that players in the laboratory have concerns besides monetary payoffs. An important current topic of research in game theory is the study of the relationship between monetary payoffs and the utility payoffs that reflect players' real incentive for making decisions.

By way of contrast to the prisoner's dilemma, consider the game matrix below:

| Player 1 \ Player 2 | opera | ballgame |
|---|---|---|
| opera | 1,2 | 0,0 |
| ballgame | 0,0 | 2,1 |

This is known as the Battle of the Sexes game. The story goes that a husband and wife must agree on how to spend the evening. The husband (player 1) prefers to go to the ballgame (2 instead of 1), and the wife (player 2) to the opera (also 2 instead of 1). However, they prefer agreement to disagreement, so if they disagree both get 0.

This game does not admit a dominant strategy equilibrium. If the husband thinks the wife's strategy is to choose the opera, his best response is to choose opera rather than ballgame (1 instead of 0). Conversely, if he thinks the wife's strategy is to choose the ballgame, his best response is ballgame (2 instead of 0). While in the prisoner's dilemma the best response does not depend on what the other player is thought to be doing, in the battle of the sexes the best response depends entirely on what the other player is thought to be doing.
This is sometimes called a coordination game to reflect the fact that each player wants to coordinate with the other player.

For games without dominant strategies the equilibrium notion most widely used by game theorists is that of Nash equilibrium. In a Nash equilibrium, each player plays a best response, and correctly anticipates that her opponent will do the same. The battle of the sexes game has two Nash equilibria: both go to the opera, or both go to the ballgame. If each expects the other to go to the opera (ballgame), the best response is to go to the opera (ballgame). By way of contrast, one going to the opera and one to the ballgame is not a Nash equilibrium: since each correctly anticipates that the other is doing the opposite, neither one is playing a best response.

Games with more than one equilibrium pose a dilemma for game theory: how do we or the players know which equilibrium to choose? This question has been a focal point for research in game theory since its inception. Modern theorists incline to the view that equilibrium is arrived at through learning: people have many opportunities to play various games, and through experience learn which is the "right" equilibrium.

Mixed Strategies

While the battle of the sexes has too many equilibria, what about the game below?

| Player 1 \ Player 2 | Canterbury | Paris |
|---|---|---|
| Canterbury | -1,1 | 1,-1 |
| Paris | 1,-1 | -1,1 |

You may recognize this game as the Matching Pennies game. There is, however, a more colorful story from Conan Doyle's Sherlock Holmes story The Final Problem. Moriarty (player 2) is pursuing Holmes (player 1) by train in order to kill Holmes and save himself. The train stops at Canterbury on the way to Paris. If both stop at Canterbury, Moriarty catches Holmes and wins the game (-1 for Holmes, 1 for Moriarty). Similarly if both stop at Paris. Conversely, if they stop at different places, Holmes escapes (1 for Holmes and -1 for Moriarty).
This is an example of a zero sum game: one player's loss is another player's gain.

In the story, Holmes stops at Canterbury, while Moriarty continues on to Paris. But it is easy to see that this is not a Nash equilibrium: Moriarty should have anticipated that Holmes would get off at Canterbury, and so his best response was to get off also at Canterbury. As Holmes says "There are limits, you see, to our friend's intelligence. It would have been a coup-de-maître had he deduced what I would deduce and acted accordingly." However, this game does not have any Nash equilibrium in which each player simply picks one of the two stops: whichever player loses should anticipate losing, and so choose a different strategy.

What do game theorists make of a game without such a Nash equilibrium? The answer is that there are more ways to play the game than are represented in the matrix. Instead of simply choosing Canterbury or Paris, a player can flip a coin to decide what to do. This is an example of a random or mixed strategy, which simply means a particular way of choosing randomly among the different strategies. It is a mathematical fact, although not an easy one to prove, that every game with a finite number of players and finite number of strategies has at least one mixed strategy Nash equilibrium.

The mixed strategy equilibrium of the matching pennies game is well known: each player should randomize 50-50 between the two alternatives. If Moriarty randomizes 50-50 between Canterbury and Paris, then Holmes has a 50% chance of winning and 50% chance of losing regardless of whether he chooses to stop at Canterbury or Paris. Since he is indifferent between the two choices, he does not mind flipping a coin to decide between the two, and so there is no better choice than for him to randomize 50-50 himself. Similarly, when Holmes is randomizing 50-50, there is no better choice for Moriarty than to do the same. Each player, correctly anticipating that his opponent will randomize 50-50, can do no better than to do the same.
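The indifference argument above can be verified with a few lines of arithmetic. The sketch below is an illustration rather than part of the original text: the function names `holmes_expected` and `moriarty_expected` are assumptions, and the payoffs are those of the Canterbury/Paris matrix. It computes each player's expected payoff from each stop when the opponent randomizes, using exact fractions.

```python
from fractions import Fraction

STOPS = ["Canterbury", "Paris"]
# PAYOFFS[h][m] = (Holmes's payoff, Moriarty's payoff) when Holmes picks
# STOPS[h] and Moriarty picks STOPS[m], as in the Canterbury/Paris matrix.
PAYOFFS = [
    [(-1, 1), (1, -1)],
    [(1, -1), (-1, 1)],
]


def holmes_expected(q_canterbury):
    """Holmes's expected payoff from each stop when Moriarty stops at
    Canterbury with probability q_canterbury."""
    q = [q_canterbury, 1 - q_canterbury]
    return {STOPS[h]: sum(q[m] * PAYOFFS[h][m][0] for m in range(2))
            for h in range(2)}


def moriarty_expected(p_canterbury):
    """Moriarty's expected payoff from each stop when Holmes stops at
    Canterbury with probability p_canterbury."""
    p = [p_canterbury, 1 - p_canterbury]
    return {STOPS[m]: sum(p[h] * PAYOFFS[h][m][1] for h in range(2))
            for m in range(2)}


half = Fraction(1, 2)
print(holmes_expected(half))             # same expected payoff from either stop
print(moriarty_expected(half))           # same expected payoff from either stop
print(holmes_expected(Fraction(3, 4)))   # an uneven mix makes one stop better
```

Against a 50-50 opponent both stops give the same expected payoff, so neither player can gain by deviating. If Moriarty instead favoured Canterbury (probability 3/4 in the last line), Holmes would strictly prefer Paris, which is why an uneven mix cannot be part of an equilibrium.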
So perhaps Holmes (or Conan Doyle) is not such a clever game theorist after all.

Mixed strategy equilibrium points out an aspect of Nash equilibrium that is often confusing for beginners. Nash equilibrium does not require a positive reason for playing the equilibrium strategy. In matching pennies, Holmes and Moriarty are indifferent: they have no positive reason to randomize 50-50 rather than doing something else. However, it is only an equilibrium if they both happen to randomize 50-50. The central thing to keep in mind is that Nash equilibrium does not attempt to explain why players play the way they do. It merely proposes a way of playing so that no player would have an incentive to play differently. Like the issue of multiple equilibria, theories that provide a positive reason for players to be at equilibrium have been one of the staples of game theory research, and the notion of players learning over time has played a central role in this research.

Source: David K. Levine

Text 4: Game Theory

Game theory is a bag of analytical tools designed to help us understand the phenomena that we observe when decision-makers interact. The basic assumptions that underlie the theory are that decision-makers pursue well-defined exogenous objectives (they are rational) and take into account their knowledge or expectations of other decision-makers' behavior (they reason strategically).

The models of game theory are highly abstract representations of classes of real-life situations. Their abstractness allows them to be used to study a wide range of phenomena. For example, the theory of Nash equilibrium (Chapter 2) has been used to study oligopolistic and political competition. The theory of mixed strategy equilibrium (Chapter 3) has been used to explain the distributions of tongue length in bees and tube length in flowers. The theory of repeated games (Chapter 8) has been used to illuminate social phenomena like threats and promises.
The theory of the core (Chapter 13) reveals a sense in which the outcome of trading under a price system is stable in an economy that contains many agents.

The boundary between pure and applied game theory is vague; some developments in the pure theory were motivated by issues that arose in applications. Nevertheless we believe that such a line can be drawn. Though we hope that this book appeals to those who are interested in applications, we stay almost entirely in the territory of "pure" theory. The art of applying an abstract model to a real-life situation should be the subject of another tome.

Game theory uses mathematics to express its ideas formally. However, the game theoretical ideas that we discuss are not inherently mathematical; in principle a book could be written that had essentially the same content as this one and was devoid of mathematics. A mathematical formulation makes it easy to define concepts precisely, to verify the consistency of ideas, and to explore the implications of assumptions. Consequently our style is formal: we state definitions and results precisely, interspersing them with motivations and interpretations of the concepts.

The use of mathematical models creates independent mathematical interest. In this book, however, we treat game theory not as a branch of mathematics but as a social science whose aim is to understand the behavior of interacting decision-makers; we do not elaborate on points of mathematical interest. From our point of view the mathematical results are interesting only if they are confirmed by intuition.

Games and Solutions

A game is a description of strategic interaction that includes the constraints on the actions that the players can take and the players' interests, but does not specify the actions that the players do take. A solution is a systematic description of the outcomes that may emerge in a family of games. Game theory suggests reasonable solutions for classes of games and examines their properties.
We study four groups of game theoretic models, indicated by the titles of the four parts of the book: strategic games (Part I), extensive games with and without perfect information (Parts II and III), and coalitional games (Part IV). We now explain some of the dimensions on which this division is based.

Noncooperative and Cooperative Games

In all game theoretic models the basic entity is a player. A player may be interpreted as an individual or as a group of individuals making a decision. Once we define the set of players, we may distinguish between two types of models: those in which the sets of possible actions of individual players are primitives (Parts I, II, and III) and those in which the sets of possible joint actions of groups of players are primitives (Part IV). Sometimes models of the first type are referred to as "noncooperative", while those of the second type are referred to as "cooperative" (though these terms do not express well the differences between the models).

The numbers of pages that we devote to each of these branches of the theory reflect the fact that in recent years most research has been devoted to noncooperative games; it does not express our evaluation of the relative importance of the two branches. In particular, we do not share the view of some authors that noncooperative models are more "basic" than cooperative ones; in our opinion, neither group of models is more "basic" than the other.

Strategic Games and Extensive Games

In Part I we discuss the concept of a strategic game and in Parts II and III the concept of an extensive game. A strategic game is a model of a situation in which each player chooses his plan of action once and for all, and all players' decisions are made simultaneously (that is, when choosing a plan of action each player is not informed of the plan of action chosen by any other player).
By contrast, the model of an extensive game specifies the possible orders of events; each player can consider his plan of action not only at the beginning of the game but also whenever he has to make a decision.

Games with Perfect and Imperfect Information

The third distinction that we make is between the models in Parts II and III. In the models in Part II the participants are fully informed about each other's moves, while in the models in Part III they may be imperfectly informed. The former models have firmer foundations. The latter were developed intensively only in the 1980s; we put less emphasis on them not because they are less realistic or important but because they are less mature.

Game Theory and the Theory of Competitive Equilibrium

To clarify further the nature of game theory, we now contrast it with the theory of competitive equilibrium that is used in economics. Game theoretic reasoning takes into account the attempts by each decision-maker to obtain, prior to making his decision, information about the other players' behavior, while competitive reasoning assumes that each agent is interested only in some environmental parameters (such as prices), even though these parameters are determined by the actions of all agents.

To illustrate the difference between the theories, consider an environment in which the level of some activity (like fishing) of each agent depends on the level of pollution, which in turn depends on the levels of the agents' activities. In a competitive analysis of this situation we look for a level of pollution consistent with the actions that the agents take when each of them regards this level as given. By contrast, in a game theoretic analysis of the situation we require that each agent's action be optimal given the agent's expectation of the pollution created by the combination of his action and all the other agents' actions.

Source: A Course in Game Theory by Martin J. Osborne and Ariel Rubinstein (MIT Press, 1994).
Text 5: Strategy and Conflict – An Introductory Sketch to Game Theory

Some Basics

Game theory is a distinct and interdisciplinary approach to the study of human behavior. The disciplines most involved in game theory are mathematics, economics and the other social and behavioral sciences. Game theory (like computational theory and so many other contributions) was founded by the great mathematician John von Neumann. The first important book was The Theory of Games and Economic Behavior, which von Neumann wrote in collaboration with the great mathematical economist, Oskar Morgenstern. Certainly Morgenstern brought ideas from neoclassical economics into the partnership, but von Neumann, too, was well aware of them and had made other contributions to neoclassical economics.

A Scientific Metaphor

Since the work of John von Neumann, "games" have been a scientific metaphor for a much wider range of human interactions in which the outcomes depend on the interactive strategies of two or more persons, who have opposed or at best mixed motives. Among the issues discussed in game theory are

1. What does it mean to choose strategies "rationally" when outcomes depend on the strategies chosen by others and when information is incomplete?
2. In "games" that allow mutual gain (or mutual loss) is it "rational" to cooperate to realize the mutual gain (or avoid the mutual loss) or is it "rational" to act aggressively in seeking individual gain regardless of mutual gain or loss?
3. If the answers to 2) are "sometimes," in what circumstances is aggression rational and in what circumstances is cooperation rational?
4. In particular, do ongoing relationships differ from one-off encounters in this connection?
5. Can moral rules of cooperation emerge spontaneously from the interactions of rational egoists?
6. How does real human behavior correspond to "rational" behavior in these cases?
7. If it differs, in what direction?
Are people more cooperative than would be "rational?" More aggressive? Both?

Rationality

The key link between neoclassical economics and game theory was and is rationality. Neoclassical economics is based on the assumption that human beings are absolutely rational in their economic choices. Specifically, the assumption is that each person maximizes her or his rewards -- profits, incomes, or subjective benefits -- in the circumstances that she or he faces. This hypothesis serves a double purpose in the study of the allocation of resources. First, it narrows the range of possibilities somewhat. Absolutely rational behavior is more predictable than irrational behavior. Second, it provides a criterion for evaluation of the efficiency of an economic system. If the system leads to a reduction in the rewards coming to some people, without producing more than compensating rewards to others (costs greater than benefits, broadly) then something is wrong. Pollution, the overexploitation of fisheries, and inadequate resources committed to research can all be examples of this.

In neoclassical economics, the rational individual faces a specific system of institutions, including property rights, money, and highly competitive markets. These are among the "circumstances" that the person takes into account in maximizing rewards. The implication of property rights, a money economy and ideally competitive markets is that the individual need not consider her or his interactions with other individuals. She or he need consider only her or his own situation and the "conditions of the market." But this leads to two problems. First, it limits the range of the theory. Wherever competition is restricted (but there is no monopoly), or property rights are not fully defined, consensus neoclassical economic theory is inapplicable, and neoclassical economics has never produced a generally accepted extension of the theory to cover these cases.
Decisions taken outside the money economy were also problematic for neoclassical economics.

Game theory was intended to confront just this problem: to provide a theory of economic and strategic behavior when people interact directly, rather than "through the market." In game theory, "games" have always been a metaphor for more serious interactions in human society. Game theory may be about poker and baseball, but it is not about chess, and it is about such serious interactions as market competition, arms races and environmental pollution. But game theory addresses the serious interactions using the metaphor of a game: in these serious interactions, as in games, the individual's choice is essentially a choice of a strategy, and the outcome of the interaction depends on the strategies chosen by each of the participants. On this interpretation, a study of games may indeed tell us something about serious interactions. But how much?

In neoclassical economic theory, to choose rationally is to maximize one's rewards. From one point of view, this is a problem in mathematics: choose the activity that maximizes rewards in given circumstances. Thus we may think of rational economic choices as the "solution" to a problem of mathematics. In game theory, the case is more complex, since the outcome depends not only on my own strategies and the "market conditions," but also directly on the strategies chosen by others. We may still think of the rational choice of strategies as a mathematical problem -- maximize the rewards of a group of interacting decision makers -- and so we again speak of the rational outcome as the "solution" to the game.

The Prisoners' Dilemma

Recent developments in game theory, especially the award of the Nobel Memorial Prize in 1994 to three game theorists and the death of A. W. Tucker, in January, 1995, at 89, have renewed the memory of its beginnings.
Although the history of game theory can be traced back earlier, the key period for the emergence of game theory was the decade of the 1940s. The publication of The Theory of Games and Economic Behavior was a particularly important step, of course. But in some ways, Tucker's invention of the Prisoners' Dilemma example was even more important. This example, which can be set out in one page, could be the most influential one page in the social sciences in the latter half of the twentieth century.

This remarkable innovation did not come out in a research paper, but in a classroom. As S. J. Hagenmayer wrote in the Philadelphia Inquirer ("Albert W. Tucker, 89, Famed Mathematician," Thursday, Feb. 2, 1995, p. B7), "In 1950, while addressing an audience of psychologists at Stanford University, where he was a visiting professor, Mr. Tucker created the Prisoners' Dilemma to illustrate the difficulty of analyzing" certain kinds of games. "Mr. Tucker's simple explanation has since given rise to a vast body of literature in subjects as diverse as philosophy, ethics, biology, sociology, political science, economics, and, of course, game theory."

Tucker began with a little story, like this: two burglars, Bob and Al, are captured near the scene of a burglary and are given the "third degree" separately by the police. Each has to choose whether or not to confess and implicate the other. If neither man confesses, then both will serve one year on a charge of carrying a concealed weapon. If each confesses and implicates the other, both will go to prison for 10 years. However, if one burglar confesses and implicates the other, and the other burglar does not confess, the one who has collaborated with the police will go free, while the other burglar will go to prison for 20 years on the maximum charge.

The strategies in this case are: confess or don't confess. The payoffs (penalties, actually) are the sentences served.
We can express all this compactly in a "payoff table" of a kind that has become pretty standard in game theory. Here is the payoff table for the Prisoners' Dilemma game:

| Bob \ Al | confess | don't |
|---|---|---|
| confess | 10,10 | 0,20 |
| don't | 20,0 | 1,1 |

The table is read like this: Each prisoner chooses one of the two strategies. In effect, Al chooses a column and Bob chooses a row. The two numbers in each cell tell the outcomes for the two prisoners when the corresponding pair of strategies is chosen. The number to the left of the comma tells the payoff to the person who chooses the rows (Bob) while the number to the right of the comma tells the payoff to the person who chooses the columns (Al). Thus (reading down the first column) if they both confess, each gets 10 years, but if Al confesses and Bob does not, Bob gets 20 and Al goes free.

So: how to solve this game? What strategies are "rational" if both men want to minimize the time they spend in jail? Al might reason as follows: "Two things can happen: Bob can confess or Bob can keep quiet. Suppose Bob confesses. Then I get 20 years if I don't confess, 10 years if I do, so in that case it's best to confess. On the other hand, if Bob doesn't confess, and I don't either, I get a year; but in that case, if I confess I can go free. Either way, it's best if I confess. Therefore, I'll confess."

But Bob can and presumably will reason in the same way -- so that they both confess and go to prison for 10 years each. Yet, if they had acted "irrationally," and kept quiet, they could each have gotten off with one year.

What has happened here is that the two prisoners have fallen into something called a "dominant strategy equilibrium."

Dominant Strategy: Let an individual player in a game evaluate separately each of the strategy combinations he may face, and, for each combination, choose from his own strategies the one that gives the best payoff.
If the same strategy is chosen for each of the different combinations of strategies the player might face, that strategy is called a "dominant strategy" for that player in that game.

Dominant Strategy Equilibrium: If, in a game, each player has a dominant strategy, and each player plays the dominant strategy, then that combination of (dominant) strategies and the corresponding payoffs are said to constitute the dominant strategy equilibrium for that game.

In the Prisoners' Dilemma game, to confess is a dominant strategy, and when both prisoners confess, that is a dominant strategy equilibrium.

Issues With Respect to the Prisoners' Dilemma

This remarkable result -- that individually rational action results in both persons being made worse off in terms of their own self-interested purposes -- is what has made the wide impact in modern social science. For there are many interactions in the modern world that seem very much like that, from arms races through road congestion and pollution to the depletion of fisheries and the overexploitation of some subsurface water resources. These are all quite different interactions in detail, but are interactions in which (we suppose) individually rational action leads to inferior results for each person, and the Prisoners' Dilemma suggests something of what is going on in each of them. That is the source of its power.

Having said that, we must also admit candidly that the Prisoners' Dilemma is a very simplified and abstract -- if you will, "unrealistic" -- conception of many of these interactions. A number of critical issues can be raised with the Prisoners' Dilemma, and each of these issues has been the basis of a large scholarly literature:

The Prisoners' Dilemma is a two-person game, but many of the applications of the idea are really many-person interactions.

We have assumed that there is no communication between the two prisoners.
If they could communicate and commit themselves to coordinated strategies, we would expect a quite different outcome.

In the Prisoners' Dilemma, the two prisoners interact only once. Repetition of the interactions might lead to quite different results.

Compelling as the reasoning that leads to the dominant strategy equilibrium may be, it is not the only way this problem might be reasoned out. Perhaps it is not really the most rational answer after all.

"Solutions" to Nonconstant Sum Games

The maximin strategy is a "rational" solution to all two-person zero sum games. However, it is not a solution for nonconstant sum games. The difficulty is that there are a number of different solution concepts for nonconstant sum games, and no one is clearly the "right" answer in every case. The different solution concepts may overlap, though.

We have already seen one possible solution concept for nonconstant sum games: the dominant strategy equilibrium. Let's take another look at the example of the two mineral water companies. Their payoff table was:

| Apollinaris \ Perrier | Price=$1 | Price=$2 |
|---|---|---|
| Price=$1 | 0,0 | 5000,-5000 |
| Price=$2 | -5000,5000 | 0,0 |

We saw that the maximin solution was for each company to cut price to $1. That is also a dominant strategy equilibrium. It's easy to check that: Apollinaris can reason that either Perrier cuts to $1 or not. If they do, Apollinaris is better off cutting to $1 to avoid a loss of $5000. But if Perrier doesn't cut, Apollinaris can earn a profit of $5000 by cutting. And Perrier can reason in the same way, so cutting is a dominant strategy for each competitor.

But this is, of course, a very simplified -- even unrealistic -- conception of price competition. Let's look at a more complicated, perhaps more realistic pricing example:

Another Price Competition Example

Following a long tradition in economics, we will think of two companies selling "widgets" at a price of one, two, or three dollars per widget.
The payoffs are profits -- after allowing for costs of all kinds -- and are shown in Table 5-1. The general idea behind the example is that the company that charges a lower price will get more customers and thus, within limits, more profits than the high-price competitor. (This example follows one by Warren Nutter.)

Table 5-1
                          Acme Widgets
                     p=1        p=2        p=3
Widgeon      p=1     0, 0      50, -10    40, -20
Widgets      p=2   -10, 50     20, 20     90, 10
             p=3   -20, 40     10, 90     50, 50

(In each cell, the first payoff is Widgeon's and the second is Acme's.)

We can see that this is not a zero-sum game. Profits may add up to 100, 20, 40, or zero, depending on the strategies that the two competitors choose. Thus, the maximin solution does not apply. We can also see fairly easily that there is no dominant strategy equilibrium. Widgeon can reason as follows: if Acme were to choose a price of 3, then Widgeon's best price is 2, but otherwise Widgeon's best price is 1 -- neither is dominant.

Nash Equilibrium

We will need another, broader concept of equilibrium if we are to do anything with this game. The concept we need is called the Nash Equilibrium, after Nobel Laureate (in economics) and mathematician John Nash. Nash, a student of Tucker's, contributed several key concepts to game theory around 1950. The Nash Equilibrium conception was one of these, and is probably the most widely used "solution concept" in game theory.

Nash Equilibrium: If there is a set of strategies with the property that no player can benefit by changing her strategy while the other players keep their strategies unchanged, then that set of strategies and the corresponding payoffs constitute the Nash Equilibrium.

Let's apply that definition to the widget-selling game. First, for example, we can see that the strategy pair p=3 for each player (bottom right) is not a Nash Equilibrium. From that pair, each competitor can benefit by cutting price, if the other player keeps her strategy unchanged. Or consider the bottom middle -- Widgeon charges $3 but Acme charges $2. From that pair, Widgeon benefits by cutting to $1.
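The cell-by-cell check just described can be sketched in a few lines of Python. This is only an illustration, assuming the payoffs are read from Table 5-1 with Widgeon's profit listed first in each cell. The names PAYOFFS, profitable_deviations and nash_equilibria are hypothetical helpers, not part of the original text.

```python
# Widget-selling game (Table 5-1), as read from the text.
# Keys are (widgeon_price, acme_price); values are (widgeon_profit, acme_profit).
PAYOFFS = {
    (1, 1): (0, 0),    (1, 2): (50, -10), (1, 3): (40, -20),
    (2, 1): (-10, 50), (2, 2): (20, 20),  (2, 3): (90, 10),
    (3, 1): (-20, 40), (3, 2): (10, 90),  (3, 3): (50, 50),
}
PRICES = (1, 2, 3)


def profitable_deviations(widgeon, acme):
    """List unilateral price changes that raise the deviating company's own profit."""
    base_w, base_a = PAYOFFS[(widgeon, acme)]
    found = []
    for p in PRICES:
        # Widgeon changes its price while Acme's price stays fixed.
        if p != widgeon and PAYOFFS[(p, acme)][0] > base_w:
            found.append(("Widgeon", p))
        # Acme changes its price while Widgeon's price stays fixed.
        if p != acme and PAYOFFS[(widgeon, p)][1] > base_a:
            found.append(("Acme", p))
    return found


def nash_equilibria():
    """Strategy pairs from which neither company has a profitable deviation."""
    return [(w, a) for w in PRICES for a in PRICES
            if not profitable_deviations(w, a)]


if __name__ == "__main__":
    for w in PRICES:
        for a in PRICES:
            print((w, a), profitable_deviations(w, a) or "no profitable deviation")
    print("Nash equilibria:", nash_equilibria())
```

Calling profitable_deviations(3, 2) reproduces the "bottom middle" argument: Widgeon gains by cutting its price while Acme stays at $2.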
In this way, we can eliminate any strategy pair except the upper left, at which both competitors charge $1.

We see that the Nash Equilibrium in the widget-selling game is a low-price, zero-profit equilibrium. Many economists believe that result is descriptive of real, highly competitive markets -- although there is, of course, a great deal about this example that is still "unrealistic."

Let's go back and take a look at that dominant-strategy equilibrium in Table 4-2. We will see that it, too, is a Nash Equilibrium. (Check it out.) Also, look again at the dominant-strategy equilibrium in the Prisoners' Dilemma. It, too, is a Nash Equilibrium. In fact, any dominant strategy equilibrium is also a Nash Equilibrium. The Nash Equilibrium is an extension of the concepts of dominant strategy equilibrium and of the maximin solution for zero-sum games.

It would be nice to say that that answers all our questions. But, of course, it does not. Here is just the first of the questions it does not answer: could there be more than one Nash Equilibrium in the same game? And what if there were more than one?

Games with Multiple Nash Equilibria

Here is another example to try the Nash Equilibrium approach on. Two radio stations (WIRD and KOOL) have to choose formats for their broadcasts. There are three possible formats: Country-Western (CW), Industrial Music (IM) or all-news (AN). The audiences for the three formats are 50%, 30%, and 20%, respectively. If the stations choose the same format, they will split the audience for that format equally, while if they choose different formats, each will get the total audience for its own format. Payoffs are proportionate to audience shares. The payoffs (audience shares) are in the next table.

                       KOOL
               CW        IM        AN
WIRD   CW    25, 25    50, 30    50, 20
       IM    30, 50    15, 15    30, 20
       AN    20, 50    20, 30    10, 10

(In each cell, the first payoff is WIRD's and the second is KOOL's.)

You should be able to verify that this is a non-constant sum game, and that there are no dominant strategy equilibria.
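The two verifications suggested above can be sketched as follows. This is only a sketch, assuming WIRD is the row player and its share is listed first in each cell. The names SHARES, payoff_sums, best_replies and dominant_strategies are hypothetical and introduced here for illustration.

```python
# Radio-format game from the table above.
# Keys are (wird_format, kool_format); values are (wird_share, kool_share).
FORMATS = ("CW", "IM", "AN")
SHARES = {
    ("CW", "CW"): (25, 25), ("CW", "IM"): (50, 30), ("CW", "AN"): (50, 20),
    ("IM", "CW"): (30, 50), ("IM", "IM"): (15, 15), ("IM", "AN"): (30, 20),
    ("AN", "CW"): (20, 50), ("AN", "IM"): (20, 30), ("AN", "AN"): (10, 10),
}


def payoff_sums():
    """Set of total payoffs over all cells; a single element would mean constant sum."""
    return {wird + kool for (wird, kool) in SHARES.values()}


def best_replies(player, other_choice):
    """Formats that maximise one station's share against a fixed rival format.

    player 0 is WIRD (row), player 1 is KOOL (column).
    """
    def share(own):
        cell = (own, other_choice) if player == 0 else (other_choice, own)
        return SHARES[cell][player]

    top = max(share(f) for f in FORMATS)
    return {f for f in FORMATS if share(f) == top}


def dominant_strategies(player):
    """Formats that are a best reply to every rival format (empty if none exists)."""
    common = set(FORMATS)
    for other in FORMATS:
        common &= best_replies(player, other)
    return common


if __name__ == "__main__":
    print("payoff sums:", sorted(payoff_sums()))
    print("WIRD dominant strategies:", dominant_strategies(0))
    print("KOOL dominant strategies:", dominant_strategies(1))
```

More than one distinct total means the game is not constant sum, and an empty set from dominant_strategies for either station means no dominant strategy equilibrium can exist.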
If we find the Nash Equilibria by elimination, we find that there are two of them -- the upper middle cell and the middle-left one, in both of which one station chooses CW and gets a market share of 50 and the other chooses IM and gets 30. It doesn't matter which station chooses which format.

It may seem that this makes little difference, since

- the total payoff is the same in both cases, namely 80
- both are efficient, in that there is no larger total payoff than 80

There are games with multiple Nash Equilibria in which neither of these things is so, as we will see in some later examples. But even when they are both true, the multiplicity of equilibria creates a danger. The danger is that both stations will choose the more profitable CW format -- and split the market, getting only 25 each! Actually, there is an even worse danger: each station might assume that the other station will choose CW, and each choose IM, splitting that market and leaving each with a market share of just 15.

More generally, the problem for the players is to figure out which equilibrium will in fact occur. In still other words, a game of this kind raises a "coordination problem": how can the two stations coordinate their choices of strategies and avoid the danger of a mutually inferior outcome such as splitting the market? Games that present coordination problems are sometimes called coordination games.

From a mathematical point of view, this multiplicity of equilibria is a problem. For a "solution" to a "problem," we want one answer, not a family of answers. And many economists would also regard it as a problem that has to be solved by some restriction of the assumptions that would rule out the multiple equilibria. But, from a social scientific point of view, there is another interpretation. Many social scientists (myself included) believe that coordination problems are quite real and important aspects of human social life.
From this point of view, we might say that multiple Nash equilibria provide us with a possible "explanation" of coordination problems. That would be an important positive finding, not a problem!

That seems to have been Thomas Schelling's idea. Writing around 1960, Schelling proposed that any bit of information shared by all participants in a coordination game, and enabling them all to focus on the same equilibrium, might solve the problem. In determining a national boundary, for example, the highest mountain between the two countries would be an obvious enough landmark that both might focus on setting the boundary there -- even if the mountain were not very high at all.

Another source of a hint that could solve a coordination game is social convention. Here is a game in which social convention could be quite important. That game has a long name: "Which Side of the Road to Drive On?" In Britain, we know, people drive on the left side of the road; in the US they drive on the right. In the abstract, how do we choose which side to drive on? There are two strategies: drive on the left side and drive on the right side. There are two possible outcomes: the two cars pass one another without incident or they crash. We arbitrarily assign a value of one each to passing without problems and of -10 each to a crash. Here is the payoff table:

                   Mercedes
                L           R
Buick   L     1, 1      -10, -10
        R   -10, -10      1, 1

Verify that LL and RR are both Nash equilibria. But, if we do not know which side to choose, there is some danger that we will choose LR or RL at random and crash. How can we know which side to choose? The answer is, of course, that for this coordination game we rely on social convention. Conversely, we know that in this game, social convention is very powerful and persistent, and no less so in the country where the solution is LL than in the country where it is RR.

We will see another example in which multiple Nash equilibria provide an explanation for a social problem.
First, however, we need to deal with one of the issues about the Prisoners' Dilemma that applies no less to all of our examples so far: they deal with only two players. We will first look at one way to extend the Prisoners' Dilemma to more than two players -- an invention of my own, so of course I rather like it -- and then explore a more general technique for extending Nash equilibria to games of many participants.
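Before leaving the two-player case, the "Which Side of the Road to Drive On?" game can serve as a compact illustration of both the multiple equilibria and the danger of choosing at random. The following is only a sketch. It treats a Nash equilibrium as a cell in which each driver's choice is a best reply to the other's, and it assumes, purely for illustration, that uncoordinated drivers pick each side with equal probability. The names DRIVING, pure_nash and expected_payoff_uniform are hypothetical.

```python
from itertools import product

# "Which Side of the Road to Drive On?" payoffs from the table in the text.
# Keys are (buick_side, mercedes_side); values are (buick_payoff, mercedes_payoff).
SIDES = ("L", "R")
DRIVING = {
    ("L", "L"): (1, 1),     ("L", "R"): (-10, -10),
    ("R", "L"): (-10, -10), ("R", "R"): (1, 1),
}


def pure_nash(game, rows, cols):
    """Cells in which each player's choice is a best reply to the other's choice."""
    result = []
    for r, c in product(rows, cols):
        row_best = max(game[(alt, c)][0] for alt in rows)  # best the row player can do against c
        col_best = max(game[(r, alt)][1] for alt in cols)  # best the column player can do against r
        if game[(r, c)] == (row_best, col_best):
            result.append((r, c))
    return result


def expected_payoff_uniform(game, rows, cols):
    """Row player's average payoff if every cell is equally likely (assumed random play)."""
    cells = list(product(rows, cols))
    return sum(game[cell][0] for cell in cells) / len(cells)


if __name__ == "__main__":
    print("Nash equilibria:", pure_nash(DRIVING, SIDES, SIDES))
    print("Average payoff under random choice:",
          expected_payoff_uniform(DRIVING, SIDES, SIDES))
```

Under that equal-probability assumption the average payoff is negative, whereas either convention (LL or RR) gives each driver a payoff of 1. That gap is one way to read the text's point about why convention matters in this game.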