Taking Stock of the Bilingual Lexicon


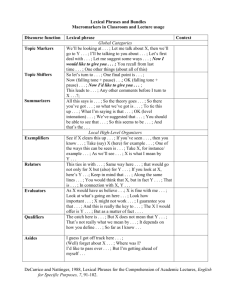

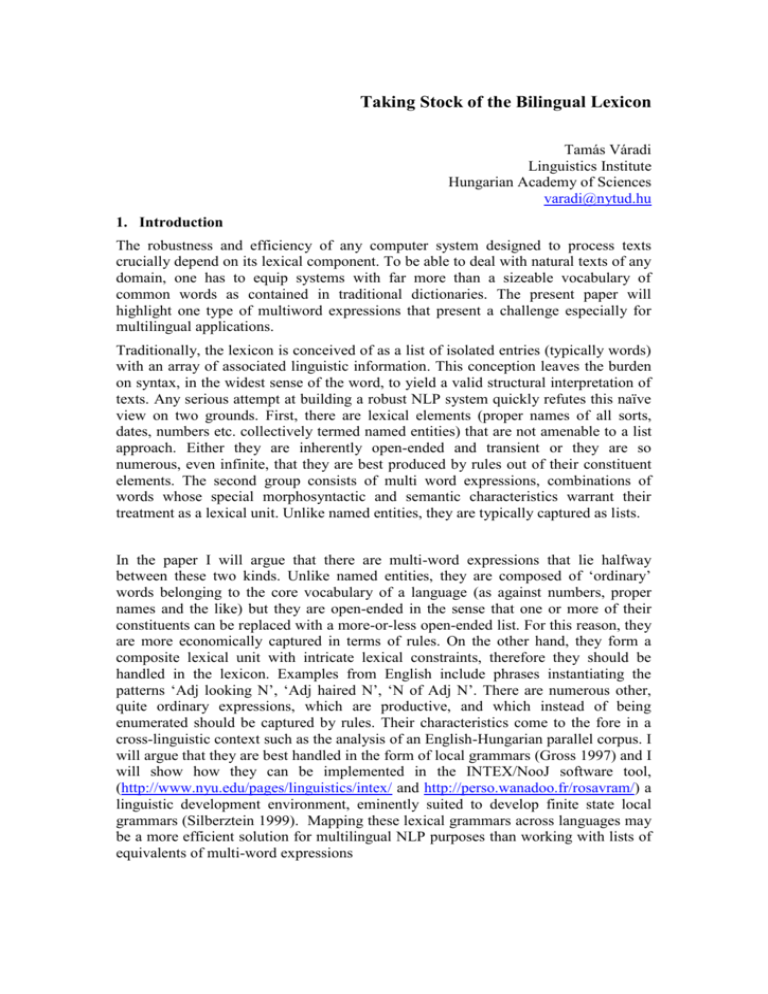

Tamás Váradi
Linguistics Institute, Hungarian Academy of Sciences
varadi@nytud.hu

1. Introduction

The robustness and efficiency of any computer system designed to process texts crucially depend on its lexical component. To be able to deal with natural texts of any domain, one has to equip systems with far more than a sizeable vocabulary of common words as contained in traditional dictionaries. The present paper will highlight one type of multi-word expression that presents a challenge especially for multilingual applications.

Traditionally, the lexicon is conceived of as a list of isolated entries (typically words) with an array of associated linguistic information. This conception leaves the burden on syntax, in the widest sense of the word, to yield a valid structural interpretation of texts. Any serious attempt at building a robust NLP system quickly refutes this naïve view on two grounds. First, there are lexical elements (proper names of all sorts, dates, numbers etc., collectively termed named entities) that are not amenable to a list approach. Either they are inherently open-ended and transient or they are so numerous, even infinite, that they are best produced by rules out of their constituent elements. The second group consists of multi-word expressions, combinations of words whose special morphosyntactic and semantic characteristics warrant their treatment as a lexical unit. Unlike named entities, they are typically captured as lists.

In the paper I will argue that there are multi-word expressions that lie halfway between these two kinds. Unlike named entities, they are composed of ‘ordinary’ words belonging to the core vocabulary of a language (as against numbers, proper names and the like) but they are open-ended in the sense that one or more of their constituents can be replaced with items from a more-or-less open-ended list. For this reason, they are more economically captured in terms of rules.
On the other hand, they form a composite lexical unit with intricate lexical constraints, and should therefore be handled in the lexicon. Examples from English include phrases instantiating the patterns ‘Adj looking N’, ‘Adj haired N’, ‘N of Adj N’. There are numerous other, quite ordinary expressions which are productive and which, instead of being enumerated, should be captured by rules. Their characteristics come to the fore in a cross-linguistic context such as the analysis of an English-Hungarian parallel corpus. I will argue that they are best handled in the form of local grammars (Gross 1997) and I will show how they can be implemented in the INTEX/NooJ software tool (http://www.nyu.edu/pages/linguistics/intex/ and http://perso.wanadoo.fr/rosavram/), a linguistic development environment eminently suited to developing finite state local grammars (Silberztein 1999). Mapping these lexical grammars across languages may be a more efficient solution for multilingual NLP purposes than working with lists of equivalents of multi-word expressions.

2. The shortcomings of commercial dictionaries

Even the best commercial dictionaries, whether published in book form or on electronic media, cannot serve as adequate lexical resources to support computational applications. The root of the problem is that dictionaries are designed to be used by humans, which means that they inevitably draw on the intelligence, world knowledge, and language intuitions of their users. Even if all lexicographic conventions are adequately converted into explicit form and the implicit inheritance relations within different parts of the entry are correctly explicated and applied to the constituent parts, there still remains a broad area where the content of the dictionaries can only be tapped fully through “human processing”.

2.1 Lexical gaps

Lexical information that is typically missing from dictionaries but may be rife in running texts includes the following.
2.1.1 Headwords

Proper names, numbers and dates are ruled out because they cannot be captured in a list or, even if they could in principle, the size of the list would prove prohibitive. Note that we should distinguish between names on the one hand and numbers and dates on the other. The latter form an infinite series whose members can be computed, whereas names are not inherently serial but constitute a set. Names may not be infinite in number, yet their exclusion from ordinary dictionaries is justified by space constraints and by the principle that such lexical elements do not belong to the common stock of words. Indeed, there are special dictionaries of place names and proper names, and on the back of city maps we may find a street index. It is interesting to note that there is no dictionary of street names.

2.1.2 Transparent, productive compounds and derived forms

Dictionaries traditionally do not list, among the headwords or even among the run-on entries, compound and derived forms that have a transparent structure and whose meaning can be calculated from the constituents. Out of the 5421 verbs in the MacMillan English Dictionary, fewer than a tenth have a derived form included in the list of headwords ending in –able.

2.1.3 Rare, one-off occurrences

Lexicographers seek to capture established usage. A word form, or the sense or use of a word form, must justify itself in terms of frequency before it is admitted to a dictionary. Language use is constantly changing, and the change is not easily perceptible because much of it consists in the emergence of new senses and uses of already existing word forms. It is therefore inevitable that at any moment there will be an array of new lexical phenomena that are not entered in dictionaries. Yet they are clearly in evidence in texts, which are simply the direct record of language use.
The lexical component of a robust human language technology application must be equipped to deal not only with established usage as sanctioned by published dictionaries but also with phenomena that have not made it into the dictionaries. There may be several reasons why they have not reached an imaginary currency threshold, but whatever their overall frequency, if they occur in a given text, an application should be able to deal with them the same way as humans do.

This issue brings us to the following question. How could new words or new uses of words arise at all if the lexical resources were a static set of items? Obviously, they are not static, and native speakers have a remarkable facility to interpret and to produce new lexical phenomena. They must be using some sort of online processing to compute the new meaning, whether in interpreting or in generating it. Languages typically provide a whole array of means (derivation, compounding, meaning shift etc.) that can be used to introduce new forms or uses in the mental lexicon (hiv**). The type of lexical rules proposed in the present paper will not only serve to make a computational application more robust but can also be seen as a model of this generative mental lexicon.

2.2 Incomplete coverage

A phenomenon that has perhaps received less attention, although it seems prevalent in conventional dictionaries, is the amount of vagueness and fuzziness in the definitions. Using partial lists to evoke the set of phenomena that a particular entry refers to or co-occurs with seems to be standard practice. Consider the following example taken at random from the Oxford Advanced Learner’s Dictionary (Wehmeier 2005).

Figure 1 Part of the dictionary entry GRATE from OALD7

Both the definitions of the verb grate and the noun grater employ partial lists to indicate the set of things that can be grated and the kinds of graters respectively.
No indication is given of the boundaries of the sets, except that astute readers might perhaps infer from the use of the word etc. that the set of ‘grateable’ objects is somewhat open-ended whereas the set of kinds of graters is not. Of course, there are more than just the two kinds of graters mentioned in the examples to the definition. On the other hand, not every grateable object name makes up a different kind of grater, so we are again left in the dark about the precise limits of the set of expressions. For NLP applications this is clearly unsatisfactory: we cannot rely on the encyclopaedic semantic knowledge of human readers to establish the extent of the two sets.

Note that the different size of the two sets calls for possibly different ways to deal with them. Assuming that we want to cover the set of conventional names for objects used to grate things with (that is, the lexicalised uses of the expression), we deal with a closed set of a handful of terms amenable to listing. The set of grateable objects presumably forms a much larger set with fuzzy edges, which can also be captured in lists but is more economically dealt with through an intensional specification such as, roughly, the set of solid, edible objects. The fuzziness creeps in through the vagueness in the cut-off point for both the features solid and edible.

3. The Scope of Phenomena

In order to develop first an intuitive grasp of the phenomena, consider the examples in 1).

1) English-speaking population
   French-speaking clients
   Spanish-speaking students

It would not be difficult to carry on with further examples, each embodying a pattern <language-name> speaking <person> or <group of persons>. It is a prototypical example for our purposes because the words are interdependent yet they admit of open choice in the selection of lexical items for certain positions. The phrases *speaking students, English-speaking, or English population are either not well-formed or do not mean the same as the full expression.
The meaning of the phrase is predominantly, if perhaps not wholly, compositional and for native speakers the structure may seem entirely transparent. However, in a bilingual context this transparency does not necessarily carry over to the other language. For example, the phrases in 1) are expressed in Hungarian as in 2).

2) Angol nyelvű lakosság
   English language-Adj population
   Francia nyelvű ügyfelek
   French language-Adj clients
   Spanyol nyelvű diákok
   Spanish language-Adj students

The Hungarian equivalent bears the same characteristics of semantic compositionality and structural transparency and is open-ended at the same points as the corresponding slots in the English pattern. It would be extremely wasteful to capture the bilingual correspondences in an itemized manner, particularly as the sets of expressions on both sides are open-ended anyway.

At the other end of the scale in terms of productivity and compositionality one finds phrases like those listed in 3).

3) English breakfast
   French fries
   German measles

Purely from a formal point of view, the phrases in 3) could be captured in the pattern <language name><noun>, but the co-occurrence relations between items in the two sets are limited to the extreme, so that once they are defined properly we are practically thrown back on the particular one-to-one combinations listed in 3). Note that if we had a set like 4), where one element is shared, it would still not make sense to factorize the shared word French because it enters into idiomatic semantic relations. In other words, the multi-word expressions are semantically non-compositional even in terms of English alone.

4) French bread
   French cricket
   French dressing
   French horn

The set of terms in 5) exemplifies the other end of the scale in terms of compositionality and syntactic transparency. They are adduced here to exemplify fully regular combinations of words in their literal meaning.
5) French schools
   French vote
   French driver
   French books

In between the wholly idiosyncratic expressions, which need to be listed in the lexicon, and the set of completely open-choice expressions, which form the province of syntax, there is a whole gamut of expressions that seem to straddle the lexicon-syntax divide. They are non-compositional in meaning to some extent and they also include elements that come from a more or less open set. Some of these open-choice slots in the expressions may be filled with items from sets that are either infinite (like numbers) or numerous enough to render them hopeless or wasteful for listing in a dictionary. For this reason, they are typically not fully specified in dictionaries, which have no means of representing them explicitly in any other way than by listing. For want of anything better, lexicographers rely on the linguistic intelligence of their readers to infer from a partial list the correct set of items that a given lexical unit applies to. Bolinger (1965) elegantly sums up this approach: “Dictionaries do not exist to define, but to help people grasp meaning, and for this purpose their main task is to supply a series of hints and associations that will relate the unknown to something known.” Adroit use of this technique may be quite successful with human readers but is obviously not viable for NLP purposes.

4. Local Grammars

For robust NLP applications we need to explicate the processing that human readers do in decoding dictionary entries. The most economical and sometimes the only viable means to achieve this goal is to integrate some kind of rule-based mechanism that would support the recognition as well as the generation of all the lexical units that conventional dictionaries evoke through a well-chosen partial set of data. Fortunately, the syntax of lexical phrases proves computationally tractable through finite state technology.
The framework of Local Grammar developed by Maurice Gross can be applied with great benefit. Local Grammars are heavily lexicalized finite state grammars devised to capture the intricacies of local syntactic or semantic phenomena. In the mid-nineties a very efficient tool, INTEX, was developed at LADL, Paris VII (Silberztein 1999). It has two components that are of primary importance to us: a complex lexical component (Silberztein 1993) and a graphical interface which supports the development of finite state transducers in the form of graphs (Silberztein 1999). Local grammars are typically defined in graphs, which are then compiled into efficient finite state automata or transducers. In fact, both the lexicon and the grammar are implemented as finite state transducers. This fact gives us the ideal tool to implement the very kind of lexicon we have been arguing for, one that includes both static entries and lexical grammars.

5. Some example grammars

The present article can only attempt to illustrate some relevant phenomena and the technology that should be applied to handle them.

5.1 Open-choice expressions

The set of expressions discussed in 1) can be captured with the graph in Figure 2. It shows a simple finite state automaton of a single path with three nodes from the initial symbol on the left to the end symbol on the right. When the graph is applied to a text, it covers all the text strings that match as the graph is traversed between the two points. Words in angle brackets stand for the lemma form; the shaded box represents a subgraph that can freely be embedded in graphs. The facility of graph embedding has the practical convenience that it allows the reuse of the subgraph in other contexts. At a more theoretical level, it introduces the power of recursion into grammars. Subgraphs may also be used to represent a semantic class, such as language name in the present case, and can be encoded in the dictionary with a semantic feature like +LANGNAME.
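As a rough illustration of the single-path graph just described, the following Python sketch walks a three-node path over the tokens of a text. It is not INTEX/NooJ code and does not reproduce the graph in the figure. The toy lexicon, the feature name PERSON, and the function names are assumptions made for this example; only the feature LANGNAME comes from the text.

```python
import re

# Toy lexicon: word form -> set of semantic features.
# LANGNAME is taken from the text; PERSON and all entries are assumptions.
LEXICON = {
    "english": {"LANGNAME"},
    "french": {"LANGNAME"},
    "spanish": {"LANGNAME"},
    "population": {"PERSON"},
    "clients": {"PERSON"},
    "students": {"PERSON"},
    "breakfast": set(),
}

# A single path of three nodes. A ("feature", X) node stands in for a
# subgraph or semantic class; a ("form", X) node requires a literal word.
LANG_SPEAKING_N = [
    ("feature", "LANGNAME"),
    ("form", "speaking"),
    ("feature", "PERSON"),
]


def node_matches(node, token):
    """Check one token against one node of the path."""
    kind, value = node
    word = token.lower()
    if kind == "feature":
        return value in LEXICON.get(word, set())
    return word == value


def find_matches(path, text):
    """Return every token window in the text that traverses the whole path."""
    tokens = re.findall(r"[A-Za-z]+", text)  # hyphens also split tokens
    hits = []
    for start in range(len(tokens) - len(path) + 1):
        window = tokens[start:start + len(path)]
        if all(node_matches(n, t) for n, t in zip(path, window)):
            hits.append(" ".join(window))
    return hits
```

Adding a language name or a person noun to the lexicon extends the coverage of the pattern without touching the path itself, which is the economy the rule-based treatment is meant to deliver.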
INTEX/NooJ dictionaries allow an arbitrary number of semantic features to be represented in the lexical entries, and these features can be used in the definition of local grammars as well. An alternative grammar using lexical semantic features is displayed in Figure 3.

Figure 2 INTEX/NooJ graph to capture phrases like English-speaking students

Figure 3 Representing the phrases in Figure 2 with semantic features

5.2 Bilingual applications

Finite state transducers can also be used to semantically disambiguate phrases in a bilingual context. Consider, for example, Adj+N combinations involving the word true, e.g. true friend, true value, true story, true north. Whatever distinctions monolingual dictionaries make between the combinations, as long as they are rendered with different terms in the target language depending on the co-occurrence patterns, they need to be teased out and treated separately for bilingual purposes.

Figure 4 INTEX transducer for semantic disambiguation

5.3 PP Postmodifiers

Postmodifier prepositional phrases present an interesting case for applying finite state transducers in the lexical component. In a monolingual setting, most of the phrases under study here would not be considered to belong to the lexicon. On the face of it, there is nothing in the phrases listed in 6) that would warrant their treatment in the lexicon rather than in syntax. In a bilingual context, however, structural incompatibility between languages may require a pattern to be rendered in the target language in a way that needs to be specified as a peculiarity of the source language pattern. In particular, Hungarian does not have prepositional phrases and uses extensive left branching in NP premodifying structures. English prepositional phrases are typically rendered in Hungarian with NPs featuring a head with a locative case ending. However, such NPs cannot directly premodify the head. In such a case, a support verb in participle form is used.
The choice of the particular support verb depends on features of the head noun as well as the prepositional phrase, which can be pretty subtle, as the examples in 6) show.

6) a) the people in the room
      a szobában lévő emberek
      ‘in the room being people’
   b) page in front of him
      előtte fekvő oldal
      ‘before-him lying page’
   c) the neighbours on the same floor
      az emeleten lakó szomszédok
      ‘on the floor living neighbours’
   d) the cold water from the tap
      a csapból folyó hideg víz
      ‘from the tap flowing cold water’

Note that example a) employs the semantically least specific support verb. It would also fit in example b), but it is often replaced with something slightly more specific for merely stylistic reasons, i.e. to avoid repetition. In c) the use of levő ‘being’ is also possible but it would suggest temporary location as against the generic lakó ‘living’. Example d) illustrates how the semantic characteristics of the location, i.e. its dynamic feature, can influence the support verb: *a csapból levő hideg víz is ill-formed.

All the cases in 6) show some syntactic-semantic constraint together with a varying amount of open choice in the selection of lexical items filling a position, characteristics that call for finite-state algorithmic treatment. The more non-specific the support verb, the more general the specification of the context. As a first approximation, one would apply the graph in Figure 5, which basically records the correspondences in 7).

7) N in N = N+INE levő N
   N on N = N+SUP levő N
   N at N = N+ADE levő N
   etc.

Here +INE, +SUP and +ADE stand for the inessive, superessive and adessive case respectively. To simplify matters, Figure 5 deals only with a bare N as the complement of the preposition; in fact the pattern could be enriched to take care of full NPs. Note that although the pattern seems very general, there is still the constraint that the PP should indicate location.
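The correspondences in 7) can be sketched as a small lookup-driven transfer rule. The Python below is only an illustration under stated assumptions: the glossary is a hypothetical hand-made word list drawn from the examples in 6), case is left as a symbolic tag (+INE, +SUP, +ADE) rather than realised as an actual suffix, and the default support verb levő is used throughout. It does not reproduce the INTEX graph itself, and it does not check the constraint that the PP must indicate location.

```python
# Preposition -> symbolic Hungarian case tag, following the correspondences in 7).
CASE_FOR_PREP = {"in": "INE", "on": "SUP", "at": "ADE"}

# Hypothetical mini-glossary (English noun -> Hungarian noun), based on 6).
GLOSSARY = {
    "people": "emberek",
    "room": "szoba",
    "neighbours": "szomszédok",
    "floor": "emelet",
}

# Least specific support verb; more specific ones need finer constraints.
SUPPORT_VERB = "levő"


def transfer(head, prep, complement):
    """Map English 'N prep N' to the schematic Hungarian 'N+CASE levő N'.

    Returns None when the preposition or either noun is not covered,
    so that uncovered input is never silently mistranslated.
    """
    case = CASE_FOR_PREP.get(prep)
    hu_head = GLOSSARY.get(head)
    hu_complement = GLOSSARY.get(complement)
    if case is None or hu_head is None or hu_complement is None:
        return None
    # Hungarian order: case-marked complement, support verb, head noun.
    return f"{hu_complement}+{case} {SUPPORT_VERB} {hu_head}"
```

For instance, `transfer("people", "in", "room")` yields the schematic string `szoba+INE levő emberek`, which a morphological generator would still have to realise as szobában levő emberek. A phrase such as the water from the tap falls outside the table and returns None, reflecting the point that a dynamic source calls for a different support verb.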
Figure 5 INTEX graph to translate N PP phrases into Hungarian

Figure 6 illustrates cases where the semantic class of the NP complement of the PP postmodifier acts as a distinguisher between senses in the source English phrase that have different equivalents on the Hungarian side: trade in XCURRENCY = XCURRENCYkereskedelem vs. trade in XPLACENAME = XPLACENAME-i kereskedés/forgalom. Similar examples can easily be cited from other semantic classes. Consider the man in black, the woman in silk blouse etc. vs. the man in the basement flat, the woman in room 215. Again, the rendering of such phrases is completely different across the two groups, hence the correspondence needs to be recorded. Yet it is futile to use just a handful of particular examples in a bilingual NLP system. While ordinary dictionaries may afford to merely evoke the pattern with a few more or less well-chosen examples, it is clearly inadequate to resort to this tactic when the goal is to build a robust NLP system.

Figure 6 word sense/translation equivalence selection with fst graphs

6. Lexical acquisition

Phrases of the pattern in 1) present an interesting difference in the items that fit the two open-choice slots. The set of language names is well defined in the sense that, apart from any possible fuzziness between dialects and languages, one can clearly enumerate them. The slot for the noun head is not so obvious to define. One can set up some sort of intensional definition (humans with an ability to speak a language, preferably their native language) but the particular set of lexical items that inherit this feature may not be so easy to enumerate. In any case, the pattern so defined covers potential items as well as actual ones. This is quite appropriate as a model of the mental lexicon. Sometimes, however, we want to establish the set of terms that actually occur in the particular position.
There may be cases when we do not have clear intuitions about class membership, as is the case, for example, with the different collocates of true in Figure 4. As a further example, consider the following fragment from the OALD7:

Hall … a concert / banqueting / sports / exhibition, etc. hall
There are three dining halls on campus.
The Royal Albert Hall
(BrE) A jumble sale will be held in the village hall on Saturday.
—see also city hall, dance hall, guildhall, music hall, town hall

As it may not be immediately clear what kinds of halls there may be in English, it is useful to explore the set of terms that are actually applied so that we may attempt to define the boundaries of the set by some intensional means. To facilitate the collection of data from a corpus, a local grammar of the type in Figure 7 can be compiled and run as a query on the corpus, yielding actual instances. These can then be fed back into the grammar, replacing the term <MOT> (a general placeholder for any word) with the words that figured in the result set of the previous query. Provided the corpus is adequately large, this procedure would ensure that the enhanced local grammar covers all instances and at the same time does not overgenerate spurious forms.

Figure 7 a graph to run as query for lexical acquisition

7. Conclusions

The paper explored a set of multi-word expressions that are typically underspecified in conventional dictionaries. A common characteristic of the phrases considered is that they show a degree of open-endedness in the set of lexical items that can occur in one or several positions within the structure. These sets are either clearly enumerable or defined along some intensional criteria that may be more or less vague. In any case, their class membership must be clearly specified for the purposes of a robust NLP application. The prevailing lexicographic practice of evoking the set through partial lists is clearly inadequate.
Fortunately, the phenomena can be efficiently treated with finite-state technology, and the paper showed examples of how a powerful software tool employing a sophisticated and robust lexical component can incorporate lexical grammars in its lexical resources. A lexicon with an architecture that combines lexical entries with lexical rules, both implemented in finite state technology, is not only efficient computationally but seems to be a viable model of the mental lexicon as well.

References

Bolinger, D. (1965). "The Atomization of Meaning." Language 41: 555-573.

Gross, M. (1997). "The Construction of Local Grammars." In E. Roche and Y. Schabes (eds.), Finite-State Language Processing. MIT Press: 329-352.

Silberztein, M. (1993). Dictionnaires électroniques et analyse automatique de textes: le système INTEX. Paris, Masson.

Silberztein, M. (1999). "Les graphes INTEX." Lingvisticae Investigationes XXII(1-2): 3-29.

Silberztein, M. (1999). "Text Indexation with INTEX." Computers and the Humanities 33(3): 265-280.

Wehmeier, S., Ed. (2005). Oxford Advanced Learner's Dictionary. Oxford, Oxford University Press.