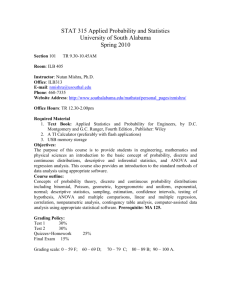

LinearModellingIPractical

Practical Linear Modelling I

1.

In this exercise you will reproduce analyses in the lecture to use R for correlation, linear regression and ANOVA on a simulated dataset. a.

Read the data set “sim30.txt” into a data frame using read.table() b.

Print out the data (it should have 3 columns “x”, “xx”, “y” and 30 rows); xx is the same as x except four of the observations have been replaced by outliers c.

Plot x vs y and xx vs y d.

Compute the Pearson and Spearman correlation coefficients and test them using

cor.test() e.

Use the lm() function to fit a linear model y ~ x and y ~xx f.

Use the anova() function to test the significance of the fits. g.

Show that the F statistic in anova() is the square of the T statistic in cor.test()

2.

In this exercise you will reproduce correlation, regression and ANOVA analyses in the lecture related to a dataset of Biochemistry measurements on mice. a.

Read in the data set “Biochemistry.txt” into a data frame b.

Print out the first few lines using head() and find the dimensions of the complete data set using dim() c.

Plot Biochem.HDL against Biochem.Tot.Cholesterol d.

Compute the Pearson and Spearman correlation coefficients for these variables and test them. e.

Fit a linear regression between HDL and Tot.Cholesterol using lm() and anova() and compare the results to correlation. f.

One important way of visualising the fit of the model is to plot the original observations against the predictions from the model. R will give you the necessary values; if f <- lm() then f is a list containing the elements f$fitted.values and f$y. However, you must first repeat the lm analysis using the additional option lm( formula, data, y=TRUE) .What happens if you plot the fitted values against the original data?. g.

Perform a one-way analysis of variance on HDL against Family, using lm() and anova().

Plot the observed against the residuals. h.

Perform a non-Parametric analysis of the dependence between HDL and Family.

3.

In this exercise we explore the Biochemistry data set in more detail. All the columns with the prefix “Biochem” are numerical measures of various biomarkers in blood serum. The remaining columns are covariates , ie variables collected at the same time that might influence the biochemistry measures. One of these is Family, used in Ex 2. Another is GENDER, the sex of the animal. a.

Fit the HDL ~ GENDER, where GENDER is treated first as a numeric variable and second as a factor. You can do this with the models Biochem.HDL ~ as.numeric(GENDER) and

Biochem.HDL ~ as.factor(GENDER) What do you notice about the anova() results of the two models? Why is this?

b.

Investigate which of the Biochem variables depend on GENDER, ie find out which chemical signatures are different for males and females. You should try to write an R function which will loop through the all variables with prefix Biochem, fit a linear model and store the results of the anova in a table. To do this, you will need to know a fact about the anova() function: If you store the results of anova in a variable, say a <-

anova() then a is a data frame and the P-value of the F test (in this example) is the element a[1,5] . Use this fact to extract the P-value from each model fit and put it into a data frame that you should create that also has the Biochem variable name as one of its columns. c.

Now estimate the heritabilities of all the Biochem variables by adapting the function you wrote in (b). d.

Modify your function to also compute the non-parametric analysis of Family effects.

4.

Investigate the multivariate capabilities of the cor() function. You should first read the help on this function by typing ?cor. You will also learn about a new plotting function image() a.

Find out how to compute all the pairwise correlations of the Biochem variables simultaneously, storing the results in a matrix. You may find it helpful to read in the reduced dataset “Biochemistry.reduced.txt” which only contains the biomarker data.

When you try to compute it you must decide how to treat missing data (cor() won’t compute a correlation if any data is missing, by default). b.

Now investigate the plotting function image() to display the results.

image(mat,axes=FALSE)

5.

This is a simulation exercise for investigate the degrees of freedom in a sum of squares of N

Normal variables where the sample mean has been subtracted from each value (ie the total sum of squares in the ANOVA). a.

Load in the example R code using source(“example.R”). This file contains several example functions., including df.example(df,nsim). This code will simulate the distributed of the squares of df mean-adjusted N(0,1) random variables and compare it to the distribution when the mean is no subtracted. The output of the programme is a list of two vectors, the unadjusted and mean.adjusted sums of squares.

Run the program, varying the df parameter, eg: out10 <- df.example(df=10,nsim=10000) b.

What do you notice about the mean of the adjusted and unadjusted SS? c.

Perform qq plots of the results of one run, comparing the adjusted and mean.adjusted data to the chi-squared distribution on df and df-1 degrees of freedom. It helps to also plot the line y=x, using abline(0,1)

6.

Optional Exercise for the mathematically minded (do this off line) a.

Show that the formulae given for least-squares estimators in the linear regression model are correct, eg by differentiating the residual SS. b.

Show that the LS estimates partition the TSS into FSS and RSS as in the lecture c.

Show that the Pearson Correlation Coefficient is related to the ANOVA F-statistic.