Squishin' Stuff

advertisement

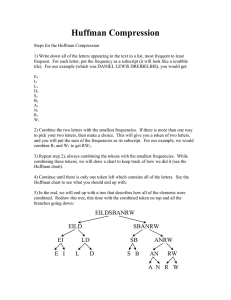

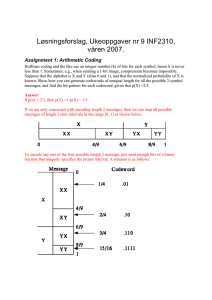

Squishin’ Stuff Huffman Compression Data Compression • • • Begin with a computer file (text, picture, movie, sound, executable, etc) Most file contain extra information or redundancy Goal: Reorganize the file to remove the excess information and redundancy • Lossless Compression: Compress the file in such a way that none of the information is lost (good for text files and executables) Lossy Compression: Allow some information to be thrown away in order to get a better level of compression (good for pictures, movies, or sounds) • • • • • Many, many, many algorithms out there to compress files Different types of files work best with different algorithms (need to consider the structure of the file and how things are connected). We’re going to focus on Huffman compression which is used many compression programs, most notably winzip. We’re just going to play with text files. Text Files • Each character is represented by one byte. Each byte is a sequence of 8 bits (1’s and 0’s) (ASCII code). • International standard for how a character is represented. • • • • A B ~ 3 01000001 01000010 01111110 00110011 • Most text files use less than 128 characters; this code has room for 256. Extra information!! • Goal: Use shorter codes to represent more frequent characters. • You have seen this before… Morse Code A .B -... C -.-. D -.. E . F ..-. G --. H .... I .. J .--K -.L .-.. M -- N -. O --P .--. Q --.R .-. S ... T U ..V ...W .-X -..Y -.-Z --.. 0 ----1 .---2 ..--3 ...-4 ....5 ..... 6 -.... 7 --... 8 ---.. 9 ----. Fullstop .-.-.Comma --..-Query ..--.. Example DANIEL LEWIS DREIBELBIS Break down the frequencies of each letter: Letter Frequency Letter Frequency A B D E I 1 2 2 4 4 L N R S W 3 1 1 2 1 Example Now assign short codes to the letters that occur most often: Letter Frequency Code E I L D S B A N R W 4 4 3 2 2 2 1 1 1 1 0 1 00 01 10 11 000 001 010 011 # of Digits 4 4 6 4 4 4 3 3 3 3 Total Digits: 38. Usual way: 21*8=168. Compression: 22.6% of the original size, or a 77.4% decrease. My name is now: 010000011000 000011110 01010011100011110 RAWA AWIS RINBABBE That didn’t work. If we do this, we need a way to know when a letter stops. Huffman coding provides this, though we’ll lose some compression. Huffman Coding Named after some guy called Huffman (1952). Use a tree to construct the code, and then use the tree to interpret the code. Huffman Chart E4 E4 E4 E4 EI8 I4 I4 I4 I4 EILD13 L3 L3 L3 LD5 D2 S2 D2 D2 EILDSBANRW21 S2 SB4 B2 SB4 B2 A1 SBANRW8 AN2 N1 R1 RW2 W1 LD5 ANRW4 ANRW4 4 4 SBANRW8 Issues and Problems What if all the frequencies were the same? AAAABBBBCCCCDDDDEEEEFFFFGGGG Or ABCDEFGABCDEFGABCDEFGABCDEFG Huffman can’t do much if the all the frequencies are the same, or if they are all similar (can’t give preference to higher occurring characters). Issues and Problems If there are patterns, you can use a different compression scheme to get rid of the patterns, then use Huffman coding to reduce the rest (this is what winzip does). If your text is random, then you are out of luck. FDSAFSDFASFASDFSFDADFSFFASFDDFASFASFDSFDS Also, method requires you to pass through the text twice. That’s time consuming. Adaptive Huffman Coding builds the frequency as you move along, and changes the tree as new information comes in. What’s the best you can do? • Obviously, there is a limit to how far down you can compress a file. • Assume your file has n different characters in it, say a1…an, each with probability p1…pn (so p1+p2+…+pn = 1). • The entropy of the file is defined to be negative of the sum of pilog2(pi). • Measures the least number of bits, on average, needed to represent a character. • For my name, the entropy is 3.12 (takes at least 3.12 bits per character to represent my name). Huffman gave an average of 3.19 bits per character. • Huffman compression will always give an average that is within one bit of entropy.