ETL processing

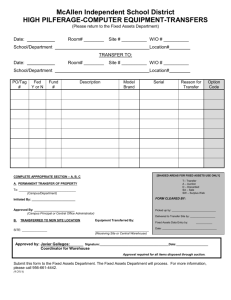

Dr. Bjarne Berg DRAFT

[Figure: two slides built on the Knowledge Management Architecture diagram. Source data (invoicing systems, purchasing systems, general ledger, other internal systems, external data sources) flows through extract, the operational data store, transform, and the data warehouse to data marts, query access tools, statistical programs, and data mining, with metadata and with data resource management and quality assurance spanning the whole flow.]

Slide "Source Data: Definition & Purpose": Tap information in multiple systems of varying ages, architectures, and technologies. Organize disparate data sources into a single database for user access. Types: internal, external, non-traditional.

Slide "Transformation System: Definition & Purpose", types:
• Translation / Attribution: applies descriptive information to data values (0 = male; 1 = female).
• Calculation / Derivation: derives new data values based on predefined algorithms, quantitative methods, and measurements.
• Aggregation / Summarization: sorts and totals rows to create summaries of broader data categories.
Transformation system: manipulates extracted and formatted data in order to add value by applying descriptors, aggregates, and calculations to the data tables.

Source: Bjarne Berg, “Introduction to Data Warehousing”, PriceWaterhouseCoopers, 2002

This diagram shows examples of source data systems. Source data can come from legacy systems, which have been around for 10-20 years and are typically mainframe based. Source data can also come from transactional processing systems, which are primarily client/server systems (as opposed to mainframe) and were probably developed within the past decade. Source data can also come from external sources. Source data can literally come from anywhere, even tape libraries with offline storage.

As we build the data warehouse, we decide what data we want to pull out of which sources and extract that data into the warehouse. This involves data extraction, transformation, and transfer and replication. Since the data from the different sources is not coded the same way and does not look the same, these processes provide the means to reconcile the data into a single, integrated database in the warehouse.

Each extract module is highly customized and tightly coupled to the unique source systems. There may be many source systems, and the data among them is inconsistent. Extract modules must accommodate this diversity in platforms and data structures. If the data source is in a relational database system, then reading the data is easy using a number of SQL-based tools and applications. If the data is stored in a proprietary system, then the file formats may not be known. Tools can be purchased to help with the extract step. A thorough cataloging of all sources and their operating systems is necessary. Then data extract tools are evaluated to determine how much coverage they can provide to support extract functions from proprietary systems. If extract tools are not available to do the job, then the project team must write the extract programs, often using Java connectors, PL/SQL, or C.

The objective is to extract and load only the data that has changed since the last time a snapshot was built. Because of the huge volumes of historical data contained in the warehouse, loading every record with every snapshot may quickly create a capacity constraint problem. For example, if you have 50 million customers, you should not duplicate every customer name and address with every database snapshot. You should isolate and capture only those names or addresses that have changed. Each extract module generates a common output format consistent with the data warehouse design.

Manage data quality: This could be described as a “data editing” function. It involves developing algorithms for cleansing / scrubbing, integrity checking, and formatting the data. Data standards for naming conventions, codes, and abbreviations are applied to establish consistency in data extracted from multiple sources.

Data Transformation System

The data transformation system manipulates extracted data in order to add value by applying descriptors, summations, and calculations to the tables. It is designed to transform the data into commonly requested and/or different values, and to support query performance. The data transformation system accepts data from the extract system and prepares it for installation into the data warehouse as summarized or calculated data. The extracted data is uniformly processed according to a well-defined set of enterprise transformation rules. The transformation system is designed to ensure that any changes to the data warehouse database design are insulated from the extraction process.

The data transformation component is an automated, modular, repeatable process. Transformation modules are based on well-defined rules or standards, and are tightly coupled to the data warehouse. The data warehouse is defined as a storage structure for summarized, calculated data generated through the transformation process, as opposed to the operational data store (ODS), which contains detailed, atomic-level data. These are classic definitions and do not preclude summarized data from being stored in the ODS. Some transformation of data must also take place prior to loading data into the ODS. Also, the transformation process is usually less complex than extraction, since it doesn’t involve data integration and cleansing issues.

Translation / Attribution: Applies descriptive information to data values. Examples include assigning natural language descriptions to coded data, such as Product 5-9872 is paint, half-gallon size, candy apple red; “x” means male and “y” means female.

Calculation / Derivation: Derives new data values based on pre-defined algorithms, quantitative methods, and measurements. Calculation “interprets” detailed data to derive new data sets. Examples might include contribution from revenue and expense data, market share, forecasts, and sales compensation.

Aggregation / Summarization: This means to sort and to create summaries of broader data categories. For example, this may involve summarizing the revenues for all products within a particular product category, or creating sums for performance measures (e.g. cost and sales data).

The data transfer and replication system moves the processed data within the warehouse architecture from source to target databases via automated or scheduled programs. It covers the links between the sources and the warehouse, the physical movement of the data, the management of processes and systems, and the data loads. An understanding of the data transfer and replication system impacts the design of the technology infrastructure.

Once we have extracted the production data, isolated the changed records, and cleansed the data, it is time to create the load record and transfer the data to the warehouse. This includes moving data from one or more source databases to one or more target databases. It involves supporting many data transfer points (e.g. data sources, staging platforms, extract system, transport system, operational data stores, warehouses, marts, servers, and desktops). There can also be many different technologies employed for data transfer (e.g. FTP, EDI, and Internet). Finally, this movement of data may be physically distributed, not just locally but globally as well. Metadata has to be recorded, including for managing data transfer, load sequences, and volumes. It also includes defining data audit procedures for each transfer point.
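
As an illustration of the extract and transformation steps described in these notes, the following is a minimal Python sketch, not the method used in the course material. It assumes records are held in memory as dictionaries; the function names (`changed_records`, `translate`, `derive`, `summarize`), the field names, and the `PRODUCT_DESCRIPTIONS` lookup table are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical lookup table used for translation / attribution.
PRODUCT_DESCRIPTIONS = {"5-9872": "paint, half-gallon size, candy apple red"}


def changed_records(previous, current):
    """Isolate changed records: keep rows that are new or differ from the last snapshot.

    Both arguments map a record key (e.g. a customer id) to a dict of fields.
    """
    return {key: row for key, row in current.items() if previous.get(key) != row}


def translate(row):
    """Translation / attribution: attach a natural language description to a code."""
    out = dict(row)
    out["product_description"] = PRODUCT_DESCRIPTIONS.get(row["product_code"], "unknown")
    return out


def derive(row):
    """Calculation / derivation: compute contribution from revenue and expense."""
    out = dict(row)
    out["contribution"] = row["revenue"] - row["expense"]
    return out


def summarize(rows, group_field, value_field):
    """Aggregation / summarization: sort by group and total value_field per group."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[group_field]] += row[value_field]
    return dict(sorted(totals.items()))
```

In this sketch, `changed_records` would run in the extract step so that only new or modified customers reach the warehouse load, while the other three functions correspond to the three transformation types. A production extract would more likely rely on timestamps or change logs in the source system than on comparing full snapshots in memory.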