Chapter 5

advertisement

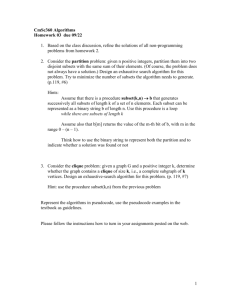

Chapter 5 FEATURE SELECTION Chapter 5 FEATURE SELECTION 5.1 Need for Feature Reduction Many factors affect the success of machine learning on a given task. The representation and quality of the example data is first and foremost. Nowadays, the need to process large databases is becoming increasingly common. Full text databases learners typically deal with tens of thousands of features; vision systems, spoken word and character recognition problems all require hundreds of classes and may have thousands of input features. The majority of real-world classification problems require supervised learning where the underlying class probabilities and class-conditionals probabilities are unknown, and each instance is associated with a class label. In real-world situations, relevant features are often unknown a priori. Therefore, many candidate features are introduced to better represent the domain. Theoretically, having more features should result in more discriminating power. However, practical experience with machine learning algorithms has shown that this is not always the case, current machine learning toolkits are insufficiently equipped to deal with contemporary datasets and many algorithms are susceptible to exhibit poor complexity with respect to the number of features. Furthermore, when faced with many noisy features, some algorithms take an inordinately long time to converge, or never converge at all. And even if they do converge conventional algorithms will tend to construct poor classifiers [Kon94]. Many of the introduced features during the training of a classifier are either partially or completely irrelevant/redundant to the target concept; an irrelevant feature does not affect the target concept in any way, and a redundant feature does not add anything new 63 Chapter 5 FEATURE SELECTION to the target concept. In many applications, the size of a dataset is so large that learning might not work as well before removing these unwanted features. Recent research has shown that common machine learning algorithms are adversely affected by irrelevant and redundant training information. The simple nearest neighbor algorithm is sensitive to irrelevant attributes, its sample complexity (number of training examples needed to reach a given accuracy level) grows exponentially with the number of irrelevant attributes (s. [Lan94a, Lan94b, Aha91]). Sample complexity for decision tree algorithms can grow exponentially on some concepts (such as parity) as well. The naive Bayes classifier can be adversely affected by redundant attributes due to its assumption that attributes are independent given the class [Lan94c]. Decision tree algorithms such as C4.5 [Qui86, Qui93] can sometimes over fit training data, resulting in large trees. In many cases, removing irrelevant and redundant information can result in C4.5 producing smaller trees [Koh96]. Neural Networks are supposed to cope with irrelevant and redundant features when the amount of training data is enough to compensate this drawback, otherwise they are also affected by the amount of irrelevant information. Reducing the number of irrelevant/redundant features drastically reduces the running time of a learning algorithm and yields a more general concept. This helps in getting a better insight into the underlying concept of a real-world classification problem. Feature selection methods try to pick up a subset of features that are relevant to the target concept. 5.2 Feature Selection process The problem introduced in previous section can be alleviated by preprocessing the dataset to remove noisy and low-information bearing attributes. “Feature selection is the problem of choosing a small subset of features that ideally is necessary and sufficient to describe the target concept” Kira & Rendell From the terms “necessary” and “sufficient” included in the given definition, it can be stated that feature selection attempts to select the minimally sized subset of features according to the following criteria: 64 Chapter 5 FEATURE SELECTION 1. the classification accuracy do not significantly decrease; and 2. the resulting class distribution, given only the values for the selected features, is as close as possible to the original class distribution, given all features. Figure 5.1 General criteria for a feature selection method. Ideally, feature selection methods search through the subsets of features, and try to find the best one among 2N candidate subsets according to some evaluation function. However this procedure is exhaustive as it tries to find only the best one. It may be too costly and practically prohibitive even for a medium sized feature set. Other methods based on heuristic or random search methods attempt to reduce computational complexity by compromising performance. These methods need a stopping criterion to prevent an exhaustive search of subsets. There are four basic steps in a typical feature selection method: 1. Starting point: Selecting a point in the feature subset space from which to begin the search can affect the direction of the search. One option is to begin with no features and successively add attributes. In this case, the search is said to proceed forward through the search space. Conversely, the search can begin with all features and successively remove them. In this case, the search proceeds backward through the search space. Another alternative is to begin somewhere in the middle and move outwards from this point. 2. Search organization: An exhaustive search of the feature subspace is prohibitive for all but a small initial number of features. With N initial features there exist 2 N possible subsets. Heuristic search strategies are more feasible than exhaustive ones and can give good results, although they do not guarantee finding the optimal subset. 3. Evaluation strategy: How feature subsets are evaluated is the single biggest differentiating factor among feature selection algorithms for machine learning. One paradigm, dubbed the filter [Koh95, Koh96], operates independent of any 65 Chapter 5 FEATURE SELECTION learning algorithm—undesirable features are filtered out of the data before learning begins. These algorithms use heuristics based on general characteristics of the data to evaluate the merit of feature subsets. Another school of thought argues that the bias of a particular induction algorithm should be taken into account when selecting features. This method, called the wrapper [Koh95, Koh96], uses an induction algorithm along with a statistical re-sampling technique such as cross-validation to estimate the final accuracy of feature subsets. 4. Stopping criterion: A feature selector must decide when to stop searching through the space of feature subsets. Depending on the evaluation strategy, a feature selector might stop adding or removing features when none of the alternatives improves upon the merit of a current feature subset. Alternatively, the algorithm might continue to revise the feature subset as long as the merit does not degrade. A further option could be to continue generating feature subsets until reaching the opposite end of the search space and then select the best. Many learning algorithms can be viewed as making a (biased) estimate of the probability of the class label given a set of features. This is a complex, high dimensional distribution. Unfortunately, induction is often performed on limited data. This makes estimating the many probabilistic parameters difficult. In order to avoid over fitting the training data, many algorithms employ the Occam’s Razor [Gam97] bias to build a simple model that still achieves some acceptable level of performance on the training data. This bias often leads an algorithm to prefer a small number of predictive attributes over a large number of features that, if used in the proper combination, are fully predictive of the class label. If there is too much irrelevant and redundant information present or the data is noisy and unreliable, then learning during the training phase is more difficult. Feature subset selection is the process of identifying and removing as much irrelevant and redundant information as possible. This reduces the dimensionality of the data and may allow learning algorithms to operate faster and more effectively. In some cases, accuracy on future classification can be improved; in others, the result is a more compact, easily interpreted representation of the target concept. 66 Chapter 5 FEATURE SELECTION 5.3 Feature selection methods overview Feature subset selection has long been a research area within statistics and pattern recognition [Dev82, Mil90]. It is not surprising that feature selection is as much of an issue for machine learning as it is for pattern recognition, as both fields share the common task of classification. In pattern recognition, feature selection can have an impact on the economics of data acquisition and on the accuracy and complexity of the classifier [Dev82]. This is also true of machine learning, which has the added concern of distilling useful knowledge from data. Fortunately, feature selection has been shown to improve the comprehensibility of extracted knowledge [Koh96]. GENERATION EVALUATION Distance Information Dependency Heuristic Relief [Kir92], Relief-F [Kon94], Segen [Seg84] DTM [Car93] , Koller & Sahami [Kol96] Complete Random Branch & Bound [Nar77], BFF [XuL88], Bobrowski [Bob88] MDLM [She90] POE1ACC [Muc71], PRESET [Mod93] Focus [Alm92], Schlimmer [Sch93], Consistency LVF [Liu96] MIFES-1 [Oli92] SBS, SFS [Dev82], SBS- LVW [Liu96b], SLASH [Car94], PQSS, Classifier BDS [Doa92], Schemata Ichino & Sklansky Error Rate search [Moo94], RC [Ichi84] [Ichi84b] GA [Vaf94], SA, RGSS [Doa92], [Dom96], Queiros & Gelsema [Que84] RMHC-PF1 [Ska94] Table 5.1 Different feature selection methods as stated by M. Dash and H. Liu [Das97]. 67 Chapter 5 FEATURE SELECTION There are a huge number of different feature selection methods. A study carried out by M. Dash and H. Liu [Das97] presents 32 different methods grouped based on the types of generation and evaluation function using in them. If the original feature set contains N number of features, then the total number of competing candidate subsets to be generated is 2N. This is a huge number even for medium-sized N. Generation procedures are different approaches for solving this problem, namely: complete, in which all the subsets are evaluated; heuristic, whose generation of subsets is made by adding/removing attributes (incremental/decremental); and random, which evaluates a certain number of random generated subsets. On the other hand, the aim of an evaluation function is to measure the discriminating ability of a feature or a subset to distinguish the different class labels. There are two common approaches: a wrapper uses the intended learning algorithm itself to evaluate the usefulness of features, while a filter evaluates features according to heuristics based on general characteristics of the data. The wrapper approach is generally considered to produce better feature subsets but runs much more slowly than a filter [Hal99]. The study of M. Dash and H. Liu [Das97] divides evaluation functions into five categories: distance, which evaluates differences between class conditional probabilities; information, based on the information gain of a feature; dependence, based in correlation measurements; consistency, in which an acceptable inconsistency rate is set by the user; and classifier error rate, which uses the classifier as evaluation function. According to the general approach, only the last evaluation function, classifier error rate, could be counted as a wrapper. Table 5.1 resumes the classification of methods in [Das97]. The blank boxes in the table signify that no method exists yet for these combinations. Since deeper analysis of each one of the included feature selection techniques is far from this thesis purpose, references for further information about them are given in the table. In [Hal99], M. A. Hall and L. A. Smith present a particular approach to feature selection, Correlation-based Feature Selection (CFS), that uses a correlation-based heuristic to evaluate the worth of features. Despite this method has not been plainly employed during this work, various ideas about feature selection using correlation measurements between features and between features and output classes have been extracted from it and its direct application is seriously taken into consideration for future 68 Chapter 5 FEATURE SELECTION work. Consequently a brief overview of this particular feature selection criteria is given in following section 5.3.1. 5.3.1 Correlation-based Feature Selection CFS algorithm relies on a heuristic for evaluating the worth or merit of a subset of features. This heuristic takes into account the usefulness of individual features for predicting the class label along with the level of intercorrelation among them. The hypotheses on which the heuristic is based can be stated: “Good feature subsets contain features highly correlated with (predictive of) the class, yet uncorrelated with (not predictive of) each other” [Hal99] Following the same directions, Genari [Gen89] states “Features are relevant if their values vary systematically with category membership.” In other words, a feature is useful if it is correlated with or predictive of the class; otherwise it is irrelevant. Empirical evidence from the feature selection literature shows that, along with irrelevant features, redundant information should be eliminated as well (s. [Lan94c, Koh96, Koh95]. A feature is said to be redundant if one or more of the other features are highly correlated with it. The above definitions for relevance and redundancy lead to the idea that best features for a given classification are those that are highly correlated with one of the classes and have an insignificant correlation with the rest of the features in the set. If the correlation between each of the components in a test and the outside variable is known, and the inter-correlation between each pair of components is given, then the correlation between a composite1 consisting of the summed components and the outside variable can be predicted from [Ghi64, Hog77, Zaj62]: rzc 1 krzi k k (k 1)rii (5.1) Subset of features selected for evaluation. 69 Chapter 5 FEATURE SELECTION Where rzc = correlation between the summed components and the outside variable. k = number of components (features). rzi = average of the correlations between the components and the outside variable. rii = average inter-correlation between components. Equation 5.1 represents the Pearson’s correlation coefficient, where all the variables have been standarised. The numerator can be thought of as giving an indication of how predictive of the class a group of features are; the denominator of how much redundancy there is among them. Thus, equation 5.1 shows that the correlation between a composite and an outside variable is a function of the number of component variables in the composite and the magnitude of the inter-correlations among them, together with the magnitude of the correlations between the components and the outside variable. Some conclusions can be extracted from (5.1): The higher the correlations between the components and the outside variable, the higher the correlation between the composite and the outside variable. As the number of components in the composite increases, the correlation between the composite and the outside variable increases. The lower the inter-correlation among the components, the higher the correlation between the composite and the outside variable. Theoretically, when the number of components in the composite increases, the correlation between the composite and the outside variable also increases. However, it is unlikely that a group of components that are highly correlated with the outside variable will at the same time bear low correlations with each other [Ghi64]. Furthermore, Hogart [Hog77] notes that, when inclusion of an additional component is considered, low intercorrelation with the already selected components may well predominate over high correlation with the outside variable. 70 Chapter 5 FEATURE SELECTION 5.4 Feature Selection Procedures employed in this work Neural Networks, principal classifier used during this thesis, are able to handle redundant and irrelevant features, assuming that enough training patterns are available to estimate suitable weights during the learning process. Since our database hardly satisfies this requirement, pre-processing of the input features becomes indispensable to improve prediction accuracy. Regression models have been mainly applied as selection procedure during this work. However, other procedures, such as Fischer’s discriminant (F-Ratio), NN pruning, correlation analysis and graphical analysis of features’ statistics (boxplot) have been tested. Subsequent subsections give a description of each one of these methods bases. 5.4.1 Regression models Mainly linear regression models are employed during this thesis to select a subset of features from a higher amount of them in order to reduce the input dimensionality of the classifier, as stated in former sections. For some specific cases (speaker independent), also quadratic regression models were used. Linear regression models emotions by linear combination of features and selects only those, which significantly modifies the model. Quadratic models performance works in a similar way but allowing quadratic combinations in the modelling of the emotions. Both models are implemented using R2, a language and environment for statistical computing and graphics. The resulting selected features are scored by R according to their influence on the model. There are four different scores ordered by grade of importance: - three points (most influential), - two points, - one point and, - just one remark (less influential). Which features are taken into account, for classification tasks, varies among different experiments and it is specified in the conditions descriptions in chapters 8 and 9. 2 http://www.r-project.org/ 71 Chapter 5 FEATURE SELECTION Feature sets selected through regression models are tested in following experiments: PROSODIC EXP. QUALITY EXP. SPKR DEPENDENT 8.2.1.1, 8.2.1.4, 8.1.2.5, 8.2.2.2 9.2.2.2 SPKR INDEPENDENT 8.3.1.2 9.3.2.1, 9.3.2.2 Table 5.2 Experiments where the regression-based feature selection is tested. 5.4.2 Fischer’s discriminant: F-Ratio Fischer’s discriminant is a measure of separability of the recognition classes. It is based on the idea that the ability of a feature to separate two classes depends on the distance between classes and the scatter within classes. In figure 5.2 it becomes clear that, although the means of X are more widely separated, Y is better at separating the two classes, because there is no overlap between the distributions: Overlap depends on two factors: The distance between distributions for the two classes and, The width of (i.e. scatter within) the distributions. Class 1 Class 1 Class 2 Class 2 Feature X Feature Y Figure 5.2 Performance of a recognition feature depends on the class-to-class difference relative to the scatter within classes. 72 Chapter 5 FEATURE SELECTION A reasonable way to characterise this numerically would be to take the ratio of the difference of the means to the standard deviation of the measurements or, since there are two set of measurements, to find the average of the two standard deviations. Fischer’s discriminant is based on this principle. f 1 2 2 (5.2) 12 2 2 Where n mean of the feature for the class n. n 2 variance of the feature for the class n. Generally there will be many more than two classes. In that case, the class-to-class separation of the feature over all the classes has to be considered. This estimation can be done by representing each class by its mean and taking the variance of the means. This variance is then compared to the average width of the distribution for each class, i.e. the mean of the individual variances. This measure is commonly called the F-Ratio: 1 F Ratio 1 m ( j ) 2 (m 1) j 1 m (5.3) n ( xij j ) m( n 1) j 1 i 1 2 Where n = number of measurements for each class. m = number of different classes. x ij = ith measurement for class j. μj = mean of all measurements for class j. = mean of all measurements over all classes. The F-Ratio feature selection method is tested in experiment 9.3.2.3. There, it’s observed that the performance of the classifier doesn’t improve when the set resulting from the F-Ratio analysis of the features is tested. However, an evident reason can be the cause of this lack of success: The F-Ratio is used for evaluating a single feature and when many potential features are to be evaluated, it’s not safe just to rank features by the F ratio 73 Chapter 5 FEATURE SELECTION and pick the best one for use unless all the features are uncorrelated, which usually are not. Two possible responses to this problem are proposed for future work: Use various techniques for evaluation combination of features. Transform features into dependent ones and then pick the best ones in the transformed space. 5.4.3 NN pruning This procedure is implicitly performed during the neural network training phase if the pruning algorithm is selected. A pruned neural network eliminates all those nodes (features) and/or links that are not relevant for the classification tasks. Since this selection method is closer of being a neural network learning method, detailed information about its functioning can be found in section 6.3.3.3. 5.4.4 Correlation analysis Despite there are many proposed algorithms to implement correlation-based feature selection, which uses heuristic generation of subsets and selects the best composite among many different possibilities, the implementation, test and search of an optimal algorithm would widely spread the boundaries of the present thesis and was proposed as a separate topic for a future Diploma Thesis. Therefore, the correlation analysis employed in the first experiments of this thesis was simply to apply the main ideas presented in section 5.3.1. First, the correlation matrix was calculated taking into account both the features and the outputs of the specific problem. Then, following the conclusions extracted from section 5.3.1, the features that were the most correlated with one of the outputs and, at the same time, had weak correlation with the rest of the features, were selected among all candidates. This procedure of feature selection was employed in the preliminary experiments carried out with prosodic features. The results showed that, despite the selected features actually seem to possess relevant information, the optimisation of a correlation-based method would be a valuable proposal for future work, as it has been already introduced. 74 Chapter 5 FEATURE SELECTION 5.4.5 Graphical analysis of feature statistics (“boxplot”) A “boxplot” provides an excellent visual summary of many important aspects of a distribution. The box stretches from the lower hinge (defined as the 25th percentile3) to the upper hinge (the 75th percentile) and therefore the length of the box contains the middle 1 2 3 0 e+00 0.0 e+00 1 e+05 5.0 e+07 2 e+05 3 e+05 1.0 e+08 4 e+05 5 e+05 1.5 e+08 half of the scores in the distribution. 1 2 3 (b) 100 100 150 150 200 200 250 250 300 300 350 (a) 1 2 (c) 3 1 2 3 (d) Figure 5. 3 Boxplot graphical representations of features (a) P1.5, (b) P1.7, (c) P1.14 and (d) P1.23 used for the selection in experiment 8.2.2.2. Each box represents one of the three activation levels (3 classes). The median4 is shown as a line across the box. Therefore 1/4 of the distribution is between this line and the top of the box and 1/4 of the distribution is between this line and the bottom of the box. 3 A percentile rank is the proportion of scores in a distribution that a specific score is greater than or equal to. For instance, if you received a score of 95 on a math test and this score was greater than or equal to the scores of 88% of the students taking the test, then your percentile rank would be 88. You would be in the 88th percentile. 4 The median is the middle of a distribution: half the scores are above the median and half are below the median. 75 Chapter 5 FEATURE SELECTION It is often useful to compare data from two or more groups by viewing “boxplots” from the groups side by side. Boxplots are useful to compare two or more samples by comparing the center value (median) and variation (length of the box) and to compare how well a given feature is capable of separating predetermined classes. For instance, figure 5.3 shows the boxplot representations of four different features when three outputs are considered (experiment 8.2.2.2). The first feature (a) would a priori represents a good feature to discriminate among the three given classes because their median values are well distanced and also the box lengths are not significantly overlapped. Also features (b) and (c) were considered for the selected set after the observation of their statistics. However, feature (d) was omitted because its statistics are not able to make distinctions among the classes, as depicted in figure 5.3 (d). This graphical method has been employed during this thesis in combination with other procedure, mainly linear regression, in order to achieve a enhanced analysis of the features. 76