Approximation & Errors


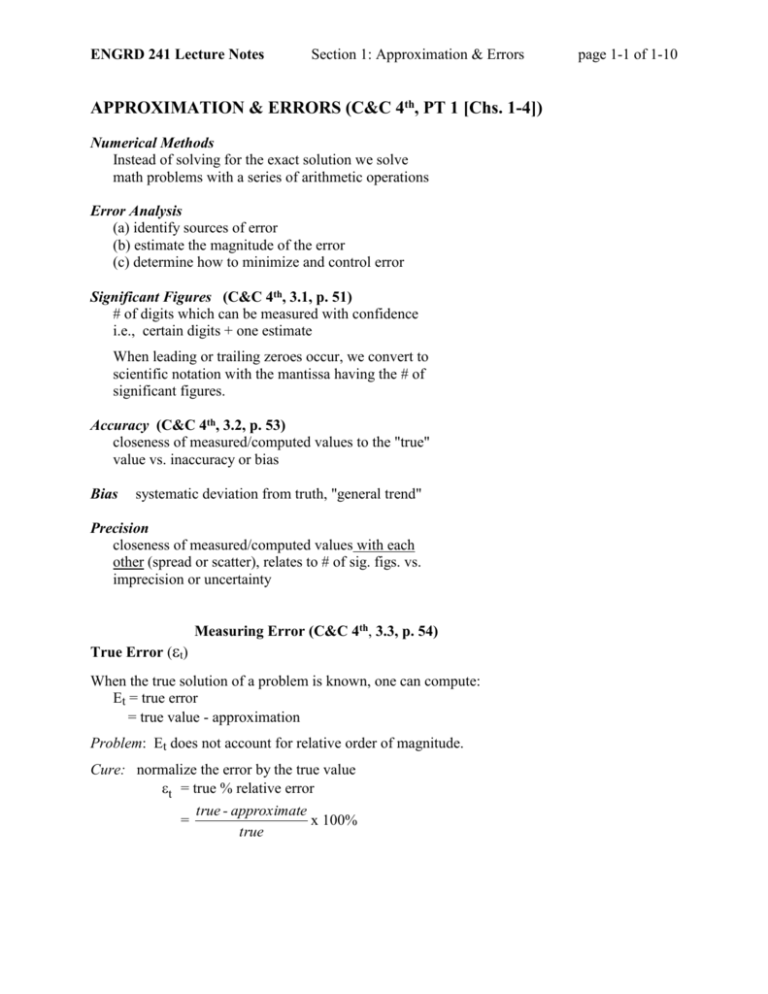

ENGRD 241 Lecture Notes

Section 1: Approximation & Errors

APPROXIMATION & ERRORS (C&C 4th, PT 1 [Chs. 1-4])

Numerical Methods

Instead of solving for the exact solution, we solve math problems with a series of arithmetic operations.

Error Analysis

(a) identify sources of error

(b) estimate the magnitude of the error

(c) determine how to minimize and control error

Significant Figures (C&C 4th, 3.1, p. 51)

# of digits that can be measured with confidence, i.e., the certain digits + one estimated digit

When leading or trailing zeros make the count ambiguous, we convert to scientific notation, with the mantissa carrying exactly the # of significant figures.
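A minimal Python sketch of the conversion, assuming the number should simply be displayed with n significant figures; the helper `to_sig_figs` is hypothetical. Python's `e` format keeps one digit before the decimal point (so its mantissa lies in [1, 10) rather than [0.1, 1)), which means n significant figures need n − 1 digits after the point.

```python
def to_sig_figs(x: float, n: int) -> str:
    """Format x in scientific notation with n significant figures (hypothetical helper)."""
    # One digit precedes the decimal point, so n - 1 digits follow it.
    return f"{x:.{n - 1}e}"

print(to_sig_figs(0.0001845, 4))  # 1.845e-04: leading zeros removed, 4 sig. figs. kept
print(to_sig_figs(45300.0, 3))    # 4.53e+04: trailing zeros no longer ambiguous
```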

Accuracy (C&C 4th, 3.2, p. 53)

closeness of measured/computed values to the "true" value (vs. inaccuracy or bias)

Bias

systematic deviation from truth, "general trend"

Precision

closeness of measured/computed values to each other (spread or scatter); relates to the # of sig. figs. (vs. imprecision or uncertainty)

Measuring Error (C&C 4th, 3.3, p. 54)

True Error ($\varepsilon_t$)

When the true solution of a problem is known, one can compute:

$$E_t = \text{true error} = \text{true value} - \text{approximation}$$

Problem: $E_t$ does not account for the relative order of magnitude.

Cure: normalize the error by the true value:

$$\varepsilon_t = \text{true \% relative error} = \frac{\text{true} - \text{approximate}}{\text{true}} \times 100\%$$
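A small Python sketch of the two true-error measures; the function names and the two hypothetical measurements (9,999 for a true 10,000, and 9 for a true 10) are illustrative only. Both share the same $E_t$ = 1, but the normalized error separates them: 0.01% versus 10%.

```python
def true_error(true_value: float, approximation: float) -> float:
    """E_t = true value - approximation."""
    return true_value - approximation

def true_percent_relative_error(true_value: float, approximation: float) -> float:
    """eps_t = (true - approximate) / true * 100%."""
    return (true_value - approximation) / true_value * 100.0

# Hypothetical measurements: the same absolute error, very different relative errors.
for true_value, approx in ((10_000.0, 9_999.0), (10.0, 9.0)):
    print(true_error(true_value, approx), true_percent_relative_error(true_value, approx))
```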


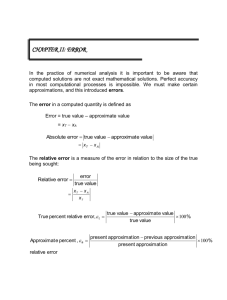

Approximate Error ($\varepsilon_a$)

Suppose we are computing a sequence of approximations (iterative calculations) that is converging toward the correct solution.

When you don't know the true value (more often the case!), approximate the error in the same way we approximated the solution:

$$\varepsilon_a = \frac{\text{present approx.} - \text{previous approx.}}{\text{present approx.}} \times 100\%$$

This expression can be an inaccurate estimator of $\varepsilon_t$.

Stopping Error ($\varepsilon_s$)

We could stop an iterative procedure when

$$|\varepsilon_a| < \varepsilon_s$$

where $\varepsilon_s$ is our convergence criterion. To achieve at least n significant digits of accuracy, one might use

$$\varepsilon_s = (0.5 \times 10^{2-n})\,\%$$

(NOTE: the $10^2$ is required because the result is a percent.)
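A sketch of the stopping test, assuming the Maclaurin series of $e^x$ serves as the iterative procedure (x = 0.5 and n = 3 are illustrative choices; the function names are hypothetical). Each new term updates the estimate, $\varepsilon_a$ is computed from the present and previous estimates, and the loop stops once $|\varepsilon_a| < \varepsilon_s$.

```python
import math

def stopping_criterion(n_sig: int) -> float:
    """eps_s = (0.5 x 10^(2-n)) %, aiming for at least n significant figures."""
    return 0.5 * 10.0 ** (2 - n_sig)

def exp_maclaurin(x: float, n_sig: int, max_terms: int = 100):
    """Add Maclaurin terms of e^x until |eps_a| < eps_s.

    Returns (estimate, number of terms used, final |eps_a| in percent)."""
    eps_s = stopping_criterion(n_sig)
    term = 1.0        # x^0 / 0!
    estimate = 1.0
    for n in range(1, max_terms):
        term *= x / n                         # x^n / n! built from the previous term
        previous, estimate = estimate, estimate + term
        eps_a = abs((estimate - previous) / estimate) * 100.0
        if eps_a < eps_s:
            return estimate, n + 1, eps_a
    raise RuntimeError("did not converge within max_terms")

est, terms, eps_a = exp_maclaurin(0.5, 3)
eps_t = abs((math.exp(0.5) - est) / math.exp(0.5)) * 100.0
print(est, terms, eps_a, eps_t)
```

With n = 3, $\varepsilon_s$ = 0.05%; the loop stops after 6 terms with $\varepsilon_a$ ≈ 0.016%, while the true error $\varepsilon_t$ is about 0.0014%, so in this case $\varepsilon_a$ is conservative.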

SOURCES OF ERROR

1. Idealization errors

a) Theory and/or Model Formulation [C&C 4th, 4.4.2]

b) Data uncertainty [C&C 4th, 4.4.3]

* c) Discretization inadequacies

2. Input Errors (blunders) [C&C 4th, 4.4.1]

3. Process Errors (algorithm dependent)

a) Blunders, logic errors [C&C 4th, 4.4.1]

* b) Truncation (convergence/stability) [C&C 4th, 4.1]

4. Manipulation Errors

* a) Round-off [C&C 4th, 3.4]

* b) Cancellation, critical arithmetic [C&C 4th, 3.4.2]

5. Output Errors

a) Blunders (wrong output) [C&C 4th, 4.4.1]

b) Interpretation

*These items are interrelated and will be treated extensively in this course.


Primary Error Types in Numerical Solutions:

Round-off Errors

limited # of sig. digits that can be retained by the computer

Truncation Errors

due to the approximation inherent in the numerical method employed

Round-off Errors (C&C 4th, 3.4, p. 57)

Representing a Floating Point #:

divide the n-bit word into a sign (±), a signed exponent, and a mantissa:

[ ± | signed exponent | mantissa ]

which represents

$$\pm\, m \cdot b^{e}$$

where m is the mantissa, b is the base of the # system (i.e., 2, 10, ...), and e is the exponent.

Normalize to exclude "useless" leading zeros so that the mantissa satisfies

$$\frac{1}{b} \le m < 1$$

for binary: 0.5 ≤ m < 1

for decimal: 0.1 ≤ m < 1

EXAMPLE: Base 10 Machine:

This "machine" carries three significant decimal digits and one exponent digit after normalization:

± 0.DDD E ± e

with:

0 ≤ e ≤ 9 (so the signed exponent runs from −9 to +9)

100 ≤ DDD ≤ 999

We represent −0.02778 in our machine:

−0.277E−1 with chopping: $\varepsilon_t$ = 0.29%

−0.278E−1 with rounding: $\varepsilon_t$ = 0.07%

(most computers round, but some chop to save time)
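The chopping/rounding comparison can be reproduced with Python's standard `decimal` module, assuming a context precision of 3 significant digits stands in for the three-digit mantissa (the exponent range −9 to +9 is not enforced in this sketch; `store` and `percent_error` are hypothetical helpers). `ROUND_DOWN` truncates toward zero, i.e., it chops.

```python
from decimal import Decimal, Context, ROUND_DOWN, ROUND_HALF_UP

def store(value: str, digits: int, rounding: str) -> Decimal:
    """Keep `digits` significant decimal digits of `value` using the given rounding mode."""
    return Context(prec=digits, rounding=rounding).create_decimal(value)

def percent_error(true: Decimal, approx: Decimal) -> Decimal:
    """|true - approx| / |true| * 100, computed in the default 28-digit context."""
    return abs((true - approx) / true) * 100

true_value = Decimal("-0.02778")
chopped = store("-0.02778", 3, ROUND_DOWN)      # -0.0277, i.e. -0.277E-1
rounded = store("-0.02778", 3, ROUND_HALF_UP)   # -0.0278, i.e. -0.278E-1
print(chopped, percent_error(true_value, chopped))   # about 0.29 %
print(rounded, percent_error(true_value, rounded))   # about 0.07 %
```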


Consequences of Computer Representation of #'s:

A limited range of quantities can be represented because there is a finite # of digits in both the mantissa and the exponent.

"our machine" example:

smallest positive #: 0.100E−9, or $10^{-10}$

largest positive #: 0.999E+9, or about $10^{+9}$

Only a finite # of floating-point values can be represented within the above range.

"our machine" example:

± (0.100 to 0.999) × (E−9 to E+9): 2 signs × 900 mantissas × 19 exponents = 34,200 #'s

plus zero (0.000E±D)

Thus, a total of 34,201 #'s can be represented within the above range (zero appears only once).
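A brute-force check of the count, assuming exact rational arithmetic (`fractions.Fraction`) so that no binary round-off interferes; `toy_machine_values` is a hypothetical helper. Each value $\pm 0.DDD \times 10^e$ is written as $\pm (DDD/1000) \cdot 10^e$, and collecting them in a set confirms that no two (mantissa, exponent) pairs give the same number.

```python
from fractions import Fraction

def toy_machine_values() -> set:
    """All numbers +/- 0.DDD x 10^e with 100 <= DDD <= 999 and -9 <= e <= 9, plus zero."""
    values = {Fraction(0)}                      # zero appears only once
    for sign in (-1, 1):
        for ddd in range(100, 1000):            # 900 normalized mantissas
            for e in range(-9, 10):             # 19 exponents
                values.add(sign * Fraction(ddd, 1000) * Fraction(10) ** e)
    return values

vals = toy_machine_values()
positives = [v for v in vals if v > 0]
print(len(vals))                         # 34201
print(min(positives), max(positives))    # 1/10000000000 999000000
```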

The interval between successive representable #'s (quantization error) is not uniform; it increases as the numbers grow in magnitude.

– The "quantization" error, Δx, is proportional to the magnitude of x:

@ 0.312E+1: |Δx| = 0.01

@ 0.312E+4: |Δx| = 10.0

– Normalizing |Δx| by |x| approximates the "machine epsilon".

Machine Epsilon:

Smallest number ε such that 1 + ε > 1

$$\frac{|\Delta x|}{|x|} \le \varepsilon \quad \text{(with chopping)}$$

Quick formula [Eq. (3.11), p. 64, correct for chopping only]:

$\varepsilon = b^{1-t}$ for a machine with chopping [for rounding, $\varepsilon = \tfrac{1}{2} b^{1-t}$, which equals $b^{-t}$ when b = 2]

where: b = base of the number system

t = number of sig. figs. in the mantissa
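A sketch that finds machine epsilon for Python floats (IEEE double) by repeated halving, next to the quick formula; both function names are hypothetical. The loop returns the gap between 1 and the next larger double, $2^{-52}$, which is the value `sys.float_info.epsilon` reports and what $b^{1-t}$ gives with b = 2, t = 53. Because IEEE arithmetic rounds, any ε slightly larger than half this gap already satisfies 1 + ε > 1, consistent with the smaller rounding value of ε.

```python
import sys

def machine_epsilon() -> float:
    """Halve eps until 1 + eps/2 is no longer distinguishable from 1."""
    eps = 1.0
    while 1.0 + eps / 2.0 > 1.0:
        eps /= 2.0
    return eps

def quick_epsilon(b: int, t: int) -> float:
    """Eq. (3.11): eps = b**(1 - t), the chopping value."""
    return float(b) ** (1 - t)

print(machine_epsilon())          # 2.220446049250313e-16
print(sys.float_info.epsilon)     # the same value, as reported by Python
print(quick_epsilon(2, 53))       # 2**-52 for IEEE double (t = 53)
print(quick_epsilon(10, 3))       # 0.01 for the 3-digit base-10 "machine"
```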

Trouble happens when you (C&C 4th, 3.4.2, p. 66):

Add (or subtract) a small number to (or from) a large number.

Small numbers get lost.

Remedy: add or subtract the small numbers together first.

Subtract numbers of almost equal size (subtractive cancellation).

Too few significant digits are left.

Both can happen.

Consider the series expansion of exp(x) for x = −10 (smearing). See C&C, Ex. 3.8 and Fig. 3.12, pp. 70-71, where negative values of exp(−10) may be computed because of smearing.
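Both pitfalls can be seen directly in IEEE double precision. The sketch below is illustrative (the values 1e16 and x = 1e-9 are arbitrary choices): adding 1.0 to 1e16 is lost because doubles near 1e16 are spaced 2.0 apart (the exact tie rounds back to the even neighbor, 1e16), adding the small numbers first preserves them, and 1 − cos x for tiny x cancels completely, whereas the algebraically equal $2\sin^2(x/2)$ avoids the subtraction.

```python
import math

# 1) Small + large: the 1.0 disappears.
big = 1.0e16
print((big + 1.0) - big)                  # 0.0

# Remedy: add the small numbers together first.
small = [1.0] * 10
large_first = 0.0
for v in [big] + small:
    large_first += v                      # each 1.0 is rounded away
small_first = 0.0
for v in small + [big]:
    small_first += v                      # the ten 1.0's become 10.0 before meeting 1e16
print(large_first - big, small_first - big)   # 0.0 10.0

# 2) Subtractive cancellation: cos(1e-9) rounds to exactly 1.0.
x = 1.0e-9
naive = 1.0 - math.cos(x)                 # no correct digits left
stable = 2.0 * math.sin(x / 2.0) ** 2     # same quantity, no subtraction
print(naive, stable)                      # 0.0 and about 5e-19 (true value is x**2 / 2)
```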


Modern Computers (IEEE standard):

Single Precision:

32-BIT WORDS

24 BITS assigned to mantissa

(First bit assumed to = 1 and not stored, so 23 bits are stored.)

8 BITS to signed exponent

$2^{23}$ ≈ 8,400,000, or almost 7 full decimal digits

$2^7 - 1 = 127$; $2^{+127} \approx 2 \times 10^{38}$

Double Precision:

64-BIT WORDS

53 BITS assigned to mantissa

(Again, first bit assumed to = 1 and not stored, so 52 bits are stored.)

11 BITS to signed exponent

$2^{52} \approx 4.5 \times 10^{15}$, or almost 16 decimal digits

$2^{10} - 1 = 1023$; $2^{+1023} \approx 9 \times 10^{307}$

(Be careful here as to how sign and number are represented.)
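A sketch for inspecting the stored fields with the standard `struct` and `sys` modules (the helper names are hypothetical). It shows the representation detail the note warns about: the exponent is stored with a bias (127 for single, 1023 for double) rather than with its own sign bit, and the leading 1 of a normalized mantissa is not stored. (IEEE writes the mantissa as 1.f rather than 0.1f, which only shifts the exponent by one.)

```python
import struct
import sys

def float32_fields(x: float) -> tuple[int, int, int]:
    """Sign, biased exponent, stored fraction of x rounded to IEEE single (1 + 8 + 23 bits)."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return bits >> 31, (bits >> 23) & 0xFF, bits & 0x7FFFFF

def float64_fields(x: float) -> tuple[int, int, int]:
    """Sign, biased exponent, stored fraction of an IEEE double (1 + 11 + 52 bits)."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    return bits >> 63, (bits >> 52) & 0x7FF, bits & ((1 << 52) - 1)

print(float32_fields(1.0))     # (0, 127, 0): exponent 0 stored as 0 + 127; leading 1 not stored
print(float64_fields(-2.0))    # (1, 1024, 0): exponent 1 stored as 1 + 1023
print(sys.float_info.mant_dig, sys.float_info.max)   # 53 1.7976931348623157e+308
```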

Truncation Error

Error caused by the nature of the numerical technique employed to approximate the solution

Taylor Series Expansion (C&C 4th, 4.1, p. 73)

Basic Idea:

Predict the value of a function, f, at a point $x_{i+1}$ based on the values of the function and all of its derivatives, f, f′, f″, …, at a neighboring point $x_i$.

General Form (Eq. 4.7 in C&C):

$$f(x_{i+1}) = f(x_i) + h f'(x_i) + \frac{h^2}{2!} f''(x_i) + \frac{h^3}{3!} f'''(x_i) + \cdots + \frac{h^n}{n!} f^{(n)}(x_i) + R_n$$

with

h = "step size" = $x_{i+1} - x_i$

$R_n$ = remainder to account for all other terms:

$$R_n = \frac{f^{(n+1)}(\xi)}{(n+1)!}\, h^{n+1}, \qquad x_i < \xi < x_{i+1}$$

$$R_n = O(h^{n+1}) \quad \text{with } \xi \text{ not exactly known}$$

("on the order of $h^{n+1}$")

Note: f(x) must be a function with n+1 continuous derivatives
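A sketch of the truncated series and its remainder bound for a case where every derivative is known, assuming f(x) = $e^x$ expanded about $x_i$ = 1 with n = 3 (illustrative choices; function names hypothetical). Since $R_n = O(h^{n+1})$, halving h should cut the error by roughly $2^{n+1}$ = 16, and the actual error should stay below the bound obtained from the largest $|f^{(n+1)}(\xi)|$ on the interval (here $e^{x_i+h}$).

```python
import math

def taylor_polynomial(derivs_at_xi, h: float) -> float:
    """Sum f^(k)(x_i) h^k / k! for k = 0..n, given derivs_at_xi = [f, f', ..., f^(n)] at x_i."""
    total = 0.0
    for k, d in enumerate(derivs_at_xi):
        total += d * h**k / math.factorial(k)
    return total

def remainder_bound(max_abs_deriv: float, h: float, n: int) -> float:
    """|R_n| <= max|f^(n+1)| |h|^(n+1) / (n+1)!  over [x_i, x_i + h]."""
    return max_abs_deriv * abs(h) ** (n + 1) / math.factorial(n + 1)

x_i, n = 1.0, 3
derivs = [math.exp(x_i)] * (n + 1)       # every derivative of e^x is e^x
errors = {}
for h in (0.1, 0.05):
    approx = taylor_polynomial(derivs, h)
    errors[h] = abs(math.exp(x_i + h) - approx)
    bound = remainder_bound(math.exp(x_i + h), h, n)   # e^x is largest at x_i + h
    print(f"h = {h}: |error| = {errors[h]:.3e} <= bound = {bound:.3e}")
print("error ratio:", errors[0.1] / errors[0.05])     # close to 2**(n + 1) = 16
```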


Numerical Differentiation from Taylor Series Expansion (C&C 4th, 4.1.3, p. 85)

Objective:

Evaluate the derivatives of a function, f(x), at $x_i$ without doing so analytically.

When would we want to do this?

1. function is too complicated to differentiate analytically

2. function is not defined by an equation (data points)

Backward Difference Approx.:

First Derivative:

$$f(x_{i-1}) = f(x_i) + (x_{i-1} - x_i)\, f'(x_i) + \frac{(x_{i-1} - x_i)^2}{2!} f''(\xi)$$

with h = $x_i - x_{i-1}$:

$$f(x_{i-1}) = f(x_i) - h f'(x_i) + \frac{h^2}{2!} f''(\xi)$$

$$h f'(x_i) = f(x_i) - f(x_{i-1}) + \frac{h^2}{2!} f''(\xi)$$

$$f'(x_i) = \frac{f(x_i) - f(x_{i-1})}{h} + \frac{h}{2!} f''(\xi)$$

or

$$f'(x_i) = \frac{f(x_i) - f(x_{i-1})}{h} + O(h)$$

first backward difference

Second Derivative:

$$f(x_{i-2}) = f(x_i) + (x_{i-2} - x_i)\, f'(x_i) + \frac{(x_{i-2} - x_i)^2}{2!} f''(x_i) + O\big([x_{i-2} - x_i]^3\big)$$

with h = $x_i - x_{i-1}$ and 2h = $x_i - x_{i-2}$:

$$f(x_{i-2}) = f(x_i) - 2h f'(x_i) + 2h^2 f''(x_i) + O(h^3) \qquad [1]$$

$$f(x_{i-1}) = f(x_i) - h f'(x_i) + \frac{h^2}{2!} f''(x_i) + O(h^3) \qquad [2]$$

Subtracting 2·[2] from [1] yields:

$$f(x_{i-2}) - 2f(x_{i-1}) = -f(x_i) + h^2 f''(x_i) + O(h^3)$$

$$h^2 f''(x_i) = f(x_i) - 2f(x_{i-1}) + f(x_{i-2}) + O(h^3)$$

$$f''(x_i) = \frac{f(x_i) - 2f(x_{i-1}) + f(x_{i-2})}{h^2} + \frac{O(h^3)}{h^2}$$

$$f''(x_i) = \frac{f(x_i) - 2f(x_{i-1}) + f(x_{i-2})}{h^2} + O(h)$$

second backward difference
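The two backward formulas in Python, as a sketch using f(x) = $e^x$ at $x_i$ = 1 (an illustrative choice, since its exact derivatives are known; function names hypothetical). Both formulas are O(h), so halving h should roughly halve each error.

```python
import math

def backward_first(f, x: float, h: float) -> float:
    """First backward difference [f(x_i) - f(x_{i-1})] / h; error O(h)."""
    return (f(x) - f(x - h)) / h

def backward_second(f, x: float, h: float) -> float:
    """Second backward difference [f(x_i) - 2 f(x_{i-1}) + f(x_{i-2})] / h^2; error O(h)."""
    return (f(x) - 2.0 * f(x - h) + f(x - 2.0 * h)) / h**2

x = 1.0
exact = math.exp(x)   # for f = e^x, f'(x) = f''(x) = e^x

def backward_errors(h: float) -> tuple:
    """Absolute errors of the first- and second-derivative backward formulas at step h."""
    return (abs(backward_first(math.exp, x, h) - exact),
            abs(backward_second(math.exp, x, h) - exact))

for h in (0.1, 0.05, 0.025):
    e1, e2 = backward_errors(h)
    print(f"h = {h:<6} |error in f'| = {e1:.3e}   |error in f''| = {e2:.3e}")
```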


Other Formulas:

Forward:

$$f'(x_i) = \frac{f(x_{i+1}) - f(x_i)}{h} + O(h)$$

$$f''(x_i) = \frac{f(x_{i+2}) - 2f(x_{i+1}) + f(x_i)}{h^2} + O(h)$$

Centered:

$$f'(x_i) = \frac{f(x_{i+1}) - f(x_{i-1})}{2h} + O(h^2)$$

$$f''(x_i) = \frac{f(x_{i+1}) - 2f(x_i) + f(x_{i-1})}{h^2} + O(h^2)$$

Questions:

Which is better: forward, centered, backward?

Why?

When should I use which?

Note: We can also get higher-accuracy forward, centered, and backward difference derivative approximations [C&C 4th, Chapter 23, Figs. 23-1 thru 23-3].
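One way to explore these questions numerically: a sketch comparing the three first-derivative formulas on f(x) = sin x at x = 1 (an illustrative choice; function names hypothetical). Halving h roughly halves the forward and backward errors, consistent with O(h), and roughly quarters the centered error, consistent with O(h²); the price of the centered formula is that it needs points on both sides of $x_i$.

```python
import math

def forward(f, x: float, h: float) -> float:
    return (f(x + h) - f(x)) / h                  # O(h)

def backward(f, x: float, h: float) -> float:
    return (f(x) - f(x - h)) / h                  # O(h)

def centered(f, x: float, h: float) -> float:
    return (f(x + h) - f(x - h)) / (2.0 * h)      # O(h^2)

x, exact = 1.0, math.cos(1.0)    # test function f = sin, so f'(x) = cos(x)
ratios = {}
for name, rule in (("forward", forward), ("backward", backward), ("centered", centered)):
    e_big = abs(rule(math.sin, x, 0.1) - exact)
    e_small = abs(rule(math.sin, x, 0.05) - exact)
    ratios[name] = e_big / e_small                # about 2 for O(h), about 4 for O(h^2)
    print(f"{name:<9} error(h=0.1) = {e_big:.2e}  error(h=0.05) = {e_small:.2e}  "
          f"ratio = {ratios[name]:.2f}")
```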

Example Combining Round-off and Truncation Error (C&C 4th, 4.3, p. 93)

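Determine h to minimize the total error of the forward finite-divided-difference approximation for f′(x):

$$f'(x) \approx \frac{f(x_{i+1}) - f(x_i)}{h}$$

Truncation Error:

From the Taylor series, the truncation error is

$$f'(x) = \frac{f(x_{i+1}) - f(x_i)}{h} - \frac{f''(\xi)}{2}\, h$$

Round-off Error:

$$|f(x) - \hat{f}(x)| \le \varepsilon\, |f(x)|$$

with ε = machine epsilon. As a result:

$$\hat{f}'(x) = \frac{f(x_i + h)(1 \pm \varepsilon) - f(x_i)(1 \pm \varepsilon)}{h} = f'(x) + \frac{f''(\xi)}{2}\, h + \text{Round-off Error}$$

$$|\text{Round-off Error}| \le \frac{\varepsilon\,\big(|f(x_i + h)| + |f(x_i)|\big)}{h} \approx \frac{2\varepsilon\, |f(x_i)|}{h}$$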


Total Error is the sum of the two:

$$|\text{Total Error}| \le E = \underbrace{\frac{|f''(\xi)|}{2}\, h}_{\text{Truncation Error}} + \underbrace{\frac{2\varepsilon\, |f(x_i)|}{h}}_{\text{Round-off Error}}$$

Note: truncation error decreases as h decreases;

round-off error increases as h decreases.

To minimize the total error E with respect to h, set its derivative to zero:

$$\frac{dE}{dh} = \frac{|f''(\xi)|}{2} - \frac{2\varepsilon\, |f(x_i)|}{h^2} = 0$$

Solving for h and approximating f″(ξ) as f″($x_i$) yields:

$$h = \sqrt{\frac{4\varepsilon\, |f(x_i)|}{|f''(x_i)|}}$$
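A sketch of the error model and the optimal-step formula (function names hypothetical). It evaluates h for the 5-digit machine used in the applications (ε = 10⁻⁴) and, for comparison, for IEEE double precision (ε = 2⁻⁵², assuming Python floats are IEEE doubles), then measures the actual forward-difference error of $e^x$ at x = 3 in double precision over a range of h.

```python
import math

def total_error_bound(h: float, eps: float, f_xi: float, f2_xi: float) -> float:
    """E(h) = |f''| h / 2 (truncation) + 2 eps |f| / h (round-off)."""
    return abs(f2_xi) * h / 2.0 + 2.0 * eps * abs(f_xi) / h

def optimal_h(eps: float, f_xi: float, f2_xi: float) -> float:
    """h = sqrt(4 eps |f(x_i)| / |f''(x_i)|); infinite when f'' = 0 (no truncation error)."""
    if f2_xi == 0.0:
        return math.inf
    return math.sqrt(4.0 * eps * abs(f_xi) / abs(f2_xi))

def forward_error_double(h: float, x: float = 3.0) -> float:
    """Actual |error| of the forward difference for f = e^x in double precision."""
    return abs((math.exp(x + h) - math.exp(x)) / h - math.exp(x))

e3 = math.exp(3.0)
print(optimal_h(1e-4, e3, e3))         # about 0.02: 5-digit machine, f = e^x at x = 3
print(optimal_h(1e-4, math.pi, 0.0))   # inf: f = pi x has f'' = 0
print(optimal_h(2.0**-52, e3, e3))     # about 3e-8 in double precision

for k in range(1, 16):
    h = 10.0 ** -k
    print(f"h = 1e-{k:02d}  actual error = {forward_error_double(h):.2e}  "
          f"bound E(h) = {total_error_bound(h, 2.0**-52, e3, e3):.2e}")
```

In double precision the predicted optimum is about 3 × 10⁻⁸; the measured error should bottom out somewhere near that value and grow again for smaller h as round-off takes over.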

Linear Application

Determine the h that will minimize the total error in the calculation of f′(x) for

f(x) = πx at x = 1

using the first forward-divided-difference approximation with error O(h) and a 5-decimal-digit machine:

$\varepsilon = b^{1-t} = 10^{1-5} = 10^{-4} = 0.0001$

f′(x) = π and f″ = 0, so there is no truncation error and

$$h = \sqrt{\frac{4\varepsilon\, |f(x_i)|}{|f''(x_i)|}} = \infty$$

| h | f(x+h) = π(x+h) | f(x+h) − f(x) | f′(x) ≈ [f(x+h) − f(x)]/h {exact = 3.1415} |
|---|---|---|---|
| 0 | 3.1415 | | |
| 0.000001 | 3.1415 | 0 | 0 |
| 0.00001 | 3.1416 | 0.0001 | 10 |
| 0.0001 | 3.1419 | 0.0004 | 4.0 |
| 0.001 | 3.1447 | 0.0032 | 3.2 |
| 0.01 | 3.1730 | 0.0315 | 3.15 |
| 0.1 | 3.4557 | 0.3142 | 3.142 |
| 1 | 6.2831 | 3.1416 | 3.1416 |

The trailing digits of each f(x+h) entry are subject to round-off error; they are likely to be in error by ± one or two units. This does not cause much problem when h = 1, but causes large errors in the final result when h ≤ $10^{-4}$.


Nonlinear Application

Determine the h that will minimize the total error in calculating f′(x) for

f(x) = $e^x$ at x = 3

using the first forward-divided-difference approximation with error O(h) and a 5-decimal-digit machine:

$\varepsilon = b^{1-t} = 10^{1-5} = 10^{-4} = 0.0001$

f(x) = f′(x) = f″(x) = $e^x$ = 20.0855

$$h = \sqrt{\frac{4\varepsilon\, |f(x_i)|}{|f''(x_i)|}} = \sqrt{4 \times 10^{-4}} = 0.02, \text{ or about } 0.01$$

| h | f(x+h) = exp(x+h) | f(x+h) − f(x) | [f(x+h) − f(x)]/h, 5 digits {exact: 20.085} | [f(x+h) − f(x)]/h, full precision |
|---|---|---|---|---|
| 0 | 20.085 | | | |
| 0.00001 | 20.085 | 0 | 0 | 20.086 |
| 0.0001 | 20.087 | 0.002 | 20 | 20.086 |
| 0.001 | 20.105 | 0.020 | 20 | 20.096 |
| 0.01 | 20.287 | 0.202 | 20.2 | 20.18 |
| 0.1 | 22.198 | 2.113 | 21.13 | 21.12 |
| 1 | 54.598 | 34.513 | 34.513 | 34.512 |

In the 5-digit columns, the trailing digits are subject to round-off error; the departures of the full-precision column from the exact value are due to truncation error.
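The 5-digit columns can be simulated with Python's `decimal` module, assuming a context with 5 significant digits and `ROUND_DOWN` (chopping) models the machine; `store` and `forward_difference_5digit` are hypothetical helpers. Because every stored value is chopped, an entry may differ from the tables in the last digit (chopping $e^{3.1}$ ≈ 22.1979 gives 22.197, not 22.198).

```python
import math
from decimal import Decimal, Context, ROUND_DOWN

# Hypothetical 5-significant-digit decimal machine that chops.
MACHINE = Context(prec=5, rounding=ROUND_DOWN)

def store(x: float) -> Decimal:
    """Chop a full-precision float to 5 significant decimal digits."""
    return MACHINE.create_decimal_from_float(x)

def forward_difference_5digit(f, x: float, h: str) -> Decimal:
    """[f(x+h) - f(x)] / h with stored values and operations chopped to 5 digits."""
    h_dec = Decimal(h)                     # h given as a decimal string, so it is exact
    fx = store(f(x))
    fxh = store(f(x + float(h_dec)))
    return MACHINE.divide(MACHINE.subtract(fxh, fx), h_dec)

for h in ("0.00001", "0.0001", "0.001", "0.01", "0.1", "1"):
    linear = forward_difference_5digit(lambda t: math.pi * t, 1.0, h)
    nonlinear = forward_difference_5digit(math.exp, 3.0, h)
    full = (math.exp(3.0 + float(h)) - math.exp(3.0)) / float(h)
    print(f"h = {h:<8} pi*x: {float(linear):g}   e^x: {float(nonlinear):g}   e^x full: {full:.5g}")
```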

Supplemental Information

Additional Error Terminology:

Error Propagation [see C&C 4.2.1-2]

errors that appear because current calculations are based on previous calculations, which also incurred some form of error

Stability and Condition Number [see C&C 4th 4.2.3]

Numerically Unstable: computations that are so sensitive to round-off errors that the errors grow uncontrollably during the calculations.

Condition: sensitivity to such uncertainty; "well-conditioned" vs. "ill-conditioned"


Condition Number: measure of the condition, i.e., the extent to which uncertainty in x is amplified by f(x)

|C.N.| ≲ 1 ===> "well-conditioned"

|C.N.| >> 1 ===> "ill-conditioned"
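A sketch of the usual first-order definition, C.N. = $\tilde{x}\, f'(\tilde{x}) / f(\tilde{x})$, i.e., the relative error in f($\tilde{x}$) divided by the relative error in $\tilde{x}$ (the helper name and both test functions are illustrative). The square root damps relative errors, while tan x near π/2 amplifies them.

```python
import math

def condition_number(f, dfdx, x: float) -> float:
    """C.N. = x f'(x) / f(x): amplification of relative error, to first order."""
    return x * dfdx(x) / f(x)

cn_sqrt = condition_number(math.sqrt, lambda t: 0.5 / math.sqrt(t), 4.0)
cn_tan = condition_number(math.tan, lambda t: 1.0 / math.cos(t) ** 2, 1.7)
print(cn_sqrt)   # 0.5: well-conditioned
print(cn_tan)    # about -13.3: ill-conditioned near pi/2
```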

Challenge: (C&C 4th, Example 3.8, p. 70)

Compute the value of $e^{-10}$ using +, −, ×, / and the exponential series formula:

$$e^x = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \frac{x^4}{4!} + \frac{x^5}{5!} + \cdots$$

Can we obtain an accurate solution with modest effort?
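A sketch of the challenge in Python double precision (function name hypothetical). Summing the alternating series directly forces large terms to cancel; computing $1/e^{10}$ from the all-positive series for $e^{+10}$ avoids the cancellation. Because double precision carries about 16 digits, the direct sum for x = −10 (largest term about 2,756) still gives a usable answer here; x = −30 (largest term about 7.8 × 10¹¹) is added to make the smearing visible.

```python
import math

def exp_series(x: float, n_terms: int = 150) -> float:
    """Sum the Maclaurin series of e^x term by term (term_n = term_{n-1} * x / n)."""
    term, total = 1.0, 1.0
    for n in range(1, n_terms):
        term *= x / n
        total += term
    return total

rel_errors = {}
for x in (-10.0, -30.0):
    direct = exp_series(x)               # alternating signs: large terms cancel
    reciprocal = 1.0 / exp_series(-x)    # all terms positive: no cancellation
    true = math.exp(x)
    rel_errors[x] = (abs(direct - true) / true, abs(reciprocal - true) / true)
    print(f"x = {x}: relative error direct = {rel_errors[x][0]:.1e}, "
          f"reciprocal = {rel_errors[x][1]:.1e}")
```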

Effect of Large h in a Taylor Series Expansion:

General Form (Eq. 4.7):

$$f(x_{i+1}) = f(x_i) + h f'(x_i) + \frac{h^2}{2!} f''(x_i) + \cdots + \frac{h^n}{n!} f^{(n)}(x_i) + O(h^{n+1})$$

Suppose the f(x) of interest is $e^x$ and we wish to predict $e^{3.5}$ by expanding about x = 0 with h = 3.5.

Here f(x) = f′(x) = f″(x) = ... = $e^x$, and $e^0 = 1$, so:

$$e^{3.5} = 1 + 3.5 + 6.125 + 7.1458 + 6.2526 + 4.3768 + 2.5531 + \cdots$$

The terms grow while n < h = 3.5 (the largest term is at n = 3); after that, n! grows much faster than $h^n$ for increasing n. By, for example, n = 16 they are small:

$$\frac{3.5^{16}}{16!} \approx 2.4236 \times 10^{-5}$$

[Figure: value of the single terms and of the sum of terms vs. # of terms (0 to 20)]

How many terms are required to obtain $e^{3.5}$ to 4 significant figures?

$e^{3.5}$ = 33.12, so requiring

$$\frac{3.5^n}{n!} < 0.01$$

gives n = 12.
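A sketch that reproduces the term values and the term count (variable names illustrative). The stopping rule follows the notes: take the first n with $3.5^n/n! < 0.01$ and sum the terms through that n.

```python
import math

h = 3.5
terms = [h**n / math.factorial(n) for n in range(21)]   # terms of e^h expanded about x = 0
print([round(t, 4) for t in terms[:7]])   # [1.0, 3.5, 6.125, 7.1458, 6.2526, 4.3768, 2.5531]
largest = max(range(len(terms)), key=terms.__getitem__)
n_stop = next(n for n, t in enumerate(terms) if t < 0.01)
partial = sum(terms[: n_stop + 1])
print(largest, n_stop, partial, math.exp(h))
```

The largest term is at n = 3, the first term below 0.01 is at n = 12, and the partial sum through n = 12 is about 33.113, within 0.005 of $e^{3.5}$ ≈ 33.115.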
