A STUDY OF CODA AND GFS

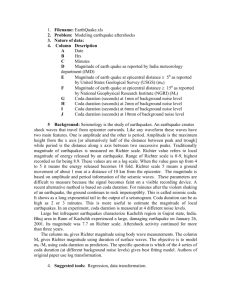
