Introduction to Multilevel Models and MLwiN


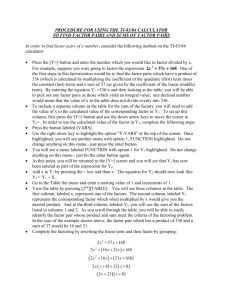

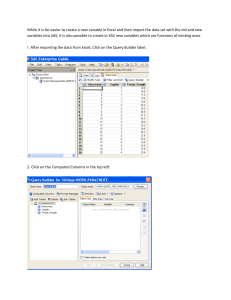

Practical 5: Introduction to Multilevel Models and MLwiN

In this short practical you will have your first experience of using MLwiN. You will fit the variance components model discussed in the lecture to an educational dataset and look at certain features of this model. We first give a little background on the dataset.

The tutorial data set

The data in the worksheet we use have been selected from a much larger data set of examination results from six inner London Education Authorities (school boards). A key aim of the original analysis was to establish whether some schools were more 'effective' than others in promoting students' learning and development, taking account of variations in the characteristics of students when they started secondary school.

Below is a list of all the variables in the dataset. Here, however, we focus only on fitting models to the normexam variable, using the standlrt variable as a predictor. The data set contains the following variables:

School: Numeric school identifier
Student: Numeric student identifier
Normexam: Student's exam score at age 16, normalised to have approximately a standard Normal distribution. (Note that the normalisation was carried out on a larger sample, so the mean in this sample is not exactly equal to 0 and the variance is not exactly equal to 1.)
Cons: A column of ones. If included as an explanatory variable in a regression model, its coefficient is the intercept. See Chapter 7.
Standlrt: Student's score at age 11 on the London Reading Test (LRT), standardised using Z-scores.
girl: 1=girl, 0=boy
Schgend: School's gender (1=mixed school, 2=boys' school, 3=girls' school)
Avslrt: Average LRT score in school
Schav: Average LRT score in school, coded into 3 categories (1=bottom 25%, 2=middle 50%, 3=top 25%)
Vrband: Student's score in test of verbal reasoning at age 11, coded into 3 categories (1=top 25%, 2=middle 50%, 3=bottom 25%)

Opening the worksheet and looking at the data

When you start MLwiN the main window appears. Immediately below the MLwiN title bar are the menu bar and, below it, the tool bar:

[Figure: the MLwiN menu bar and tool bar]

Below the tool bar is a blank workspace into which you will open windows. These windows form the rest of the 'graphical user interface' that you use to specify tasks in MLwiN. Below the workspace is the status bar, which monitors the progress of the iterative estimation procedure.

Open the tutorial worksheet as follows:

1. Select Open worksheet from the File menu.
2. Select tutorial.ws from the list of files.
3. Click on the Open button.

When this operation is complete the filename will appear in the title bar of the main window and the status bar will be initialised. The Names window will also appear, giving a summary of the data in the worksheet:

[Figure: the Names window for tutorial.ws]

The MLwiN worksheet holds the data and other information in a series of columns, as on a spreadsheet. These are initially named c1, c2, …, but we recommend giving them meaningful names that show what their contents relate to. This has already been done in the tutorial worksheet you have loaded.

Each line in the body of the Names window summarises a column of data. In the present case only the first 10 of the 400 columns of the worksheet contain data. Each column contains 4059 values, one for each student in the data set. There are no missing values, and the minimum and maximum values in each column are shown. Note the Help button on the window's tool bar. The remaining items on the tool bar of this window are for attaching a name to a column; we shall use these later.
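The per-column summary in the Names window (number of values, missing values, minimum and maximum) is easy to reproduce outside MLwiN, for instance when checking an exported copy of a worksheet. The sketch below is a hypothetical illustration in plain Python, not an MLwiN interface: it assumes each column is a list of numbers and that missing entries are stored as None or NaN, and the function names and three-row worksheet are made up for the example.

```python
import math


def summarise_column(values):
    """Return (n, n_missing, minimum, maximum) for one column of values.

    Assumption for this sketch: missing entries are None or float NaN.
    """
    present = [
        v for v in values
        if v is not None and not (isinstance(v, float) and math.isnan(v))
    ]
    n_missing = len(values) - len(present)
    if not present:
        return len(values), n_missing, None, None
    return len(values), n_missing, min(present), max(present)


def names_summary(worksheet):
    """Print one line per column, loosely mimicking the Names window."""
    for name, values in worksheet.items():
        n, n_missing, lo, hi = summarise_column(values)
        print(f"{name:<10} n={n:<6} missing={n_missing:<4} min={lo} max={hi}")


# Hypothetical three-row worksheet, for illustration only.
names_summary({"school": [1, 1, 2], "girl": [1, 0, 1]})
```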
You can view individual values in the data using the Data window: select View or edit data from the Data Manipulation menu. When this window is first opened it always shows the first three columns in the worksheet. The exact number of values shown depends on the space available on your screen. You can view any selection of columns, spreadsheet fashion, as follows:

1. Click the View button.
2. Select the columns to view.
3. Click OK.

You can select a block of adjacent columns either by pointing and dragging or by selecting the column at one end of the block and holding down 'Shift' while you select the column at the other end. You can add to an existing selection by holding down 'Ctrl' while you select new columns or blocks. Use the scroll bars of the Data window to move horizontally and vertically through the data, and move or resize the window if you wish. You can go straight to line 1035, for example, by typing 1035 in the goto line box, and you can highlight a particular cell by pointing and clicking. This provides a means of editing data; see the Help system for more details.

Having viewed your data you will typically wish to tabulate and plot selected variables, and derive other summary statistics, before proceeding to actual modelling. Tabulation and other basic statistical operations are available on the Basic Statistics menu and are described in the Help system.

Here we plot our response variable, normexam, against the predictor standlrt:

1. Select Customised graph from the Graphs menu.
2. In the drop-down list labelled y, select normexam.
3. In the drop-down list labelled x, select standlrt.
4. Click on the Apply button.

The following graph will appear:

[Figure: scatter plot of normexam against standlrt]

The plot shows, as might be expected, a positive correlation: pupils with higher intake scores tend to have higher outcome scores.
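The scatter plot shows the relationship visually; a Pearson correlation coefficient summarises its direction and strength in a single number. The following minimal Python sketch computes it from two equal-length lists. The function name pearson_r and the five data points in the usage example are hypothetical illustrations, not values taken from the tutorial worksheet.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length, at least 2 long")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations about the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical intake (standlrt-like) and outcome (normexam-like) scores.
intake = [-1.0, -0.5, 0.0, 0.5, 1.0]
outcome = [-0.9, -0.2, 0.1, 0.3, 1.1]
print(pearson_r(intake, outcome))  # positive, as the scatter plot leads us to expect
```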
The positive relationship between normexam and standlrt suggests a regression model. We could fit a standard linear regression in MLwiN, but here we go straight to fitting a random intercepts model.

Setting up a random intercepts model in the Equations window

In MLwiN we set up models in the Equations window. Select Equations from the Model menu, and the Equations window will appear:

[Figure: the empty Equations window]

We now have to tell the program the structure of our model and which columns hold the data for our response and predictor variables. First we define the response (y) variable:

1. Click on y (either of the y symbols shown will do).
2. In the y list, select 'normexam'.

Next we set up the structure of the model:

1. In the N levels list, select '2-ij'.
2. In the level 2 (j) list, select 'school'.
3. In the level 1 (i) list, select 'student'.
4. Click on the Done button.

In the Equations window the red y has changed to a black $y_{ij}$, to indicate that the response and the level 1 and level 2 identifiers have been defined. We now need to set up the predictors for the model:

1. Click on the red $x_0$.
2. In the drop-down list, select 'cons'.

Note that 'cons' is a column containing the value 1 for every student, so it is used for the intercept term. The fixed parameter tick box is checked by default, so we have added a fixed intercept term to our model. We also need to set up the student-level residuals and school effects so that the two sides of the equation balance:

1. Check the box labelled 'i(student)'.
2. Check the box labelled 'j(school)'.
3. Click on the Done button.

We have now set up our intercept and residual terms, but to produce the random intercepts regression model we also need to include the fixed slope ('standlrt') term. To do this we add a term to the model as follows:

1. Click the Add Term button on the tool bar.
2. Select 'standlrt' from the variable list.
3. Click on the Done button.
4. Click on the Estimates button on the tool bar.
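Taken together, the steps above specify a two-level random intercepts model for student i in school j. Below is our transcription of it in the notation used by the Equations window, assuming Normally distributed school effects $u_{0j}$ (variance $\sigma^2_u$) and student-level residuals $e_{0ij}$ (variance $\sigma^2_e$); the on-screen layout may differ slightly:

$$
\begin{aligned}
\text{normexam}_{ij} &= \beta_{0ij}\,\text{cons} + \beta_1\,\text{standlrt}_{ij} \\
\beta_{0ij} &= \beta_0 + u_{0j} + e_{0ij} \\
u_{0j} &\sim N(0, \sigma^2_u), \qquad e_{0ij} \sim N(0, \sigma^2_e)
\end{aligned}
$$

Since cons equals 1 for every student, this is the same as $\text{normexam}_{ij} = \beta_0 + \beta_1\,\text{standlrt}_{ij} + u_{0j} + e_{0ij}$. Because the slope $\beta_1$ is shared by all schools, the fitted school lines differ only in their intercepts $\beta_0 + u_{0j}$, which is why they are parallel.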
Note that Add Term adds only a fixed effect for the 'standlrt' variable. The Equations window should now look as follows:

[Figure: Equations window for the random intercepts model]

We now run this model using the IGLS algorithm and view the estimates:

1. Click on the Start button.
2. Click on the Estimates button on the tool bar.

When the model has run, the estimates turn from blue to green to denote convergence of the IGLS method. The Equations window should now look as follows:

[Figure: Equations window showing the converged estimates]

Here we see from the coefficient $\beta_1$ that there is a strong positive effect of prior attainment (as measured by the London Reading Test) on exam performance at age 16. We also see that the unexplained variance has been split into two parts: the between-school variance $\sigma^2_u$ and the residual (between-student, within-school) variance $\sigma^2_e$.

It is often of interest to know how important schools are in explaining the variation. This is captured by a statistic called the Variance Partition Coefficient (VPC), which measures the proportion of the unexplained variance that lies at a given level of the classification, here the school level: $\text{VPC} = \sigma^2_u / (\sigma^2_u + \sigma^2_e) = 0.092/(0.092 + 0.566) = 0.140$. In other words, after accounting for prior attainment, differences between schools account for 14% of the remaining variation.

You can calculate the VPC in MLwiN yourself as follows:

1. Select Command Interface window from the Data Manipulation menu.
2. Type calc 0.092/(0.566+0.092) in the Command Interface window.

Predictions

We will probably also want to see a picture of the fitted school lines for our random intercepts model. This can be done via the Predictions and graph windows:

1. Select the Predictions window from the Model menu.
2. At the bottom of the window click on variable, and from the options that appear select Include all explanatory variables.
3. Click on the $e_{0ij}$ to remove level 1 residuals from the prediction.
The Predictions window should now look as follows:

[Figure: the Predictions window]

Select column c11 from the drop-down list next to 'output from prediction to'. If you click on the Calc button, the fitted predictions will be stored in column c11. To display them graphically:

1. Select Customised graph(s) from the Graphs menu.
2. Select D1 from the drop-down list in the top left of this window.
3. Select 'c11' from the y drop-down list.
4. Select 'standlrt' from the x drop-down list.
5. Select 'school' from the group drop-down list.
6. Select 'line' from the plot type drop-down list.

The window should now look as follows:

[Figure: Customised graph window set up for the fitted school lines]

If we now click on the Apply button we will see the 65 parallel school lines that we have fitted.

What you should have learnt from this exercise

How to set up variance components models in MLwiN
How to calculate the VPC for a multilevel model
How to graph predictions in MLwiN
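The two calculations this practical performs through the GUI, the VPC and the fitted school lines, can be cross-checked with a few lines of plain Python. This is a minimal sketch, not MLwiN code: vpc and school_line are hypothetical helper names, the variance estimates 0.092 and 0.566 are the ones quoted in the VPC discussion, and the intercept, slope and school effects in the usage example are made-up placeholders rather than fitted estimates.

```python
def vpc(sigma2_u, sigma2_e):
    """Variance partition coefficient for a two-level random intercepts model:
    the proportion of unexplained variance lying between level-2 units."""
    total = sigma2_u + sigma2_e
    if total <= 0:
        raise ValueError("variance components must sum to a positive value")
    return sigma2_u / total


def school_line(beta0, beta1, u0j, standlrt_values):
    """Fitted values beta0 + beta1 * x + u0j for one school.

    The level-1 residual e0ij is left out, mirroring the Predictions window
    set-up in which e0ij was removed from the prediction.
    """
    return [beta0 + beta1 * x + u0j for x in standlrt_values]


# VPC from the variance estimates reported in this practical.
print(round(vpc(0.092, 0.566), 3))  # 0.14

# Hypothetical placeholder estimates, chosen only to show that lines are parallel.
xs = [-2.0, 0.0, 2.0]
school_a = school_line(0.0, 0.5, 0.25, xs)
school_b = school_line(0.0, 0.5, -0.25, xs)
gaps = [a - b for a, b in zip(school_a, school_b)]
print(gaps)  # [0.5, 0.5, 0.5]: the gap is u0j(A) - u0j(B) at every standlrt value
```

The constant gap is the numerical counterpart of the 65 parallel lines in the graph: with a common slope, only the school effect shifts each line up or down.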