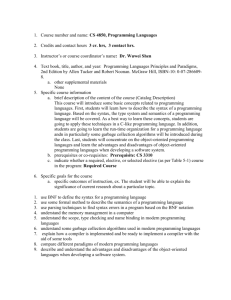

Compilers and Languages

advertisement

Ref: Compilers and Languages “Crafting A Compiler With C”, C. N. Fischer, R. J. LeBlanc. Harry Weatherford This document is an introduction to compilers and languages. The definitions and explanations of each are provided. 1. INTRODUCTION The compiler allows computer users to ignore the machine dependent knowledge required to fully control a computer device or target platform. By distancing the computer user from this area of esoteric knowledge, the user will be operating and programming at a level referred to as “device independence” or “machine-independence”. The compiler will act as a translator that will transform computer programs and algorithms into the lowest level of control called machine code. Machine code will be targeted for a specific platform, device or machine taking in account all low-level instructions to properly access and control the target. The term “compiler” came from Grace Murray Hooper in the early 1950s. The translation of programs to machine code was, at the time, viewed as a “compilation” of a group of subprograms eventually merging into one operational program. During that era, there was a great deal of skepticism whether this new technology, called “automatic programming”, would ever work. The translator, over the years, has matured and evolved into a reality and is still called a “compiler”. In the simplest terms, the compiler takes a source program as an input and transforms the program into machine code as the output target for a specific platform or device. Compilers can accept a number of source inputs (program languages) but are primarily distinguished by the types of output targets and the formats of the output. This code generated as the output can be targeted for any of the following: Pure Machine Code, Augmented Machine Code or Virtual Machine Code. First, the Pure Machine Code will contain “pure” code written with specific low-level machine instructions. This type of program does not require an operating system to execute and can operate on bare hardware without the dependence of any other software. Next, the Augmented Machine Code is designed to operate with the help of other support routines and software including the machine’s operating system. This combination of machine instructions, operating system and support routines are defined as a “virtual machine”. This “virtual machine” exists as a software and hardware combination. Finally, the Virtual Machine Code is composed entirely of “virtual instructions” producing a “transportable” compiler. This transportable compiler is able to run on a variety of computers. When an entirely virtual instruction set is used, it is necessary to interpret or “simulate” the instruction set in software. Almost all compilers generate code for a virtual machine where some of the operations are interpreted by software or firmware (the low-level operating system kernel). Some formats of the output include Assembly Language (Symbolic) Format, Relocatable Binary Format and Memory-Image (Load-and-Go) Format. ELCT 897 Compilers and Languages 1 2. COMPILERS Compilers must perform two major tasks: analysis of the source program and synthesis of a machinelanguage program that will correctly implement the intent of the source program. Almost all compilers are “syntax-directed” which is the recognition of the structure of the statements, by the parser, within the source program. As shown in Figure 1, there are a number of blocks required to perform these two major tasks using a syntax-directed scheme. The scanner reads the source program and generates tokens, the lowest level symbols that define the programming syntax (structure). The parser reads the tokens and generates the syntactic structure for the next module. The recognition of the syntactic structure is a major part of the analysis task. Next, the semantic routines supply the meaning (semantics) or intent of the program based on the structure of the statements. An example of semantics would be to test if the addition of a number and a Boolean flag are logical (which they are not). The semantic routines will generate the Intermediate Representation (IR) that may go through an optimization block or directly go to the code generation block. The code generation block outputs the desired machine-language code for the target platform (or device). Throughout this entire process, all modules access and use the data stored in the symbolic and attributes table. Source Program Scanner Tokens Parser Syntactic Structure Semantic Routines IR Symbolic & Attributes Table Intermediate Representation Code Generator Optimizer Target Machine Code Figure 1 – Structure of a Syntax-Directed Compiler The “Scanner” begins by reading the source program, character by character, and groups these characters into words or recognized symbols defined by the programming language. These grouped words and symbols will be the output of the scanner and are called “tokens”. Tokens are the basic program entities (primitives) such as identifiers, integers, reserved words, symbols, delimiters, etc. Often, the actions of building tokens are driven by token descriptions outlined in a table. ELCT 897 Compilers and Languages 2 Here are some of the key tasks performed by the scanner: Puts the program in a compact uniform format. Throws out all comment statements. Process processor control directives (preprocessor directives). Enters preliminary information into the symbolic and attributes table so that a cross-reference listing can be generated. Formats and lists the source program. The “Parser”, given a formal syntax specification, typically as a “context-free grammar” (to be explained later in this document), reads the tokens and groups them into units, statements or sentences as defined by the rules of the grammar being used. As the syntax structure is recognized, the parser may either build the semantic routines directly or build a syntax tree that is representative of the syntax structure. Main outlined functions of the parser: Reads tokens and groups them into statements in accordance with the rules of the grammar used. Verifies that the syntax is correct and generates “syntax errors” for those statements improperly constructed. May use an “error recovery table” and automatically fix syntax errors. Generate correct syntax structure for the Semantic Routines. The “Semantic Routines” are the heart of the compiler. The Semantic Routines define how each construct; statement or sentence is checked and translated. All aspects of compilation can be formalized via “attribute grammar” which represents the semantic properties such as variable type, value or correctness. If the input sentences or constructs are syntactically correct, then the Semantic Routines will perform the actual translation creating another language output called “Intermediate Representation” (IR). As an example, consider the code segment: SumTotal = CurrentTotal + bool_Flag; The statement above is syntactically correct. The construction of the statement follows all the correct rules yet it violates the rules of semantics. Semantics are the meaning or intent of the language and it makes no sense to try to add the numerical value of “CurrentTotal” to some Boolean expression or flag. Semantics will check for the types of data values to verify that the statements are logical or make sense. This semantic checking is governed solely by the semantics of the language used. The Semantic Routines will perform the following: Check the static semantics of each construct. Check for legal syntax. Verify that the variables used have been previously defined. Verify that correct variable types are used for constructs. ELCT 897 Compilers and Languages 3 The “Optimizer” is optional for the next step of compiling. This phase can be extremely complicated and can easily increase the amount of time required to compile a program since they are designed to produce efficient machine code. A less expensive, stripped-down version of an optimizer is called a “Peep-Hole Optimizer”. This technique looks at vary small code segments and attempts to do simple, machine-dependent code improvements. Some examples of this simple improvement technique would be to remove all multiplications by the value of one or remove all statements where a zero is added to another statement. The next and final stage is the “Code Generator”. This translation stage should always be left separate and unique from the Semantic Routines since the output of this stage is targeted toward a specific platform or device. By keeping these stages separate, a high degree of flexibility is added to the compiler so that a number of different output devices can be targeted. This offers modularity and the ability to have an output that may be targeted toward a specific micro-controller or different operating systems (UNIX, Windows, Linux, etc.). The Code Generator takes the Intermediate Representation (IR) as an input and maps the output machine code for a target machine. This requires the knowledge of the details of the target machine whether it is “pure code” or a “virtual machine” that will have the output control combinations of operating system and hardware. Quite often, target templates can be created to match the low-level IR to the unique instruction sets of various targets offering a very high degree of flexibility. One type of compiler is called a “One-Pass” compiler. This is a simplified compiler that has no optimization section and merges code generation with semantics, which eliminates the IR. The disadvantage is the restrictions imposed on the number of target devices from the output of the code generator. One advantage to this technique is that the translator can compile programs very quickly since some intermediate steps have been removed. The programming language Pascal uses this technique [Fischer and LeBlanc 1980]. One of the earlier compilers that used the techniques based on some of the topics discussed was the GCC, the GNU C-Compiler [Stallman 1989]. The GCC was a highly optimized compiler that could support C or C++ languages and had machine description files, used as templates, that could control many computer architectures. Regardless of the compiler used, a great deal of effort goes into the writing and debugging of the semantic routines that are the heart of the compiler. ELCT 897 Compilers and Languages 4 3. LANGUAGES A language is comprised of three basic components: the alphabet, the grammar and the semantics. The alphabet is the set of atomic elements (primitives) used to construct the sentences of the language. Grammar will be used to determine if the sentence is legal within the language. The semantics are used to define the meaning or intent of the sentences and statements within the language. See Figure 2 below for an illustrated view of the basic components of a language. Language alphabet grammar semantics a, b, c, ... Figure 2 – Language Components Illustrated A complete, formal definition of a programming language must include specifications of the syntax (structure) and the semantics (meaning or intent). There is an almost universal use of Context-Free Grammar (CFG) that is used as a mechanism to define the syntactic specification. The Grammar is typically divided into “context-free” and “context-sensitive” components. Context-free syntax defines legal sequences of symbols, regardless of any previous notion of the meaning of the symbol used. A context-free syntax might say A = A + B; is syntactically correct but A = B +; is not. Not all program structures can be described as “context-free” since variable type compatibility and scoping rules would require a “context-sensitive” definition. For the statement A = A + B; all the rules of syntax are observed and it is a legal statement until the meaning of the statement is interpreted. If ‘A’ is a number and ‘B’ is a Boolean value, then the statement would violate the rules of “context-sensitive” syntax, since it is not logical that a numerical expression could be added to a Boolean value. For that reason, all statements cannot be exclusively defined and interpreted by only “context-free” grammar. Since CFGs have context-sensitive issues, it must be handled as semantics (meaning or intent). Therefore, semantic components are divided into two classes: “Static Semantics” and “Run-time Semantics”. “Static Semantics” define the context-sensitive restrictions that must be enforced. Some examples would include: all identifiers declared, operands to be type compatible, and procedures or functions called with the correct number of arguments. Static semantics augment the context-free specification. They tend to be relatively compact and easy to read, but are usually imprecise. Semantic checks are often found within compilers. “Attribute Grammar” is a formal way to specify semantics. For example, A = A + B; might be augmented with a type attribute (or property) for the two variables A and B and a predicate requiring type compatibility such as: (A.type = numeric) AND (B.type = numeric). ELCT 897 Compilers and Languages 5 “Run-time or execution semantics” specify what a program does or what it computes. Typically, these semantics are expressed formally in a language manual or report. Alternately, a more formal operational or interpreter model can be used. In such a model a program’s “state” is defined and program execution is described in terms of changes to that state. For example: A = 1; is the state component corresponding to ‘A’ is changed to a value of 1. Some other models would include the “Vienna Definition Language (VDL)” [Bjorner and Jones 1978] that embodies abstract program trees, a variety of IR. The modified tree is traversed and decorated with values to model program execution. “Axiomatic Definitions” [Gries 1981] may be used to model executions at a level more abstract than operational models. This model formally specifies the relationship (predicates) between program variables. The axiomatic approach is a good for deriving proofs of program correctness and avoids the details of implementation while focusing on how program variables altered. The axiom defining “ variable = expression” usually states that the predicate involving “variable” is true after the statement execution, if and only if the predicate obtained replaces all occurrences of “variable” by “expression” is true beforehand. Example: y = x+1; For y > 3 to be true, then the predicate “x+1>3” would have to be true before the test “y > 3” statement is executed. For the statements: y = 21; x = 1; if(y=21).. y equals the value of 21 after the execution of the first statement. If variable ‘y’ is completely independent of the variable ‘x’, then the test condition would evaluate to true. If the variable ‘x’ is an alias of ‘y’, then just before the test condition, y will equal x, which is the numerical value of 1, and the test condition will evaluate to false. It can be a challenging task to model a program completely using this technique. Since there is no mechanism to describe the program implementation considerations, a system running out of memory could not be modeled. “Denotational models” are more mathematical in form than operational models. Since they rely on the notion and terminology drawn from mathematics, denotation definitions are often fairly compact. A denotational definition can be viewed as a syntax-directed definition where the meanings of the constructs are defined in terms of meaning of its immediate constituents. Example of a function designed to operate on integers: eval(Var1 + Var2) = eval(Var1) is Integer and eval(Var2) is Integer => range(eval(Var1 + Var2)) else error. Consider the following code statement: (I <> 0) and ( K/I > 10); If the ‘and” statement is treated as an ordinary binary operator, then this statement will be evaluated as false. If precedence is established for the order of evaluation starting on the left, then the statement will evaluate as true since the denominator ‘I’ has to be established as nonzero before continuing to evaluate the statement on the right. This technique is called “short-circuit evaluation” and is often employed with Boolean operators. When short-circuit evaluation techniques are used then the Boolean statements will always be well defined. ELCT 897 Compilers and Languages 6 4. CONCLUSION “A compiler often serves as a de facto language definition”. The compiler takes the algorithms and code written from a source file and translates that human written codes and instructions into low-level machine-specific code. The output target can be written in pure machine code that can run on pure hardware without the support of any other routines or operating system. The output target may also be augmented machine code that is designed to operate in unison with both hardware and software. Typically the firmware target will be the kernel of an operating system. The output target of a compiler may be a pure “virtual machine”. This is designed as a set of virtual instructions that can be used on many different platforms, but will require the aid of a simulator or interpreter. The compiler can only operate successfully after a well-defined language specification has been established. This specification should include definitions of the language’s alphabet, the rules of the grammar and semantic checks required. Since there are countless programming languages, compilers are primarily distinguished by the types of output targets and the formats of the output. The evolution and improvements in languages and compilers has made the initial visions of “automatic programming” a reality. Today, programmers can select from countless compiler options that allow them to adjust the target output and perform higher-level diagnostics and debugging on the software source code. This improved flexibility has allowed today’s programmers to be relatively distanced (device-independent) from the low-level machine specific instructions required for the target machine. ELCT 897 Compilers and Languages 7