Genetic Algorithm


GENETIC ALGORITHMS FOR CONSTRAINT SATISFACTION PROBLEMS

A genetic algorithm (GA) is a population-based search method. Genetic algorithms are acknowledged as good solvers for tough problems. However, no standard GA takes constraints into account. This chapter describes how genetic algorithms can be used to solve constraint satisfaction problems.

I. WHAT IS A GENETIC ALGORITHM?

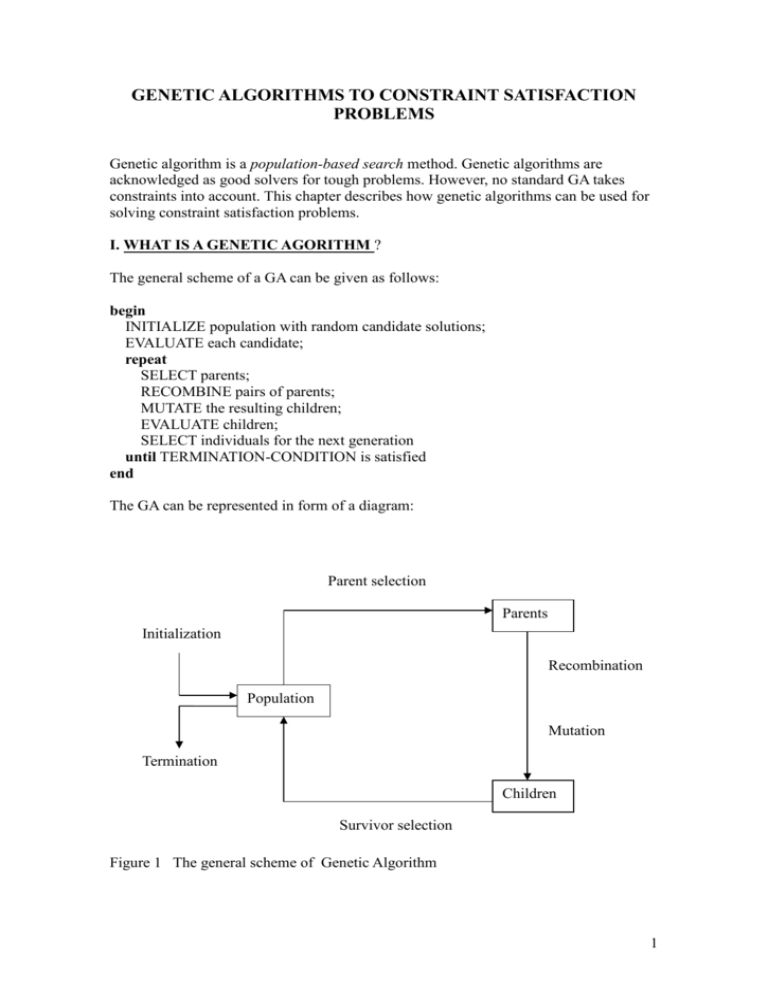

The general scheme of a GA can be given as follows:

begin
    INITIALIZE population with random candidate solutions;
    EVALUATE each candidate;
    repeat
        SELECT parents;
        RECOMBINE pairs of parents;
        MUTATE the resulting children;
        EVALUATE children;
        SELECT individuals for the next generation
    until TERMINATION-CONDITION is satisfied
end
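The scheme above can be sketched in Python as follows. This is only a minimal illustration under stated assumptions: the function `run_ga`, its parameters, and the toy "OneMax" problem used to exercise it (maximize the number of 1s in a bit string) are not part of the original text and are introduced here purely for demonstration.

```python
import random

def run_ga(init, fitness, select_parents, recombine, mutate,
           select_survivors, pop_size=20, max_generations=100, target=None):
    """Generic GA loop following the scheme above (all names are illustrative)."""
    population = [init() for _ in range(pop_size)]            # INITIALIZE
    for _ in range(max_generations):                           # repeat ... until
        best = max(population, key=fitness)                    # EVALUATE
        if target is not None and fitness(best) >= target:     # TERMINATION-CONDITION
            break
        children = []
        while len(children) < pop_size:
            p1, p2 = select_parents(population, fitness)       # SELECT parents
            for child in recombine(p1, p2):                    # RECOMBINE
                children.append(mutate(child))                 # MUTATE
        # SELECT individuals for the next generation
        population = select_survivors(population, children, fitness, pop_size)
    return max(population, key=fitness)

# Toy usage (assumed problem): OneMax on bit strings of length 12.
N = 12
rng = random.Random(0)

def init():
    return [rng.randint(0, 1) for _ in range(N)]

def tournament(pop, fit):
    pick = lambda: max(rng.sample(pop, 3), key=fit)
    return pick(), pick()

def one_point(p1, p2):
    cut = rng.randint(1, N - 1)
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]

def flip(chromosome):
    return [1 - g if rng.random() < 1 / N else g for g in chromosome]

def keep_best(pop, children, fit, size):
    return sorted(pop + children, key=fit, reverse=True)[:size]

best = run_ga(init, sum, tournament, one_point, flip, keep_best, target=N)
print(best, sum(best))
```

Passing the operators in as functions mirrors the text: the loop itself is problem-independent, and only the representation, the fitness function and the operators change from one application to another.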

The GA can be represented in the form of a diagram:

[Diagram with the components: Initialization, Population, Parent selection, Parents, Recombination, Mutation, Children, Survivor selection, Termination]

Figure 1 The general scheme of a genetic algorithm


This scheme clearly falls into the category of generate-and-test algorithms. The evaluation function provides a heuristic estimate of solution quality, and the search process is driven by the variation and selection operators. A GA has a number of features:

- GA is population-based.

- GA uses recombination to mix information from candidate solutions into a new one.

- GA is stochastic.

II. COMPONENTS OF GENETIC ALGORITHMS

The most important components of a GA are:

+ representation (definition of individuals)

+ evaluation function (or fitness function)

+ population

+ parent selection mechanism

+ variation operators (crossover and mutation)

+ survivor selection mechanism (replacement)

Representation

Objects forming possible solutions within the original problem context are called phenotypes; their encodings, the individuals within the GA, are called genotypes.

The representation step specifies the mapping from the phenotypes onto a set of genotypes.

Candidate solution, phenotype and individual are used to denote points in the space of possible solutions. This space is called the phenotype space.

Chromosome and individual can be used for points in the genotype space.

Elements of a chromosome are called genes. A value of a gene is called an allele.

Variation Operators

The role of variation operators is to create new individuals from old ones. Variation operators implement the elementary steps within the search space.

Mutation Operator

A unary variation operator is called mutation. It is applied to one genotype and delivers a modified mutant, its child or offspring.

In general, mutation is supposed to cause a random, unbiased change. Mutation also has a theoretical role: it can guarantee that the search space is connected.

Crossover Operator


A binary variation operator is called recombination or crossover. This operator merges

information from two parent genotypes into one or two offspring genotypes.

Similarly to mutation, crossover is a stochastic operator: the choice of what parts of

each parent are combined, and the way these parts are combined, depend on random

drawings.

The principle behind crossover is simple: by mating two individuals with different but

desirable features, we can produce an offspring which combines both of those features.

Parent Selection Mechanism

The role of parent selection (mating selection) is to distinguish among individuals based

on their quality to allow the better individuals to become parents of the next generation.

Parent selection is probabilistic. Thus, high quality individuals get a higher chance to

become parents than those with low quality. Nevertheless, low quality individuals are

often given a small, but positive chance, otherwise the whole search could become too

greedy and get stuck in a local optimum.

Survivor Selection Mechanism

The role of survivor selection is to distinguish among individuals based on their quality.

In GA, the population size is (almost always) constant, thus a choice has to be made on

which individuals will be allowed in the next generation. This decision is based on their

fitness values, favoring those with higher quality.

As opposed to parent selection, which is stochastic, survivor selection is often deterministic: for instance, ranking the unified multiset of parents and offspring and selecting the top segment (fitness-biased), or selecting only from the offspring (age-biased).

Initialization

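Initialization is kept simple in most GA applications: the first population is usually generated randomly. Special techniques can be used instead to produce a higher-quality initial population; whether this is worth the extra computational effort depends very much on the application at hand.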

Termination Condition

Notice that a GA is stochastic, and in most cases there is no guarantee that it will reach an optimum.

Commonly used termination conditions are the following:

1. The maximally allowed CPU time elapses.

2. The total number of fitness evaluations reaches a given limit.

3. For a given period of time, the fitness improvement remains under a threshold value.

4. The population diversity drops under a given threshold.


Note: Premature convergence is the well-known effect of losing population diversity too quickly and getting trapped in a local optimum.

Population

The role of the population is to hold possible solutions. A population is a multiset of

genotypes.

In almost all GA applications, the population size is constant and does not change during the evolutionary search.

III. HOW DO GENETIC ALGORITHMS WORK?

The details of how Genetic Algorithms work are explained below.

3.1 Initialization

While genetic algorithms are generally started with an initial population that is generated randomly, some research has been conducted into using special techniques to produce a higher-quality initial population. Such an approach is designed to give the GA a good start and speed up the evolutionary process.

Example: Some authors propose a GA for the exam timetabling problem in which the GA works only with feasible solutions, implying that the initial population must also be made up of feasible solutions. The GA is then run to improve the fitness of the initial population.

Example 3.1: In a simple exam timetabling problem, we can use a non-binary string representation for the chromosome because it is easy to understand and to work with. We use six positions representing six exams, with each position’s value being the timeslot assigned to that exam. We can generate the population randomly, assigning each exam a timeslot.

Day     AM                PM
        time1    time2    time3    time4
Day1                      e1       e3
Day2             e5       e6       e2,e4

If we randomly generate six numbers 3, 8, 4, 8, 6, 7 as six timeslots for e1-e6, then the

chromosome is 3 8 4 8 6 7.

If the population size is 5, an initial population can be generated randomly as follows:

index   chromosome     fitness
1       3 8 4 8 6 7    0.005
2       7 3 7 6 1 3    0.062
3       5 3 5 5 5 8    0.006
4       7 6 7 7 2 2    0.020
5       1 7 4 5 2 2    0.040
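A random initial population like the one in Example 3.1 could be generated as sketched below. The names `random_chromosome` and `random_population` are illustrative. The text does not say how the fitness values in the table are computed, so the sketch only generates chromosomes and does not attempt to reproduce those values.

```python
import random

NUM_EXAMS = 6   # exams e1..e6
NUM_SLOTS = 8   # timeslots 1..8, as in Example 3.1

def random_chromosome(rng):
    """One gene per exam; the gene value is the timeslot assigned to that exam."""
    return [rng.randint(1, NUM_SLOTS) for _ in range(NUM_EXAMS)]

def random_population(size, seed=None):
    rng = random.Random(seed)
    return [random_chromosome(rng) for _ in range(size)]

population = random_population(5, seed=42)
for index, chromosome in enumerate(population, start=1):
    print(index, " ".join(map(str, chromosome)))
```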

3.2 Reproduction

There are two kinds of reproduction: generational reproduction and steady-state

reproduction.

Generational Reproduction

In generational reproduction, the whole population is potentially replaced at each generation. The most common procedure is to loop N/2 times, where N is the population size: each time, two chromosomes are selected according to the current selection procedure and two children are produced from those two parents, so that N new chromosomes are produced in total.

Steady-state Reproduction

The steady-state method selects two chromosomes according to the current selection

procedure, performs crossover on them to obtain one or two children, perhaps applies

mutation as well, and installs the result back into that population; the least fit of the

population is destroyed.
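The two reproduction schemes can be contrasted in a short sketch. The function names and the operator arguments (`select`, `crossover`, `mutate`) are assumptions made for illustration. In the steady-state step, "the least fit of the population is destroyed" is interpreted here as: merge the children into the population, then drop the least fit members until the size is back to N.

```python
import random

def generational_step(population, fitness, select, crossover, mutate):
    """Replace the whole population: loop N/2 times, two children per loop (N even)."""
    new_population = []
    for _ in range(len(population) // 2):
        p1, p2 = select(population, fitness), select(population, fitness)
        c1, c2 = crossover(p1, p2)
        new_population += [mutate(c1), mutate(c2)]
    return new_population

def steady_state_step(population, fitness, select, crossover, mutate):
    """Install the children of one mating, then destroy the least fit members."""
    p1, p2 = select(population, fitness), select(population, fitness)
    children = [mutate(c) for c in crossover(p1, p2)]
    merged = sorted(population + children, key=fitness, reverse=True)
    return merged[:len(population)]      # population size stays constant

# Toy usage with hypothetical operators on 6-gene chromosomes.
rng = random.Random(1)
pop = [[rng.randint(1, 8) for _ in range(6)] for _ in range(6)]
fit = lambda ch: -sum(ch)                           # hypothetical fitness
pick = lambda p, f: max(rng.sample(p, 2), key=f)    # binary tournament
cross = lambda a, b: (a[:3] + b[3:], b[:3] + a[3:])
same = lambda ch: ch                                # no mutation in this demo

next_gen = generational_step(pop, fit, pick, cross, same)
next_ss = steady_state_step(pop, fit, pick, cross, same)
```

The generational step may lose the best individual found so far, whereas the steady-state step as written can never decrease the best fitness in the population.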

3.3 Parent Selection mechanism

The effect of selection is to return a probabilistically selected parent. Although this selection procedure is stochastic, this does not imply that a GA employs a directionless search. The chance of each parent being selected is in some way related to its fitness.

Fitness-based selection

The standard, original method for parent selection is Roulette Wheel selection or

fitness-based selection. In this kind of parent selection, each chromosome has a chance

of selection that is directly proportional to its fitness. The effect of this depends on the

range of fitness values in the current population.

Example: if fitness values range from 5 to 10, then the fittest chromosome is twice as likely to be selected as a parent as the least fit.

Suppose that, applying fitness-based selection to the population given in Example 3.1, we select the second chromosome 7 3 7 6 1 3 as our first parent and the fifth chromosome 1 7 4 5 2 2 as our second parent.
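Roulette wheel selection on the population of Example 3.1 can be sketched as follows. The function names are illustrative; the fitness values are those listed in the table of Example 3.1.

```python
import random

population = ["384867", "737613", "535558", "767722", "174522"]
fitness = [0.005, 0.062, 0.006, 0.020, 0.040]

def selection_probabilities(fitness):
    """Each chromosome's chance of selection is its share of the total fitness."""
    total = sum(fitness)
    return [f / total for f in fitness]

def roulette_wheel(population, fitness, rng=random):
    """Spin the wheel once: each slice is proportional to the chromosome's fitness."""
    spin = rng.uniform(0, sum(fitness))
    running = 0.0
    for chromosome, f in zip(population, fitness):
        running += f
        if spin <= running:
            return chromosome
    return population[-1]   # guard against floating-point round-off

probs = selection_probabilities(fitness)
for chromosome, p in zip(population, probs):
    print(chromosome, round(p, 3))
```

With these fitness values, the second and fifth chromosomes together receive roughly three quarters of the wheel, which is why they are the most likely pair of parents. The standard library call `random.choices(population, weights=fitness)` performs the same weighted draw.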

Rank-based selection


In the rank-based selection method, selection probabilities are based on a chromosome’s

relative rank or position in the population, rather than absolute fitness.
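One simple way to realize rank-based selection is to use the rank itself as the selection weight. This linear scheme is an assumption made for illustration; the text does not prescribe a particular mapping from rank to probability.

```python
import random

def rank_weights(fitness):
    """Weight = rank of the chromosome (1 = least fit, N = fittest)."""
    order = sorted(range(len(fitness)), key=lambda i: fitness[i])
    weights = [0] * len(fitness)
    for rank, i in enumerate(order, start=1):
        weights[i] = rank
    return weights

def rank_select(population, fitness, rng=random):
    """Draw one parent with probability proportional to its rank."""
    return rng.choices(population, weights=rank_weights(fitness), k=1)[0]

population = ["384867", "737613", "535558", "767722", "174522"]
fitness = [0.005, 0.062, 0.006, 0.020, 0.040]
print(rank_weights(fitness))   # [1, 5, 2, 3, 4]
print(rank_select(population, fitness, random.Random(0)))
```

Under this scheme the fittest chromosome is chosen with probability 5/15, however large its fitness advantage is, so a single outstanding individual dominates selection less than it would under fitness-proportional selection.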

Tournament-based selection

The original tournament selection method chooses K individuals at random and returns the fittest of them.
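A minimal sketch of tournament selection, using the population of Example 3.1 as data. The function name and the choice of sampling without replacement are assumptions.

```python
import random

def tournament_select(population, fitness_of, k=3, rng=random):
    """Pick k distinct individuals at random and return the fittest of them."""
    contestants = rng.sample(population, k)
    return max(contestants, key=fitness_of)

table = {"384867": 0.005, "737613": 0.062, "535558": 0.006,
         "767722": 0.020, "174522": 0.040}
parent = tournament_select(list(table), table.get, k=3, rng=random.Random(0))
print(parent)
```

The tournament size K controls the selection pressure: with K equal to the population size the fittest individual always wins, while K = 1 amounts to uniform random selection.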

3.4 Crossover Operator

The crossover operator is the most important operator in a GA. Crossover is a process that recombines bit strings by exchanging segments between pairs of chromosomes. There are many kinds of crossover.

One-point Crossover

The procedure of one-point crossover is to randomly generate a number (less than or equal to the chromosome length) as the crossover position. Then, keep the bits before the crossover position unchanged and swap the bits after the crossover position between the two parents.

Example: With the two parents selected above, we randomly generate a number 2 as

the crossover position:

Parent1: 7 3 7 6 1 3

Parent2: 1 7 4 5 2 2

Then we get two children:

Child 1 : 7 3| 4 5 2 2

Child 2 : 1 7| 7 6 1 3
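The example above can be reproduced with a few lines of Python. The function name is illustrative. As an assumption, a random crossover point is drawn from 1 to length-1 so that each child receives genes from both parents.

```python
import random

def one_point_crossover(p1, p2, point=None, rng=random):
    """Keep the genes before `point` and swap the tails; `point` is random if omitted."""
    if point is None:
        point = rng.randint(1, len(p1) - 1)
    return p1[:point] + p2[point:], p2[:point] + p1[point:]

parent1 = [7, 3, 7, 6, 1, 3]
parent2 = [1, 7, 4, 5, 2, 2]
child1, child2 = one_point_crossover(parent1, parent2, point=2)
print(child1)   # [7, 3, 4, 5, 2, 2]
print(child2)   # [1, 7, 7, 6, 1, 3]
```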

Two-point Crossover

The procedure of two-point crossover is similar to that of one-point crossover except

that we must select two positions and only the bits between the two positions are

swapped. This crossover method can preserve the first and the last parts of a

chromosome and just swap the middle part.

Example: With the two parents selected above, we randomly generate two numbers 2

and 4 as the crossover positions:

Parent1: 7 3 7 6 1 3

Parent2: 1 7 4 5 2 2

Then we get two children:

Child 1 : 7 3| 4 5| 1 3

Child 2 : 1 7| 7 6| 2 2
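A sketch of two-point crossover that reproduces the example above. The function name and the convention that the segment between positions `a` and `b` is swapped are illustrative choices.

```python
def two_point_crossover(p1, p2, a, b):
    """Swap only the genes between positions a and b; keep the head and the tail."""
    a, b = min(a, b), max(a, b)
    child1 = p1[:a] + p2[a:b] + p1[b:]
    child2 = p2[:a] + p1[a:b] + p2[b:]
    return child1, child2

parent1 = [7, 3, 7, 6, 1, 3]
parent2 = [1, 7, 4, 5, 2, 2]
child1, child2 = two_point_crossover(parent1, parent2, 2, 4)
print(child1)   # [7, 3, 4, 5, 1, 3]
print(child2)   # [1, 7, 7, 6, 2, 2]
```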

Uniform Crossover

In uniform crossover, each gene of the first parent has a 0.5 probability of being swapped with the corresponding gene of the second parent.


Example: For each position, we randomly generate a number between 0 and 1, for

example, 0.2, 0.7, 0.9, 0.4, 0.6, 0.1. If the number generated for a given position is less

than 0.5, then child1 gets the gene from parent1, and child2 gets the gene from parent2.

Otherwise, vice versa.

Parent1: 7 *3 *7 6 *1 3

Parent2: 1 *7 *4 5 *2 2

Then we get two children:

Child 1 : 7 7* 4* 6 2* 3

Child 2 : 1 3* 7* 5 1* 2
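The example above, in which the genes marked with an asterisk are the ones that were swapped, can be checked with the following sketch. The `draws` parameter is an illustrative addition that makes it possible to feed in the random numbers used in the example.

```python
import random

def uniform_crossover(p1, p2, draws=None, rng=random):
    """For each position, a draw below 0.5 keeps the genes in place; otherwise they swap."""
    if draws is None:
        draws = [rng.random() for _ in p1]
    child1, child2 = [], []
    for g1, g2, r in zip(p1, p2, draws):
        if r < 0.5:
            child1.append(g1)
            child2.append(g2)
        else:
            child1.append(g2)
            child2.append(g1)
    return child1, child2

parent1 = [7, 3, 7, 6, 1, 3]
parent2 = [1, 7, 4, 5, 2, 2]
draws = [0.2, 0.7, 0.9, 0.4, 0.6, 0.1]
child1, child2 = uniform_crossover(parent1, parent2, draws)
print(child1)   # [7, 7, 4, 6, 2, 3]
print(child2)   # [1, 3, 7, 5, 1, 2]
```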

3.5 Inversion

Inversion operates as a kind of reordering technique. It operates on a single

chromosome and inverts the order of the elements between two randomly chosen points

on the chromosome. While this operator was inspired by a biological process, it requires

additional overhead.

Example: Given a chromosome 3 8 4 8 6 7. If we randomly choose two positions 2, 5

and apply the inversion operator, then we get the new string: 3 6 8 4 8 7.
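The inversion example can be reproduced as follows. The function name is illustrative, and positions are 1-based and inclusive to match the example.

```python
def invert(chromosome, i, j):
    """Reverse the genes between 1-based positions i and j, inclusive."""
    i, j = min(i, j), max(i, j)
    return chromosome[:i - 1] + chromosome[i - 1:j][::-1] + chromosome[j:]

print(invert([3, 8, 4, 8, 6, 7], 2, 5))   # [3, 6, 8, 4, 8, 7]
```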

3.6 Mutation

Mutation has the effect of ensuring that all possible chromosomes are reachable. With

crossover and even inversion, the search is constrained to alleles which exist in the

initial population. The mutation operator can overcome this by simply randomly

selecting any bit position in a string and changing it. This is useful since crossover and

inversion may not be able to produce new alleles if they do not appear in the initial

generation.

Example: Assume that we have already used crossover to get a new string: 7 3 4 5 1 3.

Assume the mutation rate is 0.001 (usually a small value). Next, for the first bit 7, we

generate randomly a number between 0 and 1. If the number is less than the mutation

rate (0.001), then the first bit 7 needs to mutate. We generate another number between 1

and the maximum value 8, and get a number (for example 2). Now the first bit mutates

to 2. We repeat the same procedure for the other bits. In our example, if only the first bit

mutates, and the rest of the bits don’t mutate, then we will get a new chromosome as

below:

2 3 4 5 1 3
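The per-gene mutation procedure described above can be sketched as follows. The function name and parameters are illustrative. Note that, as in the description, the replacement value is drawn from the whole range 1 to 8, so it may occasionally equal the old value.

```python
import random

def mutate(chromosome, rate=0.001, max_value=8, rng=random):
    """Visit every gene; with probability `rate`, replace it by a random value in 1..max_value."""
    mutant = list(chromosome)
    for i in range(len(mutant)):
        if rng.random() < rate:
            mutant[i] = rng.randint(1, max_value)
    return mutant

child = [7, 3, 4, 5, 1, 3]
print(mutate(child, rate=0.001))                       # almost always unchanged
print(mutate(child, rate=1.0, rng=random.Random(0)))   # every gene redrawn
```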

IV. CONSTRAINT HANDLING IN GENETIC ALGORITHMS

There are many ways to handle constraints in a GA. At a high conceptual level we can distinguish two cases: indirect constraint handling and direct constraint handling.

Indirect constraint handling means that we circumvent the problem of satisfying constraints by incorporating them in the fitness function f such that an optimal value of f implies that the constraints are satisfied, and use the power of the GA to find a solution.


Direct constraint handling means that we leave the constraints as they are and ‘adapt’

the GA to enforce them.

Notice that direct and indirect constraint handling can be applied in combination, i.e., in

one application we can handle some constraints directly and others indirectly.

Formally, indirect constraint handling means transforming constraints into optimization

objectives.

4.1 Direct constraint handling

Treating constraints directly implies that violating them is not reflected in the fitness function, thus there is no bias towards chromosomes satisfying them. Therefore, the population will not become less and less infeasible w.r.t. these constraints. This means that we have to create and maintain feasible chromosomes in the population. The basic problem in this case is that the regular operators are blind to constraints: mutating one or crossing over two feasible chromosomes can result in infeasible offspring. Typical approaches to handling constraints directly are the following:

- eliminating infeasible candidates

- repairing infeasible candidates

- preserving feasibility by special operators

- decoding, i.e. transforming the search space.

Eliminating infeasible candidates is very inefficient, and therefore hardly applicable.

Repairing infeasible candidates requires a repair procedure that modifies a given

chromosome such that it will not violate constraints. This technique is thus problem

dependent.

The preserving approach amounts to designing and applying problem-specific operators that preserve the feasibility of parent chromosomes. Note that the preserving approach requires the creation of a feasible initial population, which can be NP-complete.

Decoding can simplify the problem search space and allow an efficient genetic

algorithm. Formally, decoding can be seen as shifting to a search space that is different

from the Cartesian product of the domains of the variables in the original problem

formulation.

4.2 Indirect Constraint Handling

In the case of indirect constraint handling, the optimization objectives replacing the constraints are viewed as penalties for constraint violation, and hence are to be minimized. In general, penalties are given for violated constraints, although some GAs allocate penalties for wrongly instantiated variables or use the distance to a feasible solution as the penalty.

Advantages of indirect constraint handling are:

- generality

- reduction of the problem to ‘simple’ optimization

- possibility of embedding user preferences by means of weights.

Disadvantages of indirect constraint handling are:

- loss of information by packing everything in a single number

- does not work well with sparse problems.

V. GENETIC ALGORITHM FOR 8-QUEENS PROBLEM

5.1 Solution Representation

A chromosome is a permutation of the numbers 1,…,8, and a given g = <i_1, …, i_8> denotes the board configuration where the k-th column contains exactly one queen, placed on the i_k-th row.

Example: the permutation g = <1, 2, 3, 4, 5, 6, 7, 8> represents a board where the queens are placed along the main diagonal. The solution space is now the set of all permutations of 1,…,8.

By using such a chromosome we restrict the search to board configurations in which horizontal constraint violations (two queens on the same row) and vertical constraint violations (two queens on the same column) do not occur. In other words, the representation satisfies “half” of the constraints by construction, and what remains to be minimized is the number of diagonal constraint violations.
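The quantity to be minimized can be computed directly from the permutation. In the sketch below (function name illustrative), two queens in columns a and b share a diagonal exactly when their row difference equals their column difference.

```python
from itertools import combinations

def diagonal_conflicts(g):
    """Number of queen pairs sharing a diagonal; g[k] is the row of the queen in column k+1."""
    return sum(1 for a, b in combinations(range(len(g)), 2)
               if abs(g[a] - g[b]) == abs(a - b))

print(diagonal_conflicts([1, 2, 3, 4, 5, 6, 7, 8]))   # 28: every pair is on the main diagonal
print(diagonal_conflicts([1, 5, 8, 6, 3, 7, 2, 4]))   # 0: a solution
```

A fitness function to be maximized could then be defined, for example, as the negative of this count; that choice is an assumption, since the text only states what is to be minimized.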

5.2 Crossover operator (cut and crossfill)

Given two parents, which are two permutations, the following mechanism creates two child permutations.

1. Select a random position, the crossover point, i ∈ {1, …, 7}.

2. Cut both parents into two segments after this position.

3. Copy the first segment of parent 1 into child 1 and the first segment of parent 2 into child 2.

4. Scan parent 2 from left to right and fill the second segment of child 1 with values from parent 2, skipping those that are already contained in it.

5. Do the same for parent 1 and child 2.

Example:

Parent1: 1 3 5| 7 6 2 4 8

Parent2: 2 1 8| 6 4 3 5 7

Child1: 1 3 5| 2 8 6 4 7

Child2: 2 1 8| 3 5 7 6 4
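The cut-and-crossfill steps can be sketched as follows and checked against the example. The function name and the helper `fill` are illustrative.

```python
import random

def cut_and_crossfill(p1, p2, point=None, rng=random):
    """Copy the head of one parent, then fill up with the other parent's values in order."""
    if point is None:
        point = rng.randint(1, len(p1) - 1)   # crossover point in {1, ..., 7} for 8 genes

    def fill(head, other):
        # Scan `other` from left to right, skipping values already in the child.
        return list(head) + [v for v in other if v not in head]

    return fill(p1[:point], p2), fill(p2[:point], p1)

parent1 = [1, 3, 5, 7, 6, 2, 4, 8]
parent2 = [2, 1, 8, 6, 4, 3, 5, 7]
child1, child2 = cut_and_crossfill(parent1, parent2, point=3)
print(child1)   # [1, 3, 5, 2, 8, 6, 4, 7]
print(child2)   # [2, 1, 8, 3, 5, 7, 6, 4]
```

Because each child is built from a prefix plus the missing values, it is always a permutation again, so the row and column constraints stay satisfied.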

5.3 Mutation operator

We select two positions in a given chromosome and swap the values at those positions.
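A minimal sketch of this swap mutation (function name illustrative). Choosing the two positions uniformly at random is an assumption; the text does not say how they are selected.

```python
import random

def swap_mutation(perm, rng=random):
    """Swap the values at two distinct, randomly chosen positions."""
    i, j = rng.sample(range(len(perm)), 2)
    mutant = list(perm)
    mutant[i], mutant[j] = mutant[j], mutant[i]
    return mutant

perm = [1, 3, 5, 2, 8, 6, 4, 7]
mutant = swap_mutation(perm, random.Random(0))
print(mutant)
```

Like cut and crossfill, the swap keeps the chromosome a permutation.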


Note that mutation will cause a small undirected change and crossover creates children

that inherit genetic material from both parents.

5.4 Parent selection and survivor selection

Parent selection (best 2 out of random 5): choose 5 individuals randomly from the population and take the best two as the parents that undergo crossover. This ensures a bias towards using parents with relatively high fitness.

Survivor selection (replace worst): after merging the population and the offspring, rank them according to fitness and delete the worst two.
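Both selection steps can be sketched as follows. The fitness used here (minus the number of diagonal conflicts) and the stand-in children are assumptions made only to keep the example self-contained.

```python
import random
from itertools import combinations

def fitness(g):
    """Hypothetical fitness: minus the number of queen pairs sharing a diagonal."""
    return -sum(1 for a, b in combinations(range(len(g)), 2)
                if abs(g[a] - g[b]) == abs(a - b))

def select_parents(population, rng=random):
    """Best 2 out of 5 randomly chosen individuals."""
    candidates = rng.sample(population, 5)
    candidates.sort(key=fitness, reverse=True)
    return candidates[0], candidates[1]

def replace_worst(population, offspring):
    """Merge, rank by fitness, and delete as many of the worst as there are offspring."""
    merged = sorted(population + offspring, key=fitness, reverse=True)
    return merged[:len(population)]

rng = random.Random(3)
population = [rng.sample(range(1, 9), 8) for _ in range(10)]   # random permutations
p1, p2 = select_parents(population, rng)
offspring = [p1[::-1], p2[::-1]]   # stand-in children; a real run would use crossover and mutation
new_population = replace_worst(population, offspring)
```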

5.5 Other issues

Recombination probability    100%
Mutation probability         80%
Population size              100
Initialization               random
Termination                  Solution or 10000 evaluations

VI. A GENETIC ALGORITHM FOR EXAM TIMETABLING PROBLEM

Burke et al. (1995) [1] proposed a genetic algorithm for solving the exam timetabling problem. This algorithm combines a direct representation with heuristic crossover operators to ensure that the most fundamental constraints are never violated. The heuristic crossover operators are used to propagate the most desirable features of the timetable in order to produce good-quality solutions.

6.1 Solution Representation

When using a GA to solve a combinatorial optimization problem, the first important step is to choose how to represent a solution of the problem as a chromosome. In applying a GA to the exam timetabling problem, the most logical approach is to directly encode solutions with events matched to periods. Figure 5.1 shows such an encoding for n events, where each gene in the chromosome represents the period in which a particular event is to be scheduled.

Event 1     Event 2     ...    Event n-1    Event n
Period 1    Period 3    ...    Period 2     Period 7

Figure 5.1 A direct representation of a timetable


6.2 The Creation of an Initial Population

The following algorithm is used to generate conflict-free graph colorings which form

the initial population.

For each population member:
    Generate a random ordering of exams
    Take each exam in turn according to that ordering:
        Find the first period in which the exam may be placed without conflict and so that the number of students does not go above a predefined maximum.
        Place the exam in that period.

This algorithm can quickly produce large populations of random feasible exam

timetables.
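The construction procedure above can be sketched as follows. The function name, the data layout (a dictionary of conflicting exams and a dictionary of student counts) and the sample data are assumptions made for illustration. A new period is opened whenever no existing period can take the exam, which is why the length of the timetable varies.

```python
import random

def random_feasible_timetable(exams, conflicts, students, max_students, rng=random):
    """Return a dict exam -> period (1-based) with no clashes and no overfull period.

    conflicts[e] is the set of exams that share a student with e;
    students[e] is the number of students sitting e.
    """
    order = list(exams)
    rng.shuffle(order)                 # random ordering of exams
    periods = []                       # periods[k] = exams placed in period k+1
    timetable = {}
    for exam in order:
        for k, placed in enumerate(periods):
            no_clash = all(other not in conflicts[exam] for other in placed)
            fits = sum(students[e] for e in placed) + students[exam] <= max_students
            if no_clash and fits:
                break                  # first suitable period found
        else:
            periods.append([])         # no suitable period: open a new one
            k = len(periods) - 1
        periods[k].append(exam)
        timetable[exam] = k + 1
    return timetable

# Hypothetical data: six exams, 40 students each, at most 100 students per period.
exams = ["e1", "e2", "e3", "e4", "e5", "e6"]
conflicts = {"e1": {"e2", "e3"}, "e2": {"e1"}, "e3": {"e1", "e4"},
             "e4": {"e3"}, "e5": set(), "e6": set()}
students = {e: 40 for e in exams}
max_students = 100
tt = random_feasible_timetable(exams, conflicts, students, max_students, random.Random(7))
print(tt)
```

Calling the function repeatedly with different random orderings yields different feasible timetables, which together form the initial population.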

Note: The method allows the length of the timetable to vary.

The random sequential graph coloring algorithm is used to generate the starting

population. It uses on average about twice as many periods as the optimal amount.

Then the GA evolves new timetables, possibly reducing the length. This approach

guarantees a feasible timetable and does not create a search space in which no solution

exists.

6.3 Crossover Operators

It is clear that the crossover operator should satisfy the properties of respect and

assortment given by Radcliffe.

Respect is the property that if an exam is timetabled to the same period in both parents

then it will be scheduled to that period in the child.

Assortment is the property that the operator can generate a child such that if Exam 1 is scheduled to Period 1 in the first parent and Exam 2 is scheduled to Period 2 in the second parent, then the child may have Exam 1 in Period 1 and Exam 2 in Period 2, provided that these are compatible.

The crossover operator works for the period i as follows:

The operator starts by looking at the first period. It takes exams scheduled in that period

(in both parents) and then uses an algorithm to select other exams so that none clash

with those already scheduled and the limit on the number of spaces is not violated. Once

this is completed, the crossover looks at period two and so on until all exams are placed.


Period i of child timetable:

- Take those exams scheduled in period i in both parents 1 and 2.

- Select extra exams from the exams scheduled in period i in either parent 1 or parent 2, or left over from period i-1.

(Any unscheduled exams are passed on to period i+1.)

Figure 5.2 A Heuristic Crossover Operator

Once an exam is selected, all other exams that clash with it are labeled as unscheduled

for that period.

The authors construct a number of different crossover operators based on the same

framework but using alternative selection algorithms. The operators are as follows.

Random

Exams are selected at random. This is closest to the standard uniform crossover.

Largest Degree

Exams are selected according to the number of other exams they conflict with.

Most Similar Exams

Exams are selected according to how many conflicting exams they have in common

with those already scheduled.

Latest Scheduled in Other Parent

Exams are selected according to where the same exam is scheduled in the other parent. Since unplaced exams are passed on to the next period, this increases the chances of shortening the length of the timetable.

Least Conflicting with Previous Period

Exams are selected so as to minimize the number of conflicts with exams in the

previously scheduled period.

6.4 Mutation Operator

Mutation, like crossover, must also ensure that a timetable remains feasible after its action. It cannot take any exam and shift it to another period at random, since this may cause a conflict between the moved exam and those already scheduled.

We choose to incorporate mutation into the crossover algorithm. This is done by adding

exams to the current search that would otherwise not be considered until a later period.

6.5 Fitness Calculation and Selection

The evaluation function can be made up of any timetabling related factors. For example,

we may focus on two particular common requirements:

- The length of the timetable

- The number of conflicts between exams in adjacent periods.

Given a space P of candidate solutions to a problem, the fitness function f(p) for p ∈ P measures the quality of a solution p.


Notice that the quality of a solution p may not vary smoothly as the genes comprising p

vary since the genetic operators such as crossover and mutation do not vary the gene

values smoothly.

It seems reasonable to distinguish between timetables in terms of fitness based on the

numbers and kinds of different constraints violated. For instance, if V(p) is the number

of violated soft constraints in candidate p, one could choose:

f(p) = 1/(1 + V(p))

so that f(p) lies in the interval (0, 1], reaching 1 when no soft constraint is violated.

If we have n kinds of soft constraints, w_i is the penalty associated with constraint type i, p is a timetable, and c_i(p) is the number of violations of constraints of type i in p, then the fitness function becomes:

f(p) = 1/(1 + \sum_{i=1}^{n} w_i c_i(p))
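The weighted fitness function can be evaluated as in the sketch below. The function name and the sample weights and violation counts are hypothetical.

```python
def fitness(violations, weights):
    """f(p) = 1 / (1 + sum_i w_i * c_i(p)), with violations[i] = c_i(p) and weights[i] = w_i."""
    penalty = sum(w * c for w, c in zip(weights, violations))
    return 1.0 / (1.0 + penalty)

weights = [5, 1]                    # hypothetical penalties for two constraint types
print(fitness([0, 0], weights))     # 1.0: no violations
print(fitness([2, 3], weights))     # 1 / (1 + 5*2 + 1*3) = 1/14
```

Setting every weight to 1 and summing all violations into a single count V(p) gives back the simpler form f(p) = 1/(1 + V(p)).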

6.6 Other Issues

The genetic algorithm for the exam timetabling problem uses:

- Generational Reproduction

- Population size = 200

- Rank-based selection mechanism

6.7 Remarks

Burke et al. [1] combine traditional CSP solving heuristics with genetic algorithms. The underlying motivation is to get the best of both worlds, with each approach compensating for the other's weakness: the greediness of the heuristics (which can lead to dead ends) and the blindness of the stochastic genetic search. The heuristics can be incorporated into the genetic operators, mutation and crossover.

VII. CONCLUSIONS

Constraint handling is not straightforward in a GA because the search operators such as

mutation and recombination are ‘blind’ to constraints. There is no guarantee that if the

parents satisfy some constraints, the offspring will satisfy them as well.

However, genetic algorithms can be effective constraint solvers when knowledge about the constraints is incorporated into the genetic operators, the fitness function, or repair mechanisms.

References

[1] Burke, E. K., Elliman, D. G., Weare, R. F., A Hybrid Genetic Algorithm for Highly Constrained Timetabling Problems, Proc. of 6th International Conference on the Practice and Theory of Automated Timetabling, Napier University, Edinburgh, UK, 1995.

[2] Craenen, B. C. W., Eiben, A. E. and Marchiori, E., How to Handle Constraints with Evolutionary Algorithms. In L. Chambers (Ed.), The Practical Handbook of Genetic Algorithms: Applications, 2nd Edition, volume 1, Chapman & Hall/CRC, 2001, pp. 341-361.

APPENDIX: Random Sequential Graph Coloring Algorithm

A coloring of a graph is an assignment of a color to each vertex of the graph so that no

two vertices connected by an edge have the same color.

One strategy for graph coloring is the following “greedy” algorithm.

Initially we try to color as many vertices as possible with the first color, then as many as possible of the uncolored vertices with the second color, and so on.

To color vertices with a new color, we perform the following steps.

1. Select some uncolored vertex and color it with the new color.

2. Scan the list of uncolored vertices. For each uncolored vertex, determine

whether it has an edge to any vertex already colored with the new color. If there

is no such edge, color the present vertex with the new color.
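The greedy procedure can be sketched as follows. The function name and the optional `rng` argument (which shuffles the vertex order, giving the random sequential variant) are illustrative. The edge list of the sample graph is an assumption, chosen only so that vertices 1, 3 and 4 can share a color.

```python
def greedy_coloring(vertices, edges, rng=None):
    """Color as many vertices as possible with color 0, then color 1, and so on."""
    neighbors = {v: set() for v in vertices}
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)
    uncolored = list(vertices)
    if rng is not None:
        rng.shuffle(uncolored)          # random sequential variant
    color_of = {}
    color = 0
    while uncolored:
        same_color = set()
        for v in uncolored:             # scan the list of uncolored vertices
            if not neighbors[v] & same_color:
                color_of[v] = color     # no edge to a vertex with the new color
                same_color.add(v)
        uncolored = [v for v in uncolored if v not in color_of]
        color += 1
    return color_of

# Hypothetical 5-vertex graph.
edges = [(1, 2), (1, 5), (2, 3), (2, 4), (3, 5), (4, 5)]
coloring = greedy_coloring([1, 2, 3, 4, 5], edges)
print(coloring)   # {1: 0, 3: 0, 4: 0, 2: 1, 5: 1}
```

In the timetabling setting, vertices correspond to exams, edges to pairs of exams that share a student, and colors to periods.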

Example: In Figure A1, having colored vertex 1 red, we can color vertices 3 and 4 red also.

[Figure A1: Coloring the vertices of a graph with the random sequential graph coloring algorithm. The graph has vertices 1 to 5; vertices 1, 3 and 4 are colored red.]

Several heuristics exist for choosing the order in which vertices are selected from the list of uncolored vertices.
