5 Algorithms Used for Searches


Special algorithms have been devised to complete a search for the user’s desired
information. These heuristic algorithms were created by adding steps to a classical
longest common subsequence (LCS) search technique. In addition, special string
representations for character decompositions had to be created. At various points in the
algorithm, further heuristic study could be used to tune the parameters needed to get
ideal results.

5.1 Overview of eRE Transformation

The user will input a string, which may fall into one of two categories. The first
category is a standard regular expression. This includes inputs as varied as
‘[語翻方]{1,3}’ or ‘house’. The second category is an extended regular expression (eRE).
The grammar of the eRE is described in chapter 4.

If the user inputs a standard regular expression, then the system will simply take

the input as-is, and search for occurrences of that regular expression within EDICT. On

the other hand, if the user input is not a standard regular expression, then the process

described below must be followed.

5.1.2 Parsing of Input String

The input string is taken from the user and passed to <Function name>, which serves
as a parser for extended regular expressions. This function in fact receives all input
strings, and it is within this function that the decision whether to call the other
functions is made. If the eRE escape sequence is found, the string is passed to the
transformation functions. If not, the transformation functions are skipped and the input
is used as a standard regular expression.

The first step in the parsing process is to proceed recursively through the input

string. This allows the nesting of escape sequences. The recursive steps taken here

follow the grammar described in figure XXX.

S = KK

K = KQK |

Q = reg:reg Valid Regular Expressions

Figure 1

is the production described in figure XXX of chapter 4.

For example, the character 語 could be represented as \k(LR<言:吾>) without using
recursion. However, one possible extension to this serialized description would be to use
the following: \k(LR<言:\k(TB<五:口>)>). This use of nesting gives a much more
fundamental description of the makeup of the character. As it turns out, the parsing step
can serve as a sort of “master function” for the transformation process. As a result, no
component other than the parser needs to change in order to allow recursive nesting.

The mechanism that allows this is quite simple. Recursive parsing of the input string
goes to the most deeply nested portion of the escape sequence first. In this example, that
is the \k(TB<五:口>) portion of the input. This substring is passed through the procedures
described in the following sections. Upon returning, a set containing the best match(es)
is put in its place. This leaves the string \k(LR<言:[吾]>) to be parsed. The process by
which [吾] is selected as the “best match” is described in the following sections.

5.1.3 Expanded String Creation

Each two-dimensional character contained in the decomposition dictionary will

eventually be used to create a serialized representation of itself. During the course of the

hierarchical score computation, each escape sequence entered by the user is compared to

each of the characters in the decomposition dictionary. This comparison is described in

full detail in the “Hierarchical Score Computation” section.

When a comparison needs to be made, the appropriate character is pulled from the
decomposition dictionary. The representation of this character, which varies based on
which class of character it is, is generated by the object containing the decomposed parts
of the character. Consider, for example, the character 明. This character is not stored in
its complete form. Instead, it is contained within a left-right object (see figure below).
This object contains a unit object, 日, in its left field, p’, and a unit object, 月, in its
right field, p’’. The string returned also contains the whole character, 明, in the key
field, c.

[c]f<[p’]:[p’’]>

c ∈ C, f ∈ F, p’, p’’ ∈ F

Figure 2

When called on for a comparison, the left-right object first calls its left object,
e ∈ A (which need not be a unit object; this process may continue recursively if the
object is defined as such). The left object returns its own string representation. The
same process occurs on the right object. These two representations are placed in the
proper locations in the left-right string structure.

明 LR<日:月>

Figure 3

In addition, the key used to find this left-right object will be placed in the string

representation. The key is the character that has been decomposed. So, for this example,

the final string that is used to represent the serialized form of 明 is shown in figure XXX

above. This string is returned to the requester for the comparison to be made.
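
The serialization described in this section can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the implementation used in this project: the class names `Unit` and `Composite`, their field names, and the choice to place the key directly in front of the structure tag (following the pattern in Figure 2) are all hypothetical. It also assumes that a nested composite includes its own key when serialized.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Unit:
    """A character that is not decomposed any further (hypothetical name)."""
    char: str

    def serialize(self) -> str:
        return self.char


@dataclass
class Composite:
    """A decomposed character: key c, structure tag f, and parts p', p''."""
    key: str        # the whole character, e.g. "明"
    form: str       # structure tag, e.g. "LR" (left-right) or "TB" (top-bottom)
    first: "Node"   # p'  (left or top part)
    second: "Node"  # p'' (right or bottom part)

    def serialize(self) -> str:
        # Each part produces its own representation, recursively if needed,
        # and the results are placed into the f<p':p''> structure.
        return (f"{self.key}{self.form}"
                f"<{self.first.serialize()}:{self.second.serialize()}>")


Node = Union[Unit, Composite]

ming = Composite("明", "LR", Unit("日"), Unit("月"))
print(ming.serialize())
```

Because each part serializes itself, a composite nested inside another composite needs no special handling.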

5.1.4 Hierarchical Score Computation

The algorithm used to compute hierarchical scores requires that the eRE in question
be compared to each extended string representation in the dictionary. The algorithm in
place is a heuristic, as there is no definitive way to determine what the “best match” is.
This limitation was taken into account in the final version: the search criteria can be
modified quite easily by changing a few variable values.

The first step in the score computation is to make a call to the

“commonCharacters” function. This function takes the input string and compares it with

a string from the decomposition dictionary. A simple count is done on each character

found in the input string to see how many times it occurs in the dictionary string.

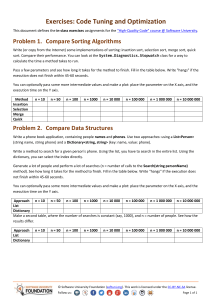

Example XX: User input: ‘cat’ Dictionary String: ‘attached’

The algorithm goes one character at a time through the input string, in this case ‘cat’.
A running sum of the number of matches is kept, and once the end of the input string is
reached, the sum is returned to the caller. The results from example XXX are shown in
table XXX.

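| Iteration | Current letter | Matches for this letter | Running total of sum | Total common characters |
|-----------|----------------|-------------------------|----------------------|-------------------------|
| 0         | ‘c’            | 1                       | 1                    |                         |
| 1         | ‘a’            | 2                       | 3                    |                         |
| 2         | ‘t’            | 2                       | 5                    |                         |
| Done      |                |                         |                      | 5                       |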

Table 1
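
The count walked through in Table 1 can be sketched in a few lines of Python. The function name follows the “commonCharacters” function mentioned in the text, but the signature and body here are assumptions made for illustration rather than the project's actual code.

```python
def common_characters(user_input, dictionary_string):
    """Sum, over each character of the input, its occurrences in the dictionary string."""
    running_total = 0
    for ch in user_input:
        # count() returns how many times ch occurs in the dictionary string
        running_total += dictionary_string.count(ch)
    return running_total


print(common_characters("cat", "attached"))  # 1 + 2 + 2 = 5
```

Note that the count is not symmetric: swapping the two arguments can give a different total, so the user input must always be the string that is iterated over.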

This score is stored as part of a tuple. The next part of the tuple is calculated as

the normalized longest common subsequence found between the input and each character

in the decomposition dictionary. The length of LCS found is divided by the length of the

decomposition string in order to make a fair comparison. This is because, for matching

purposes, the ratio of the string that is in the LCS is a better indicator of relevance than

the absolute length. In other words, an LCS of 8 on a string of length 24 would only

score 8/24=0.333, while an LCS of 7 on a string of length 10 would score 7/10=0.70.

This would cause the LCS of length 7 to be ranked as a better match than the longer, but

less relevant, LCS of length 8.
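
The normalization can be illustrated with a short sketch. The LCS length is computed with the textbook dynamic-programming method; the function names `lcs_length` and `normalized_lcs` are hypothetical, and the example strings are chosen only for illustration.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of a and b (textbook DP)."""
    prev = [0] * (len(b) + 1)  # row for the previous character of a
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def normalized_lcs(user_input, dictionary_string):
    """LCS length divided by the length of the decomposition string."""
    if not dictionary_string:
        return 0.0
    return lcs_length(user_input, dictionary_string) / len(dictionary_string)


# The comparison from the text: a shorter LCS on a short string outranks
# a longer LCS on a long string.
print(8 / 24 < 7 / 10)
print(normalized_lcs("LR<言:吾>", "語LR<言:吾>"))
```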

For both the common character computation and the longest common

subsequence computation, there are possible pre-processing steps. Both computations

search for any indexes that are used to represent unprintable characters. For those input

by the user, the index number will be preceded by the “#” symbol. Those in the

decomposition dictionary are not preceded by ‘#’. The pre-processing step takes place

before moving through the list that has been provided to each function. The list is

searched for either […,‘#’,‘n’,‘n’,…] in user input or […,‘n’,‘n’,…] in dictionary strings (where ‘n’ represents a

digit from 0-9), which will be changed to […,‘nn’,…] in the list. This allows the LCS

and Common character computations to proceed without any in-line changes.
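
A sketch of this index-merging step is given below. It assumes that indexes are exactly two digits long, as the ‘n’,‘n’ pattern in the text suggests, and that the strings have already been split into lists of single-character tokens. The function name `merge_indexes` and the `prefixed` flag (true for user input, false for dictionary strings) are hypothetical.

```python
DIGITS = set("0123456789")


def merge_indexes(tokens, prefixed=True):
    """Collapse a two-digit index into a single 'nn' token.

    prefixed=True  expects '#', 'n', 'n' (user input) and drops the '#'.
    prefixed=False expects 'n', 'n' (dictionary strings).
    """
    out = []
    i = 0
    while i < len(tokens):
        start = i + 1 if prefixed else i  # position of the first digit
        marker_ok = (not prefixed) or tokens[i] == "#"
        if (marker_ok and start + 1 < len(tokens)
                and tokens[start] in DIGITS and tokens[start + 1] in DIGITS):
            out.append(tokens[start] + tokens[start + 1])
            i = start + 2
        else:
            out.append(tokens[i])
            i += 1
    return out


print(merge_indexes(list("LR<#12:月>")))
print(merge_indexes(list("LR<12:月>"), prefixed=False))
```

After this step both sides carry the same ‘nn’ token, so the scoring functions can compare list elements directly.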

U = f<a’:a’’>

V = f’<a’’’:a’’’’>

Count = Σ count(u ∈ V), ∀u ∈ U

Figure 4

Additionally, the LCS function has two other pre-processing steps. The first is to

allow the use of the ‘*’ character inside eRE escape sequences. This pre-processing

simply replaces the ‘*’ character with the character in the same position in the

decomposition dictionary string. The other pre-processor is used to allow the use of

‘[ABC]’ (the [ ] brackets) within the eRE escape sequences (where A, B, and C are each

an individual character or index of an unprintable character). This is accomplished by

checking the character in the corresponding position of the decomposition dictionary

string for membership within the set (characters between the [ ]) entered by the user. If

there is a match, then the matching character is put in place of the set.
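
The two LCS-specific pre-processing steps might look roughly like the sketch below. It works on plain strings and assumes that the user pattern and the dictionary string are already aligned position by position (for example, with any key character stripped). The text does not say what happens when no member of a set matches, so leaving the set unchanged in that case is an assumption, as is the function name `expand_wildcards`.

```python
def expand_wildcards(pattern, target):
    """Resolve '*' and '[ABC]' in pattern against the aligned target string."""
    out = []
    i = 0
    while i < len(pattern):
        pos = len(out)  # position in the expanded pattern (a set counts as one slot)
        ch = pattern[i]
        close = pattern.find("]", i) if ch == "[" else -1
        if ch == "*" and pos < len(target):
            # '*' takes whatever character the dictionary string has here
            out.append(target[pos])
            i += 1
        elif ch == "[" and close != -1:
            members = pattern[i + 1:close]
            if pos < len(target) and target[pos] in members:
                out.append(target[pos])            # matching member replaces the set
            else:
                out.append(pattern[i:close + 1])   # assumption: keep the set as-is
            i = close + 1
        else:
            out.append(ch)
            i += 1
    return "".join(out)


print(expand_wildcards("LR<言:*>", "LR<言:吾>"))
print(expand_wildcards("LR<[日月]:*>", "LR<月:日>"))
```

Since the function returns a new string, the caller's original pattern is untouched and can be reused for the next dictionary entry.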

These pre-processing steps work on the LCS because the iteration amongst all of

the strings from the decomposition dictionary takes place in the function that calls LCS().

Because of this, the strings input to the function can be altered as needed, as on the next

LCS call, the string will be passed again in its original state, without any of the changes

made by the pre-processing steps of the previous iteration.

5.1.5 Finding Best Match from Scores

The scheme used for locating the “best match” among the results of the searching
algorithm described above is simple. The results from the above steps are stored in a list
whose entries each contain the character found in the decomposition dictionary, the
common character score, and the LCS score. These entries are sorted in hierarchical order,
with the common character score as the major criterion and the LCS score as the minor
criterion. To accomplish this order, they are first sorted by LCS score, and the result is
then sorted by common character score. The second sort must be stable, so that entries
with equal common character scores keep their LCS ordering. The approach was inspired by
radix sort.

Sort (L):

Given a List, L, composed of many l: l = (c, count, lcs), c ∈ C

Sort = sort(L by lcs)

Sort’=sort(Sort by count)

Return Sort’

Figure 5
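
The two-pass sort in Figure 5 can be sketched in Python, whose built-in `sorted` is stable, so ties in the second pass keep the order established by the first. Sorting in descending order (best scores first) is an assumption, and the scores in the example are made up purely for illustration.

```python
def hierarchical_sort(results):
    """Sort (c, count, lcs) tuples by count (major) then lcs (minor), best first."""
    by_lcs = sorted(results, key=lambda r: r[2], reverse=True)   # minor key first
    return sorted(by_lcs, key=lambda r: r[1], reverse=True)      # then major key


# Hypothetical scores, not actual search results.
scored = [("A", 3, 0.5), ("B", 5, 0.2), ("C", 5, 0.9), ("D", 3, 0.7)]
print(hierarchical_sort(scored))
```

The same ordering could be obtained in one pass with a tuple key such as `(count, lcs)`; the two-pass form is shown because it mirrors the radix-sort-style description in the text.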

The current implementation uses only those in the highest tier of the rankings as

the acceptable results. It is, however, possible to expand these by altering the selection

criteria for what strength of match will be accepted. The purpose behind the hierarchical

sort can be seen by examining the table of actual search results below. There are some

less-relevant search results that gain higher LCS scores than some in the highest tier

(most common characters level). However, these less-relevant results are ignored because

they do not appear on the highest level of the common character search.

INSERT TABLE FROM SEARCH RESULTS BELOW. MODIFY THE

EXPLANATION ABOVE TO FULLY DESCRIBE IT.

5.1.6 Creating and Using RE Generated by Best Match

After building the table of hierarchical results from which the “best matches” are
selected, the regular expression used for the dictionary search must be created. At this
point it is important to note that, while the aim of the process described in this paper
is to avoid the rigidity of requiring a regular expression for the search, generating a
regular expression or some other concrete expression is in fact required. Computers do
not have a sense of “judgment” whereby they can decide what a user intended. Instead,
concrete, fully specified data is required for comparison purposes. The algorithms
described in this paper serve as a middle layer that allows end-users more freedom in
specifying what they desire. This is demonstrated in figure XXX.

Given a Sorted List, L, composed of many l: l = (c, count, lcs), c ∈ C

Select B ⊆ L : ∀b ∈ B : b.count = max(L.count) ∧ b.lcs = max(L.lcs)

B is the set of Best Matches

Figure 6
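
The selection in Figure 6 might be sketched as follows. Following the prose description, the maximum LCS score is taken within the highest common-character tier rather than over the whole list; that reading, the function name `best_matches`, and the example scores are all assumptions.

```python
def best_matches(scored):
    """Return the characters with the top LCS score inside the top count tier."""
    if not scored:
        return []
    top_count = max(count for _, count, _ in scored)
    top_tier = [entry for entry in scored if entry[1] == top_count]
    top_lcs = max(lcs for _, _, lcs in top_tier)
    return [c for c, _, lcs in top_tier if lcs == top_lcs]


# Hypothetical scores: 日 has the highest LCS score overall but sits in a
# lower common-character tier, so it is ignored.
scored = [("言", 7, 0.875), ("語", 7, 0.875), ("読", 7, 0.875),
          ("話", 7, 0.7), ("日", 3, 1.0)]
print(best_matches(scored))
```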

To create the regular expressions, the characters with the highest LCS score are

selected from the highest tier of common characters score (Figure 6). Often, there will be

multiple characters found that constitute a “best match”. The set of possible characters is

returned from the best match function as a list. This list is then formed into a string with

‘[’ and ‘]’ on either side. For example, a list of best matches, B, with B = [‘言’, ‘語’, ‘読’]

will be transformed into the string ‘[言語読]’. This can then be presented to the user if

being used as part of an input method, or passed to a regular expression engine for

matching against a dictionary, as is done in this implementation. For example, if the user

had searched for:

日本\k(LR<言:*>)

This might be returned as 日本[言語読]. This regular expression could then be

compared with the EDICT dictionary, resulting in the user finding the desired word,

without knowing the full kanji compound.
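
Building the character class and running the final search could look like the sketch below. It uses Python's standard `re` module; the three-word list is a hypothetical stand-in for EDICT headwords, and the helper names `to_char_class` and `search` are not taken from the implementation. It assumes the best matches are ordinary characters with no special meaning inside a character class.

```python
import re


def to_char_class(matches):
    """Join the best-match characters into a regular expression set."""
    return "[" + "".join(matches) + "]"


def search(entries, pattern):
    """Return the entries that contain a match for the regular expression."""
    regex = re.compile(pattern)
    return [entry for entry in entries if regex.search(entry)]


char_class = to_char_class(["言", "語", "読"])
pattern = "日本" + char_class          # the escape sequence replaced by its set
headwords = ["日本語", "日本人", "英語"]  # hypothetical stand-in for EDICT
print(pattern)
print(search(headwords, pattern))
```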

5.2 Matching Algorithm

The steps described in section 5.1 are combined to form the matching algorithm. The
first step, parsing of the input string, proceeds recursively to the most deeply nested
eRE escape sequence within the user input. This escape sequence then passes through each
of the subsequent steps in turn, until finally a best match is found for it. When this
best match set, BM^k, is returned, the string representing this set is inserted in place
of the escape sequence in the original string, and the string parsing continues. An
example of how this proceeds can be seen in figure XXX.

Input: \k(LR<言:\k(TB<五:口>)>)

Recursive parser first finds: \k(TB<五:口>)

BM=BestMatch(\k(TB<五:口>)) = [吾]

Now Repeat:

Recursive parser resumes:

\k(LR<言:BM>) = \k(LR<言:[吾]>)

BM’ = BestMatch(\k(LR<言:[吾]>)) = [語]

Figure 7
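
The trace in Figure 7 can be reproduced with a small sketch. Instead of a recursive descent parser, it repeatedly rewrites the innermost escape sequence, which visits the escape sequences in the same innermost-first order. It assumes that escape sequences contain no literal parentheses. The function name `resolve` is hypothetical, and the lookup table below merely stands in for the real scoring and best-match steps.

```python
import re

# An innermost escape sequence: \k( ... ) with no parentheses inside.
INNERMOST = re.compile(r"\\k\(([^()]*)\)")


def resolve(pattern, best_match):
    """Replace escape sequences, innermost first, with their best-match sets."""
    while True:
        found = INNERMOST.search(pattern)
        if found is None:
            return pattern  # no escape sequences left
        char_set = "[" + "".join(best_match(found.group(1))) + "]"
        pattern = pattern[:found.start()] + char_set + pattern[found.end():]


# Stub standing in for the scoring pipeline (hypothetical results).
table = {"TB<五:口>": ["吾"], "LR<言:[吾]>": ["語"]}
print(resolve(r"\k(LR<言:\k(TB<五:口>)>)", table.__getitem__))
```

Input without any escape sequence passes through unchanged, which matches the handling of standard regular expressions described in section 5.1.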

5.3 Imperfect “Fuzzy” Matching: Why it Works

The goal of this project, returning what a user means rather than what he says
without the use of actual intelligence, is tricky. The problem is that inputs tend to be
specific and concise. Narrow inputs to searches tend to produce either narrow outputs
(exactly what is said) or overly broad, irrelevant outputs.

My algorithm’s approach to this problem is to have the user serialize the input to

describe what it is that he wishes to find. This serialization contains important structural

information on the characters that cannot be extracted from the Unicode encoding. Once

the user has input the serialized version, one side of the comparison is complete.

The second side of the comparison that must be made is to compare the characters

from the CJK language to what the user has provided. This process takes a considerable

amount of data preparation to execute. This is because Unicode simply treats each

character as an atomic unit, and is not organized in such a way to provide useful

structural information about individual characters. Therefore, the fuzzy matching system

must have a system in place to serialize the characters in the Unicode dictionary,

hopefully in a somewhat similar fashion to the way in which the user serialized the

characters.

Following this approach, there will be few if any perfect matches for a search. This
is because a user will often not serialize a character in the same manner as the Unicode
dictionary serialization. However, if the user has followed some simple guidelines
regarding general structure, there should be significant similarities between the user’s
attempt at serializing a character and the serialization of the character used by the
search application. Additionally, in the case that the user is able to fully specify the
sub-characters used in the serialization, there should be few, if any, instances of the
user-input serialization being a better match to a non-desired character than to the
desired character. The case where the user is able to fully input all of the
sub-components of his desired character should result in a single best match.

In the case where the user is unable to fully specify all of the sub-components of

the character he desires, the results will be somewhat different. Instead of one match

alone having the highest score, large numbers of partial matches will be found. It is

likely that many of these matches will have the same hierarchical score. These partial

matches will then be filtered with the “best match” algorithm into hierarchical results.

This algorithm allows for the discovery of results that do not perfectly match the search

input, but at the same time have definite relevance.

Increasing the amount of relevant information in this way will tend to lower the
overall scores. The reason is that the more information two independent sources (user and
application) provide to describe an object, the more divergent their descriptions will
be. However, at the same time that the relevant results have their scores diluted, the
scores of non-relevant characters will also be diluted.

An advantage of this approach is that searches can be made on incompletely

specified objects. In my implementation, this means that users can search for matches

using only parts of Chinese characters. This approach allows the algorithm to seem to

intelligently determine what the user intended to find.