Vocal interface with memory of speech for dependent people


VOCAL INTERFACE WITH A SPEECH MEMORY FOR DEPENDENT PEOPLE

Stéphane RENOUARD, Maurice CHARBIT and Gérard CHOLLET.

Département de Traitement du Signal et des Images,

Ecole Nationale Supérieure des Télécommunications,

CNRS-LTCI

{renouard, charbit, chollet}@tsi.enst.fr

ABSTRACT

We present a new approach to automatic speech recognition based on a speech memory. With this approach, statistical acoustic models are continuously adapted and a meaningful confidence measure is computed. Tests are conducted on the isolated-utterance database POLYVAR, recorded over a one-year period. An autonomous vocal interface, a ‘majordome’ (butler) of the smart house intended to help dependent people in their everyday life, is under development.

1. INTRODUCTION

Speech is regarded as the most natural, flexible and effective means of human communication. Progress in automatic speech recognition, spoken dialogue understanding and speech synthesis gives hope that we will one day be able to converse naturally with computers in any environment. However, automatic speech recognition (ASR) still faces many scientific and technological obstacles. For example, uncontrolled factors such as distorted or noisy speech and particular classes of speakers (children, elderly people, non-native speakers, disabled people) remain limiting factors.

Within the framework of the GET project ‘Smart Home and Independent living for persons with disabilities and elderly people’, our research team has developed a vocal interface (VI) for physically disabled people. This VI provides an alternative means of interacting with a WIMP (Windows, Icons, Menus and Pointers) environment and gives the user greater autonomy. ASR systems generally involve three distinct phases: training, recognition and adaptation. Training is the most time-consuming phase because it requires large audio databases, especially when building speaker-independent models. Even though this phase can be shortened for speaker-dependent models, the user must still devote considerable time to it.

We present here an original system based on a memory of speech [1,2]. Every utterance pronounced by the user is recorded so that post-processing applications such as model adaptation, confidence measurement or speaker verification can be performed. In this work, we use the memory of speech to perform speaker model adaptation and out-of-vocabulary word rejection. Section 2 gives an overview of the system and describes the memory of speech. Section 3 presents experiments on speaker model adaptation that use the memory of speech to shorten the ASR training phase. Section 4 presents results on out-of-vocabulary word rejection using a confidence measure computed from utterances that may be present in the memory of speech.

2. SYSTEM OVERVIEW

2.1 Speech module and interface

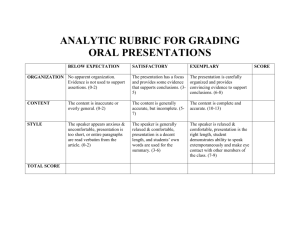

Figure 1 shows a block diagram of our speech interface. It was developed in two parts, the interface and the speech module, which allows us to build the speech module independently of the interface. It also makes it possible to run the interface on a client (PDAs, wearable computers…) and the speech module on a server, improving the portability and performance of the system.

The overall speech module was built using HTK 3.2 [3]. Acoustic analysis is based on the extraction of 12 Mel-Frequency Cepstral Coefficients (MFCC) plus energy, together with their first- and second-order derivatives. The speech signal is analysed in 20 ms frames weighted by a Hamming window, with a frame shift of 10 ms. The recognizer uses Hidden Markov Models (HMMs) with 25 phone models and 1 silence model. Each phone model has 3 states and 16 Gaussians per state. The baseline recognizer contains phone HMMs trained on the French read-speech corpus BREF, which contains over 100 hours of speech from 120 speakers (55 male, 65 female) [4]. The texts were selected from the French newspaper Le Monde so as to provide a large vocabulary (over 20,000 words) and a wide range of phonetic environments.
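The frame geometry and feature layout described above can be illustrated with a minimal pure-Python sketch. The 12 MFCCs plus energy give 13 static coefficients, and adding their first and second derivatives gives 39. This is not HTK's front end: the filterbank and cepstrum steps are omitted, and the sampling rate is a free parameter. The derivative uses the regression formula given in the HTK Book, with an assumed window of 2 frames on each side and edge frames replicated. All function names are hypothetical.

```python
import math
from typing import List, Sequence


def frame_count(num_samples: int, sample_rate: int,
                win_ms: float = 20.0, shift_ms: float = 10.0) -> int:
    """Number of complete analysis frames (20 ms window, 10 ms shift by default)."""
    win = int(round(sample_rate * win_ms / 1000))
    shift = int(round(sample_rate * shift_ms / 1000))
    if num_samples < win:
        return 0
    return 1 + (num_samples - win) // shift


def hamming(n: int) -> List[float]:
    """Hamming window w[k] = 0.54 - 0.46 cos(2 pi k / (n - 1)), for n >= 2."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def deltas(frames: Sequence[Sequence[float]], theta: int = 2) -> List[List[float]]:
    """Regression derivative d_t = sum_k k (c_{t+k} - c_{t-k}) / (2 sum_k k^2).

    Frames beyond either end of the utterance are replaced by the first/last frame.
    """
    T = len(frames)
    denom = 2 * sum(k * k for k in range(1, theta + 1))
    out: List[List[float]] = []
    for t in range(T):
        row = []
        for j in range(len(frames[t])):
            num = 0.0
            for k in range(1, theta + 1):
                nxt = frames[min(t + k, T - 1)][j]
                prv = frames[max(t - k, 0)][j]
                num += k * (nxt - prv)
            row.append(num / denom)
        out.append(row)
    return out


def full_features(static: Sequence[Sequence[float]]) -> List[List[float]]:
    """Append deltas and delta-deltas: 13 static values -> 39-dimensional vectors."""
    d1 = deltas(static)
    d2 = deltas(d1)
    return [list(s) + a + b for s, a, b in zip(static, d1, d2)]


# Usage: one second of (hypothetical) 8 kHz audio gives 99 frames.
print(frame_count(8000, 8000))  # 99
```

The derivatives are computed from the static frames alone, so the 39-dimensional vector adds no new measurement of the signal. It only makes the local trajectory of each coefficient explicit to the HMM.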

The interface was built using BORLAND DELPHI 6.0 and provides the classical functions of WIMP interfaces.

[Figure 1 diagram: INTERFACE and SPEECH MODULE blocks; microphone, acoustic analysis, grammar constraints, pattern matching, post-processing, recognition decision and speech memory; labelled flows: speech signal or recognition pattern, sending order, confirmation by user.]

Figure 1. System overview

2.2 Memory of speech

The speech memory is a voice database made up of the utterances pronounced by users. It can be viewed as a ‘live’ recorded speech database. We use the data in the memory for two tasks: re-estimating the user's statistical model and training a rejection model for ‘out of vocabulary’ words.
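The following is a minimal sketch of a speech memory as an append-only store of utterances, serving the two tasks above. The record fields (speaker, audio path, recognized word, user confirmation, confidence score) and all class and method names are hypothetical, because the organisation of the memory is not specified here. In this sketch, utterances confirmed by the user are treated as labelled adaptation data, and stored confidence scores are what a rejection model would be fitted on.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Utterance:
    speaker: str
    audio_path: str                      # location of the recorded signal (hypothetical)
    recognized: str                      # word output by the recognizer
    confirmed: Optional[bool]            # user confirmation, None if not asked
    confidence: Optional[float] = None   # confidence score, if computed


@dataclass
class SpeechMemory:
    utterances: List[Utterance] = field(default_factory=list)

    def record(self, utt: Utterance) -> None:
        """Every utterance is kept for later post-processing."""
        self.utterances.append(utt)

    def adaptation_set(self, speaker: str) -> List[Utterance]:
        """Utterances confirmed by the user: usable as labelled adaptation data."""
        return [u for u in self.utterances
                if u.speaker == speaker and u.confirmed is True]

    def rejection_scores(self, speaker: str) -> List[float]:
        """Stored confidence scores, e.g. to fit or re-tune a rejection threshold."""
        return [u.confidence for u in self.utterances
                if u.speaker == speaker and u.confidence is not None]


mem = SpeechMemory()
mem.record(Utterance("M00", "m00_0001.wav", "word01", True, 0.8))
mem.record(Utterance("M00", "m00_0002.wav", "word02", False))
print(len(mem.adaptation_set("M00")))  # 1
```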

At each new login on the interface, a speaker-independent model is loaded. A short training phase requiring the user's cooperation is then performed. The recorded utterances are used to adapt the speaker model and improve recognition performance (see Section 3). Adapted models are saved when the interface is closed.

The data in the memory allow us to compute a confidence measure (CM) for each pronounced utterance, using the method described in Section 4. With this technique, the CM threshold may be continuously adapted.

3. MODEL ADAPTATION

In this section we describe speaker model adaptation using limited training data. Phone models were trained on POLYPHONE data, while the POLYVAR database was used to simulate the content of the speech memory.

3.1 HMM Adaptation with a small quantity of data

It is commonly agreed that, for a given speech recognition task, a speaker-dependent (SD) system usually outperforms a speaker-independent (SI) system, as long as a sufficient amount of training data is available. When the amount of speaker-specific training data is limited, the gain from using such a model is not guaranteed. One way to improve performance is to exploit existing knowledge, in the form of a large multi-speaker database, so that a minimal amount of training data is sufficient to model the new speaker [5]. Such a training procedure is often referred to as speaker adaptation when the a priori knowledge is derived from a speaker-independent database, and as speaker conversion when the knowledge is derived from a different speaker.
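The adaptation used in the experiments of this section is maximum-likelihood re-estimation. However, the role of prior knowledge when speaker data are scarce is easiest to see in the maximum a posteriori (MAP) mean update of Gauvain and Lee [6]: $\hat{\mu} = (\tau \mu_{SI} + \sum_t \gamma_t x_t) / (\tau + \sum_t \gamma_t)$. The sketch below applies it to a single one-dimensional Gaussian, assuming the frame occupation probabilities $\gamma_t$ are already known. The prior weight `tau = 10.0` is a hypothetical value. With little data the estimate stays near the speaker-independent mean, and with more data it moves toward the speaker's own average.

```python
from typing import Sequence


def map_mean(mu_si: float, frames: Sequence[float],
             posteriors: Sequence[float], tau: float = 10.0) -> float:
    """MAP estimate of a Gaussian mean with a prior centred on the SI mean.

    mu_si      : speaker-independent mean (the prior knowledge)
    frames     : speaker-specific observations x_t
    posteriors : occupation probabilities gamma_t of this Gaussian
    tau        : prior weight (hypothetical value; larger = trust SI more)
    """
    occupancy = sum(posteriors)
    weighted_sum = sum(g * x for g, x in zip(posteriors, frames))
    return (tau * mu_si + weighted_sum) / (tau + occupancy)


print(map_mean(0.0, [], []))                 # 0.0: no data, SI mean kept
print(map_mean(0.0, [2.0] * 10, [1.0] * 10))  # 1.0: halfway with 10 frames
```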

Using data from the memory of speech, speaker adaptation was performed with the maximum likelihood method using the expectation-maximization (EM) algorithm [3,6]. For HMM parameter estimation, this algorithm is also called the Baum-Welch algorithm. It exploits the fact that the complete-data likelihood of the model is simpler to maximize than the likelihood of the adaptation (incomplete) data, in particular when the complete data admit sufficient statistics.
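The EM principle can be shown on a one-dimensional Gaussian mixture, the kind of density attached to each HMM state. This toy sketch is far simpler than HTK's HERest tool and has no state alignment. The E-step computes each component's posterior for every observation, which gives the expected sufficient statistics (occupancy, sum, sum of squares). The M-step re-estimates the weights, means and variances from those statistics in closed form. The starting "speaker-independent" parameters, the adaptation data and the variance floor are hypothetical. Apart from any effect of the variance floor, no iteration can decrease the likelihood of the adaptation data.

```python
import math
from typing import List, Sequence, Tuple

# (weights, means, variances) of a 1-D Gaussian mixture
Params = Tuple[List[float], List[float], List[float]]


def log_gauss(x: float, mu: float, var: float) -> float:
    return -0.5 * (math.log(2 * math.pi * var) + (x - mu) ** 2 / var)


def component_logs(x: float, params: Params) -> List[float]:
    w, mu, var = params
    return [math.log(wk) + log_gauss(x, mk, vk) for wk, mk, vk in zip(w, mu, var)]


def log_likelihood(data: Sequence[float], params: Params) -> float:
    total = 0.0
    for x in data:
        logs = component_logs(x, params)
        m = max(logs)  # log-sum-exp for numerical stability
        total += m + math.log(sum(math.exp(v - m) for v in logs))
    return total


def em_step(data: Sequence[float], params: Params, var_floor: float = 1e-3) -> Params:
    w, mu, var = params
    K = len(w)
    occ, s1, s2 = [0.0] * K, [0.0] * K, [0.0] * K  # sufficient statistics
    for x in data:
        logs = component_logs(x, params)
        m = max(logs)
        post = [math.exp(v - m) for v in logs]
        z = sum(post)
        for k in range(K):
            g = post[k] / z  # E-step: posterior of component k given x
            occ[k] += g
            s1[k] += g * x
            s2[k] += g * x * x
    new_w: List[float] = []
    new_mu: List[float] = []
    new_var: List[float] = []
    for k in range(K):  # M-step: closed-form ML update from the statistics
        if occ[k] < 1e-10:  # unused component: keep its previous parameters
            new_w.append(w[k])
            new_mu.append(mu[k])
            new_var.append(var[k])
            continue
        m_k = s1[k] / occ[k]
        new_w.append(occ[k] / len(data))
        new_mu.append(m_k)
        new_var.append(max(s2[k] / occ[k] - m_k * m_k, var_floor))
    total_w = sum(new_w)
    return [v / total_w for v in new_w], new_mu, new_var


si: Params = ([0.5, 0.5], [-1.0, 1.0], [1.0, 1.0])  # hypothetical SI start
speaker = [-2.1, -1.9, -2.0, -2.2, -1.8, 3.0, 3.1, 2.9, 3.2, 2.8]
p = si
for _ in range(20):
    p = em_step(speaker, p)
print([round(m, 2) for m in p[1]])  # means moved toward the speaker's data
```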

3.2 Databases and experimental setup

Speaker adaptation experiments were carried out on a corpus consisting of two speech databases: Swiss French POLYPHONE and POLYVAR [4]. The first, which contains 4,293 information-service calls (2,407 female and 1,886 male speakers) collected over the Swiss telephone network, was used to train a speaker-independent model for each phone. Tests were made on a part of the POLYVAR database consisting of repetitions of 17 French isolated words by 5 speakers. The native model from POLYPHONE was retrained by iterative re-estimation as described in Section 3.1. We used tokens 00 to 30 (510 isolated words) from speakers M00 to M04. Tokens 00 to 03 were used for training and tokens 04 to 30 for testing. After each re-estimation, the test data are recognized with the Viterbi algorithm.
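The growing training sets T1 to T4 can be written as a token-based split. This sketch assumes each recording is identified by a hypothetical (speaker, token, word) triple. Here T_k takes tokens 00 to k−1 of each of the 17 words, so it holds 17·k words per speaker, and the test set starts at token 04. The word labels are placeholders, not the POLYVAR vocabulary.

```python
from typing import List, Tuple

Item = Tuple[str, int, str]  # (speaker, token index, word label): hypothetical layout


def make_items(speaker: str, words: List[str], tokens: range) -> List[Item]:
    return [(speaker, tok, w) for tok in tokens for w in words]


def split(items: List[Item], k: int,
          first_test_token: int = 4) -> Tuple[List[Item], List[Item]]:
    """T_k: tokens 0..k-1 for adaptation; tokens >= first_test_token for testing."""
    if k > first_test_token:
        raise ValueError("training tokens would overlap the test tokens")
    train = [it for it in items if it[1] < k]
    test = [it for it in items if it[1] >= first_test_token]
    return train, test


words = [f"word{i:02d}" for i in range(17)]     # placeholder labels
items = make_items("M00", words, range(0, 31))  # tokens 00 to 30
for k in range(1, 5):
    train, _ = split(items, k)
    print(f"T{k}: {len(train)} words")          # 17, 34, 51, 68
```

Keeping the test tokens fixed while k grows means every adapted model is scored on the same test material. For the setup of Section 4.2 (T1 to T3, tests on repetitions 03 to 30), `first_test_token` would be 3.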

3.3 Results

Table 1 shows the Word Error Rate (WER, i.e. (number of substitutions + insertions) / total number of recognized words) for isolated-word recognition with speakers M00 to M04. The baseline model without retraining (T0) gives good overall performance (mean WER ≈ 10.6 %). This baseline WER is compared with the WER obtained after re-estimation on 17 words (T1), 34 words (T2), 51 words (T3) and 68 words (T4). For speakers M00 to M04, the WER decreases from T1 to T4. Using 4 tokens (T4) for training does not drastically improve recognition performance. In our case, adaptation on two tokens (T2) is sufficient to obtain very good recognition performance (mean WER ≈ 1.32 %).
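For reference, the usual definition of WER aligns each hypothesis with its reference by Levenshtein distance. It counts substitutions, deletions and insertions against the number of reference words. In an isolated-word test where every utterance yields exactly one recognized word, that definition and the one above both reduce to the substitution rate. The following is a minimal sketch of the general computation; it is not HTK's HResults tool, and the word labels are hypothetical.

```python
from typing import List, Sequence


def edit_errors(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Minimum number of substitutions + deletions + insertions (Levenshtein)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, 1):
            cur[j] = min(prev[j] + 1,              # deletion of r
                         cur[j - 1] + 1,           # insertion of h
                         prev[j - 1] + (r != h))   # substitution or match
        prev = cur
    return prev[-1]


def wer(refs: List[List[str]], hyps: List[List[str]]) -> float:
    """Corpus-level WER in percent over a list of utterances."""
    errors = sum(edit_errors(r, h) for r, h in zip(refs, hyps))
    total = sum(len(r) for r in refs)
    return 100.0 * errors / total


refs = [["word01"], ["word02"], ["word03"], ["word04"]]
hyps = [["word01"], ["word05"], ["word03"], ["word04"]]
print(wer(refs, hyps))  # 25.0 (one substitution out of four words)
```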

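TABLE 1

WER recognition results on 447 isolated words.

| Model / speaker | M00 | M01 | M02 | M03 | M04 |
|---|---|---|---|---|---|
| T0 | 11.6 % | 10.5 % | 8.8 % | 9.6 % | 11.2 % |
| T1 | 6.8 % | 4.0 % | 3.8 % | 6.2 % | 7.0 % |
| T2 | 3.1 % | 0.5 % | 1.7 % | 0.7 % | 0.6 % |
| T3 | 1.2 % | 0.2 % | 1.2 % | 0.5 % | 0.5 % |
| T4 | 0.4 % | 0.2 % | 0.2 % | 0.4 % | 0 % |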

Table 1 shows that adapting a speaker-independent model with a small quantity of data clearly improves on the baseline. Only two sets of words were necessary to obtain good overall performance for such an application.

4. REJECTING ‘OUT OF VOCABULARY’ WORDS

In this section, we test a rejection method for out-of-vocabulary words. Data from the POLYVAR database were used to simulate the content of the speech memory.

4.1 Rejecting ‘out of vocabulary’ words

One of the major problems that most interactive vocal services have to cope with is the rejection of incorrect data. Indeed, users of such services are generally not aware of the constraints of the system and may be talking in a noisy environment. Automatic speech recognition systems must therefore be able to reject incorrect utterances such as out-of-vocabulary words, speaker hesitations or noise tokens. The problem is then to find an acceptable trade-off between the different types of errors: substitution errors, false rejection errors (when a vocabulary word is rejected) and false alarm errors (when an incorrect utterance is recognized as part of the vocabulary).
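The three error types can be made explicit by classifying each test trial. This sketch assumes each trial records four things: whether the spoken item is in the vocabulary, the reference word, the recognized word, and the accept/reject decision. The function name, labels and trials are hypothetical. Following the definition above, a vocabulary word that is rejected counts as a false rejection, even if it was misrecognized.

```python
from collections import Counter
from typing import Optional


def outcome(in_vocab: bool, ref: Optional[str], hyp: str, accepted: bool) -> str:
    """Classify one trial into the error types listed above."""
    if in_vocab:
        if not accepted:
            return "false_rejection"   # a vocabulary word was rejected
        return "correct" if hyp == ref else "substitution"
    # out-of-vocabulary word, hesitation or noise token
    return "false_alarm" if accepted else "correct_rejection"


trials = [  # hypothetical trials: (in_vocab, reference, hypothesis, accepted)
    (True, "word01", "word01", True),
    (True, "word02", "word05", True),
    (True, "word03", "word03", False),
    (False, None, "word04", True),
    (False, None, "word02", False),
]
print(Counter(outcome(*t) for t in trials))
```

Moving the rejection threshold trades false alarms against false rejections, whereas substitutions depend mainly on the recognizer itself.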

The idea is to post-process the hypotheses delivered by the recognizer by computing a confidence measure for each recognition hypothesis. Several solutions are possible, such as a segmental approach based on a phone-level likelihood ratio estimated from a set of phonetic or prosodic parameters [5, 9]. Here we choose to estimate a confidence measure based on word-level likelihood ratios, which does not require the extraction of any additional parameters [9].

The confidence measure used in this work is the normalized log-likelihood ratio (LR) between the first and second best hypotheses in an N-best decoding approach [3]. The formalism of this strategy was developed in [5]. To estimate the confidence of an output word W for a given utterance X of T frames, we compute:

$$F(W) = \frac{1}{T}\,\mathrm{LR}(X/W) \qquad (1)$$

under the hypotheses H0, “the word belongs to the dictionary”, and H1, “the word does not belong to the dictionary”. The distributions P(F(W)|H0) and P(F(W)|H1) are approximated by taking:

$$\mathrm{LR}(X/W) \approx \begin{cases} \log(\#1/\#2) & \text{under } H_0 \\ \log(\#2/\#3) & \text{under } H_1 \end{cases} \qquad (2)$$

where #1, #2 and #3 are the scores of the first, second and third best hypotheses from the Viterbi decoder. We consider that log(#2/#3) is close to a model of $\overline{W}$, i.e. of the case where the spoken word is not in the vocabulary. To make accept (H0) or reject (H1) decisions, the confidence score must be compared with a threshold θ. The decision then becomes:

$$F(W) \begin{cases} \ge \theta & \Rightarrow \text{accept } W \\ < \theta & \Rightarrow \text{reject } W \end{cases} \qquad (3)$$
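Equations (1) to (3) can be sketched in a few lines, assuming the decoder returns per-hypothesis log-likelihood scores sorted best first. With that assumption, log(#1/#2) becomes a difference of log scores. The function names, the scores and the threshold are hypothetical. `h0_h1_scores` reflects one reading of equation (2): each decoded utterance contributes one sample to the H0 distribution and one to the H1 distribution.

```python
from typing import Sequence, Tuple


def confidence(log_scores: Sequence[float], num_frames: int) -> float:
    """Eq. (1): frame-normalised log-likelihood ratio of the two best hypotheses.

    log_scores: N-best log-likelihoods, best first (assumed layout).
    """
    if num_frames <= 0 or len(log_scores) < 2:
        raise ValueError("need T > 0 frames and at least two hypotheses")
    return (log_scores[0] - log_scores[1]) / num_frames


def h0_h1_scores(log_scores: Sequence[float], num_frames: int) -> Tuple[float, float]:
    """Eq. (2): one sample under H0 (#1 vs #2) and one under H1 (#2 vs #3)."""
    if num_frames <= 0 or len(log_scores) < 3:
        raise ValueError("need T > 0 frames and at least three hypotheses")
    h0 = (log_scores[0] - log_scores[1]) / num_frames
    h1 = (log_scores[1] - log_scores[2]) / num_frames
    return h0, h1


def decide(f_w: float, threshold: float) -> bool:
    """Eq. (3): accept W when F(W) >= threshold, otherwise reject it."""
    return f_w >= threshold


scores = [-4200.0, -4260.0, -4275.0]  # hypothetical 3-best log-likelihoods
print(confidence(scores, 120))        # 0.5
print(h0_h1_scores(scores, 120))      # (0.5, 0.125)
print(decide(0.5, 0.3))               # True
```

Dividing by T makes scores comparable across utterances of different durations. Without it, long words would get larger likelihood gaps simply because they have more frames.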

4.2 Database and experimental setup

Confidence measurement experiments were carried out on speaker M00 of the POLYVAR database. Silences were removed from each sound file. The reference model was trained on repetitions 30 to 220 (Tt); it gives a WER of about 0.1 %. Other models were trained using one repetition token (T1), two repetition tokens (T2) and three repetition tokens (T3). T0 is the native model from POLYPHONE (no retraining). WER scores are the same as in Table 1. Tests were done on repetitions 03 to 30. Results are represented as DET curves [8] and compared at the point where the false rejection probability equals the false alarm probability (the Equal Error Rate, EER).
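The EER point can be located by sweeping the threshold over the observed scores. At each threshold, the false rejection rate is the share of in-vocabulary (H0) scores below it, and the false alarm rate is the share of out-of-vocabulary (H1) scores at or above it. The sketch below returns the mean of the two rates at the threshold where they are closest. This is one common approximation, and interpolation schemes differ. `det_point` maps an error pair onto the normal-deviate axes of a DET plot [8] using `statistics.NormalDist` (Python 3.8+). The score lists are hypothetical.

```python
from statistics import NormalDist
from typing import Sequence, Tuple


def error_rates(h0: Sequence[float], h1: Sequence[float],
                thr: float) -> Tuple[float, float]:
    """False rejection and false alarm probabilities for the rule F(W) >= thr."""
    p_fr = sum(s < thr for s in h0) / len(h0)
    p_fa = sum(s >= thr for s in h1) / len(h1)
    return p_fr, p_fa


def eer(h0: Sequence[float], h1: Sequence[float]) -> float:
    """Approximate equal error rate: try every observed score as a threshold."""
    best_gap, best_rate = float("inf"), 1.0
    for thr in sorted(set(h0) | set(h1)) + [float("inf")]:
        p_fr, p_fa = error_rates(h0, h1, thr)
        if abs(p_fr - p_fa) < best_gap:
            best_gap, best_rate = abs(p_fr - p_fa), (p_fr + p_fa) / 2
    return best_rate


def det_point(p_fr: float, p_fa: float) -> Tuple[float, float]:
    """Map probabilities (strictly between 0 and 1) onto DET normal-deviate axes."""
    nd = NormalDist()
    return nd.inv_cdf(p_fa), nd.inv_cdf(p_fr)


h0 = [0.2, 0.6, 0.7, 0.9]  # hypothetical in-vocabulary confidence scores
h1 = [0.1, 0.3, 0.5, 0.8]  # hypothetical out-of-vocabulary scores
print(eer(h0, h1))         # 0.25
```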

4.3 Results

[Figure 2 plot: DET curves; legend “Training set used for model adaptation”: T0, T1, T2, T3, Tt.]

Figure 2. DET curves of the confidence measure for ‘out of vocabulary’ words, using training tokens Tt and T0 to T3.

Figure 2 shows that the reference model trained on Tt has an EER of about 10 %, while the native model (WER = 11.6 %) has an EER of about 30 %. The EER of the other models decreases as the quantity of adaptation data grows. In fact, our test is not robust to a degradation of recognition performance. The EER depends strongly on the WER, which itself depends on the number of repetitions used for training, and it drops sharply as the WER decreases. The robustness of our test therefore needs to be improved.

5. CONCLUSION

Our results indicate that only a few words are necessary to adapt a speaker-dependent model from a well-trained speaker-independent model. Adapted models show good overall performance with only 3 repetition tokens. The use of a speech memory is therefore quite relevant in this case. The model proposed for ‘out of vocabulary’ word rejection gives results that could be improved, in particular its robustness when speech recognition performance decreases. In the near future, we plan to develop a speaker verification task to complete the interface, using data from the speech memory.

In the longer term, the vocal interface with a speech memory will facilitate interaction with a ‘majordome’ capable of interfacing with the telephone, the intercom, or any system requiring a remote control (television, hi-fi equipment, etc.). The vocal interface with a speech memory should be considered a central element of the smart house, which thus becomes a truly communicating house.

6. REFERENCES

[1] D. Petrovska-Delacretaz and G. Chollet, “Searching Through a Speech Memory for Efficient Coding, Recognition and Synthesis”, in Phonetics and its Applications, A. Braun and H. R. Masthoff (eds.), Franz Steiner Verlag, 2002.

[2] T.C. Ervin II and J.H. Kim, “Speaker Independent Speech Recognition Using an Associative Memory Model”, Proc. Southeastcon '93, 1993, pp. 4-7.

[3] S. Young et al., “The HTK Book”, rev. Dec. 2002, Cambridge University Engineering Department, 2001-2002.

[4] L.F. Lamel, J.L. Gauvain and M. Eskénazi, “BREF, a Large Vocabulary Spoken Corpus for French”, Proc. EUROSPEECH '91, 1991.

[5] C.H. Lee and B.H. Juang, “A Study on Speaker Adaptation of the Parameters of Continuous Density Hidden Markov Models”, IEEE Transactions on Signal Processing, vol. 39, no. 4, 1991, pp. 806-814.

[6] J.L. Gauvain and C.H. Lee, “Maximum a Posteriori Estimation for Multivariate Gaussian Mixture Observations of Markov Chains”, IEEE Transactions on Speech and Audio Processing, vol. 2, no. 2, 1994, pp. 291-300.

[7] D. Genoud, G. Gravier, F. Bimbot and G. Chollet, “Combining Methods to Improve Speaker Verification Decision”, Proc. ICSLP '96, 1996.

[8] A. Martin, G. Doddington, T. Kamm, M. Ordowski and M. Przybocki, “The DET Curve in Assessment of Detection Task Performance”, Proc. EuroSpeech '97, vol. 4, 1997, pp. 1895-1898.

[9] N. Moreau, D. Charlet and D. Jouvet, “Confidence Measure and Incremental Adaptation for the Rejection of Incorrect Data”, Proc. ICASSP '00, vol. 3, 2000, pp. 1807-1810.

The authors would like to thank the Louis Leprince-Ringuet foundation for its financial contribution to the GET project ‘Smart Home and Independent living for persons with disabilities and elderly people’.