Latent Semantic Indexing and it`s place in Information Retrieval

advertisement

Latent Semantic Indexing and it’s place in Information Retrieval

By Michael Weller Autumn 2003

1. Abstract

This report investigates Latent Semantic Indexing and its place in Information

Retrieval. It provides an overview of the field of Information Retrieval, and its overall

purpose. The report describes current tools that are available for use in helping with

retrieving information on the Internet and quality measurement techniques used to

determine how successful a technique is at retrieving documents.

The report focuses on Latent Semantic Indexing, explaining what it is, its purpose, the

approach it takes and describes potential uses. It contrasts Latent Semantic Indexing

to other tools available and depicts the benefits and problems associated with the use

of LSI. The report also raises questions regarding the implementation of Latent

Semantic Indexing and how it could be improved.

Page 1 of 19

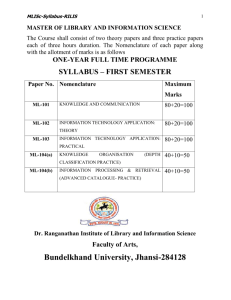

2. Contents

Latent Semantic Indexing and it’s place in Information Retrieval ................................ 1

1. Abstract .............................................................................................................. 1

2. Contents ............................................................................................................. 2

2.1.

Table of Figures ......................................................................................... 2

3. Discussion Notes ................................................................................................ 3

3.1.

Adding Meaning to Latent Semantic Indexing .......................................... 3

3.2.

Questions for Discussion ........................................................................... 4

4. Introduction ........................................................................................................ 4

5. Information Retrieval ......................................................................................... 4

5.1.

Quality Measurement; Recall, Precision, and Fallout ............................... 5

5.2.

Tools Available for Information Retrieval ................................................ 6

5.2.1.

Binary Matching ................................................................................ 7

5.2.2.

Vector Space Model ........................................................................... 7

5.2.3.

Latent Semantic Indexing .................................................................. 9

6. Latent Semantic Indexing .................................................................................. 9

6.1.

How does LSI work? ............................................................................... 10

6.1.1.

Content Search ................................................................................. 10

6.1.2.

Index Matrix Composition ............................................................... 10

6.1.2.1. Log-Entropy Weighting ............................................................... 11

6.1.2.2. Other Types of Weighting ........................................................... 11

6.1.3.

Term Space Modelling ..................................................................... 12

6.1.4.

Singular Value Decomposition ........................................................ 12

6.2.

Strengths of LSI ....................................................................................... 14

6.3.

Problems with LSI ................................................................................... 14

6.4.

The Uses and Potential Uses of LSI ........................................................ 15

6.4.1.

Relevance Feedback......................................................................... 15

6.4.2.

Information Filtering ........................................................................ 15

6.4.3.

Textual Coherence ........................................................................... 16

6.4.4.

Automated Writing Assessment ...................................................... 16

6.4.5.

Cross-Language Retrieval ................................................................ 16

7. Conclusions ...................................................................................................... 16

8. References ........................................................................................................ 17

9. Bibliography .................................................................................................... 18

2.1. Table of Figures

Figure 1 - Comparing Document Retrieval ............................................................. 5

Figure 2 - Ideal Retrieval Scenario .......................................................................... 6

Figure 3 - Worst Case Retrieval Scenario................................................................ 6

Figure 4 - Representing Document Space ............................................................... 8

Figure 5 - Simplified Vector Representation of the Index Matrix in Figure 4 ........ 8

Figure 6 - Vector Matching...................................................................................... 9

Figure 7 - Simplified Comparison of Term Space Modelling Diagrams ............... 12

Figure 8 - Pictorial Representation of SVD ........................................................... 13

Page 2 of 19

3. Discussion Notes

Latent Semantic Indexing has been found to work surprisingly well at finding more

relevant documents than keyword searching. It even finds documents that do not even

contain the specified keyword. However, it does only use pattern matching based

upon the frequency of content-describing words across a large collection of

documents.

With this found success, one question arises; can Latent Semantic Indexing be

improved outside its patented framework to improve the relevance of the returned

documents? One possible approach is to expand Latent Semantic Indexing to involve

meaning and context.

3.1. Adding Meaning to Latent Semantic Indexing

Although Latent Semantic Indexing is a great improvement over current search

technologies, it lacks the ability to determine whether a document is correct

according to the context and meaning as intended by the creator. Involving this

concept is not going to be easy, especially as years of research within the field of

Natural Language Processing has yet to prove fruitful.

It would be useless to use a search system to look at the meaning of every

document in its collection. This would not only be difficult from a processing

point of view, but the task of getting a machine to understand meaning has not yet

been solved, and is unlikely to do so for many years to come without a major

breakthrough. However, a more feasible approach would be to examine each

document’s abstract / summary. This would require less processing power, and

provides a general overview of the content of the entire document. However, this

overview would need to be constructed in a way which makes its content

unambiguous from a machine’s point of view, depicting the appropriate context.

For example, searching for a document which contains an image of a Jaguar

aircraft through the following query ‘image of a Jaguar’, would provide any

documents related to the Jaguar aircraft, the Jaguar car, the manufacturer and the

cat, that also contain the word image. If the system was able to search through the

abstract of each document to determine which of the different contexts was

appropriate, the search could then be narrowed down. The system would then be

able to prompt the user to clarify the search based upon the available options, and

provide only relevant documents.

For this to be at all feasible, a new standard for publishing information of the

Internet would be required. All documents would need to have a summary /

abstract provided within the metadata of the document, along with the main

keywords. These keywords could be used to provide an alternative method of

collating the documents.

By providing a context-sensitive description of the document, the system could

then verify that all documents found by its search are totally relevant. This could

Page 3 of 19

be implemented using some form of pattern matching between the user’s search

query and the metadata of the documents in the initial search results.

3.2. Questions for Discussion

Would updating web standards help in improving Latent Semantic

Indexing and Information Retrieval?

Is Latent Semantic Indexing the way forward or just a temporary solution

until Natural Language Processing is perfected?

Who should manage the Index Matrix?

There are a variety of possible implementations of Latent Semantic

Indexing, ranging from distributed to centralised systems. Which would

provide the most benefit?

4. Introduction

In the past, when searching for information on a particular topic, the best place to start

looking was the library. Here, the researcher would be able to browse through the

books in a particular section and find what was required, primarily by looking at the

contents and the index.

Whilst this is still available, a wider collection of sources is available thanks to the

development of the Internet, and with more and more users being connected to it. It is

all very well having access to all of these resources, but this is useless unless the

relevant resources can be easily found.

The most well known method of finding resources on the Internet is the use of search

engines, such as Google (www.google.com), AltaVista (www.altavista.co.uk) and

Yahoo (www.yahoo.co.uk). The use of search engines is only one method. There are

also electronic library catalogues and “the grepstring-matching tool in Unix” (Kolda,

T et al 1998, p.1).

With the Internet being so vast, it is essential for users to be able to find information

easily and efficiently. This becomes even more important for users that still use DialUp Internet connections, where the user is charged for the price of a telephone call.

Information Retrieval encapsulates this task and from this, Latent Semantic Indexing

(LSI) is a promising new tool being developed.

5. Information Retrieval

Information Retrieval is oriented around the indexing of documents, most of which

are textual based, but also those that contain images, other multimedia features, such

as video and sound, and may even be bibliographic data, relating to non-electronic

material.

Information Retrieval has existed since the 50s/60s, when the abilities of computers

began to show the potential for library automation.

The main focus of Information Retrieval is the “issues of how to find meaningful

index keywords” (The Everything Development Company 2000, p.1), with the aim of

Page 4 of 19

being able to organise them, allowing a user to use simple queries to find relevant

information. These queries should then be able to return documents (hits) that are

relevant to the search, even including those that the user would not have thought of

looking at.

In Information Retrieval, there are two main quality measures that are used to

measure performance of a system; recall and precision.

5.1. Quality Measurement; Recall, Precision, and Fallout

When looking for documents on the Internet, the ultimate goal is to retrieve the

ones that are only relevant to the topic being searched. To measure how well a

retrieval system has retrieved the results, three factors need to be considered; the

number of relevant documents (R), the number of relevant hits (RH) and the

number of hits (H).

Figure 1 - Comparing Document Retrieval

Recall compares the number of relevant hits to the number of relevant documents.

This is done using the formula:

Recall

RH

R

where the ideal result is where all the relevant documents are found, producing a

Recall value of 1.

Precision on the other hand compares the number of relevant hits to the number of

hits. This can be done using the formula:

Precision

RH

H

where the ideal result is where all of the hits returned are relevant, producing a

Precision value of 1.

Although Recall and Precision are the main quality measures, there is one other,

which is not as well known; Fallout.

Page 5 of 19

Fallout is the comparison of the number of Irrelevant Documents (ID) to the

number of hits. This can be done using the formula:

Fallout

ID

H

where the ideal result is where all of the hits returned are not irrelevant, producing

a Fallout value of 0.

Figure 2 - Ideal Retrieval Scenario

The best Information Retrieval system will be the one that has a Recall value of 1,

Precision value of 1 and a Fallout value of 0, returning all relevant documents and

no irrelevant ones (See Figure 2).

Figure 3 - Worst Case Retrieval Scenario

The worst case scenario is where the search provides hits which are completely

irrelevant (See Figure 3).

5.2. Tools Available for Information Retrieval

Searching for information can be difficult at the best of times. Information

Retrieval has led to a variety of tools being developed to help make the task

easier, some of which are more effective than others.

Page 6 of 19

5.2.1. Binary Matching

Binary Matching, also known as Boolean Matching, is one of the simpler

Information Retrieval techniques. It is based upon searching for keywords and

determining whether they are contained in a document or not.

The simplest format is where the user enters a keyword into a search engine

and a list of documents that contain that specified keyword is returned. Most

search engines demonstrate this “because it is fast and can therefore be used

online” (THOR 1999, p.1).

Binary matching makes use of Boolean queries, allowing the user to specify

connectives, such as AND, OR, and NOT, to improve the search results.

However, binary matching will tend to miss many documents as it only deems

a document to be relevant or irrelevant; there is no partial matching.

5.2.2. Vector Space Model

The Vector Space Model is designed to be an improvement over Binary

Matching by relaxing the restrictions caused by the ‘Relevant or Irrelevant’

concept. It, therefore, allows partially relevant matches to be found.

This modelling approach relies on the principle that the document’s meaning

can be determined by examining the terms (words) that make up the content of

the document.

Vector Space Modelling comprises of three main stages; document indexing,

term weighting and similarity coefficients.

Document Indexing is oriented around the extraction of all of the terms that

describe the content of the document. Many of the words, such as ‘the’ and

‘is’, do not describe the content and so can be excluded from the index list.

Once all of the ‘content-describing’ terms have been found, Term Weighting

is applied. This is done to highlight the more relevant terms.

There are a variety of ways to calculate the weighting for each term. These

include the frequency of each term, collection frequency and length

normalisation. To date, the weighting that provides the best results, in

accordance to the quality measures, makes use of “term frequency with

inverse document frequency and length normalization” (THOR 1999, p.2).

Page 7 of 19

Figure 4 - Representing Document Space

The results of applying the weighting to the index list are used to create the

Index Matrix, “relating each document in a corpus to all of its keywords”

(Belew, R 2000, p.86). A simplified approach is the construction of a table

with the documents listed across the top and the identified content terms listed

down the side (See Figure 4), with the weighting being equal to the number of

occurrences within the document.

Figure 5 - Simplified Vector Representation of the Index Matrix in Figure 4

This matrix can be represented using vectors (See Figure 5), but can be

difficult to visualise as they tend to work in a large number of dimensions

rather than the three that most people accept.

Once the weighting has been applied, the similarity coefficient stage is

applied. This looks at the terms within the query, with the appropriate

weighting applied, and compares them to terms found in the documents. The

score is calculated for each document by the use of either the dot product (See

Figure 6) or cosine similarity.

Page 8 of 19

Figure 6 - Vector Matching

The score that is calculated indicates the relevance of the document according

to the query entered based upon the appropriate weighting approach used, and

can be used to provide some form of ranking of the documents.

5.2.3. Latent Semantic Indexing

There are two main problems with language associated with context and

meaning. One of the problems is where objects can be referenced by multiple

terms. For example, a person giving a speech may be classed as the speaker or

spokesperson. This problem is known as Synonymy. The other problem is

where words can have more than one meaning. For example, the word Jaguar

has several different interpretations that can be applied to it based solely on

that word. It could be a car, a military aircraft, a company or a type of cat.

This type of problem is known as Polysemy.

If these two problems can be reduced, more relevant documents will be

returned than irrelevant ones. Latent Semantic Indexing aims to accomplish

this as it will be seen in Section 6.

6. Latent Semantic Indexing

Latent Semantic Indexing (LSI) was developed originally by Bellcore (now

Telcordia™ Technologies). It is an extension of Vector Space Modelling, designed to

provide search results related to a specified keyword, even if the keyword is not

contained within the document. It achieves this through the use application of

Singular Value Decomposition (SVD) to the index matrix as created by the Vector

Space Model.

LSI compares documents to determine if they contain many common words.

Documents that follow this trend are then deemed to be semantically close. This

approach is suggested by Yu, C et al (2003) to work surprisingly well, and also

generally mimics “how a human being, looking at content, might classify a document

collection” (Yu, C et al 2003, p.1). For this reason, LSI can return relevant results,

even without the documents containing the specified keyword.

One of the impressions that LSI provides is that it is apparently looking at the context

and is showing it’s comprehension of relationships. However, in practice, it does not

Page 9 of 19

do this. It applies pattern matching on word use, and establishes connections based

upon these patterns.

6.1. How does LSI work?

Latent Semantic Indexing is composed of four stages; content search, index matrix

composition, term space modelling, and application of Singular Value

Decomposition. All stages are applied to provide a refined set of search results.

6.1.1. Content Search

Before any processing can be undertaken, the content-describing words need

to be identified. At first glance, this may appear like an impossible task.

The main focus of narrowing down this search is based upon the principle that

natural language contains a lot of redundancy. This means that many of the

words can be removed and the remaining words will still describe the content.

Therefore, the first step is to remove these redundant words. There are many

approaches that could be used to achieve this process, but will generally all

achieve the same principle task.

Initially all words within every document in the collection are collated so that

all important words are available for use. All prepositions, conjunctions and

pronouns are removed along with common verbs and adjectives. Words,

which aid in the readability of the text, such as ‘therefore’, ‘however’ and

‘thus’, are also removed.

This process leaves a list, which is vastly reduced. However, it can be reduced

further by the removal of words that are contained in all documents, as they do

not distinguish the documents from each other. Words that are only contained

in one document are also removed, as they do not allow related documents to

be found. This provides the final list of content-describing words.

6.1.2. Index Matrix Composition

Once all of the content-describing words have been found, the index matrix,

which is also known as the term-document matrix, can be created. This index

matrix is the same as that created by the Vector Space Model (See Section

5.2.2).

As for the Vector Space Model, there are a variety of methods available to

calculate the term weighting. However, Latent Semantic Indexing usually

makes use of a “local and global weighting scheme” (Berry, M 1996, p.1).

The local weighting adjusts the relative importance of the terms within the

documents, whilst the global weighting adjusts the relative importance across

the collection of documents. These weightings are only applied to non-zero

elements within the matrix, and are done so by multiplying the global and

local weightings together.

Page 10 of 19

Mathematically, the resulting value for each element (a) is as follows:

atd fL(t , d ) * fG(t )

where fL() is the local weighting function, fG() is the global weighting

function and t is the term in document d.

From this it can be seen that fL(t,d) is the function that calculates the relative

importance of the term t within document d, as both the term and the

document need to be supplied. fG(t), however, only requires the term t to be

supplied, and it can be seen that this function calculates the relative

importance of the term across the entire collection.

There are a variety of different local and global weighting functions that have

been tried. The most advantageous scheme over the use of raw term

frequency was found by Dumais (cited in Berry, M 1996, p.1) to be the logentropy weighting scheme, providing a 40% advantage.

6.1.2.1.Log-Entropy Weighting

Log-entropy weighting is a local weighting function. It relies upon

dividing each of the columns values by the entropy of the column values.

The log of the result of this calculation is then taken to provide the

required weighting.

6.1.2.2.Other Types of Weighting

There are a variety of different weighting methods, which are classified as

either a local or a global weighting function.

Local weighting functions include term-frequency and binary weighting.

Binary weighting is based upon highlighting whether a term exists within a

document or not. The values of the matrix consist either of a 1 or a 0,

where 1 means that the term is in the document. Term-frequency takes

binary weighting one stage further. Rather than just stating whether a term

is contained within a document, this approach creates a matrix which

depicts how many times the term occurs in a given document.

Global weighting functions include GfIdf, and Inverse Document

Frequency (IDF) weighting.

IDF weighting is oriented around the “observation that people tend to

express their information needs using rather broadly defined, frequently

occurring terms” (Belew, R 2000, p.84). However, it is usually the less

frequently occurring terms that are more important, and so IDF makes use

of this fact to provide an alternative weighting approach. GfIdf is a

variation of IDF, which applies the principle of IDF and multiplies it by

the global term weighting.

Page 11 of 19

6.1.3. Term Space Modelling

Term Space Modelling is oriented around providing a graphical representation

of the Index Matrix. The process undertaken is the same as undertaken in

Vector Space Modelling (See Section 5.2.2). There are a variety of methods

accomplishing this modelling task, but all entail the representation of the

document in terms of the keywords (terms).

Figure 7 - Simplified Comparison of Term Space Modelling Diagrams

A graph can be created to show each of the documents. Each point on the

graph represents a document, whilst each of the axes represents a unique

keyword. As there are usually many unique keywords within a document, the

creation of a graph can be difficult to visualise as humans are only capable of

easily representing three dimensions (See Figure 7).

As it is difficult to represent, let alone manipulate documents in the scale of

thousands of dimensions, a method needs to be used to reduce this number

down to a more computationally manageable amount. This is done through

techniques, such as Singular Value Decomposition.

6.1.4. Singular Value Decomposition

Singular Value Decomposition (SVD) is an orthogonal decomposition and is

used to reduce the number of dimensions used to represent the documents. It

has been found that eigenfactor analysis is an efficient way to “characterize

the correlation structure among large sets of objects” (Belew 2000, p.156), and

SVD is just one of the techniques used to accomplish this.

Page 12 of 19

Figure 8 - Pictorial Representation of SVD

Singular Value Decomposition splits any rectangular matrix of size mxn into

three components; a mxn matrix (U), a nxn matrix (VT) and a nxn diagonal

matrix (S), which describes the relationship between the mxn matrix and the

nxn matrix (See Figure 7). This is done using the formula

X USV T

The mxn matrix (U) is composed of columns called left singular vectors

denoted as {uk}. The rows of the nxn matrix (VT) “contain the elements of the

right singular vectors, {vk}” (Wall, M et al 2003, p.1). The diagonal matrix

(S) contains the singular values, which are the elements within the matrix on

the diagonal. These diagonal values are non-zero, whilst all other elements

within the matrix are zero. This implies that

S diag ( S1 ,..., S n )

The singular vectors are ordered by sorting them from high to low. This

means that the highest singular value is in the upper left index of the diagonal

matrix.

Singular Value Decomposition for a mxm symmetrical matrix (X) is the same

as the result of solving the eigenvalue problem, or diagonalization. SVD

allows the calculation of

l

X (l ) u k sk vkT

k 1

The application of SVD on the symmetrical matrix (X) provides an important

comparison. It is found that X(l) calculated by the above formula is “the

closest rank-l matrix to X. The term “closest” means that X(l) minimises the

sum of the difference of the elements of X and X(l)” (Wall, M et al 2003, p.1).

It is possible to diagonalize XTX to calculate VT and S according to the

formula

X T X VS 2V T

Page 13 of 19

U can then be calculated by

U XVS 1

There are a variety of methods that have been implemented by the University

of California and IBM research to calculate the SVD of a matrix. These

methods include:

Householder Reflections and Given Rotations – This approach can be used

if the matrix is small and not very sparse after various processing techniques.

This approach tends to be impractical to use for Internet-based Information

Retrieval, due to its slow speed.

Power Method and Subspace Iteration – The Power method is used to find

the “largest eigenvector and corresponding eigenvector for a square matrix”

(King, O 1999, p.24). Subspace Iteration is based upon the Power Method.

Lanczos Algorithm – This method is used to calculate the singular values of

large matrices, but does require additional computations to find the associated

eigenvectors. There are a variety of variations, which include Full

Reorthogonalization (FRO), Selective Orthogonalization (SO), Scott’s

Othogonalization (SCO), Selective Orthogonalization II (SO2) and Partial

Orthogonalization.

All of these approaches have their advantages and disadvantages, with some

being more computationally viable than others. These approaches have been

experimentally evaluated and improvements have been found by combining

the approaches (See King, O 1999, p.24).

Within Latent Semantic Indexing, SVD is applied to the index matrix. Once

this has been done, the searching can begin. However, it does require a

readily available copy of the matrix U to be kept so that the search queries can

be transformed to make use of the same document dimensions.

6.2. Strengths of LSI

Latent Semantic Indexing provides the ability to find a broader range of relevant

documents due to looking at semantic relationships between groups of keywords

and the use of a high-dimensional representation. The LSI algorithm also

represents both terms and text objects in the same space, allowing all relevant

information to be processed together as a collection rather than unrelated

documents. This representational feature also helps in allowing objects to be

retrieved directly from the query terms.

6.3. Problems with LSI

As with most techniques in Information Retrieval there are advantages and

disadvantages. Although, LSI provides a significant improvement to the number

of returned documents that are relevant, there are a few main disadvantages that

need to be considered.

Page 14 of 19

As LSI makes use of Singular Value Decomposition, every time documents are

added or removed the SVD statistics of the entire collection are affected. How

much the statistics are affected depends on the size of the document collection.

The larger the collection, the less the statistics are affected.

Due to the compression of the document corpus is compressed, the queries must

be “transformed from the original space of “raw” keywords into the reduced kdimensional space” (Belew, R 2000, p.159). This has to be repeated for every

single query that is used. This also means that the matrix (U) used in the

transformation must be kept readily available.

These disadvantages all contribute to the need for extra storage space and extra

computational power. This means that LSI tends to be slower than the

conventional binary matching methods used in search engines.

6.4. The Uses and Potential Uses of LSI

Latent Semantic Indexing is generally thought of as being an improvement over

the current binary matching search engines. However, there are a variety of other

implementations.

6.4.1. Relevance Feedback

The standard search engine only requires users to enter a small number of

keywords to create a query. The larger this supplied list of keywords, the

more irrelevant documents are returned. LSI contrasts this approach by

making use of a larger set of related keywords to improve the recall.

Relevance feedback improves the query supplied by the user by making use of

the terms within relevant documents. This is achieved without increasing the

computational requirements needed to perform the query, and allows the recall

and precision of the results returned to be improved.

6.4.2. Information Filtering

As Latent Semantic Indexing is able to correlate related keywords, one

possible use is to use it for information filtering, where certain types of words

are removed or documents/text containing certain words are removed from

retrieved documents

An application of information filtering is the filtering of spam email. A major

problem with the use of email is the increasing amount of junk mail that users

have to wade through before actually getting to the important stuff. With an

appropriate implementation of LSI, information filtering could be used to

remove all documents that followed a generic structure. Initially, LSI would

be poor at achieving this until its collection of documents became substantial

enough to infer relationships between keywords in unwanted email.

Page 15 of 19

LSI would then be able to isolate these email messages or even rank the users

entire email messages in order of importance, putting all of the junk email to

the bottom of the list.

Information filtering does not need to be limited to the use of helping to

manage spam. It could be used to manage content expressed within chat

rooms, news groups, bulletin boards and family-suitable search engine results,

using a similar system.

6.4.3. Textual Coherence

When documents are written, one of the main tasks is to ensure that it flows

correctly from one topic to another. LSI could be used to determine the

semantic relationship between parts of the document, allowing a picture to be

developed of how the document flows from one topic to another.

6.4.4. Automated Writing Assessment

With a large collection of documents, a student’s report could be analysed to

determine whether any areas of research were missed. This allows the student

to be given feedback and be provided with areas of further research.

LSI could also aid in the task of fully automating academic integrity checking.

With a broad range of documents within its collection, a system could check a

submitted document to determine if any of the content is copied directly from

other sources.

One further area that could be explored is automating the marking of exams

and coursework. LSI could be improved to determine if the correct content

has been covered. Also if extended to include textual coherence and meaning,

the document could be assessed to determine if it makes sense and is readable.

6.4.5. Cross-Language Retrieval

Latent Semantic Indexing is able to find a wide range of resources related to a

given keyword. By being able to translate the keywords, documents in other

languages can also be found, that are still relevant. The system could then be

used to display all documents in any language that the user can read and

understand.

7. Conclusions

Latent Semantic Indexing is a mathematically-based solution to finding documents on

the Internet. It relies upon finding relationships between keywords and inferring a

semantic correlation between them. This approach provides an inaccurate perception

that the system understands the meaning of the documents, allowing it to find ones

that are related.

LSI makes use of a complex mathematical technique known as Singular Value

Decomposition to reduce the number of dimensions within the document space. Due

Page 16 of 19

to this and other computational requirements, LSI tends to be slower than binary

matching techniques, such as the search engine. However, this needs to be weighed

up with the ultimate advantage of an improved success at returning relevant

documents, even if they do not contain the specified keyword. LSI “has been shown

to be 30% more effective in finding and ranking relevant items than the comparable

word matching methods” (Telcordia Technologies Ltd. Undated, p.1).

There is a lot of potential for developing around LSI by incorporating true meaning

analysis. However, if the concept of meaning analysis does ever get accomplished, it

is likely that LSI will be made redundant as more accurate techniques are likely to be

developed.

8. References

BELEW, R (2000) Finding Out About: A Cognitive Perspective on Search Engine Technology

and the WWW Cambridge: Cambridge University Press

This book introduces Information Retrieval, and a variety of tools and approaches associated

with the field. It looks primarily at text retrieval, but aims to do so in an easily to understand

approach.

BERRY, M (1996) 2.2.2 Weighting [WWW] http://www.cs.utk.edu/~berry/lsi++/node7.html

[accessed 11/11/03]

This site provides a brief description of weighting within LSI.

KING, O (1999) Information Retrieval and Ranking on the Web: Benchmarking Studies II

[WWW] http://citeseer.nj.nec.com/cache/papers/cs/12049/

http:zSzzSzwww.trl.ibm.co.jpzSzprojectszSzs7710zSzdlzSztrlrepzSzrt298.pdf/informationretrieval-and-ranking.pdf [accessed 18/11/03]

This document experimentally investigates a variety of SVD approaches in order to provide a

general comparison.

KOLDA, T & O’LEARY, D (1998) A Semidiscrete Matrix Decomposition for Latent Semantic

Indexing in Information Retrieval [WWW] http://web2.infotraccustom.com/pdfserve/get_item/1/S7b0bf9w4_3/SB993_03.pdf [accessed 14/10/03]

This document describes LSI and how it fits into Information Retrieval by providing

comparisons to some of the alternatives. It also describes some of the variations of LSI.

TELCORDIA TECHNOLOGIES INC (undated) Telcordia™ Latent Semantic Indexing

Software (LSI): Beyond Keyword Retrieval [WWW]

http://lsi.argreenhouse.com/lsi/papers/execsum.html [accessed 10/11/03]

This is an executive summary about LSI by Telcordia, the company formally known as

Bellcore.

THE EVERYTHING DEVELOPMENT COMPANY (2000) Information

Retrieval@Everything2.com [WWW] http://www.everything2.com/index.pl [accessed

14/10/03]

This page provides a summary of the query ‘Information Retrieval’ providing a good starting

point for further investigation into the topic.

THOR (1999) Introduction: Boolean Retrieval [WWW]

http://isp.imm.dtu.dk/thor/projects/multimedia/textmining/node2.html [accessed 29/10/03]

Page 17 of 19

This page is one of a collection that briefly introduces Information Retrieval. This particular

page provides a brief description of Boolean Retrieval/Boolean Matching

WALL, M; RECHTSTEINER, A & ROCHA, L (2003) Singular value decomposition and

principal component analysis [WWW] http://public.lanl.gov/mewall/kluwer2002.html [accessed

12/11/03]

This website describes Singular Value Decomposition from a mathematical viewpoint, but

helps with the understanding by attempting to simplify the topic as much as possible.

YU, C; CAUDRADO, J; CEGLOWSKI, M & PAYNE, J. S (2003) Latent Semantic Indexing

[WWW] http://javelina.cet.middlebury.edu/lsa/out/lsa_definition.htm [accessed 21/10/03]

This is just one page out of a collection, which introduces the concept of improving search

engines and how LSI and Multi-Dimensional Scaling can help with this. This particular page

introduces LSI and provides an overview. Pages associated with this document explain how

LSI works and the uses/potential uses for LSI.

9. Bibliography

BERRY, M (1996) 2.2 Latent Semantic Indexing [WWW]

http://www.cs.utk.edu/~berry/lsi++/node5.html [accessed 11/11/03]

BERRY, M (1996) 2.2.1 Term-Document Representation [WWW]

http://www.cs.utk.edu/~berry/lsi++/node6.html [accessed 11/11/03]

BERRY, M (1996) 2.2.3 Computing the SVD [WWW]

http://www.cs.utk.edu/~berry/lsi++/node8.html [accessed 11/11/03]

BERRY, M (1996) 2.2.4 Query Projection and Matching [WWW]

http://www.cs.utk.edu/~berry/lsi++/node9.html [accessed 11/11/03]

BERRY, M (1996) 2.2.5 Relevance Feedback [WWW]

http://www.cs.utk.edu/~berry/lsi++/node10.html [accessed 11/11/03]

CARNELL, T (2000) Investigation into Internet search technology [WWW]

http://www.scism.sbu.ac.uk/inmandw/tutorials/irtutorials/O1.DOC [accessed 29/10/03]

ELKS, A (2000) Information Retrieval Techniques [WWW]

http://www.scism.sbu.ac.uk/inmandw/tutorials/irtutorials/O2.DOC [accessed 29/10/03]

FOLTZ, P & DUMAIS, S (1992) Personalized information delivery: an analysis of information

filtering methods [WWW] http://web2.infotraccustom.com/pdfserve/get_item/1/S7b0bf9w4_4/SB993_04.pdf [accessed 14/10/03]

FOLTZ, P (1990) Using Latent Semantic Indexing for Information Filtering [WWW]

http://www-psych.nmsu.edu/~pfoltz/cois/filtering-cois.html [accessed 14/10/03]

HAMMERSLEY, B (2003) Guardian Unlimited | Online | Time for new search techniques

[WWW] http://www.guardian.co.uk/online/story/0,3605,889299,00.html [accessed 21/10/03]

INMAN, D (2003) Introduction to Information Retrieval London: London South Bank University

JENNINGS, S (2003) Internet Cryptography Lecture Notes - Week 1 London: London South

Bank University

KOLDA, T & O'LEARY, D (2000) Algorithm 805: Computation and Uses of the Semidiscrete

Matrix Decomposition [WWW] http://web2.infotraccustom.com/pdfserve/get_item/1/S7b0bf9w4_5/SB993_05.pdf [accessed 14/10/03]

LETSCHE, T AND BERRY, M (1996) Large-Scale Information Retrieval with Latent Semantic

Indexing [WWW] http://www.cs.utk.edu/~berry/lsi++/ [accessed 29/10/03]

MERIS, G (2000) Latent Semantic Indexing [WWW]

http://www.scism.sbu.ac.uk/inmandw/tutorials/irtutorials/I1.DOC [accessed 29/10/03]

MURTAGH, F (2001) Information Retrieval [WWW]

http://www.cs.qub.ac.uk/~F.Murtagh/csc306/sxb-ho.pdf [accessed 18/11/03]

PERRY LIBRARY (2001) Referencing Electronic Sources London: London South Bank

University

PERRY LIBRARY (2001) Referencing using the Harvard System: frequently asked questions

London: London South Bank University

THOR (1999) Introduction: Latent Semantic Indexing [WWW]

Page 18 of 19

http://isp.imm.dtu.dk/thor/projects/multimedia/textmining/node10.html [accessed 29/10/03]

THOR (1999) Introduction: Vector Space Model [WWW]

http://isp.imm.dtu.dk/thor/projects/multimedia/textmining/node5.html [accessed 29/10/03]

YU, C; CAUDRADO, J; CEGLOWSKI, M & PAYNE, J. S (2003) Applications of LSI [WWW]

http://javelina.cet.middlebury.edu/lsa/out/lsa_applications.htm [accessed 21/10/03]

YU, C; CAUDRADO, J; CEGLOWSKI, M & PAYNE, J. S (2003) How LSI Works [WWW]

http://javelina.cet.middlebury.edu/lsa/out/lsa_explanation.htm [accessed 21/10/03]

YU, C; CAUDRADO, J; CEGLOWSKI, M & PAYNE, J. S (2003) LSI Example - Indexing a

Document [WWW] http://javelina.cet.middlebury.edu/lsa/out/tutorial.htm [accessed 21/10/03]

YU, C; CAUDRADO, J; CEGLOWSKI, M & PAYNE, J. S (2003) The Term-Document Matrix

[WWW] http://javelina.cet.middlebury.edu/lsa/out/tdm.htm [accessed 21/10/03]

Page 19 of 19