Word - Centre For Applied Cryptographic Research

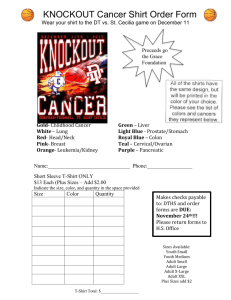
